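```python
# Training data follows y = 2x, so the ideal weight is w = 2
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

# Initial guess for the weight
w = 1.0

def forward(x):
    # Linear model without bias: y_hat = x * w
    return x * w

def cost(xs, ys):
    # Mean squared error (MSE) over the whole dataset
    total = 0
    for x, y in zip(xs, ys):
        y_p = forward(x)
        total += (y_p - y) ** 2
    return total / len(xs)
```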
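
The `cost` function computes the mean squared error of the linear model $\hat{y} = x \cdot w$ over the $N$ training pairs. Written out, with a worked check at the initial weight $w = 1$ on the three data points above:

$$
\hat{y}_n = x_n w, \qquad \operatorname{cost}(w) = \frac{1}{N}\sum_{n=1}^{N} \left(x_n w - y_n\right)^2
$$

$$
\operatorname{cost}(1) = \frac{(1-2)^2 + (2-4)^2 + (3-6)^2}{3} = \frac{1 + 4 + 9}{3} = \frac{14}{3} \approx 4.67
$$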

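```python
def gradient(xs, ys):
    # d(cost)/dw: mean over the data of 2 * x * (x * w - y)
    grad = 0
    for x, y in zip(xs, ys):
        grad += 2 * x * (x * w - y)
    return grad / len(xs)

# Record w and the loss at each epoch so they can be plotted afterwards
w_list, mse_list = [], []
for epoch in range(100):
    cost_val = cost(x_data, y_data)
    grad_val = gradient(x_data, y_data)
    w_list.append(w)
    mse_list.append(cost_val)
    w -= 0.01 * grad_val  # gradient descent step, learning rate 0.01
print('w after training:', w)
```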
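
The gradient is the derivative of the cost with respect to $w$, and each epoch applies $w \leftarrow w - \alpha \, \partial \operatorname{cost} / \partial w$ with learning rate $\alpha = 0.01$. For this particular dataset ($N = 3$, $\sum x_n^2 = 14$, $\sum x_n y_n = 28$) the gradient reduces to a linear function of $w$, which makes it easy to see how fast the loop converges from $w_0 = 1$:

$$
\frac{\partial \operatorname{cost}}{\partial w} = \frac{1}{N}\sum_{n=1}^{N} 2x_n\left(x_n w - y_n\right) = \frac{2}{3}\left(14w - 28\right) = \frac{28}{3}\left(w - 2\right)
$$

$$
w_{t+1} - 2 = \left(1 - \frac{28\alpha}{3}\right)\left(w_t - 2\right) = \left(1 - \frac{0.28}{3}\right)\left(w_t - 2\right) \approx 0.9067\,\left(w_t - 2\right)
$$

$$
w_{100} = 2 - (0.9067)^{100} \approx 2 - 5.6 \times 10^{-5}
$$

Because the error is multiplied by the same factor every epoch, the iteration converges exactly when $\left|1 - 28\alpha/3\right| < 1$, i.e. $0 < \alpha < 3/14 \approx 0.21$ for this data; above that bound $w$ oscillates around 2 with growing amplitude.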

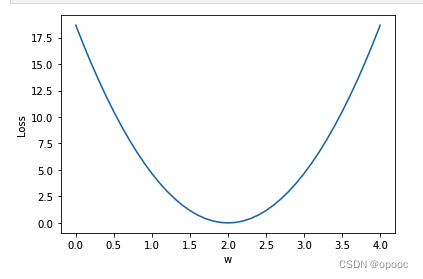

```python
import matplotlib.pyplot as plt

# Plot the recorded loss (MSE) against w
plt.plot(w_list, mse_list)
plt.ylabel('Loss')
plt.xlabel('w')
plt.show()
```
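
For comparison, the same batch gradient descent can be written with NumPy array operations instead of Python loops. This is a minimal sketch, assuming NumPy is installed; the function name `train` and its parameters `lr` and `epochs` are illustrative and do not come from the original code. Passing `w` in and returning it also avoids the global variable that the functions above rely on.

```python
import numpy as np

def train(x, y, w=1.0, lr=0.01, epochs=100):
    """Illustrative batch gradient descent for y_hat = x * w.

    Returns the final weight and the MSE recorded before each update.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    losses = []
    for _ in range(epochs):
        residual = x * w - y                   # y_hat - y for every sample
        losses.append(np.mean(residual ** 2))  # MSE, as in cost()
        grad = np.mean(2 * x * residual)       # d(MSE)/dw, as in the gradient function
        w -= lr * grad                         # gradient descent step
    return w, losses

w_final, losses = train([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
print(w_final, losses[0], losses[-1])
```

The first recorded loss is the MSE at the initial weight $w = 1$, and `w_final` should end up very close to 2.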

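Copyright notice: This is an original article by opooc, published under the CC 4.0 BY-SA license. When reposting, please include a link to the original source and this notice.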