yolo人脸检测数据集

计算机视觉

(

Computer Vision

)

Step by step instructions to train Yolo-v5 & do Inference(from

ultralytics

) to count the blood cells and localize them.

循序渐进的说明来训练Yolo-v5和进行推理(来自

Ultralytics

)以对血细胞进行计数并将其定位。

I vividly remember that I tried to do an object detection model to count the RBC, WBC, and platelets on microscopic blood-smeared images using Yolo v3-v4, but I couldn’t get as much as accuracy I wanted and the model never made it to the production.

我生动地记得我曾尝试过使用Yolo v3-v4尝试建立对象检测模型以对显微血涂图像上的RBC,WBC和血小板进行计数,但是我无法获得想要的精度,而且该模型从未成功它的生产。

Now recently I came across the release of the Yolo-v5 model from Ultralytics, which is built using PyTorch. I was a bit skeptical to start, owing to my previous failures, but after reading the manual in their Github repo, I was very confident this time and I wanted to give it a shot.

最近,我遇到了Ultralytics发行的Yolo-v5模型,该模型是使用PyTorch构建的。 由于之前的失败,我对起步有些怀疑,但是在阅读他们的Github存储库中的手册后,这次我非常有信心,我想尝试一下。

And it worked like a charm, Yolo-v5 is easy to train and easy to do inference.

Yolo-v5就像是一种魅力,易于训练且易于推理。

So this post summarizes my hands-on experience on the Yolo-v5 model on the Blood Cell Count dataset. Let’s get started.

因此,本篇文章总结了我在血细胞计数数据集的Yolo-v5模型上的动手经验。 让我们开始吧。

Ultralytics recently launched Yolo-v5. For time being, the first three versions of Yolo were created by Joseph Redmon. But the newer version has higher mean Average Precision and faster inference times than others. Along with that it’s built on top of PyTorch made the training & inference process very fast and the results are great.

Ultralytics最近发布了Yolo-v5。 目前,Yolo的前三个版本是由Joseph Redmon创建的。 但是较新版本具有更高的平均平均精度和更快的推理时间。 此外,它还建立在PyTorch的基础上,使训练和推理过程变得非常快,并且效果很好。

So let’s break down the steps in our training process.

因此,让我们分解一下培训过程中的步骤。

-

Data — Preprocessing (Yolo-v5 Compatible)

数据-预处理(与Yolo-v5兼容)

-

Model — Training

模型—培训

-

Inference

推理

And if you wish to follow along simultaneously, open up these notebooks,

如果您希望同时进行操作,请打开这些笔记本,

Google Colab Notebook — Training and Validation:

link

Google Colab Notebook —培训和验证:

链接

Google Colab Notebook — Inference:

link

Google Colab Notebook —推论:

链接

1.数据-预处理(与Yolo-v5兼容)

(

1. Data — Preprocessing (Yolo-v5 Compatible)

)

I used the dataset BCCD dataset available in

Github

, the dataset has blood smeared microscopic images and it’s corresponding bounding box annotations are available in an XML file.

我使用了

Github中

可用的BCCD数据集,该数据集具有涂血的显微图像,并且在XML文件中提供了相应的边界框注释。

Dataset Structure:- BCCD

- Annotations

- BloodImage_00000.xml

- BloodImage_00001.xml

...- JpegImages

- BloodImage_00001.jpg

- BloodImage_00001.jpg

...Sample Image and its annotation :

样本图片及其注释:

Upon mapping the annotation values as bounding boxes in the image will results like this,

在将注释值映射为图像中的边框时,将得到如下结果:

But to train the Yolo-v5 model, we need to organize our dataset structure and it requires images (.jpg/.png, etc.,) and it’s corresponding labels in .txt format.

但是要训练Yolo-v5模型,我们需要组织我们的数据集结构,它需要图像(.jpg / .png等)及其对应的.txt格式标签。

Yolo-v5 Dataset Structure:- BCCD

- Images

- Train (.jpg files)

- Valid (.jpg files)- Labels

- Train (.txt files)

- Valid (.txt files)And then the format of .txt files should be :

.txt文件的格式应为:

STRUCTURE OF .txt FILE :

.txt文件的结构:

– One row per object.

-每个对象一行。

– Each row is class x_center y_center width height format.

-每行都是x_center y_center width高度高度格式。

– Box coordinates must be in normalized xywh format (from 0–1). If your boxes are in pixels, divide x_center and width by image width, and y_center and height by image height.

-框坐标必须为标准化的xywh格式(从0到1)。 如果您的框以像素为单位,则将x_center和width除以图像宽度,将y_center和height除以图像高度。

– Class numbers are zero-indexed (start from 0).

-类号为零索引(从0开始)。

An Example label with class 1 (RBC) and class 2 (WBC) along with each of their x_center, y_center, width, height (All normalized 0–1) looks like the below one.

带有第1类(RBC)和第2类(WBC)以及它们的x_center,y_center,宽度,高度(全部归一化为0-1)的示例标签如下所示。

# class x_center y_center width height #

1 0.718 0.829 0.143 0.193

2 0.318 0.256 0.150 0.180

...So let’s see how we can pre-process our data in the above-specified structure.

因此,让我们看看如何在上述结构中预处理数据。

Our first step should be parsing the data from all the XML files and storing them in a data frame for further processing. Thus we run the below codes to accomplish it.

我们的第一步应该是解析所有XML文件中的数据,并将它们存储在数据框中以进行进一步处理。 因此,我们运行以下代码来完成它。

# Dataset Extraction from github

!git clone 'https://github.com/Shenggan/BCCD_Dataset.git'

import os, sys, random, shutil

import xml.etree.ElementTree as ET

from glob import glob

import pandas as pd

from shutil import copyfile

import pandas as pd

from sklearn import preprocessing, model_selection

import matplotlib.pyplot as plt

%matplotlib inline

from matplotlib import patches

import numpy as np

annotations = sorted(glob('/content/BCCD_Dataset/BCCD/Annotations/*.xml'))

df = []

cnt = 0

for file in annotations:

prev_filename = file.split('/')[-1].split('.')[0] + '.jpg'

filename = str(cnt) + '.jpg'

row = []

parsedXML = ET.parse(file)

for node in parsedXML.getroot().iter('object'):

blood_cells = node.find('name').text

xmin = int(node.find('bndbox/xmin').text)

xmax = int(node.find('bndbox/xmax').text)

ymin = int(node.find('bndbox/ymin').text)

ymax = int(node.find('bndbox/ymax').text)

row = [prev_filename, filename, blood_cells, xmin, xmax, ymin, ymax]

df.append(row)

cnt += 1

data = pd.DataFrame(df, columns=['prev_filename', 'filename', 'cell_type', 'xmin', 'xmax', 'ymin', 'ymax'])

data[['prev_filename','filename', 'cell_type', 'xmin', 'xmax', 'ymin', 'ymax']].to_csv('/content/blood_cell_detection.csv', index=False)

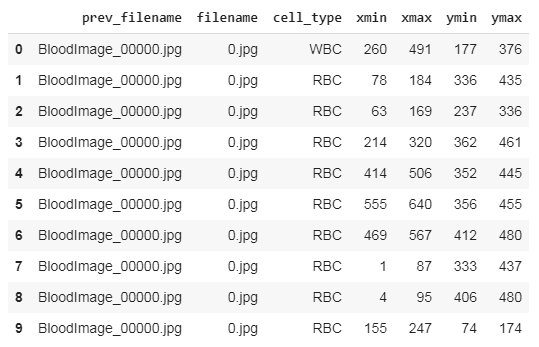

data.head(10)And the data frame should look like this,

数据框应该看起来像这样,

After saving this file, we need to make changes to convert them into Yolo-v5 compatible format.

保存此文件后,我们需要进行更改以将其转换为Yolo-v5兼容格式。

REQUIRED DATAFRAME STRUCTURE- filename : contains the name of the image- cell_type: denotes the type of the cell- xmin: x-coordinate of the bottom left part of the image- xmax: x-coordinate of the top right part of the image- ymin: y-coordinate of the bottom left part of the image- ymax: y-coordinate of the top right part of the image- labels : Encoded cell-type (Yolo - label input-1)- width : width of that bbox- height : height of that bbox- x_center : bbox center (x-axis)- y_center : bbox center (y-axis)- x_center_norm : x_center normalized (0-1) (Yolo - label input-2)- y_center_norm : y_center normalized (0-1) (Yolo - label input-3)- width_norm : width normalized (0-1) (Yolo - label input-4)- height_norm : height normalized (0-1) (Yolo - label input-5)I have written some code to transform our existing data frame into the structure specified in the above snippet.

我已经编写了一些代码,将现有的数据帧转换为上述片段中指定的结构。

img_width = 640

img_height = 480

def width(df):

return int(df.xmax - df.xmin)

def height(df):

return int(df.ymax - df.ymin)

def x_center(df):

return int(df.xmin + (df.width/2))

def y_center(df):

return int(df.ymin + (df.height/2))

def w_norm(df):

return df/img_width

def h_norm(df):

return df/img_height

df = pd.read_csv('/content/blood_cell_detection.csv')

le = preprocessing.LabelEncoder()

le.fit(df['cell_type'])

print(le.classes_)

labels = le.transform(df['cell_type'])

df['labels'] = labels

df['width'] = df.apply(width, axis=1)

df['height'] = df.apply(height, axis=1)

df['x_center'] = df.apply(x_center, axis=1)

df['y_center'] = df.apply(y_center, axis=1)

df['x_center_norm'] = df['x_center'].apply(w_norm)

df['width_norm'] = df['width'].apply(w_norm)

df['y_center_norm'] = df['y_center'].apply(h_norm)

df['height_norm'] = df['height'].apply(h_norm)

df.head(30)

After preprocessing our data frame looks like this, here we can see there exist many rows for a single image file (For instance BloodImage_0000.jpg), now we need to collect all the (

labels, x_center_norm, y_center_norm, width_norm, height_norm

) values for that single image file and save it as a .txt file.

预处理我们的数据帧看起来像在此之后,在这里我们可以看到存在许多行的一个图像文件(例如BloodImage_0000.jpg),现在我们需要收集所有(

标签,x_center_norm,y_center_norm,width_norm,height_norm)

值该单个图像文件,并将其另存为.txt文件。

Now we split the dataset into training and validation and save the corresponding images and it’s labeled .txt files. For that, I’ve written a small piece of the code snippet.

现在,我们将数据集分为训练和验证,并保存相应的图像,并将其标记为.txt文件。 为此,我只写了一小段代码。

df_train, df_valid = model_selection.train_test_split(df, test_size=0.1, random_state=13, shuffle=True)

print(df_train.shape, df_valid.shape)

os.mkdir('/content/bcc/')

os.mkdir('/content/bcc/images/')

os.mkdir('/content/bcc/images/train/')

os.mkdir('/content/bcc/images/valid/')

os.mkdir('/content/bcc/labels/')

os.mkdir('/content/bcc/labels/train/')

os.mkdir('/content/bcc/labels/valid/')

def segregate_data(df, img_path, label_path, train_img_path, train_label_path):

filenames = []

for filename in df.filename:

filenames.append(filename)

filenames = set(filenames)

for filename in filenames:

yolo_list = []

for _,row in df[df.filename == filename].iterrows():

yolo_list.append([row.labels, row.x_center_norm, row.y_center_norm, row.width_norm, row.height_norm])

yolo_list = np.array(yolo_list)

txt_filename = os.path.join(train_label_path,str(row.prev_filename.split('.')[0])+".txt")

# Save the .img & .txt files to the corresponding train and validation folders

np.savetxt(txt_filename, yolo_list, fmt=["%d", "%f", "%f", "%f", "%f"])

shutil.copyfile(os.path.join(img_path,row.prev_filename), os.path.join(train_img_path,row.prev_filename))

## Apply function ##

src_img_path = "/content/BCCD_Dataset/BCCD/JPEGImages/"

src_label_path = "/content/BCCD_Dataset/BCCD/Annotations/"

train_img_path = "/content/bcc/images/train"

train_label_path = "/content/bcc/labels/train"

valid_img_path = "/content/bcc/images/valid"

valid_label_path = "/content/bcc/labels/valid"

segregate_data(df_train, src_img_path, src_label_path, train_img_path, train_label_path)

segregate_data(df_valid, src_img_path, src_label_path, valid_img_path, valid_label_path)

print("No. of Training images", len(os.listdir('/content/bcc/images/train')))

print("No. of Training labels", len(os.listdir('/content/bcc/labels/train')))

print("No. of valid images", len(os.listdir('/content/bcc/images/valid')))

print("No. of valid labels", len(os.listdir('/content/bcc/labels/valid')))After running the code, we should have the folder structure as we expected and ready to train the model.

运行代码后,我们应该具有我们期望的文件夹结构,并准备训练模型。

No. of Training images 364

No. of Training labels 364No. of valid images 270

No. of valid labels 270&&- BCCD

- Images

- Train (364 .jpg files)

- Valid (270 .jpg files)- Labels

- Train (364 .txt files)

- Valid (270 .txt files)End of data pre-processing.

数据预处理结束。

2.模型—培训

(

2. Model — Training

)

To start the training process, we need to clone the official Yolo-v5’s weights and config files. It’s available

here

.

要开始培训过程,我们需要克隆官方的Yolo-v5的权重和配置文件。

在这里

可用。

!git clone 'https://github.com/ultralytics/yolov5.git'

!pip install -qr '/content/yolov5/requirements.txt' # install dependencies

## Create a yaml file and move it into the yolov5 folder ##

shutil.copyfile('/content/bcc.yaml', '/content/yolov5/bcc.yaml')Then install the required packages that they specified in the requirements.txt file.

然后安装它们在requirements.txt文件中指定的必需软件包。

bcc.yaml :

bcc.yaml:

Now we need to create a Yaml file that contains the directory of training and validation, number of classes and it’s label names. Later we need to move the .yaml file into the yolov5 directory that we cloned.

现在,我们需要创建一个Yaml文件,其中包含培训和验证的目录,类数及其标签名称。 稍后,我们需要将.yaml文件移动到克隆的yolov5目录中。

## Contents inside the .yaml filetrain: /content/bcc/images/train

val: /content/bcc/images/validnc: 3

names: ['Platelets', 'RBC', 'WBC']

model’s — YAML

:

模型的— YAML

:

Now we need to select a model(small, medium, large, xlarge) from the ./models folder.

现在我们需要从./models文件夹中选择一个模型(小,中,大,xlarge)。

The figures below describe various features such as no.of parameters etc., for the available models. You can choose any model of your choice depending on the complexity of the task in hand and by default, they all are available as .yaml file inside the models’ folder from the cloned repository

下图描述了可用型号的各种功能,例如参数编号等。 您可以根据手头任务的复杂性来选择任何模型,默认情况下,它们都可以从克隆的存储库中的模型文件夹中以.yaml文件的形式使用。

Now we need to edit the *.yaml file of the model of our choice. We just have to replace the number of classes in our case to match with the number of classes in the model’s YAML file. For simplicity, I am choosing the yolov5s.yaml for faster processing.

现在,我们需要编辑所选模型的* .yaml文件。 我们只需要替换本例中的类数即可与模型的YAML文件中的类数匹配。 为了简单起见,我选择yolov5s.yaml以进行更快的处理。

## parametersnc: 3 # number of classesdepth_multiple: 0.33 # model depth multiplewidth_multiple: 0.50 # layer channel multiple# anchors

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

...............

NOTE

: This step is not mandatory if we didn’t replace the nc in the model’s YAML file (which I did), it will automatically override the nc value that we created before (bcc.yaml) and while training the model, you will see this line, which confirms that we don’t have to alter it.

注意

:如果我们没有替换模型的YAML文件中的nc(我做了此步骤),则此步骤不是必需的,它将自动覆盖我们之前创建的nc值(bcc.yaml),并且在训练模型时,您将看到这一行,这确认我们不必更改它。

“Overriding ./yolov5/models/yolov5s.yaml nc=80 with nc=3”

“使用nc = 3覆盖./yolov5/models/yolov5s.yaml nc = 80”

火车模型参数:

(

Model train parameters :

)

We need to configure the training parameters such as no.of epochs, batch_size, etc.,

我们需要配置训练参数,例如时期数,batch_size等。

Training Parameters!python

- <'location of train.py file'>

- --img <'width of image'>

- --batch <'batch size'>

- --epochs <'no of epochs'>

- --data <'location of the .yaml file'>

- --cfg <'Which yolo configuration you want'>(yolov5s/yolov5m/yolov5l/yolov5x).yaml | (small, medium, large, xlarge)

- --name <'Name of the best model to save after training'>Also, we can view the logs in tensorboard if we wish.

另外,如果愿意,我们可以在tensorboard中查看日志。

# Start tensorboard (optional)

%load_ext tensorboard

%tensorboard --logdir runs/

!python yolov5/train.py --img 640 --batch 8 --epochs 100 \

--data bcc.yaml --cfg models/yolov5s.yaml --name BCCMThis will initiate the training process and takes a while to complete.

这将启动培训过程,并且需要一段时间才能完成。

I am posting some excerpts from my training process,

我正在发布一些培训过程的摘录,

METRICS FROM TRAINING PROCESSNo.of classes, No.of images, No.of targets, Precision (P), Recall (R), mean Average Precision (map)- Class | Images | Targets | P | R | mAP@.5 | mAP@.5:.95: |- all | 270 | 489 | 0.0899 | 0.827 | 0.0879 | 0.0551So from the values of P (Precision), R (Recall), and mAP (mean Average Precision) we can know whether our model is doing well or not. Even though I have trained the model for only 100 epochs, the performance was great.

因此,从P(精度),R(召回率)和mAP(平均平均精度)的值中,我们可以知道我们的模型是否运行良好。 即使我只训练了100个时间段的模型,但效果还是不错的。

End of model training.

模型训练结束。

3.推论

(

3. Inference

)

Now it’s an exciting time to test our model, to see how it makes the predictions. But we need to follow some simple steps.

现在是测试我们的模型,看看它如何做出预测的激动人心的时刻。 但是我们需要遵循一些简单的步骤。

推论参数

(

Inference Parameters

)

Inference Parameters!python

- <'location of detect.py file'>

- --source <'location of image/ folder to predict'>

- --weight <'location of the saved best weights'>

- --output <'location to store the outputs after prediction'>

- --img-size <'Image size of the trained model'>(Optional)- --conf-thres <"default=0.4", 'object confidence threshold')>

- --iou-thres <"default=0.5" , 'threshold for NMS')>

- --device <'cuda device or cpu')>

- --view-img <'display results')>

- --save-txt <'saves the bbox co-ordinates results to *.txt')>

- --classes <'filter by class: --class 0, or --class 0 2 3')>## And there are other more customization availble, check them in the detect.py file. ##Run the below code, to make predictions on a folder/image.

运行以下代码,对文件夹/图像进行预测。

## TO PREDICT IMAGES IN A FOLDER ##

!python yolov5/detect.py --source /content/bcc/images/valid/

--weights '/content/drive/My Drive/Machine Learning Projects/YOLO/best_yolov5_BCCM.pt'

--output '/content/inference/output'

## TO PREDICT A SINGLE IMAGE FILE ##

output = !python yolov5/detect.py --source /content/bcc/images/valid/BloodImage_00000.jpg

--weights '/content/drive/My Drive/Machine Learning Projects/YOLO/best_yolov5_BCCM.pt'

print(output)

The results are good, some excerpts.

摘录结果不错。

解释.txt文件的输出:(可选读取)

(

Interpret outputs from the .txt file : (Optional Read)

)

Just in case , say you are doing face detection and face recognition and want to move your process one more step say you want to crop the faces using opencv using the bbox coordinates and send them into a face recognition pipeline, in such case we not only need the output like the above figure,also we need the coordinates for every faces. So is there any way ? The answer is yes ,read along.

以防万一,假设您正在进行面部检测和面部识别,并且想将过程进一步移动,例如,您想使用bbox坐标使用opencv裁剪面部并将其发送到面部识别管道中,在这种情况下,我们不仅需要上图所示的输出,还需要每个面的坐标。 那有什么办法吗? 答案是肯定的,请继续阅读。

(I only used face detection and recognition as an example, Yolo-V5 can be used to do it as well)

(我仅以人脸检测和识别为例,Yolo-V5也可以使用它)

Also, we can save the output to a .txt file, which contains some of the input image’s bbox co-ordinates.

同样,我们可以将输出保存到.txt文件,该文件包含一些输入图像的bbox坐标。

# class x_center_norm y_center_norm width_norm height_norm #

1 0.718 0.829 0.143 0.193

...Run the below code, to get the outputs in .txt file,

运行以下代码,以获取.txt文件中的输出,

!python yolov5/detect.py --source /content/bcc/images/valid/BloodImage_00000.jpg

--weights '/content/runs/exp0_BCCM/weights/best_BCCM.pt'

--view-img

--save-txtUpon successfully running the code, we can see the output are stored in the inference folder here,

成功运行代码后,我们可以看到输出存储在此处的推理文件夹中,

Great, now the outputs in the .txt file are in the format,

太好了,现在.txt文件中的输出采用以下格式:

[ class x_center_norm y_center_norm width_norm height_norm ] "we need to convert it to the form specified below" [ class, x_min, y_min, width, height ]

[ class, X_center_norm, y_center_norm, Width_norm, Height_norm ] ,

we need to convert this into →

[ class, x_min, y_min, width, height ] ,

( Also De-normalized) to make plotting easy.

[class,X_center_norm,y_center_norm,Width_norm,Height_norm],

我们需要将其转换为→

[class,x_min,y_min,width,height],

(也要归一化)以

简化

绘图。

To do so, just run the below code which performs the above transformation.

为此,只需运行以下代码即可执行上述转换。

# Plotting bbox ffrom the .txt file output from yolo #

## Provide the location of the output .txt file ##

a_file = open("/content/inference/output/BloodImage_00000.txt", "r")

# Stripping data from the txt file into a list #

list_of_lists = []

for line in a_file:

stripped_line = line.strip()

line_list = stripped_line.split()

list_of_lists.append(line_list)

a_file.close()

# Conversion of str to int #

stage1 = []

for i in range(0, len(list_of_lists)):

test_list = list(map(float, list_of_lists[i]))

stage1.append(test_list)

# Denormalizing #

stage2 = []

mul = [1,640,480,640,480] #[constant, image_width, image_height, image_width, image_height]

for x in stage1:

c,xx,yy,w,h = x[0]*mul[0], x[1]*mul[1], x[2]*mul[2], x[3]*mul[3], x[4]*mul[4]

stage2.append([c,xx,yy,w,h])

# Convert (x_center, y_center, width, height) --> (x_min, y_min, width, height) #

stage_final = []

for x in stage2:

c,xx,yy,w,h = x[0]*1, (x[1]-(x[3]/2)) , (x[2]-(x[4]/2)), x[3]*1, x[4]*1

stage_final.append([c,xx,yy,w,h])

fig = plt.figure()

import cv2

#add axes to the image

ax = fig.add_axes([0,0,1,1])

# read and plot the image

## Location of the input image which is sent to model's prediction ##

image = plt.imread('/content/BCCD_Dataset/BCCD/JPEGImages/BloodImage_00000.jpg')

plt.imshow(image)

# iterating over the image for different objects

for x in stage_final:

class_ = int(x[0])

xmin = x[1]

ymin = x[2]

width = x[3]

height = x[4]

xmax = width + xmin

ymax = height + ymin

# assign different color to different classes of objects

if class_ == 1:

edgecolor = 'r'

ax.annotate('RBC', xy=(xmax-40,ymin+20))

elif class_ == 2:

edgecolor = 'b'

ax.annotate('WBC', xy=(xmax-40,ymin+20))

elif class_ == 0:

edgecolor = 'g'

ax.annotate('Platelets', xy=(xmax-40,ymin+20))

# add bounding boxes to the image

rect = patches.Rectangle((xmin,ymin), width, height, edgecolor = edgecolor, facecolor = 'none')

ax.add_patch(rect)Then the output plotted image looks like this, great isn’t.

然后输出的绘制图像看起来像这样,不是很好。

4.将模型投入生产

(

4. Moving Model to Production

)

Just in case, if you wish to move the model to the production or to deploy anywhere, you have to follow these steps.

以防万一,如果您希望将模型移至生产环境或部署到任何地方,则必须执行以下步骤。

First, install the dependencies to run the yolov5, and we need some files from the yolov5 folder and add them to the python system path directory to load the utils. So copy them into some location you need and later move them wherever you need.

首先,安装依赖项以运行yolov5,我们需要yolov5文件夹中的一些文件,并将它们添加到python系统路径目录中以加载utils。 因此,将它们复制到所需的位置,然后将其移至所需的位置。

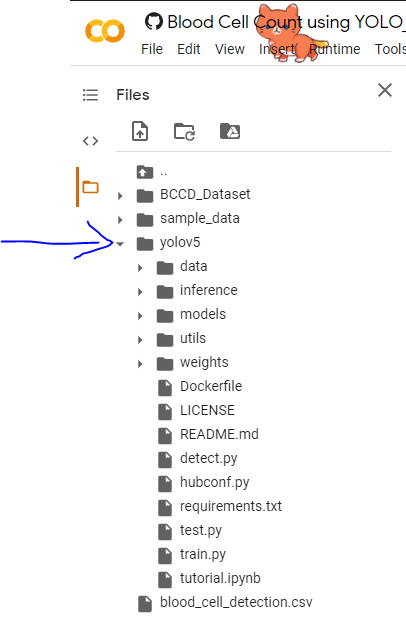

So in the below picture-1, I have boxed some folders and files, you can download them and keep them in a separate folder as in picture-2.

因此,在下面的图片1中,我将一些文件夹和文件装箱,您可以下载它们并将它们保存在单独的文件夹中,如图2所示。

Now we need to tell the python compiler to add the above folder location into account so that when we run our programs it will load the models and functions on runtime.

现在我们需要告诉python编译器将上述文件夹位置添加到帐户中,以便在运行程序时它将在运行时加载模型和函数。

In the below code snippet, on line-9, I have added the sys.path… command and in that, I have specified my folder location where I have moved those files, you can replace it with yours.

在下面的代码段的第9行中,我添加了sys.path…命令,并在其中指定了我将这些文件移动到的文件夹位置,您可以将其替换为您的文件。

Then fire up these codes to start prediction.

然后启动这些代码以开始预测。

import os, sys, random

from glob import glob

import matplotlib.pyplot as plt

%matplotlib inline

!pip install -qr '/content/drive/My Drive/Machine Learning Projects/YOLO/SOURCE/requirements.txt' # install dependencies

## Add the path where you have stored the neccessary supporting files to run detect.py ##

## Replace this with your path.##

sys.path.insert(0, '/content/drive/My Drive/Machine Learning Projects/YOLO/SOURCE/')

print(sys.path)

cwd = os.getcwd()

print(cwd)

## Single Image prediction

## Beware the contents in the output folder will be deleted for every prediction

output = !python '/content/drive/My Drive/Machine Learning Projects/YOLO/SOURCE/detect.py'

--source '/content/BloodImage_00026.jpg'

--weights '/content/drive/My Drive/Machine Learning Projects/YOLO/SOURCE/best_BCCM.pt'

--output '/content/OUTPUTS/' --device 'cpu'

print(output)

img = plt.imread('/content/OUTPUTS/BloodImage_00026.jpg')

plt.imshow(img)

## Folder Prediction

output = !python '/content/drive/My Drive/Machine Learning Projects/YOLO/SOURCE/detect.py'

--source '/content/inputs/'

--weights '/content/drive/My Drive/Machine Learning Projects/YOLO/SOURCE/best_BCCM.pt'

--output '/content/OUTPUTS/' --device 'cpu'

print(output)

And there we come to the end of this post. I hope you got some idea about how to train Yolo-v5 on other datasets you have. Let me know in the comments if you face any difficulty in training and inference.

至此,本文结束。 我希望您对如何在其他数据集中训练Yolo-v5有一些了解。 如果您在训练和推理方面遇到任何困难,请在评论中让我知道。

Until then, see you next time.

在那之前,下次见。

Article By:

文章作者:

BALAKRISHNAKUMAR V

BALAKRISHNAKUMAR V

Co-Founder —

DeepScopy

(An AI-Based Medical Imaging Startup)

联合创始人—

DeepScopy

(基于AI的医学成像初创公司)

Connect with me

→

LinkedIn

,

GitHub

,

Twitter

,

Medium

与我联系

→

LinkedIn

,

GitHub

,

Twitter

,

中

https://deepscopy.com/

https://deepscopy.com/

`

`

Visit us

→

DeepScopy

访问我们

→

DeepScopy

yolo人脸检测数据集