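Kubernetes (k8s) is an open-source platform for automating container operations, including deployment, scheduling, and scaling across a cluster of nodes. Besides Docker, Kubernetes also supports Rocket (rkt), another container technology.

With Kubernetes you can:

- automate the deployment and replication of containers;

- scale the number of containers up or down at any time;

- organize containers into groups and load-balance between them;

- roll out new versions of application containers easily;

- keep containers resilient, for example by replacing a container when it fails.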

I. Environment

| Name | IP address | Hostname | Node role |
| --- | --- | --- | --- |
| master | 10.70.36.251 | master | master |
| node | 10.70.36.252 | node1 | worker |
| node | 10.70.36.253 | node2 | worker |

Preparing the base environment

master/node nodes

1. On master/node nodes: set the hostname and hostname resolution

[root@master ~]# hostnamectl set-hostname master

[root@master ~]# cat /etc/hosts

10.70.36.251 master

10.70.36.252 node1

10.70.36.253 node2

2. On master/node nodes: disable the firewall, SELinux, and swap first

[root@master ~]# systemctl stop firewalld &&systemctl disable firewalld

[root@master ~]# setenforce 0

[root@master ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@master ~]# swapoff -a # temporary

[root@master ~]# sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab # permanent, takes effect after a reboot

[root@master ~]# free -m # verify

total used free shared buff/cache available

Mem: 7783 184 7410 10 188 7340

Swap: 0 0 0
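Instead of reading the `free -m` output by eye, the swap state can also be checked from a script. The following is a minimal sketch; the function name `swap_is_off` is made up for illustration, and it assumes the kernel lists active swap areas in `/proc/swaps` below a single header line.

```shell
# swap_is_off [FILE]: succeed when FILE (default /proc/swaps) lists no active swap area.
# Assumption: the first line is a header and every further non-empty line is one swap area.
swap_is_off() {
    local swaps_file="${1:-/proc/swaps}"
    local active
    # Count the non-empty lines that follow the header.
    active=$(awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$swaps_file")
    [ "$active" -eq 0 ]
}
```

A possible use on each node is `swap_is_off && echo "swap is off" || echo "swap is still on"`.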

3. On master/node nodes: configure kernel parameters so that bridged IPv4 traffic is passed to the iptables chains

[root@master ~]# cat >> /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

[root@master ~]# modprobe br_netfilter

[root@master ~]# sysctl -p

[root@master ~]# lsmod | grep br_netfilter

br_netfilter 22256 0

bridge 151336 2 br_netfilter,ebtable_broute

[root@master ~]# sysctl --system

* Applying /usr/lib/sysctl.d/00-system.conf ...

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

kernel.yama.ptrace_scope = 0

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.default.promote_secondaries = 1

net.ipv4.conf.all.promote_secondaries = 1

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/k8s.conf ...

* Applying /etc/sysctl.conf ...

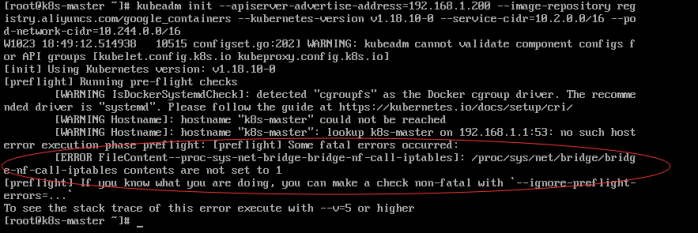

If these values are set incorrectly, kubeadm fails to install k8s with the error [ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables].

Fix:

echo "1" > /proc/sys/net/bridge/bridge-nf-call-iptables

echo "1" > /proc/sys/net/bridge/bridge-nf-call-ip6tables
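To confirm that both switches really read 1 before running kubeadm, a small check like the one below may help. It is only a sketch: the function name `bridge_nf_ok` is hypothetical, and the optional directory argument exists only so the check can be tried against a copy of the files.

```shell
# bridge_nf_ok [DIR]: succeed when both bridge netfilter switches under DIR read 1.
# DIR defaults to /proc/sys/net/bridge, the directory used by the echo commands above.
bridge_nf_ok() {
    local root="${1:-/proc/sys/net/bridge}"
    local key value
    for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
        # A missing file usually means the br_netfilter module is not loaded.
        [ -r "$root/$key" ] || { echo "missing: $root/$key" >&2; return 1; }
        value=$(cat "$root/$key")
        [ "$value" = "1" ] || { echo "$key = $value (expected 1)" >&2; return 1; }
    done
}
```

If the check reports a missing file, running `modprobe br_netfilter` first is the likely remedy.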

4. On master/node nodes: install basic packages

[root@master ~]# yum install wget net-tools vim bash-comp* -y

5. On master/node nodes: configure the Alibaba Cloud yum repositories for Kubernetes and Docker

[root@master ~]# cat >>/etc/yum.repos.d/kubernetes.repo <<EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

[root@master ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

[root@master ~]# yum clean all &&yum repolist

已加载插件:fastestmirror

正在清理软件源: base docker-ce-stable extras kubernetes

: updates

Cleaning up list of fastest mirrors

已加载插件:fastestmirror

Determining fastest mirrors

* base: mirror.bit.edu.cn

* extras: mirror.bit.edu.cn

* updates: mirror.bit.edu.cn

base | 3.6 kB 00:00

docker-ce-stable | 3.5 kB 00:00

extras | 2.9 kB 00:00

kubernetes/signature | 454 B 00:00

从 https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg 检索密钥

导入 GPG key 0xA7317B0F:

用户ID : "Google Cloud Packages Automatic Signing Key <gc-team@google.com>"

指纹 : d0bc 747f d8ca f711 7500 d6fa 3746 c208 a731 7b0f

来自 : https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

是否继续?[y/N]:y

从 https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg 检索密钥

kubernetes/signature | 1.4 kB 00:02 !!!

updates | 2.9 kB 00:00

(1/7): base/7/x86_64/group_gz | 153 kB 00:00

(2/7): extras/7/x86_64/primary_db | 206 kB 00:00

(3/7): kubernetes/primary | 79 kB 00:00

(4/7): docker-ce-stable/x86_64/upda | 55 B 00:00

(5/7): updates/7/x86_64/primary_db | 4.5 MB 00:00

(6/7): base/7/x86_64/primary_db | 6.1 MB 00:00

(7/7): docker-ce-stable/x86_64/prim | 46 kB 00:01

kubernetes 579/579

源标识 源名称 状态

base/7/x86_64 CentOS-7 - Base 10,070

docker-ce-stable/x86_64 Docker CE Stable - x86_64 82

extras/7/x86_64 CentOS-7 - Extras 413

kubernetes Kubernetes 579

updates/7/x86_64 CentOS-7 - Updates 1,134

repolist: 12,278

6. On master/node nodes: install docker-ce and enable it at boot

[root@master ~]# yum install docker-ce -y

[root@master ~]# systemctl start docker && systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

A warning about the Docker cgroup driver also appears during installation. Handle it as follows:

cat <<EOF > /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

# Restart docker

systemctl restart docker

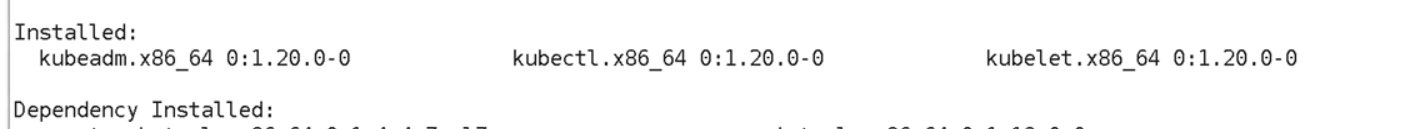
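After the restart it is worth confirming which driver the configuration file actually names. The helper below is a rough sketch with a made-up name, `configured_cgroup_driver`; it only parses the `native.cgroupdriver=...` option out of `daemon.json` and does not talk to the Docker daemon.

```shell
# configured_cgroup_driver [FILE]: print the driver named by native.cgroupdriver=... in FILE.
# FILE defaults to /etc/docker/daemon.json; nothing is printed if the option is absent.
configured_cgroup_driver() {
    local cfg="${1:-/etc/docker/daemon.json}"
    sed -n 's/.*native\.cgroupdriver=\([a-z]*\).*/\1/p' "$cfg"
}
```

The value can then be compared with what the running daemon reports through `docker info --format '{{.CgroupDriver}}'`; both should say systemd.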

7. On master/node nodes: install kubelet, kubeadm, and kubectl

[root@master ~]# yum install kubelet kubeadm kubectl -y

# Enable kubelet at boot

[root@master ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

Because new versions are released frequently, pin the version for this deployment:

$ yum install -y kubelet-1.20.0 kubeadm-1.20.0 kubectl-1.20.0

$ systemctl enable kubelet

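Cluster initialization

Initialization steps for the master and node nodes

master node

1. Initialize the Kubernetes cluster on master.

Flags:

- --apiserver-advertise-address # the address the cluster advertises; use the IP of the master's physical NIC

- --image-repository # the Alibaba Cloud image registry to pull from

- --kubernetes-version # the K8s version; must match the packages installed above

- --service-cidr # the cluster-internal virtual network, i.e. the Cluster IP range

- --pod-network-cidr # the Pod IP range

- --ignore-preflight-errors=all # ignore errors raised by the preflight checks

The Pod network is 10.244.0.0/16, and the apiserver address is the master's own IP address.

By default kubeadm downloads the required images from k8s.gcr.io, which is not reachable from mainland China, so --image-repository must point to the Alibaba Cloud registry. Many first-time users get stuck at this step and cannot continue.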
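Since the image download is where first attempts tend to fail, it can help to pull the images separately before running kubeadm init; the preflight output itself points at `kubeadm config images pull`. The sketch below only prints that command from the values used in this walkthrough. The variable names and the function `images_pull_cmd` are illustrative, not part of kubeadm.

```shell
# Values taken from this walkthrough; adjust them to your environment.
K8S_VERSION="1.20.0"
IMAGE_REPO="registry.aliyuncs.com/google_containers"

# images_pull_cmd: print the pre-pull command without running it,
# so it can be reviewed before it is executed on master.
images_pull_cmd() {
    printf 'kubeadm config images pull --kubernetes-version=%s --image-repository=%s\n' \
        "$K8S_VERSION" "$IMAGE_REPO"
}
```

Running `sh -c "$(images_pull_cmd)"` on master would then fetch the images, so that a registry problem shows up before the cluster is initialized.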

[root@master ~]# kubeadm init --kubernetes-version=1.20.0 \

--apiserver-advertise-address=10.70.36.251 \

--image-repository registry.aliyuncs.com/google_containers \

--service-cidr=10.1.0.0/16 \

--pod-network-cidr=10.244.0.0/16

W1102 16:41:12.383750 9147 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.19.0

[preflight] Running pre-flight checks

[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Hostname]: hostname "master" could not be reached

[WARNING Hostname]: hostname "master": lookup master on 114.114.114.114:53: no such host

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master] and IPs [10.1.0.1 10.70.36.251]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master] and IPs [10.70.36.251 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master] and IPs [10.70.36.251 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 13.503081 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.19" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 52ejyu.nxdlclgc8ablceht

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.70.36.251:6443 --token 52ejyu.nxdlclgc8ablceht \

--discovery-token-ca-cert-hash sha256:578c11c62f423dc241d44b96e88804a3bbe6f6c525bb7b10920ce19843b41c09

[root@master ~]#

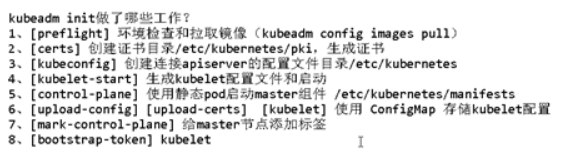

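The kubeadm init workflow

What does kubeadm init actually do once it runs? The following is essentially a translation of the official documentation:

1. It runs a series of preflight checks on the state of the system. Most checks only raise a warning, but some raise an error that makes the workflow exit (for example, swap is still on or docker is not installed). The official flag --ignore-preflight-errors= exists, but most people should not need it unless they really know what they are doing.

2. It generates a self-signed CA (Certificate Authority) used to authenticate calls between k8s components. You can also supply your own CA through --cert-dir (default /etc/kubernetes/pki), in which case this step is skipped.

3. It writes the configuration files for the kubelet, controller-manager, and scheduler into /etc/kubernetes/; these components use them to connect to the API server. In addition, it generates an admin.conf file for administration.

4. If the flags include --feature-gates=DynamicKubeletConfig, it writes the kubelet's initial configuration to /var/lib/kubelet/config/init/kubelet. The official documentation explains this at length; it is not explored here because the feature was not used.

5. It creates the static Pod manifests for the API server, controller-manager, and scheduler. If no external etcd is provided, it also generates a static Pod manifest for etcd. The manifests are written to /etc/kubernetes/manifests; the kubelet watches this directory and creates the corresponding Pods.

6. If step 5 goes well, the k8s control-plane processes (api-server, controller-manager, scheduler) are now all up.

7. If --feature-gates=DynamicKubeletConfig was passed, further kubelet operations follow; they are not covered in detail because the feature was not used.

8. It labels and taints the current node (the master) so that other workloads are not scheduled on it.

9. It generates a token that other nodes use to join the cluster of the current node (the master).

10. It performs the steps needed to let nodes join via Bootstrap Tokens and TLS bootstrapping:

    - sets up RBAC rules and creates a ConfigMap for joining nodes (containing all the information needed to join);

    - lets Bootstrap Tokens access the CSR signing API;

    - configures automatic approval for new CSR requests.

11. It installs the DNS server (CoreDNS by default since version 1.11, kube-dns in earlier versions) and the kube-proxy addon through the API server. Note that the DNS server is only actually deployed after a CNI plugin (flannel or calico) is installed; until then it stays in the Pending state.

That is essentially the whole workflow.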
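The files mentioned in the workflow above can be checked on master after kubeadm init finishes. The function below is a sketch under assumptions: the name `missing_init_files` is made up, the file list covers only the kubeconfig files, the CA certificate, and the static Pod manifests named in this article, and `etcd.yaml` is expected only when no external etcd is used.

```shell
# missing_init_files [ROOT]: print every expected file that is absent under ROOT.
# ROOT defaults to /etc/kubernetes; no output means everything in the list is present.
missing_init_files() {
    local root="${1:-/etc/kubernetes}"
    local f
    for f in admin.conf kubelet.conf controller-manager.conf scheduler.conf \
             pki/ca.crt \
             manifests/kube-apiserver.yaml manifests/kube-controller-manager.yaml \
             manifests/kube-scheduler.yaml manifests/etcd.yaml; do
        [ -e "$root/$f" ] || echo "$f"
    done
}
```

An empty result from `missing_init_files` suggests that the certificate, kubeconfig, and static Pod steps all completed.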

Error

Don't panic: this is a version problem. Changing 1.19 to 1.20 fixes it.

Error

[ERROR ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0: output: Error response from daemon: pull access denied for registry.aliyuncs.com/google_containers/coredns/coredns, repository does not exist or may require 'docker login': denied: requested access to the resource is denied

Fix

docker pull coredns/coredns:1.8.0

docker tag coredns/coredns:1.8.0 registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0

Note: this only fetches the image on master. Every node needs it, so pull and tag the image on each node.

Save the kubeadm join command from the kubeadm init output, because it is needed later to add nodes to the cluster:

kubeadm join 10.70.36.251:6443 --token 52ejyu.nxdlclgc8ablceht \

--discovery-token-ca-cert-hash sha256:578c11c62f423dc241d44b96e88804a3bbe6f6c525bb7b10920ce19843b41c09

2. Configure the kubectl tool

[root@master ~]# mkdir -p /root/.kube

[root@master ~]# cp /etc/kubernetes/admin.conf /root/.kube/config

[root@master ~]# kubectl get nodes

[root@master ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused

controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused

etcd-0 Healthy {"health":"true"}

This shows that controller-manager and scheduler are Unhealthy:

controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused

scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused

Fix:

In /etc/kubernetes/manifests, comment out the - --port=0 line in kube-controller-manager.yaml and kube-scheduler.yaml

[root@master manifests]# vi /etc/kubernetes/manifests/kube-controller-manager.yaml

[root@master manifests]# vi /etc/kubernetes/manifests/kube-scheduler.yaml
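The same edit can be made without opening an editor. The following is a sketch assuming GNU sed (as shipped with CentOS) and assuming the argument appears in the manifest as a list item of the form `- --port=0`; the function name `comment_out_port_zero` is hypothetical. It edits in place without a backup suffix on purpose, because a backup copy left inside the manifests directory could be picked up by the kubelet as another static Pod.

```shell
# comment_out_port_zero FILE...: comment out the "- --port=0" argument in each manifest.
# Lines that are already commented out are left untouched, so the call can be repeated.
comment_out_port_zero() {
    local manifest
    for manifest in "$@"; do
        # Keep the original indentation and put "# " in front of the list item.
        sed -i 's/^\([[:space:]]*\)- --port=0/\1# - --port=0/' "$manifest"
    done
}
```

On master this could be called as `comment_out_port_zero /etc/kubernetes/manifests/kube-controller-manager.yaml /etc/kubernetes/manifests/kube-scheduler.yaml`.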

Restart the kubelet service

[root@master ~]# systemctl restart kubelet

[root@master ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

Joining Kubernetes nodes to the cluster (run on the node nodes)

Run the kubeadm join command printed by kubeadm init:

kubeadm join 10.70.36.251:6443 --token 52ejyu.nxdlclgc8ablceht \

--discovery-token-ca-cert-hash sha256:578c11c62f423dc241d44b96e88804a3bbe6f6c525bb7b10920ce19843b41c09

Output:

[root@node1 ~]# kubeadm join 10.70.36.251:6443 --token sgtd6y.ah3g5wr5im49s9ph \

> --discovery-token-ca-cert-hash sha256:dcfa8bca6c6149cf1a27b70ca9e9c5202ce17e8ba01bfffc6759610031139531

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Hostname]: hostname "node1" could not be reached

[WARNING Hostname]: hostname "node1": lookup node1 on 114.114.114.114:53: no such host

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Check node status on master

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady master 11m v1.19.4

node1 NotReady <none> 21s v1.19.4

node2 NotReady <none> 11s v1.19.4

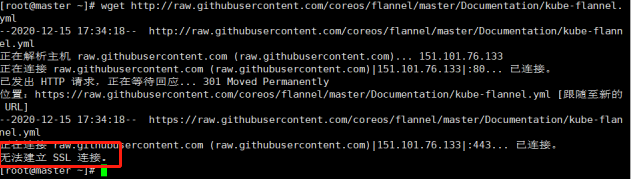

Installing a network plugin

Option 1: install flannel

[root@master ~]# cat /etc/hosts

10.70.36.251 master

10.70.36.252 node1

10.70.36.253 node2

151.101.76.133 raw.githubusercontent.com

There are two ways to install flannel:

1. Download the manifest

[root@master ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

--2020-12-07 12:56:11-- https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

正在解析主机 raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.76.133

正在连接 raw.githubusercontent.com (raw.githubusercontent.com)|151.101.76.133|:443... 已连接。

已发出 HTTP 请求,正在等待回应... 200 OK

长度:4821 (4.7K) [text/plain]

正在保存至: “kube-flannel.yml”

100%[========================================================>] 4,821 --.-K/s 用时 0s

2020-12-07 12:56:12 (63.7 MB/s) - 已保存 “kube-flannel.yml” [4821/4821])

[root@master ~]#

[root@master ~]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel configured

clusterrolebinding.rbac.authorization.k8s.io/flannel configured

serviceaccount/flannel unchanged

configmap/kube-flannel-cfg configured

daemonset.apps/kube-flannel-ds created

Error

Fix: change https to http.

2. Or apply it directly with a command

[root@master ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged configured

clusterrole.rbac.authorization.k8s.io/flannel configured

clusterrolebinding.rbac.authorization.k8s.io/flannel configured

serviceaccount/flannel unchanged

configmap/kube-flannel-cfg configured

daemonset.apps/kube-flannel-ds unchanged

Check that the installation has completed

[root@master ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6d56c8448f-js7b8 1/1 Running 0 18m

kube-system coredns-6d56c8448f-vsqfs 1/1 Running 0 18m

kube-system etcd-master 1/1 Running 0 18m

kube-system kube-apiserver-master 1/1 Running 0 18m

kube-system kube-controller-manager-master 1/1 Running 0 17m

kube-system kube-flannel-ds-2bzzb 1/1 Running 0 109s

kube-system kube-flannel-ds-ddtqt 1/1 Running 0 109s

kube-system kube-flannel-ds-s6kkj 1/1 Running 0 109s

kube-system kube-proxy-8m4fw 1/1 Running 0 18m

kube-system kube-proxy-kdnz4 1/1 Running 0 7m50s

kube-system kube-proxy-tfpt2 1/1 Running 0 7m40s

kube-system kube-scheduler-master 1/1 Running 0 17m

[root@master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6d56c8448f-js7b8 1/1 Running 0 26m

coredns-6d56c8448f-vsqfs 1/1 Running 0 26m

etcd-master 1/1 Running 0 26m

kube-apiserver-master 1/1 Running 0 26m

kube-controller-manager-master 1/1 Running 0 26m

kube-flannel-ds-2bzzb 1/1 Running 0 10m

kube-flannel-ds-ddtqt 1/1 Running 0 10m

kube-flannel-ds-s6kkj 1/1 Running 0 10m

kube-proxy-8m4fw 1/1 Running 0 26m

kube-proxy-kdnz4 1/1 Running 0 16m

kube-proxy-tfpt2 1/1 Running 0 16m

kube-scheduler-master 1/1 Running 0 25m

Option 2: install the Calico network plugin

wget https://docs.projectcalico.org/manifests/calico.yaml

vi calico.yaml

Edit the line that defines the Pod network (CALICO_IPV4POOL_CIDR); its value must match the --pod-network-cidr passed to kubeadm init.
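The edit can also be scripted. The sketch below rests on an assumption about the downloaded manifest: that CALICO_IPV4POOL_CIDR is present as two commented-out lines, `# - name: CALICO_IPV4POOL_CIDR` followed by `#   value: "192.168.0.0/16"`. Check your copy of calico.yaml first, since the layout may differ between releases. The function name `set_calico_pool_cidr` is made up, and GNU sed is assumed.

```shell
# set_calico_pool_cidr FILE CIDR: uncomment CALICO_IPV4POOL_CIDR in FILE and set it to CIDR.
# Assumes the manifest ships the variable commented out with the default 192.168.0.0/16.
set_calico_pool_cidr() {
    local manifest="$1"
    local cidr="$2"
    sed -i \
        -e 's|^\([[:space:]]*\)# - name: CALICO_IPV4POOL_CIDR|\1- name: CALICO_IPV4POOL_CIDR|' \
        -e "s|^\([[:space:]]*\)#   value: \"192.168.0.0/16\"|\1  value: \"$cidr\"|" \
        "$manifest"
}
```

For the cluster in this article the call would be `set_calico_pool_cidr calico.yaml 10.244.0.0/16`, followed by a quick `grep -A1 CALICO_IPV4POOL_CIDR calico.yaml` to confirm the result.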

[root@master ~]# kubectl apply -f calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

poddisruptionbudget.policy/calico-kube-controllers created

[root@master ~]# kubectl get pod -owide -nkube-system

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-5dc87d545c-nqxbb 1/1 Running 0 2m7s 10.244.166.129 node1 <none> <none>

calico-node-9ffl6 1/1 Running 0 2m7s 10.70.36.251 node1 <none> <none>

calico-node-jcn5x 1/1 Running 0 2m7s 10.70.36.250 master <none> <none>

calico-node-k27jc 1/1 Running 0 2m7s 10.70.36.252 node2 <none> <none>

coredns-6d56c8448f-czqbs 1/1 Running 0 30m 10.244.104.1 node2 <none> <none>

coredns-6d56c8448f-hz7rp 1/1 Running 0 30m 10.244.166.130 node1 <none> <none>

etcd-master 1/1 Running 0 30m 10.70.36.250 master <none> <none>

kube-apiserver-master 1/1 Running 0 30m 10.70.36.250 master <none> <none>

kube-controller-manager-master 1/1 Running 0 17m 10.70.36.250 master <none> <none>

kube-proxy-dc6vm 1/1 Running 0 19m 10.70.36.252 node2 <none> <none>

kube-proxy-z6srj 1/1 Running 0 19m 10.70.36.251 node1 <none> <none>

kube-proxy-zzwnw 1/1 Running 0 30m 10.70.36.250 master <none> <none>

kube-scheduler-master 1/1 Running 0 16m 10.70.36.250 master <none> <none>

[root@master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-5dc87d545c-nqxbb 1/1 Running 0 65m

calico-node-9ffl6 1/1 Running 0 65m

calico-node-jcn5x 1/1 Running 0 65m

calico-node-k27jc 1/1 Running 0 65m

coredns-6d56c8448f-czqbs 1/1 Running 0 93m

coredns-6d56c8448f-hz7rp 1/1 Running 0 93m

etcd-master 1/1 Running 0 93m

kube-apiserver-master 1/1 Running 0 93m

kube-controller-manager-master 1/1 Running 0 80m

kube-proxy-dc6vm 1/1 Running 0 83m

kube-proxy-z6srj 1/1 Running 0 83m

kube-proxy-zzwnw 1/1 Running 0 93m

kube-scheduler-master 1/1 Running 0 80m

[root@master ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-5dc87d545c-nqxbb 1/1 Running 0 66m

kube-system calico-node-9ffl6 1/1 Running 0 66m

kube-system calico-node-jcn5x 1/1 Running 0 66m

kube-system calico-node-k27jc 1/1 Running 0 66m

kube-system coredns-6d56c8448f-czqbs 1/1 Running 0 94m

kube-system coredns-6d56c8448f-hz7rp 1/1 Running 0 94m

kube-system etcd-master 1/1 Running 0 94m

kube-system kube-apiserver-master 1/1 Running 0 94m

kube-system kube-controller-manager-master 1/1 Running 0 81m

kube-system kube-proxy-dc6vm 1/1 Running 0 83m

kube-system kube-proxy-z6srj 1/1 Running 0 84m

kube-system kube-proxy-zzwnw 1/1 Running 0 94m

kube-system kube-scheduler-master 1/1 Running 0 81m

Option 3: install the weave network plugin

[root@master ~]# kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

serviceaccount/weave-net created

clusterrole.rbac.authorization.k8s.io/weave-net created

clusterrolebinding.rbac.authorization.k8s.io/weave-net created

role.rbac.authorization.k8s.io/weave-net created

rolebinding.rbac.authorization.k8s.io/weave-net created

daemonset.apps/weave-net created

[root@master ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6d56c8448f-czqbs 1/1 Running 0 96m

kube-system coredns-6d56c8448f-hz7rp 1/1 Running 0 96m

kube-system etcd-master 1/1 Running 0 96m

kube-system kube-apiserver-master 1/1 Running 0 96m

kube-system kube-controller-manager-master 1/1 Running 0 83m

kube-system kube-proxy-dc6vm 1/1 Running 0 86m

kube-system kube-proxy-z6srj 1/1 Running 0 86m

kube-system kube-proxy-zzwnw 1/1 Running 0 96m

kube-system kube-scheduler-master 1/1 Running 0 83m

kube-system weave-net-h7jlv 2/2 Running 0 39s

kube-system weave-net-q5r95 2/2 Running 0 39s

kube-system weave-net-t4pc9 2/2 Running 0 39s

Check cluster status

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 19m v1.19.4

node1 Ready <none> 8m7s v1.19.4

node2 Ready <none> 7m57s v1.19.4
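When the check is repeated while waiting for the network plugin to come up, a small filter over the `kubectl get nodes` output saves reading the table each time. This is only a sketch; `not_ready_nodes` is a made-up name, and it assumes the default column layout shown above, with NAME first and STATUS second.

```shell
# not_ready_nodes: read `kubectl get nodes` output on stdin and print the names of
# nodes whose STATUS column is anything other than exactly "Ready".
# A cordoned node (for example "Ready,SchedulingDisabled") is therefore listed too.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

It is meant to be used as `kubectl get nodes | not_ready_nodes`; empty output means every node reports Ready. As an alternative, `kubectl wait --for=condition=Ready nodes --all --timeout=300s` blocks until the nodes are ready.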

After deployment, the ROLES of node1 and node2 show <none>.

Set the roles of node1 and node2:

kubectl label nodes node1 node-role.kubernetes.io/node= # if the node is registered under its IP, use the IP instead of node1, e.g. kubectl label nodes 10.70.36.252 node-role.kubernetes.io/node=

kubectl label nodes node2 node-role.kubernetes.io/node= # likewise for node2, e.g. kubectl label nodes 10.70.36.253 node-role.kubernetes.io/node=

The same approach applies to other roles.

Setting cluster roles

# 1. Give a node the master role

kubectl label nodes test1 node-role.kubernetes.io/master=

# 2. Give a node the node role

kubectl label nodes 192.168.0.92 node-role.kubernetes.io/node=

# 3. Make master normally not accept workloads

kubectl taint nodes test1 node-role.kubernetes.io/master=true:NoSchedule

# 4. Allow master to run Pods

kubectl taint nodes test1 node-role.kubernetes.io/master-

# 5. Prevent master from running Pods

kubectl taint nodes test1 node-role.kubernetes.io/master=:NoSchedule

Manually re-adding a node to the cluster

If for some reason a node cannot join the cluster automatically, reset it and add it again by hand.

First delete node2 on the master node.

kubectl delete node node2

Then reset on node2.

kubeadm reset

Running kubeadm join again then fails because the token has expired, so generate a new token on the master node.

[root@master ~]# kubeadm token create

v269qh.2mylwtmc96kd28sq

Generate the sha256 value for ca-cert-hash.

[root@master ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | \

> openssl dgst -sha256 -hex | sed 's/^.* //'

84e50f7beaa4d3296532ae1350330aaf79f3f0d45ec8623fae6cd9fe9a804635

Then run kubeadm join again on the node with the new token and hash to add it to the cluster.
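Putting the new token and the hash together by hand is error-prone, so the join command can be assembled with a small helper. This is a sketch only: `join_cmd` is a hypothetical name, and the three arguments are the API server endpoint, the token, and the hash without its `sha256:` prefix.

```shell
# join_cmd ENDPOINT TOKEN HASH: print the kubeadm join command for a worker node.
# HASH is the bare hex digest produced by the openssl pipeline; "sha256:" is added here.
join_cmd() {
    local endpoint="$1"
    local token="$2"
    local hash="$3"
    printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
        "$endpoint" "$token" "$hash"
}
```

With the values from this section, `join_cmd 10.70.36.251:6443 v269qh.2mylwtmc96kd28sq 84e50f7beaa4d3296532ae1350330aaf79f3f0d45ec8623fae6cd9fe9a804635` prints the line to run on the node. kubeadm can also do both steps at once with `kubeadm token create --print-join-command`.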

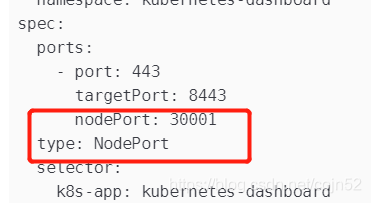

Deploying the Dashboard

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.2.0/aio/deploy/recommended.yaml

By default the Dashboard is only reachable from inside the cluster. Change the Service to type NodePort to expose it externally:

vi recommended.yaml

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  type: NodePort
  selector:
    k8s-app: kubernetes-dashboard

kubectl apply -f recommended.yaml

kubectl get pods,svc -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

pod/dashboard-metrics-scraper-694557449d-z8gfb 1/1 Running 0 2m18s

pod/kubernetes-dashboard-9774cc786-q2gsx 1/1 Running 0 2m19s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/dashboard-metrics-scraper ClusterIP 10.1.0.141 <none> 8000/TCP 2m19s

service/kubernetes-dashboard NodePort 10.1.0.239 <none> 443:30001/TCP 2m19s

URL: https://NodeIP:30001

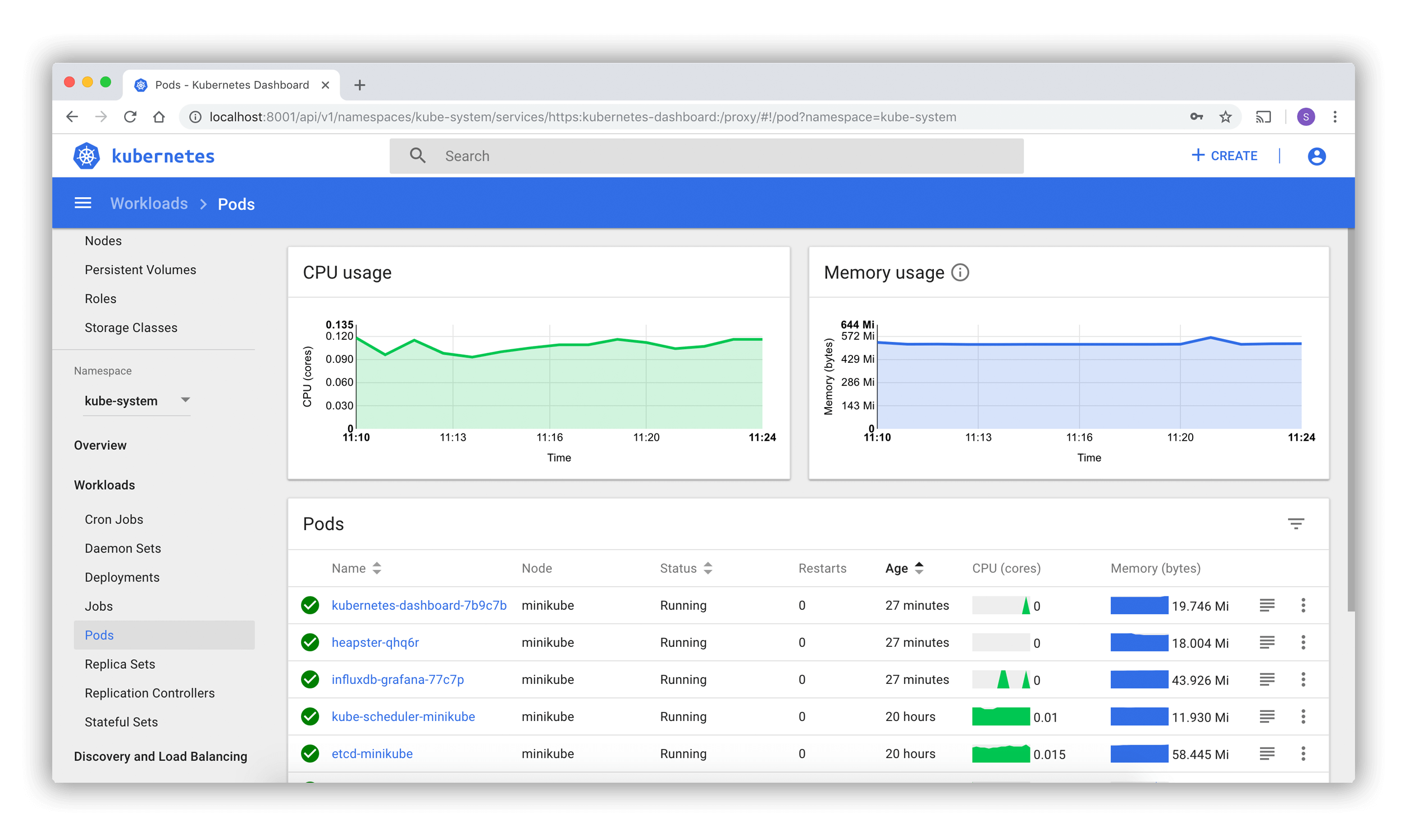

Create a service account and bind it to the default cluster-admin cluster role:

kubectl create serviceaccount dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Copy the token from the output into the Token field shown above, choose token login, and sign in to the Dashboard.

https://cloud.tencent.com/developer/article/1600672?from=information.detail.kubeadm%E9%83%A8%E7%BD%B2k8s