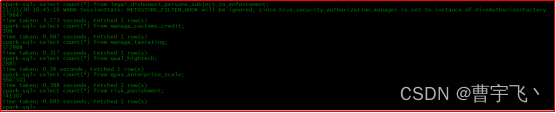

Queries through spark-sql work normally.

Queries through Hive SQL fail:

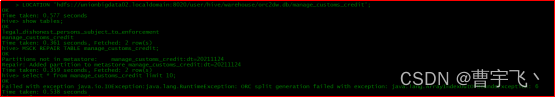

Failed with exception java.io.IOException:java.lang.RuntimeException: ORC split generation failed with exception: java.lang.ArrayIndexOutOfBoundsException: 6

Queries through spark-shell also fail.

The query reports:

Truncated the string representation of a plan since it was too large. This behavior can be adjusted by setting 'spark.debug.maxToStringFields' in SparkEnv.conf.

No data is returned.


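Hive SQL query failure

Solution

Reference link:

Replace the following jar files on the CDH cluster (this must be done on every node; back up the original files first), then restart the Hive client: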

/opt/cloudera/parcels/CDH/lib/hive/lib/hive-exec-2.1.1-cdh6.2.1.jar

/opt/cloudera/parcels/CDH/jars/hive-exec-2.1.1-cdh6.2.1.jar

/opt/cloudera/parcels/CDH/lib/spark/hive/hive-exec-2.1.1-cdh6.2.1.jar
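The backup-then-replace step above could be scripted per node roughly as follows. This is a minimal sketch, not the procedure the original author used: the function name `replace_jar`, the `.bak` suffix, and the patched jar location in the usage comment are all hypothetical, and the source does not say where the replacement jar comes from. Paths are resolved first because jars under a parcel's `lib` directories may be symlinks to the same physical file, in which case the file should be backed up and replaced only once.

```python
import shutil
from pathlib import Path

# The three locations listed above; adjust if your parcel layout differs.
TARGET_JARS = [
    Path("/opt/cloudera/parcels/CDH/lib/hive/lib/hive-exec-2.1.1-cdh6.2.1.jar"),
    Path("/opt/cloudera/parcels/CDH/jars/hive-exec-2.1.1-cdh6.2.1.jar"),
    Path("/opt/cloudera/parcels/CDH/lib/spark/hive/hive-exec-2.1.1-cdh6.2.1.jar"),
]


def replace_jar(patched_jar, targets, backup_suffix=".bak"):
    """Back up each existing target jar once, then copy the patched jar over it.

    Returns the list of real (symlink-resolved) files that were replaced.
    """
    patched_jar = Path(patched_jar)
    replaced = []
    seen = set()
    for target in targets:
        real = Path(target).resolve()  # follow symlinks to the physical file
        if real in seen or not real.is_file():
            continue  # already handled via another path, or not present on this node
        seen.add(real)
        backup = real.with_name(real.name + backup_suffix)
        if not backup.exists():
            # Never overwrite an earlier backup, so re-runs keep the original jar.
            shutil.copy2(real, backup)
        shutil.copy2(patched_jar, real)
        replaced.append(real)
    return replaced


# Example (hypothetical patched jar location), run on each node:
# replace_jar("/tmp/hive-exec-2.1.1-cdh6.2.1.jar", TARGET_JARS)
```

Restoring is the reverse copy from the `.bak` file. The Hive client still needs to be restarted afterwards, as noted above.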

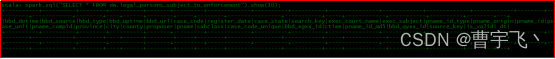

spark-shell failure

Solution

Reference link:

[SPARK-15705] Spark won’t read ORC schema from metastore for partitioned tables – ASF JIRA

Add the following settings to spark-defaults.conf:

spark.debug.maxToStringFields=200

spark.sql.hive.convertMetastoreOrc=false
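If the two settings above have to be applied on several nodes, a small helper can add or update them without duplicating keys. The following is a sketch under assumptions: the function name `upsert_spark_defaults` is hypothetical, and the path to `spark-defaults.conf` depends on the installation, so it is passed in as a parameter. Existing lines are matched whether they use whitespace or `=` as the separator, since both forms can appear in this file.

```python
import re
from pathlib import Path

# The two settings from the text above.
SETTINGS = {
    "spark.debug.maxToStringFields": "200",
    "spark.sql.hive.convertMetastoreOrc": "false",
}


def upsert_spark_defaults(conf_path, settings):
    """Set each key in `settings` in the file, replacing existing entries.

    Returns the resulting list of lines (without trailing newlines).
    """
    conf_path = Path(conf_path)
    lines = conf_path.read_text().splitlines() if conf_path.exists() else []
    pending = dict(settings)
    out = []
    for line in lines:
        stripped = line.strip()
        key = None
        if stripped and not stripped.startswith("#"):
            # The key is everything before the first '=' or whitespace.
            key = re.split(r"[=\s]", stripped, maxsplit=1)[0]
        if key in settings:
            if key in pending:
                out.append(f"{key}={pending.pop(key)}")
            # Later duplicates of a managed key are dropped.
        else:
            out.append(line)
    # Append any settings that were not already present.
    out.extend(f"{k}={v}" for k, v in pending.items())
    conf_path.write_text("\n".join(out) + "\n")
    return out
```

Running the helper twice leaves the file unchanged after the first run. A new spark-shell session has to be started for the settings to take effect.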