Deploying a K8S Cluster from Binaries: From 0 to 1

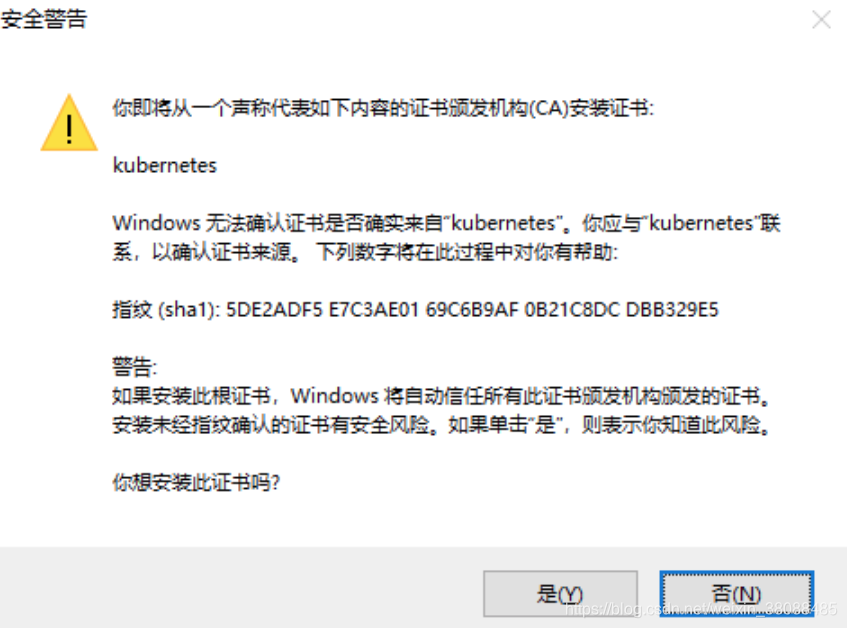

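Introduction: the components of a Kubernetes cluster encrypt their communication with TLS certificates. This document uses cfssl, the PKI toolkit from CloudFlare, to generate the Certificate Authority (CA) and the other certificates.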

Managing TLS in a Cluster

Preface

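Every Kubernetes cluster has a cluster root Certificate Authority (CA). The components in the cluster typically use this CA to validate the API server's certificate, the API server uses it to validate kubelet client certificates, and so on. To support this, the CA certificate bundle is distributed to every node in the cluster and is also distributed as a secret attached to default service accounts. Optionally, your workloads can use this CA to establish trust. Your application can request certificate signing through the certificates.k8s.io API, using a protocol similar to the ACME draft.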

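TLS trust within the cluster

Getting an application running in a Pod to trust the cluster root CA usually requires some extra application configuration: you need to add the CA certificate bundle to the list of CA certificates that the TLS client or server trusts. For example, in Go you can parse the certificate chain and add the parsed certificates to the RootCAs field of the tls.Config struct. The CA certificate bundle is automatically mounted into Pods that use the default service account, at the path /var/run/secrets/kubernetes.io/serviceaccount/ca.crt. If you are not using the default service account, ask a cluster administrator to build a ConfigMap containing the certificate bundle that you have access to use.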
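The same trust setup can also be exercised from a shell inside a Pod. The sketch below is not from the original guide: it uses curl's --cacert option to verify the API server certificate against the mounted ca.crt and authenticates with the mounted service account token. It assumes curl exists in the container image and that the in-cluster DNS name kubernetes.default.svc resolves; SA_DIR and api_get are hypothetical names introduced only for this example.

```shell
# Sketch: call the API server from inside a Pod while trusting the cluster root CA.
# SA_DIR and api_get are hypothetical names; SA_DIR defaults to the directory where
# the default service account's files are mounted.
SA_DIR=${SA_DIR:-/var/run/secrets/kubernetes.io/serviceaccount}

api_get() {
  local path=${1:-/api}
  # Fail early with a readable message when not running inside a Pod.
  if [[ ! -r $SA_DIR/ca.crt || ! -r $SA_DIR/token ]]; then
    echo "service account files not found under $SA_DIR" >&2
    return 1
  fi
  # --cacert: verify the API server certificate against the cluster root CA.
  # The bearer token identifies the Pod's service account to the API server.
  curl --silent --show-error --cacert "$SA_DIR/ca.crt" \
    -H "Authorization: Bearer $(cat "$SA_DIR/token")" \
    "https://kubernetes.default.svc${path}"
}

# Usage inside a Pod:
#   api_get /api
```

Whether the request is then allowed depends on the RBAC permissions of the Pod's service account; the CA only establishes that the API server is the one you expect.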

Cluster Deployment

Environment planning

| Software | Version |
|---|---|
| Linux OS | CentOS Linux release 7.6.1810 (Core) |
| Kubernetes | 1.14.2 |
| Docker | 18.06.1-ce |
| Etcd | 3.3.1 |

| Role | IP | Components | Recommended spec |
|---|---|---|---|
| k8s-master | 172.16.4.12 | kube-apiserver kube-controller-manager kube-scheduler etcd | 8 cores, 16 GB RAM |
| k8s-node1 | 172.16.4.13 | kubelet kube-proxy docker flannel etcd | Size according to the number of containers to run |
| k8s-node2 | 172.16.4.14 | kubelet kube-proxy docker flannel etcd | Size according to the number of containers to run |

| Component | Certificates used |
|---|---|
| etcd | ca.pem, server.pem, server-key.pem |
| kube-apiserver | ca.pem, server.pem, server-key.pem |
| kubelet | ca.pem, ca-key.pem |
| kube-proxy | ca.pem, kube-proxy.pem, kube-proxy-key.pem |
| kubectl | ca.pem, admin.pem, admin-key.pem |
| kube-controller-manager | ca.pem, ca-key.pem |
| flannel | ca.pem, server.pem, server-key.pem |

Environment preparation

Run the following steps on the master node and on every worker node:

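- Install some useful packages (optional)

# net-tools provides ifconfig, netstat and related commands

yum install -y net-tools

# Install curl and telnet

yum install -y curl telnet

# Install the vim editor

yum install -y vim

# Install the wget downloader

yum install -y wget

# Install lrzsz, which lets you upload files to the server, or download them locally, by dragging them into Xshell.

yum -y install lrzsz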

- Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

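- Disable SELinux

# Set SELINUX=disabled in the config file (takes effect after a reboot)

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

# Switch to permissive mode immediately for the current boot

setenforce 0

# Alternatively, open /etc/selinux/config, set the following field, and reboot for it to take effect:

SELINUX=disabled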

- Disable swap

swapoff -a # temporary, until the next reboot

vim /etc/fstab # permanent: comment out the swap entry

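- Make sure net.bridge.bridge-nf-call-iptables is set to 1 in the sysctl configuration. The net.bridge.* keys only exist after the br_netfilter kernel module has been loaded, so load it first:

$ modprobe br_netfilter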

$ cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward =1

net.bridge.bridge-nf-call-ip6tables =1

net.bridge.bridge-nf-call-iptables =1

EOF

$ sysctl --system
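After sysctl --system, the values can be confirmed by reading them back from /proc/sys, where each dotted sysctl key maps to a path (net.ipv4.ip_forward becomes net/ipv4/ip_forward). A minimal sketch; check_sysctls and PROC_SYS are hypothetical names, and PROC_SYS exists only so the check can be pointed at a copy of the tree.

```shell
# Sketch: verify that the kernel parameters from /etc/sysctl.d/k8s.conf are active.
# check_sysctls and PROC_SYS are hypothetical names; PROC_SYS defaults to /proc/sys.
PROC_SYS=${PROC_SYS:-/proc/sys}

check_sysctls() {
  local key file rc=0
  for key in net.ipv4.ip_forward \
             net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables; do
    # Dotted key -> path below /proc/sys, e.g. net/ipv4/ip_forward
    file="$PROC_SYS/$(tr . / <<< "$key")"
    if [[ ! -r $file ]]; then
      # The net.bridge.* entries only appear after "modprobe br_netfilter".
      echo "$key: not present" >&2
      rc=1
    elif [[ $(<"$file") != 1 ]]; then
      echo "$key is $(<"$file"), expected 1" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage:
#   check_sysctls && echo "sysctl settings OK"
```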

- Add the hostname-to-IP mappings (required on both the master and the nodes)

$ vim /etc/hosts

172.16.4.12 k8s-master

172.16.4.13 k8s-node1

172.16.4.14 k8s-node2

- Synchronize the clocks

yum install ntpdate -y

ntpdate ntp.api.bz

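Kubernetes needs a container runtime, accessed through the Container Runtime Interface (CRI). The officially supported runtimes currently include Docker, containerd, CRI-O and frakti. This guide uses Docker as the container runtime; the recommended versions are Docker CE 18.06 or 18.09.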

- Install Docker

# Configure the Aliyun mirror for Docker; note that the command below must be run inside /etc/yum.repos.d.

[root@k8s-master yum.repos.d]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

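# Refresh the repository metadata and list the available repositories; docker-ce-stable should now appear. Note that yum update also upgrades any outdated packages.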

[root@k8s-master yum.repos.d]# yum update && yum repolist

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirror.lzu.edu.cn

* extras: mirrors.nwsuaf.edu.cn

* updates: mirror.lzu.edu.cn

docker-ce-stable | 3.5 kB 00:00:00

(1/2): docker-ce-stable/x86_64/updateinfo | 55 B 00:00:00

(2/2): docker-ce-stable/x86_64/primary_db | 28 kB 00:00:00

No packages marked for update

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirror.lzu.edu.cn

* extras: mirrors.nwsuaf.edu.cn

* updates: mirror.lzu.edu.cn

repo id repo name status

base/7/x86_64 CentOS-7 - Base 10,019

docker-ce-stable/x86_64 Docker CE Stable - x86_64 43

extras/7/x86_64 CentOS-7 - Extras 409

updates/7/x86_64 CentOS-7 - Updates 2,076

repolist: 12,547

# List the available docker-ce versions; the stable 18.06 or 18.09 releases are recommended.

yum list docker-ce.x86_64 --showduplicates | sort -r

# Install Docker; docker-ce-18.06.3.ce-3.el7 is used as the example here.

yum -y install docker-ce-18.06.3.ce-3.el7

# The install may print "Delta RPMs disabled because /usr/bin/applydeltarpm not installed."; resolve it with the following commands.

yum provides '*/applydeltarpm'

yum install deltarpm -y

# Then rerun the install command

yum -y install docker-ce-18.06.3.ce-3.el7

# After installation, enable Docker to start at boot.

systemctl enable docker

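Note: all of the following steps are run on the master node, 172.16.4.12. The certificates only need to be created once; when you add a new node to the cluster later, just copy the certificates under /etc/kubernetes/ to the new node.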

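Create TLS certificates and keys

- Install CFSSL from the prebuilt binaries

# First create the directory that will hold the certificates

$ mkdir -p /root/ssl && cd /root/ssl

# Download cfssl, the tool that generates the certificates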

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

# cfssljson writes cfssl's JSON output out to certificate and key files

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

# cfssl-certinfo displays certificate information

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

# Make the files executable

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

# Move the binaries into /usr/local/bin

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

# As a non-root user, you may need to add /usr/local/bin to PATH

export PATH=/usr/local/bin:$PATH

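Create the CA (Certificate Authority)

Note: the following commands are still run in the /root/ssl directory.

- Create the CA configuration file

# Generate a default config

$ cfssl print-defaults config > config.json

# Generate a default certificate signing request

$ cfssl print-defaults csr > csr.json

# Following the format of config.json, create the ca-config.json file below; the expiry is set to 87600h (10 years)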

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

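Field descriptions:

- ca-config.json: can define multiple profiles, each with its own expiry, usages and other parameters; a specific profile is selected later when signing a certificate;

- signing: the certificate can be used to sign other certificates; the generated ca.pem has CA=TRUE;

- server auth: a client can use this CA to verify the certificate presented by a server;

- client auth: a server can use this CA to verify the certificate presented by a client;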

- Create the CA certificate signing request

# Create the ca-csr.json file with the following content

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

],

"ca": {

"expiry": "87600h"

}

}

EOF

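- "CN": Common Name. kube-apiserver extracts this field from the certificate and uses it as the request's user name (User Name); browsers use it to check whether a website is legitimate;

- "O": Organization. kube-apiserver extracts this field from the certificate and uses it as the group (Group) of the requesting user;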

- Generate the CA certificate and private key

[root@k8s-master ~]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

2019/06/12 11:08:53 [INFO] generating a new CA key and certificate from CSR

2019/06/12 11:08:53 [INFO] generate received request

2019/06/12 11:08:53 [INFO] received CSR

2019/06/12 11:08:53 [INFO] generating key: rsa-2048

2019/06/12 11:08:53 [INFO] encoded CSR

2019/06/12 11:08:53 [INFO] signed certificate with serial number 708489059891717538616716772053407287945320812263

# The /root/ssl directory should now contain the following files.

[root@k8s-master ssl]# ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem

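- Create the Kubernetes certificate

Create the Kubernetes certificate signing request file server-csr.json (also called kubernetes-csr.json) and add the trusted IPs to hosts; for example, the IPs of my three nodes are 172.16.4.12, 172.16.4.13 and 172.16.4.14.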

$ cat > server-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"172.16.4.12",

"172.16.4.13",

"172.16.4.14",

"10.10.10.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

# Generate the Kubernetes certificate and private key

[root@k8s-master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

2019/06/12 12:00:45 [INFO] generate received request

2019/06/12 12:00:45 [INFO] received CSR

2019/06/12 12:00:45 [INFO] generating key: rsa-2048

2019/06/12 12:00:45 [INFO] encoded CSR

2019/06/12 12:00:45 [INFO] signed certificate with serial number 276381852717263457656057670704331293435930586226

2019/06/12 12:00:45 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

# Check the generated server.pem and server-key.pem

[root@k8s-master ssl]# ls server*

server.csr server-csr.json server-key.pem server.pem

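- If the hosts field is not empty, it must list the IPs or domain names authorized to use this certificate. Because this certificate is used later by both the etcd cluster and the Kubernetes master, the list above includes the host IPs of the etcd cluster and of the Kubernetes master, plus the service IP of the kubernetes service (normally the first IP of the service-cluster-ip-range network given to kube-apiserver, e.g. 10.10.10.1).

- This is a minimal Kubernetes installation: a private image registry plus a three-node Kubernetes cluster. The physical node IPs above can also be replaced with hostnames.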
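The "first IP of service-cluster-ip-range" rule is plain IPv4 arithmetic: mask the address down to the network address and add one. The helper below is a hypothetical sketch, not part of cfssl or Kubernetes, and assumes an IPv4 CIDR with a prefix of /30 or shorter.

```shell
# Sketch: print the first usable IPv4 address of a CIDR, i.e. the ClusterIP that the
# "kubernetes" Service receives when the CIDR is used as --service-cluster-ip-range.
# first_service_ip is a hypothetical helper (IPv4 only, prefix length /0 to /30).
first_service_ip() {
  local cidr=$1 ip prefix a b c d n mask
  ip=${cidr%/*}
  prefix=${cidr#*/}
  IFS=. read -r a b c d <<< "$ip"
  n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  # Keep the top $prefix bits (bash arithmetic is 64-bit, so trim back to 32 bits).
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  n=$(( (n & mask) + 1 ))   # network address + 1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Usage:
#   first_service_ip 10.10.10.0/24   # the service IP to list in server-csr.json "hosts"
```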

- Create the admin certificate

Create the admin certificate signing request file admin-csr.json:

cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

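- Later, kube-apiserver uses RBAC to authorize requests from clients (such as kubelet, kube-proxy and Pods);

- kube-apiserver predefines some ClusterRoleBindings used by RBAC; for example, cluster-admin binds the Group system:masters to the ClusterRole cluster-admin, which grants permission to call all kube-apiserver APIs;

- O sets the certificate's Group to system:masters. When kubectl uses this certificate to access kube-apiserver, authentication succeeds because the certificate is signed by the CA, and because the certificate's group is the pre-authorized system:masters, it is granted access to all APIs;

Note: this admin certificate is used later to generate the administrator's kubeconfig file. RBAC is now the generally recommended way to control roles and permissions in Kubernetes; Kubernetes uses the certificate's CN field as the User and its O field as the Group (for details, see the X509 Client Certs part of the article "Users and Authentication/Authorization in Kubernetes").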

Generate the admin certificate and private key

[root@k8s-master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

2019/06/12 14:52:32 [INFO] generate received request

2019/06/12 14:52:32 [INFO] received CSR

2019/06/12 14:52:32 [INFO] generating key: rsa-2048

2019/06/12 14:52:33 [INFO] encoded CSR

2019/06/12 14:52:33 [INFO] signed certificate with serial number 491769057064087302830652582150890184354925110925

2019/06/12 14:52:33 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

# Check the generated certificate and private key

[root@k8s-master ssl]# ls admin*

admin.csr admin-csr.json admin-key.pem admin.pem

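- Create the kube-proxy certificate

Create the kube-proxy certificate signing request file kube-proxy-csr.json, so that kube-proxy can present this certificate when accessing the cluster: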

cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

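- CN sets the certificate's User to system:kube-proxy;

- The ClusterRoleBinding system:node-proxier predefined by kube-apiserver binds the User system:kube-proxy to the ClusterRole system:node-proxier, which grants permission to call the proxy-related kube-apiserver APIs;

Generate the kube-proxy client certificate and private key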

[root@k8s-master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy && ls kube-proxy*

2019/06/12 14:58:09 [INFO] generate received request

2019/06/12 14:58:09 [INFO] received CSR

2019/06/12 14:58:09 [INFO] generating key: rsa-2048

2019/06/12 14:58:09 [INFO] encoded CSR

2019/06/12 14:58:09 [INFO] signed certificate with serial number 175491367066700423717230199623384101585104107636

2019/06/12 14:58:09 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

kube-proxy.csr kube-proxy-csr.json kube-proxy-key.pem kube-proxy.pem
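admin-csr.json and kube-proxy-csr.json differ only in CN and O, which kube-apiserver maps to User and Group. If you need more client certificates, a small hypothetical helper like the one below can write CSRs of the same shape. It is a sketch of this guide's JSON layout, not a cfssl feature; its output is meant to be fed to the same cfssl gencert ... | cfssljson -bare command used above.

```shell
# Sketch: write a client CSR JSON with the same layout as admin-csr.json and
# kube-proxy-csr.json. make_csr is a hypothetical helper, not part of cfssl.
#   $1 = CN (becomes the Kubernetes User), $2 = O (becomes the Group), $3 = output file
make_csr() {
  local cn=$1 org=$2 out=$3
  cat > "$out" <<EOF
{
  "CN": "${cn}",
  "hosts": [],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "${org}", "OU": "System" }
  ]
}
EOF
}

# Usage (reproduces the two client CSRs above):
#   make_csr admin system:masters admin-csr.json
#   make_csr system:kube-proxy k8s kube-proxy-csr.json
```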

- Verify the certificates

Taking the server certificate as an example:

Using the openssl command

[root@k8s-master ssl]# openssl x509 -noout -text -in server.pem

......

Signature Algorithm: sha256WithRSAEncryption

Issuer: C=CN, ST=Beijing, L=Beijing, O=k8s, OU=System, CN=kubernetes

Validity

Not Before: Jun 12 03:56:00 2019 GMT

Not After : Jun 9 03:56:00 2029 GMT

Subject: C=CN, ST=BeiJing, L=BeiJing, O=k8s, OU=System, CN=kubernetes

......

X509v3 extensions:

X509v3 Key Usage: critical

Digital Signature, Key Encipherment

X509v3 Extended Key Usage:

TLS Web Server Authentication, TLS Web Client Authentication

X509v3 Basic Constraints: critical

CA:FALSE

X509v3 Subject Key Identifier:

E9:99:37:41:CC:E9:BA:9A:9F:E6:DE:4A:3E:9F:8B:26:F7:4E:8F:4F

X509v3 Authority Key Identifier:

keyid:CB:97:D5:C3:5F:8A:EB:B5:A8:9D:39:DE:5F:4F:E0:10:8E:4C:DE:A2

X509v3 Subject Alternative Name:

DNS:kubernetes, DNS:kubernetes.default, DNS:kubernetes.default.svc, DNS:kubernetes.default.svc.cluster, DNS:kubernetes.default.svc.cluster.local, IP Address:127.0.0.1, IP Address:172.16.4.12, IP Address:172.16.4.13, IP Address:172.16.4.14, IP Address:10.10.10.1

......

- Confirm that the Issuer field matches ca-csr.json;

- Confirm that the Subject field matches server-csr.json;

- Confirm that the X509v3 Subject Alternative Name field matches server-csr.json;

- Confirm that the X509v3 Key Usage and Extended Key Usage fields match the kubernetes profile in ca-config.json;
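The SAN check is the one most often gotten wrong, because a missing node IP only shows up later as a TLS error. The function below is a minimal sketch (a hypothetical helper, not an openssl option) that reads openssl x509 -noout -text output on stdin and prints every expected DNS name or IP that is missing from the Subject Alternative Name list. It assumes the SAN values sit on the line right after the header, as in the output above.

```shell
# Sketch: print each expected SAN entry that is absent from a certificate.
# missing_sans is a hypothetical helper; stdin must be `openssl x509 -noout -text` output.
missing_sans() {
  local sans want
  # Take the line after the SAN header, drop spaces, split on commas and strip the
  # "DNS:" / "IPAddress:" prefixes (the space in "IP Address" was removed by tr).
  sans=$(grep -A1 'X509v3 Subject Alternative Name' | tail -n 1 | tr -d ' ' |
         tr ',' '\n' | sed -e 's/^DNS://' -e 's/^IPAddress://')
  for want in "$@"; do
    # -x -F: exact whole-line match, so 172.16.4.1 does not match 172.16.4.12
    grep -qxF -- "$want" <<< "$sans" || echo "$want"
  done
}

# Usage (no output means every entry is present):
#   openssl x509 -noout -text -in server.pem |
#     missing_sans 127.0.0.1 172.16.4.12 172.16.4.13 172.16.4.14 10.10.10.1 kubernetes.default.svc.cluster.local
```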

Using the cfssl-certinfo command

[root@k8s-master ssl]# cfssl-certinfo -cert server.pem

{

"subject": {

"common_name": "kubernetes",

"country": "CN",

"organization": "k8s",

"organizational_unit": "System",

"locality": "BeiJing",

"province": "BeiJing",

"names": [

"CN",

"BeiJing",

"BeiJing",

"k8s",

"System",

"kubernetes"

]

},

"issuer": {

"common_name": "kubernetes",

"country": "CN",

"organization": "k8s",

"organizational_unit": "System",

"locality": "Beijing",

"province": "Beijing",

"names": [

"CN",

"Beijing",

"Beijing",

"k8s",

"System",

"kubernetes"

]

},

"serial_number": "276381852717263457656057670704331293435930586226",

"sans": [

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local",

"127.0.0.1",

"172.16.4.12",

"172.16.4.13",

"172.16.4.14",

"10.10.10.1"

],

"not_before": "2019-06-12T03:56:00Z",

"not_after": "2029-06-09T03:56:00Z",

"sigalg": "SHA256WithRSA",

......

}

- Distribute the certificates

Copy the generated certificate and key files (those with the .pem suffix) into /etc/kubernetes/ssl on every machine for later use:

[root@k8s-master ssl]# mkdir -p /etc/kubernetes/ssl

[root@k8s-master ssl]# cp *.pem /etc/kubernetes/ssl

[root@k8s-master ssl]# ls /etc/kubernetes/ssl/

admin-key.pem admin.pem ca-key.pem ca.pem kube-proxy-key.pem kube-proxy.pem server-key.pem server.pem

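# Keep the .pem files and delete everything else (optional; note that the *.json files are needed if you ever regenerate a certificate)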

ls | grep -v pem |xargs -i rm {}

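Create the kubeconfig files

The following commands run on the master node. Where no working directory is given, they run in the user's home directory (/root for the root user).

Download kubectl

Note: download the package that matches your Kubernetes version.

# If the site below is unreachable, download the package from Baidu Cloud instead: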

wget https://dl.k8s.io/v1.14.3/kubernetes-client-linux-amd64.tar.gz

tar -xzvf kubernetes-client-linux-amd64.tar.gz

cp kubernetes/client/bin/kube* /usr/bin/

chmod a+x /usr/bin/kube*

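Create the kubectl kubeconfig file

# 172.16.4.12 is the master node's IP; change it to match your environment.

# Specify the HTTPS endpoint of the Kubernetes API server for the kubeconfig

export KUBE_APISERVER="https://172.16.4.12:6443"

# Set the cluster parameters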

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER}

# Set the client authentication parameters

kubectl config set-credentials admin \

--client-certificate=/etc/kubernetes/ssl/admin.pem \

--embed-certs=true \

--client-key=/etc/kubernetes/ssl/admin-key.pem

# Set the context parameters

kubectl config set-context kubernetes \

--cluster=kubernetes \

--user=admin

# Set the default context

kubectl config use-context kubernetes

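- The O field of the admin.pem certificate is system:masters; the ClusterRoleBinding cluster-admin predefined by kube-apiserver binds the Group system:masters to the ClusterRole cluster-admin, which grants permission to call all kube-apiserver APIs;

- The generated kubeconfig is saved to the ~/.kube/config file;

Note: the ~/.kube/config file holds the highest privileges on this cluster; keep it safe.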

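Processes on the Node machines, such as kubelet and kube-proxy, must be authenticated and authorized when they communicate with the kube-apiserver process on the Master.

The following steps only need to be run on the master node; the generated *.kubeconfig files can be copied directly into /etc/kubernetes on the nodes.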

Create the TLS Bootstrapping token

Token auth file

The token can be any string containing 128 bits of randomness; generate it with a secure random number generator.

export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

cat > token.csv <<EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF

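The last three lines form a single command (a heredoc); just copy and run the script above as is.

Note: before continuing, check the token.csv file and confirm that ${BOOTSTRAP_TOKEN} has been replaced by the actual value.

BOOTSTRAP_TOKEN is written both to the token.csv file used by kube-apiserver and to the bootstrap.kubeconfig file used by kubelet. If you regenerate BOOTSTRAP_TOKEN later, you must:

- update token.csv and distribute it to /etc/kubernetes/ on all machines (master and nodes); distributing it to the nodes is optional;

- regenerate bootstrap.kubeconfig and distribute it to /etc/kubernetes/ on all nodes;

- restart the kube-apiserver and kubelet processes;

- approve the kubelet CSR requests again;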

cp token.csv /etc/kubernetes/
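The token.csv check requested above can be scripted. A minimal sketch: check_token_csv is a hypothetical helper that verifies the first field is the 32 hex characters produced by head -c 16 /dev/urandom | od -An -t x | tr -d ' ', and that the remaining fields are exactly the ones written by the heredoc, so an unexpanded ${BOOTSTRAP_TOKEN} is caught.

```shell
# Sketch: validate a token.csv line of the form
#   <32 hex chars>,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
# check_token_csv is a hypothetical helper; it returns non-zero on any mismatch.
check_token_csv() {
  local file=${1:-/etc/kubernetes/token.csv} token rest
  # With IFS=",", read puts the first field in token and the remainder in rest.
  IFS=, read -r token rest < "$file"
  if [[ ! $token =~ ^[0-9a-f]{32}$ ]]; then
    echo "token is not 32 hex characters (unexpanded variable?): $token" >&2
    return 1
  fi
  if [[ $rest != 'kubelet-bootstrap,10001,"system:kubelet-bootstrap"' ]]; then
    echo "unexpected user/uid/group fields: $rest" >&2
    return 1
  fi
}

# Usage:
#   check_token_csv /etc/kubernetes/token.csv && echo "token.csv looks good"
```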

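Create the kubelet bootstrapping kubeconfig file

kubectl must be installed before running the commands below. Run them in the same shell session in which BOOTSTRAP_TOKEN was exported; otherwise --token will be empty.

# Before running them, you can optionally install kubectl bash completion.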

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

# Change into /etc/kubernetes, where the file will be generated.

cd /etc/kubernetes

export KUBE_APISERVER="https://172.16.4.12:6443"

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=bootstrap.kubeconfig

# Set the client authentication parameters

kubectl config set-credentials kubelet-bootstrap \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=bootstrap.kubeconfig

# Set the context parameters

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

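- When --embed-certs is true, the certificate-authority certificate is written into the generated bootstrap.kubeconfig file;

- No key or certificate is specified in the client authentication parameters; they are issued later through the TLS bootstrapping CSR flow, signed with the --cluster-signing-* files of kube-controller-manager;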

Create the kube-proxy kubeconfig file

export KUBE_APISERVER="https://172.16.4.12:6443"

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

# Set the client authentication parameters

kubectl config set-credentials kube-proxy \

--client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \

--client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

# Set the context parameters

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

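- --embed-certs is true for both the cluster and the client authentication parameters, so the contents of the files referenced by certificate-authority, client-certificate and client-key are written into the generated kube-proxy.kubeconfig file;

- The CN of the kube-proxy.pem certificate is system:kube-proxy; the ClusterRoleBinding system:node-proxier predefined by kube-apiserver binds the User system:kube-proxy to the ClusterRole system:node-proxier, which grants permission to call the proxy-related kube-apiserver APIs;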
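Whether --embed-certs took effect can be checked in the generated file: embedded material appears under *-data keys, while file references appear as plain certificate-authority, client-certificate or client-key paths that may not exist on the nodes. A rough sketch that uses grep rather than a YAML parser, so it assumes the layout written by kubectl config; the function name is hypothetical. It applies to kube-proxy.kubeconfig, since bootstrap.kubeconfig authenticates with a token and has no client certificate.

```shell
# Sketch: check that a kubeconfig carries its CA, client certificate and client key
# inline (as written with --embed-certs=true) instead of pointing at local files.
# kubeconfig_embeds_certs is a hypothetical helper; it greps the YAML line by line.
kubeconfig_embeds_certs() {
  local f=$1 key
  for key in certificate-authority-data client-certificate-data client-key-data; do
    if ! grep -q "^[[:space:]]*${key}:" "$f"; then
      echo "$f: missing $key" >&2
      return 1
    fi
  done
  # Path-style keys mean the file depends on certificates stored on this host.
  if grep -Eq '^[[:space:]]*(certificate-authority|client-certificate|client-key):' "$f"; then
    echo "$f: references certificate files by path" >&2
    return 1
  fi
}

# Usage:
#   kubeconfig_embeds_certs /etc/kubernetes/kube-proxy.kubeconfig && echo ok
```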

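Distribute the kubeconfig files

Distribute the two kubeconfig files to /etc/kubernetes/ on every Node machine:

# First set up passwordless SSH to the other nodes. Generate an SSH key pair by pressing Enter three times.

ssh-keygen

# Check the generated key pair

ls /root/.ssh/

id_rsa id_rsa.pub

# Copy the public key to node1 and node2

ssh-copy-id root@172.16.4.13

# Enter the node user's password when prompted. Add node2 the same way.

# Copy the kubeconfig files to /etc/kubernetes on the nodes; create that directory on each node beforehand.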

scp bootstrap.kubeconfig kube-proxy.kubeconfig root@172.16.4.13:/etc/kubernetes

scp bootstrap.kubeconfig kube-proxy.kubeconfig root@172.16.4.14:/etc/kubernetes
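The scp commands above (and the ones in the etcd section later) fail if the target directory does not exist on the node. A small loop can create the directory and copy the files in one go. This is a sketch, not part of the original procedure: NODES, DRY_RUN, run and push_to_nodes are hypothetical names, it relies on the passwordless SSH set up above, and DRY_RUN=1 only prints the commands.

```shell
# Sketch: copy files to every node, creating the target directory first.
# NODES, DRY_RUN, run and push_to_nodes are hypothetical names for this example.
NODES=${NODES:-"172.16.4.13 172.16.4.14"}

# Execute a command, or just print it when DRY_RUN=1.
run() {
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

# push_to_nodes <remote-dir> <file>...
push_to_nodes() {
  local dir=$1 node
  shift
  for node in $NODES; do   # unquoted on purpose: NODES is a space-separated list
    run ssh "root@$node" mkdir -p "$dir"
    run scp "$@" "root@$node:$dir/"
  done
}

# Usage:
#   push_to_nodes /etc/kubernetes bootstrap.kubeconfig kube-proxy.kubeconfig
#   push_to_nodes /etc/kubernetes/ssl /etc/kubernetes/ssl/*.pem
```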

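Create the etcd HA cluster

The etcd service is the primary database of the Kubernetes cluster and must be installed and started before the Kubernetes services. Kubernetes stores all of its data in etcd. This document describes how to deploy a three-node, highly available etcd cluster; the three members reuse the Kubernetes machines k8s-master, k8s-node1 and k8s-node2:

| Role | IP |
|---|---|
| k8s-master | 172.16.4.12 |
| k8s-node1 | 172.16.4.13 |
| k8s-node2 | 172.16.4.14 |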

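TLS certificate files

The etcd cluster needs TLS certificates for encrypted communication; the kubernetes certificate created earlier is reused here:

# Copy ca.pem, server-key.pem and server.pem from /root/ssl to /etc/kubernetes/ssl

cp ca.pem server-key.pem server.pem /etc/kubernetes/ssl

- The hosts field of the kubernetes certificate must include the IPs of the three machines above, otherwise certificate verification will fail later;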

Download the binaries

Binary downloads: the latest release at the time of writing was etcd-v3.3.13; you can download the latest binaries from https://github.com/coreos/etcd/releases.

wget https://github.com/etcd-io/etcd/releases/download/v3.3.13/etcd-v3.3.13-linux-amd64.tar.gz

tar zxvf etcd-v3.3.13-linux-amd64.tar.gz

mv etcd-v3.3.13-linux-amd64/etcd* /usr/local/bin

Or install it directly with yum:

yum install etcd

Note: when installed with yum, the etcd binary is placed in /usr/bin; in that case change the start command in the etcd.service file below to /usr/bin/etcd.

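Create the etcd data directory

mkdir -p /var/lib/etcd/default.etcd

Create the etcd systemd unit file

Create the file etcd.service in /usr/lib/systemd/system/ with the following content. Replace the IP addresses with those of your own etcd cluster hosts.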

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

Documentation=https://github.com/coreos

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd

EnvironmentFile=-/etc/etcd/etcd.conf

ExecStart=/usr/local/bin/etcd \

--name ${ETCD_NAME} \

--cert-file=/etc/kubernetes/ssl/server.pem \

--key-file=/etc/kubernetes/ssl/server-key.pem \

--peer-cert-file=/etc/kubernetes/ssl/server.pem \

--peer-key-file=/etc/kubernetes/ssl/server-key.pem \

--trusted-ca-file=/etc/kubernetes/ssl/ca.pem \

--peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \

--initial-advertise-peer-urls ${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster etcd-master=https://172.16.4.12:2380,etcd-node1=https://172.16.4.13:2380,etcd-node2=https://172.16.4.14:2380 \

--initial-cluster-state=new \

--data-dir=${ETCD_DATA_DIR}

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

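- etcd's working directory is /var/lib/etcd and its data directory is set by ETCD_DATA_DIR (/var/lib/etcd/default.etcd here). Create these directories before starting the service, otherwise startup fails with "Failed at step CHDIR spawning /usr/bin/etcd: No such file or directory";

- For secure communication, specify etcd's own key pair (cert-file and key-file), the key pair and CA certificate for peer communication (peer-cert-file, peer-key-file, peer-trusted-ca-file), and the CA certificate for clients (trusted-ca-file);

- The hosts field of the server-csr.json file used to create server.pem must contain the IPs of all etcd members, otherwise certificate verification fails;

- When --initial-cluster-state is new, the value of --name must appear in the --initial-cluster list;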

Create the etcd environment file /etc/etcd/etcd.conf

mkdir -p /etc/etcd

touch /etc/etcd/etcd.conf

Write the following content into it:

# [member]

ETCD_NAME=etcd-master

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.16.4.12:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.16.4.12:2379"

#[cluster]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.16.4.12:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_ADVERTISE_CLIENT_URLS="https://172.16.4.12:2379"

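This is the configuration for the 172.16.4.12 node. For the other two etcd members, change the IP addresses to the node's own IP and set ETCD_NAME to etcd-node1 or etcd-node2 respectively; these names must match the ones listed in --initial-cluster in etcd.service.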
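Since the three etcd.conf files differ only in ETCD_NAME and the IP, they can be rendered from one template. The helper below is a hypothetical sketch derived from the file above. It keeps ETCD_DATA_DIR at /var/lib/etcd/default.etcd on every member (matching the mkdir step earlier), and the name passed in must be one of etcd-master, etcd-node1 or etcd-node2 from --initial-cluster in etcd.service.

```shell
# Sketch: render /etc/etcd/etcd.conf for one etcd member.
# write_etcd_conf is a hypothetical helper: $1 = member name, $2 = node IP, $3 = output file.
write_etcd_conf() {
  local name=$1 ip=$2 out=${3:-/etc/etcd/etcd.conf}
  cat > "$out" <<EOF
# [member]
ETCD_NAME=${name}
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
EOF
}

# Usage (run on each node with its own name and IP):
#   write_etcd_conf etcd-node1 172.16.4.13
```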

Deploy etcd on the nodes

# 1. Copy the TLS files from the master to each node. Create /etc/kubernetes/ssl on each node beforehand.

scp /etc/kubernetes/ssl/*.pem root@172.16.4.13:/etc/kubernetes/ssl/

scp /etc/kubernetes/ssl/*.pem root@172.16.4.14:/etc/kubernetes/ssl/

# 2. Copy the etcd and etcdctl binaries from the master directly to each node.

scp /usr/local/bin/etcd* root@172.16.4.13:/usr/local/bin/

scp /usr/local/bin/etcd* root@172.16.4.14:/usr/local/bin/

# 3. Copy the etcd config file to each node. Create /etc/etcd on each node beforehand.

scp /etc/etcd/etcd.conf root@172.16.4.13:/etc/etcd/

scp /etc/etcd/etcd.conf root@172.16.4.14:/etc/etcd/

# 4. Edit the corresponding parameters in /etc/etcd/etcd.conf. Using k8s-node1 (IP 172.16.4.13) as an example:

# [member]

ETCD_NAME=etcd-node1

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.16.4.13:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.16.4.13:2379"

#[cluster]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.16.4.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_ADVERTISE_CLIENT_URLS="https://172.16.4.13:2379"

# In short, change ETCD_NAME and the IPs to the node's own values. Edit node2's config file the same way.

# 5. Copy the etcd service unit /usr/lib/systemd/system/etcd.service to each node.

scp /usr/lib/systemd/system/etcd.service root@172.16.4.13:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/etcd.service root@172.16.4.14:/usr/lib/systemd/system/

Start the service

systemctl daemon-reload

systemctl enable etcd

systemctl start etcd

systemctl status etcd

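Repeat the steps above on all three etcd members (k8s-master, k8s-node1 and k8s-node2), creating /var/lib/etcd/default.etcd on each of them first, until etcd is running on every machine. Because the unit uses Type=notify, systemctl start on the first member may appear to hang until enough other members are up to form a quorum.

Note: if the logs show connection errors, make sure ports 2379 and 2380 are open in the firewall on all nodes. On CentOS 7, for example: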

firewall-cmd --zone=public --add-port=2380/tcp --permanent

firewall-cmd --zone=public --add-port=2379/tcp --permanent

firewall-cmd --reload

Verify the service

Run the following command on any master machine (here, k8s-master):

[root@k8s-master ~]# etcdctl \

> --ca-file=/etc/kubernetes/ssl/ca.pem \

> --cert-file=/etc/kubernetes/ssl/server.pem \

> --key-file=/etc/kubernetes/ssl/server-key.pem \

> cluster-health

member 287080ba42f94faf is healthy: got healthy result from https://172.16.4.13:2379

member 47e558f4adb3f7b4 is healthy: got healthy result from https://172.16.4.12:2379

member e531bd3c75e44025 is healthy: got healthy result from https://172.16.4.14:2379

cluster is healthy

If the last line of the output is cluster is healthy, the cluster is working properly.
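The cluster-health subcommand used above belongs to etcdctl's v2 API, which is still the default in etcd 3.3. With ETCDCTL_API=3 the equivalent check is endpoint health, whose TLS flags are named --cacert, --cert and --key instead. The helper that builds the comma-separated --endpoints list (the same format kube-apiserver's --etcd-servers expects later) is a hypothetical sketch; the etcdctl invocation is left as a comment because it needs a running cluster.

```shell
# Sketch: turn member IPs into the comma-separated client URL list expected by
# etcdctl --endpoints and kube-apiserver --etcd-servers. Hypothetical helper.
etcd_endpoints() {
  local ip urls=()
  for ip in "$@"; do
    urls+=("https://${ip}:2379")
  done
  # Join with commas inside a subshell so the IFS change does not leak.
  (IFS=,; echo "${urls[*]}")
}

# Usage with the v3 API (requires the running cluster):
#   ETCDCTL_API=3 etcdctl \
#     --endpoints="$(etcd_endpoints 172.16.4.12 172.16.4.13 172.16.4.14)" \
#     --cacert=/etc/kubernetes/ssl/ca.pem \
#     --cert=/etc/kubernetes/ssl/server.pem \
#     --key=/etc/kubernetes/ssl/server-key.pem \
#     endpoint health
```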

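Deploy the Master node

The Kubernetes master node runs the following components:

- kube-apiserver

- kube-scheduler

- kube-controller-manager

For now, these three components need to be deployed on the same machine:

- the functions of kube-scheduler, kube-controller-manager and kube-apiserver are closely related;

- only one kube-scheduler and one kube-controller-manager process can be active at a time; if several are running, a leader is elected among them;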

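TLS certificate files

The following pem certificate files were created in the "Create TLS certificates and keys" step, and token.csv was created in the "Create the kubeconfig files" step. Let's check them again.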

[root@k8s-master ~]# ls /etc/kubernetes/ssl/

admin-key.pem admin.pem ca-key.pem ca.pem kube-proxy-key.pem kube-proxy.pem server-key.pem server.pem

Download the latest binaries

Download the client or server tarball from the https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG.md page.

The server tarball kubernetes-server-linux-amd64.tar.gz already contains the client (kubectl) binary, so there is no need to download kubernetes-client-linux-amd64.tar.gz separately;

wget https://dl.k8s.io/v1.14.3/kubernetes-server-linux-amd64.tar.gz

# If the official site is unreachable, download it from Baidu Cloud instead: link https://pan.baidu.com/s/1G6e981Q48mMVWD9Ho_j-7Q, extraction code uvc1.

tar -xzvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes

tar -xzvf kubernetes-src.tar.gz

Copy the binaries to the target path

[root@k8s-master kubernetes]# cp -r server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kube-proxy,kubelet} /usr/local/bin/

Configure and start kube-apiserver

(1) Create the kube-apiserver service file

Contents of the service file /usr/lib/systemd/system/kube-apiserver.service:

[Unit]

Description=Kubernetes API Service

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

After=etcd.service

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/apiserver

ExecStart=/usr/local/bin/kube-apiserver \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_ETCD_SERVERS \

$KUBE_API_ADDRESS \

$KUBE_API_PORT \

$KUBELET_PORT \

$KUBE_ALLOW_PRIV \

$KUBE_SERVICE_ADDRESSES \

$KUBE_ADMISSION_CONTROL \

$KUBE_API_ARGS

Restart=on-failure

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

(2) Create /etc/kubernetes/config with the following content:

###

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=true"

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://172.16.4.12:8080"

This configuration file is shared by kube-apiserver, kube-controller-manager, kube-scheduler, kubelet and kube-proxy.

Contents of the apiserver configuration file /etc/kubernetes/apiserver:

###

### kubernetes system config

###

### The following values are used to configure the kube-apiserver

###

##

### The address on the local server to listen to.

KUBE_API_ADDRESS="--advertise-address=172.16.4.12 --bind-address=172.16.4.12 --insecure-bind-address=172.16.4.12"

##

### The port on the local server to listen on.

##KUBE_API_PORT="--port=8080"

##

### Port minions listen on

##KUBELET_PORT="--kubelet-port=10250"

##

### Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=https://172.16.4.12:2379,https://172.16.4.13:2379,https://172.16.4.14:2379"

##

### Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.10.10.0/24"

##

### default admission control policies

KUBE_ADMISSION_CONTROL="--admission-control=ServiceAccount,NamespaceLifecycle,NamespaceExists,LimitRanger,ResourceQuota"

##

### Add your own!

KUBE_API_ARGS="--authorization-mode=RBAC \

--runtime-config=rbac.authorization.k8s.io/v1beta1 \

--kubelet-https=true \

--enable-bootstrap-token-auth \

--token-auth-file=/etc/kubernetes/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/etc/kubernetes/ssl/server.pem \

--tls-private-key-file=/etc/kubernetes/ssl/server-key.pem \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/etc/kubernetes/ssl/ca.pem \

--etcd-certfile=/etc/kubernetes/ssl/server.pem \

--etcd-keyfile=/etc/kubernetes/ssl/server-key.pem \

--enable-swagger-ui=true \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/lib/audit.log \

--event-ttl=1h"

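- If you change the --service-cluster-ip-range address at any point, you must delete the kubernetes service in the default namespace with kubectl delete service kubernetes; the system then recreates it automatically with the new IP. Otherwise the apiserver log reports the error the cluster IP x.x.x.x for service kubernetes/default is not within the service CIDR x.x.x.x/24; please recreate

- --authorization-mode=RBAC enables RBAC authorization on the secure port and rejects requests that are not authorized;

- kube-scheduler and kube-controller-manager are usually deployed on the same machine as kube-apiserver and talk to it over the insecure port;

- kubelet, kube-proxy and kubectl are deployed on the other Node machines; when they access kube-apiserver through the secure port, they must first pass TLS certificate authentication and then RBAC authorization;

- kube-proxy and kubectl pass RBAC authorization by specifying the relevant User and Group in the certificates they use;

- If the kubelet TLS Bootstrap mechanism is used, do not also specify the --kubelet-certificate-authority, --kubelet-client-certificate and --kubelet-client-key options; otherwise kube-apiserver later fails to verify the kubelet certificate with an "x509: certificate signed by unknown authority" error;

- The --admission-control value must include ServiceAccount;

- --bind-address must not be 127.0.0.1;

- runtime-config is set to rbac.authorization.k8s.io/v1beta1, which enables that apiVersion at runtime;

- --service-cluster-ip-range specifies the Service Cluster IP range; this range must not be routable;

- By default, Kubernetes objects are stored under the /registry path in etcd; this can be changed with the --etcd-prefix parameter;

- If you need the unauthenticated HTTP endpoint, add the following two parameters: --insecure-port=8080 --insecure-bind-address=127.0.0.1. Note: in production, never bind it to any address other than 127.0.0.1.

Note: for the complete unit, see kube-apiserver.service; adjust the parameters to suit your cluster.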

(3) Start kube-apiserver

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl start kube-apiserver

systemctl status kube-apiserver

Configure and start kube-controller-manager

(1) Create the kube-controller-manager service file

File path: /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/controller-manager

ExecStart=/usr/local/bin/kube-controller-manager \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_MASTER \

$KUBE_CONTROLLER_MANAGER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

(2) Configuration file /etc/kubernetes/controller-manager

###

# The following values are used to configure the kubernetes controller-manager

# defaults from config and apiserver should be adequate

# Add your own!

KUBE_CONTROLLER_MANAGER_ARGS="--address=127.0.0.1 \

--service-cluster-ip-range=10.10.10.0/24 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \

--root-ca-file=/etc/kubernetes/ssl/ca.pem \

--leader-elect=true"

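- --service-cluster-ip-range specifies the CIDR range of Services in the cluster; this network must not be routable between Nodes and must match the value given to kube-apiserver;

- The certificate and private key specified by --cluster-signing-* are used to sign the certificates and private keys created for TLS Bootstrap;

- --root-ca-file is used to verify the kube-apiserver certificate; only when this parameter is set is the CA certificate file placed into the ServiceAccount of Pod containers;

- --address must be 127.0.0.1, because kube-apiserver expects the scheduler and controller-manager to run on the same machine;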
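Because the two components read --service-cluster-ip-range from different files, a typo in one of them is easy to miss. A grep-based sketch that prints the shared CIDR or reports a mismatch; cidr_of and check_service_cidr are hypothetical helper names, and the sketch assumes the flag is written as --service-cluster-ip-range=VALUE, as in the two files above.

```shell
# Sketch: confirm kube-apiserver and kube-controller-manager use the same
# --service-cluster-ip-range. cidr_of and check_service_cidr are hypothetical helpers.
cidr_of() {
  # Print the first value of the flag in file $1 (stops at a space, quote or backslash).
  grep -o -- '--service-cluster-ip-range=[^ "\\]*' "$1" | head -n 1 | cut -d= -f2
}

check_service_cidr() {
  local api=${1:-/etc/kubernetes/apiserver}
  local cm=${2:-/etc/kubernetes/controller-manager}
  local a c
  a=$(cidr_of "$api")
  c=$(cidr_of "$cm")
  if [[ -z $a || $a != "$c" ]]; then
    echo "mismatch: apiserver=${a:-<unset>} controller-manager=${c:-<unset>}" >&2
    return 1
  fi
  echo "$a"
}

# Usage:
#   check_service_cidr   # prints 10.10.10.0/24 with the files from this guide
```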

(3) Start kube-controller-manager

systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl start kube-controller-manager

systemctl status kube-controller-manager

After starting each component, run `kubectl get cs` to check the status of all components:

[root@k8s-master ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Unhealthy Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: connect: connection refused

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

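- The scheduler shows as Unhealthy here only because kube-scheduler has not been started yet (nothing is listening on 127.0.0.1:10251). If a component still reports unhealthy after it is running, see: https://github.com/kubernetes-incubator/bootkube/issues/64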
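With many components it can help to list only the unhealthy ones. A small sketch that filters `kubectl get cs` output by its STATUS column, assuming the column layout shown above; to stay runnable without a cluster it reads a copy of that sample instead of calling kubectl, and the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Print the NAME of every component whose STATUS column is not "Healthy".
# Reads `kubectl get cs` output on stdin and skips the header row.
unhealthy_components() {
  awk 'NR > 1 && $2 != "Healthy" { print $1 }'
}

# Sample copied from the output above. On a live cluster you would run:
#   kubectl get cs | unhealthy_components
sample='NAME STATUS MESSAGE ERROR
scheduler Unhealthy Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: connect: connection refused
controller-manager Healthy ok
etcd-0 Healthy {"health":"true"}'

printf '%s\n' "$sample" | unhealthy_components
```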

Configure and start kube-scheduler

(1) Create the kube-scheduler service unit file

File path: /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler Plugin

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/scheduler

ExecStart=/usr/local/bin/kube-scheduler \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_MASTER \

$KUBE_SCHEDULER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

(2) Configuration file: /etc/kubernetes/scheduler

###

# kubernetes scheduler config

# default config should be adequate

# Add your own!

KUBE_SCHEDULER_ARGS="--leader-elect=true --address=127.0.0.1"

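- `--address` must be `127.0.0.1`, because kube-apiserver currently expects the scheduler and controller-manager to run on the same machine.

Note: see kube-scheduler.service for the complete unit, and add parameters as your cluster requires.

(3) Start kube-scheduler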

systemctl daemon-reload

systemctl enable kube-scheduler

systemctl start kube-scheduler

systemctl status kube-scheduler

Verify the master node

[root@k8s-master ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

# The ERROR column no longer reports any errors.

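Install the flannel network plugin

Every node needs a network plugin so that all Pods join the same network; this section covers installing flannel.

You can install flanneld directly with yum unless you need a particular version; yum installs flannel 0.7.1 by default.

(1) Install flannel

# Check which flannel versions yum offers: only 0.7.1 is listed below. I recommend installing a newer release from the binary tarball instead.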

[root@k8s-master ~]# yum list flannel --showduplicates | sort -r

* updates: mirror.lzu.edu.cn

Loading mirror speeds from cached hostfile

Loaded plugins: fastestmirror

flannel.x86_64 0.7.1-4.el7 extras

* extras: mirror.lzu.edu.cn

* base: mirror.lzu.edu.cn

Available Packages

[root@k8s-master ~]# wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz

# Extract the archive; it contains two executables, flanneld and mk-docker-opts.sh.

[root@k8s-master ~]# tar zxvf flannel-v0.11.0-linux-amd64.tar.gz

flanneld

mk-docker-opts.sh

README.md

# Copy both executables to node1 and node2

[root@k8s-master ~]# scp flanneld root@172.16.4.13:/usr/bin/

flanneld 100% 34MB 62.9MB/s 00:00

[root@k8s-master ~]# scp flanneld root@172.16.4.14:/usr/bin/

flanneld 100% 34MB 121.0MB/s 00:00

[root@k8s-master ~]# scp mk-docker-opts.sh root@172.16.4.13:/usr/libexec/flannel

mk-docker-opts.sh 100% 2139 1.2MB/s 00:00

[root@k8s-master ~]# scp mk-docker-opts.sh root@172.16.4.14:/usr/libexec/flannel

mk-docker-opts.sh 100% 2139 1.1MB/s 00:00

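- Make sure the target directories (/usr/bin and /usr/libexec/flannel) already exist on each node before copying; otherwise scp writes mk-docker-opts.sh to a file named /usr/libexec/flannel instead of into that directory.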
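The copy steps above can be wrapped in a loop that creates the directories first. A sketch assuming root SSH access to the nodes; `NODE_LIST` and `DRY_RUN` are hypothetical variables of this sketch, and by default it only prints the commands:

```bash
#!/usr/bin/env bash
# Sketch: create the target directory on each node, then copy the flannel
# binaries. With DRY_RUN=1 (the default) the commands are only printed.
set -euo pipefail

read -r -a nodes <<< "${NODE_LIST:-172.16.4.13 172.16.4.14}"

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [[ "${DRY_RUN:-1}" == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

distribute_flannel() {
  local node
  for node in "${nodes[@]}"; do
    run ssh "root@$node" mkdir -p /usr/libexec/flannel
    run scp flanneld "root@$node:/usr/bin/"
    # The trailing slash makes scp fail instead of creating a file if the
    # directory is missing.
    run scp mk-docker-opts.sh "root@$node:/usr/libexec/flannel/"
  done
}

distribute_flannel
```

Run it with `DRY_RUN=0` from the directory containing the extracted binaries to actually copy them.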

(2) The /etc/sysconfig/flanneld configuration file:

# Flanneld configuration options

# etcd url location. Point this to the server where etcd runs

FLANNEL_ETCD_ENDPOINTS="https://172.16.4.12:2379,https://172.16.4.13:2379,https://172.16.4.14:2379"

# etcd config key. This is the configuration key that flannel queries

# For address range assignment

FLANNEL_ETCD_PREFIX="/kube-centos/network"

# Any additional options that you want to pass

FLANNEL_OPTIONS="-etcd-cafile=/etc/kubernetes/ssl/ca.pem -etcd-certfile=/etc/kubernetes/ssl/server.pem -etcd-keyfile=/etc/kubernetes/ssl/server-key.pem"

(3) Create the service unit file /usr/lib/systemd/system/flanneld.service.

[Unit]

Description=Flanneld overlay address etcd agent

After=network.target

After=network-online.target

Wants=network-online.target

After=etcd.service

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/etc/sysconfig/flanneld

EnvironmentFile=-/etc/sysconfig/docker-network

ExecStart=/usr/bin/flanneld --ip-masq \

-etcd-endpoints=${FLANNEL_ETCD_ENDPOINTS} \

-etcd-prefix=${FLANNEL_ETCD_PREFIX} \

$FLANNEL_OPTIONS

ExecStartPost=/usr/libexec/flannel/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker

Restart=on-failure

[Install]

WantedBy=multi-user.target

RequiredBy=docker.service

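- Note: on hosts with multiple NICs (e.g. a Vagrant environment), add the interface that carries external traffic to FLANNEL_OPTIONS, for example `-iface=eth1`.

(4) Create the network configuration in etcd

Run the following commands to define the IP address range from which flannel allocates a Docker subnet to each host.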

etcdctl --endpoints=https://172.16.4.12:2379,https://172.16.4.13:2379,https://172.16.4.14:2379 \

--ca-file=/etc/kubernetes/ssl/ca.pem \

--cert-file=/etc/kubernetes/ssl/server.pem \

--key-file=/etc/kubernetes/ssl/server-key.pem \

mkdir /kube-centos/network

[root@k8s-master network]# etcdctl --endpoints=https://172.16.4.12:2379,https://172.16.4.13:2379,https://172.16.4.14:2379 --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/server.pem --key-file=/etc/kubernetes/ssl/server-key.pem mk /kube-centos/network/config '{"Network":"172.30.0.0/16","SubnetLen":24,"Backend":{"Type":"vxlan"}}'

[root@k8s-master network]# etcdctl --endpoints=https://172.16.4.12:2379,https://172.16.4.13:2379,https://172.16.4.14:2379 --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/server.pem --key-file=/etc/kubernetes/ssl/server-key.pem set /kube-centos/network/config '{"Network":"172.30.0.0/16","SubnetLen":24,"Backend":{"Type":"vxlan"}}'

{"Network":"172.30.0.0/16","SubnetLen":24,"Backend":{"Type":"vxlan"}}
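The value stored under /kube-centos/network/config is plain JSON with the `Network`, `SubnetLen` and `Backend` keys used above. A sketch that builds it from shell variables so the Pod network is defined in one place; the variable and function names are assumptions, and the etcdctl call is left as a comment because it needs the etcd certificates:

```bash
#!/usr/bin/env bash
# Build the flannel network config JSON from variables (names are illustrative).
FLANNEL_NETWORK=${FLANNEL_NETWORK:-172.30.0.0/16}
FLANNEL_SUBNET_LEN=${FLANNEL_SUBNET_LEN:-24}
FLANNEL_BACKEND=${FLANNEL_BACKEND:-vxlan}

flannel_config() {
  printf '{"Network":"%s","SubnetLen":%d,"Backend":{"Type":"%s"}}\n' \
    "$FLANNEL_NETWORK" "$FLANNEL_SUBNET_LEN" "$FLANNEL_BACKEND"
}

# Number of /SubnetLen blocks that fit in the Pod network.
subnet_count() {
  local prefix=${FLANNEL_NETWORK#*/}
  echo $(( 1 << (FLANNEL_SUBNET_LEN - prefix) ))
}

flannel_config
echo "per-host /$FLANNEL_SUBNET_LEN blocks in $FLANNEL_NETWORK: $(subnet_count)"

# On the master (etcd v2 API, as used above):
#   etcdctl --endpoints=... --ca-file=... --cert-file=... --key-file=... \
#     set /kube-centos/network/config "$(flannel_config)"
```

With a /16 network and `SubnetLen` 24 there are 256 /24 blocks, and each flanneld instance leases one of them.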

(5) Start flannel

systemctl daemon-reload

systemctl enable flanneld

systemctl start flanneld

systemctl status flanneld

Querying etcd now shows the subnet allocated to each host:

[root@k8s-master ~]# etcdctl --endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/ssl/ca.pem \

--cert-file=/etc/kubernetes/ssl/server.pem \

--key-file=/etc/kubernetes/ssl/server-key.pem \

ls /kube-centos/network/subnets

/kube-centos/network/subnets/172.30.20.0-24

/kube-centos/network/subnets/172.30.69.0-24

/kube-centos/network/subnets/172.30.53.0-24

[root@k8s-master ~]# etcdctl --endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/ssl/ca.pem \

--cert-file=/etc/kubernetes/ssl/server.pem \

--key-file=/etc/kubernetes/ssl/server-key.pem \

get /kube-centos/network/config

{"Network":"172.30.0.0/16","SubnetLen":24,"Backend":{"Type":"vxlan"}}

[root@k8s-master ~]# etcdctl --endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/ssl/ca.pem \

--cert-file=/etc/kubernetes/ssl/server.pem \

--key-file=/etc/kubernetes/ssl/server-key.pem \

get /kube-centos/network/subnets/172.30.20.0-24

{"PublicIP":"172.16.4.13","BackendType":"vxlan","BackendData":{"VtepMAC":"5e:ef:ff:37:0a:d2"}}

[root@k8s-master ~]# etcdctl --endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/ssl/ca.pem \

--cert-file=/etc/kubernetes/ssl/server.pem \

--key-file=/etc/kubernetes/ssl/server-key.pem \

get /kube-centos/network/subnets/172.30.53.0-24

{"PublicIP":"172.16.4.12","BackendType":"vxlan","BackendData":{"VtepMAC":"e2:e6:b9:23:79:a2"}}

[root@k8s-master ~]# etcdctl --endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/ssl/ca.pem \

--cert-file=/etc/kubernetes/ssl/server.pem \

--key-file=/etc/kubernetes/ssl/server-key.pem \

get /kube-centos/network/subnets/172.30.69.0-24

{"PublicIP":"172.16.4.14","BackendType":"vxlan","BackendData":{"VtepMAC":"06:0e:58:69:a0:41"}}
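The subnet keys encode each host's lease as `<network>-<prefix length>`, and each key's value records the host's `PublicIP` (for example, 172.30.20.0/24 belongs to 172.16.4.13 above). A sketch that turns the keys into CIDR notation; it reads key names on stdin, and the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Convert flannel subnet keys, one per line as printed by
# `etcdctl ls /kube-centos/network/subnets`, into CIDR notation.
key_to_cidr() {
  local key name
  while IFS= read -r key; do
    [[ -n "$key" ]] || continue           # skip blank lines
    name=${key##*/}                       # e.g. 172.30.20.0-24
    echo "${name%-*}/${name##*-}"         # e.g. 172.30.20.0/24
  done
}

# Sample keys from the listing above; on the master, pipe the etcdctl ls
# output (with the same TLS flags) into key_to_cidr instead.
printf '%s\n' \
  /kube-centos/network/subnets/172.30.20.0-24 \
  /kube-centos/network/subnets/172.30.69.0-24 \
  /kube-centos/network/subnets/172.30.53.0-24 | key_to_cidr
```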

Other details can be checked as well:

# 1. For example, the flannel network interface:

[root@k8s-master ~]# ifconfig

.......

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.30.53.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::e0e6:b9ff:fe23:79a2 prefixlen 64 scopeid 0x20<link>

ether e2:e6:b9:23:79:a2 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0

.......

# 2. The file containing the subnet allocated to this host:

[root@k8s-master ~]# cat /run/flannel/docker

DOCKER_OPT_BIP="--bip=172.30.53.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

DOCKER_NETWORK_OPTIONS=" --bip=172.30.53.1/24 --ip-masq=false --mtu=1450"
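/run/flannel/docker is a plain `KEY="value"` file written by mk-docker-opts.sh, which is why docker.service can load it with `EnvironmentFile`. A sketch that sources a copy of the file shown above (written to a temporary directory so it runs anywhere) and extracts the docker0 address; the parsing is illustrative:

```bash
#!/usr/bin/env bash
# Recreate the file shown above in a scratch directory.
tmp=$(mktemp -d)
cat > "$tmp/docker" <<'EOF'
DOCKER_OPT_BIP="--bip=172.30.53.1/24"
DOCKER_OPT_IPMASQ="--ip-masq=false"
DOCKER_OPT_MTU="--mtu=1450"
DOCKER_NETWORK_OPTIONS=" --bip=172.30.53.1/24 --ip-masq=false --mtu=1450"
EOF

# Load the variables the same way a shell script would.
# shellcheck source=/dev/null
source "$tmp/docker"

bip=${DOCKER_OPT_BIP#--bip=}            # 172.30.53.1/24
echo "docker0 address: ${bip%/*}, prefix: /${bip#*/}"
# 1450 leaves room for the 50-byte VXLAN encapsulation on a 1500-byte link.
echo "MTU: ${DOCKER_OPT_MTU#--mtu=}"
echo "dockerd options:${DOCKER_NETWORK_OPTIONS}"

rm -rf "$tmp"
```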

(6) Configure Docker to use flannel

# Modify the ExecStart line in /usr/lib/systemd/system/docker.service to pass in the $DOCKER_NETWORK_OPTIONS variable above; the full docker.service is shown below.

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

# add by gzr

EnvironmentFile=-/run/flannel/docker

EnvironmentFile=-/run/docker_opts.env

EnvironmentFile=-/run/flannel/subnet.env

EnvironmentFile=-/etc/sysconfig/docker

EnvironmentFile=-/etc/sysconfig/docker-storage

EnvironmentFile=-/etc/sysconfig/docker-network

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Uncomment TasksMax if your systemd version supports it.

# Only systemd 226 and above support this version.

#TasksMax=infinity

TimeoutStartSec=0

# set delegate yes so that systemd does not reset the cgroups of docker containers

Delegate=yes

# kill only the docker process, not all processes in the cgroup

KillMode=process

# restart the docker process if it exits prematurely

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

# Restart docker so the configuration takes effect.

[root@k8s-master ~]# systemctl daemon-reload && systemctl restart docker.service

# Check the docker and flannel interfaces again: both are now in the same subnet.

[root@k8s-master ~]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.30.53.1 netmask 255.255.255.0 broadcast 172.30.53.255

ether 02:42:1e:aa:8b:0f txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

......

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.30.53.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::e0e6:b9ff:fe23:79a2 prefixlen 64 scopeid 0x20<link>

ether e2:e6:b9:23:79:a2 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0

.....

# Apply the same change on every other node; node1 is shown as an example.

[root@k8s-node1 ~]# vim /usr/lib/systemd/system/docker.service

[root@k8s-node1 ~]# systemctl daemon-reload && systemctl restart docker

[root@k8s-node1 ~]# ifconfig

docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.30.20.1 netmask 255.255.255.0 broadcast 172.30.20.255

inet6 fe80::42:23ff:fe7f:6a70 prefixlen 64 scopeid 0x20<link>

ether 02:42:23:7f:6a:70 txqueuelen 0 (Ethernet)

RX packets 18 bytes 2244 (2.1 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 48 bytes 3469 (3.3 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

......

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.30.20.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::5cef:ffff:fe37:ad2 prefixlen 64 scopeid 0x20<link>

ether 5e:ef:ff:37:0a:d2 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0

......

veth82301fa: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet6 fe80::6855:cfff:fe99:5143 prefixlen 64 scopeid 0x20<link>

ether 6a:55:cf:99:51:43 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 7 bytes 586 (586.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
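Editing the packaged /usr/lib/systemd/system/docker.service works, but a Docker package upgrade may overwrite it. A systemd drop-in achieves the same `ExecStart` change in a separate file; this is a swapped-in technique, not what the steps above do, and the file name flannel.conf is arbitrary. The function takes the target directory as an argument (a temporary one by default) so it can be tried safely:

```bash
#!/usr/bin/env bash
# Write a systemd drop-in that makes dockerd use the flannel-provided options.
# The empty "ExecStart=" clears the ExecStart inherited from the vendor unit;
# systemd requires this before a non-oneshot service can define a new one.
write_docker_dropin() {
  local dir=${1:-$(mktemp -d)}/docker.service.d
  mkdir -p "$dir"
  cat > "$dir/flannel.conf" <<'EOF'
[Service]
EnvironmentFile=-/run/flannel/docker
ExecStart=
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS
EOF
  echo "$dir/flannel.conf"
}

conf=$(write_docker_dropin)
cat "$conf"

# On a real host:
#   write_docker_dropin /etc/systemd/system
#   systemctl daemon-reload && systemctl restart docker
```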

Deploy the worker nodes

# Distribute the flanneld.service file from the master to each node.

scp /usr/lib/systemd/system/flanneld.service root@172.16.4.13:/usr/lib/systemd/system

scp /usr/lib/systemd/system/flanneld.service root@172.16.4.14:/usr/lib/systemd/system

# Then reload systemd and restart flanneld on each node

systemctl daemon-reload

systemctl enable flanneld

systemctl start flanneld

systemctl status flanneld

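Configure Docker

However you installed flannel, adding the following lines to the [Service] section of /usr/lib/systemd/system/docker.service ensures that Docker picks up the flannel settings.

# Lines to add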

EnvironmentFile=-/run/flannel/docker

EnvironmentFile=-/run/docker_opts.env

EnvironmentFile=-/run/flannel/subnet.env

EnvironmentFile=-/etc/sysconfig/docker

EnvironmentFile=-/etc/sysconfig/docker-storage

EnvironmentFile=-/etc/sysconfig/docker-network

# The complete docker.service file is as follows

[root@k8s-master ~]# cat /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

# add by gzr

EnvironmentFile=-/run/flannel/docker

EnvironmentFile=-/run/docker_opts.env

EnvironmentFile=-/run/flannel/subnet.env

EnvironmentFile=-/etc/sysconfig/docker

EnvironmentFile=-/etc/sysconfig/docker-storage

EnvironmentFile=-/etc/sysconfig/docker-network

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Uncomment TasksMax if your systemd version supports it.

# Only systemd 226 and above support this version.

#TasksMax=infinity

TimeoutStartSec=0

# set delegate yes so that systemd does not reset the cgroups of docker containers

Delegate=yes

# kill only the docker process, not all processes in the cgroup

KillMode=process

# restart the docker process if it exits prematurely

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

(2) Start docker

systemctl daemon-reload && systemctl restart docker

Install and configure kubelet

(1) Check that swap is disabled

[root@k8s-master ~]# free

total used free shared buff/cache available

Mem: 32753848 730892 27176072 377880 4846884 31116660

Swap: 0 0 0

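- If swap is still enabled, turn it off with `swapoff -a` and comment out the swap entry in the /etc/fstab file so that it stays off after a reboot.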
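Checking swap can be scripted: /proc/swaps lists active swap areas after a header line, and the fstab entry to disable is the one whose filesystem type field is `swap`. A sketch with hypothetical function names, demonstrated on a scratch fstab (the sample entries are illustrative):

```bash
#!/usr/bin/env bash
# /proc/swaps has one header line; every further line is an active swap area.
swap_active() {
  [[ -r /proc/swaps ]] && (( $(wc -l < /proc/swaps) > 1 ))
}

# Prefix '#' to every non-comment line whose third field (fs type) is "swap".
comment_swap_entries() {
  local file=$1 tmp
  tmp=$(mktemp)
  awk '!/^[ \t]*#/ && $3 == "swap" { $0 = "#" $0 } { print }' "$file" > "$tmp"
  cat "$tmp" > "$file"   # rewrite in place, keeping owner and permissions
  rm -f "$tmp"
}

if swap_active; then echo "swap is on: run swapoff -a"; else echo "swap is off"; fi

# Demo on a scratch copy. On a real host:
#   swapoff -a && comment_swap_entries /etc/fstab
fstab=$(mktemp)
cat > "$fstab" <<'EOF'
/dev/mapper/centos-root /    xfs  defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0
EOF
comment_swap_entries "$fstab"
cat "$fstab"
```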

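When kubelet starts, it sends a TLS bootstrapping request to kube-apiserver. Before that, the kubelet-bootstrap user from the bootstrap token file must be bound to the system:node-bootstrapper cluster role; only then does kubelet have permission to create certificate signing requests (CSRs).

(2) Copy the kubelet binary from /usr/local/bin on the master to each node (kube-proxy is copied in the kube-proxy section)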

[root@k8s-master ~]# scp /usr/local/bin/kubelet root@172.16.4.13:/usr/local/bin/

[root@k8s-master ~]# scp /usr/local/bin/kubelet root@172.16.4.14:/usr/local/bin/

(3) Create the role binding on the master node.

# The binding must be created on the master; afterwards, restart the kubelet service on the nodes.

[root@k8s-master kubernetes]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

(4) Create the kubelet service

Option 1: create and run a script on each node

# The kubelet.sh script below creates both the kubelet configuration file and kubelet.service in one step.

#!/bin/bash

NODE_ADDRESS=${1:-"172.16.4.13"}

DNS_SERVER_IP=${2:-"10.10.10.2"}

cat <<EOF >/etc/kubernetes/kubelet

KUBELET_ARGS="--logtostderr=true \\

--v=4 \\

--address=${NODE_ADDRESS} \\

--hostname-override=${NODE_ADDRESS} \\

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \\

--bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \\


--cert-dir=/etc/kubernetes/ssl \\

--allow-privileged=true \\

--cluster-dns=${DNS_SERVER_IP} \\

--cluster-domain=cluster.local \\

--fail-swap-on=false \\

--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

EOF

cat <<EOF >/usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=-/etc/kubernetes/kubelet

ExecStart=/usr/local/bin/kubelet \$KUBELET_ARGS

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kubelet

systemctl restart kubelet && systemctl status kubelet

2) Run the script

chmod +x kubelet.sh

./kubelet.sh 172.16.4.14 10.10.10.2

# You can also inspect the generated kubelet.service file

[root@k8s-node2 ~]# cat /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=-/etc/kubernetes/kubelet

ExecStart=/usr/local/bin/kubelet $KUBELET_ARGS

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

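- Note: on node1, run kubelet.sh with 172.16.4.13 (node1's IP) and 10.10.10.2 (the DNS server IP); the example above is from node2 (172.16.4.14). When running the script on other nodes, substitute the corresponding arguments.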
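Because kubelet.sh takes the node IP and DNS IP as positional arguments, a typo ends up in every flag it writes. A sketch of an argument check that could sit at the top of the script, assuming dotted-quad IPv4 addresses; `is_ipv4` is a hypothetical helper, not part of the original script:

```bash
#!/usr/bin/env bash
# Return 0 if $1 is a dotted-quad IPv4 address with every octet in 0-255.
is_ipv4() {
  local IFS=. octets o
  [[ $1 =~ ^[0-9]{1,3}(\.[0-9]{1,3}){3}$ ]] || return 1
  read -r -a octets <<< "$1"        # split on "." (IFS is local to the function)
  for o in "${octets[@]}"; do
    (( 10#$o <= 255 )) || return 1  # 10# avoids octal parsing of e.g. "08"
  done
}

NODE_ADDRESS=${1:-"172.16.4.13"}
DNS_SERVER_IP=${2:-"10.10.10.2"}

for ip in "$NODE_ADDRESS" "$DNS_SERVER_IP"; do
  is_ipv4 "$ip" || { echo "invalid IP address: $ip" >&2; exit 1; }
done
echo "node=$NODE_ADDRESS dns=$DNS_SERVER_IP"
```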

Option 2:

1) Create the kubelet configuration file /etc/kubernetes/kubelet with the following content:

###

## kubernetes kubelet (minion) config

#

## The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=172.16.4.12"

#

## The port for the info server to serve on

#KUBELET_PORT="--port=10250"

#

## You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=172.16.4.12"

#

## location of the api-server

## COMMENT THIS ON KUBERNETES 1.8+

#KUBELET_API_SERVER="--api-servers=http://172.16.4.12:8080"

#

## pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=jimmysong/pause-amd64:3.0"

#

## Add your own!

KUBELET_ARGS="--cgroup-driver=systemd \

--cluster-dns=10.10.10.2 \

--bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \


--cert-dir=/etc/kubernetes/ssl \

--cluster-domain=cluster.local \

--hairpin-mode promiscuous-bridge \

--serialize-image-pulls=false"

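- If kubelet is started via systemd, add two more parameters: `--runtime-cgroups=/systemd/system.slice --kubelet-cgroups=/systemd/system.slice`.

- `--address` must not be set to `127.0.0.1`; otherwise Pods fail when they call the kubelet API, because `127.0.0.1` inside a Pod points to the Pod itself rather than to kubelet.

- Set `--cgroup-driver` to `systemd` rather than `cgroupfs`, otherwise kubelet fails to start on CentOS. (What actually matters is that Docker and kubelet use the same cgroup driver; it does not have to be `systemd`.)

- `--bootstrap-kubeconfig` points to the bootstrap kubeconfig file; kubelet uses the username and token in it to send the TLS bootstrapping request to kube-apiserver.

- After the administrator approves the CSR, kubelet automatically creates the client certificate and private key in the `--cert-dir` directory (`kubelet-client.crt` and `kubelet-client.key` in older releases; the `kubelet-client-*.pem` files listed in step (5) in this version) and then writes the `--kubeconfig` file.

- It is recommended to put the kube-apiserver address in the `--kubeconfig` file. In older releases, if `--api-servers` was not set, `--require-kubeconfig` had to be given so that kubelet read the kube-apiserver address from that file; otherwise kubelet could not find kube-apiserver after starting (the log reports that no API server was found), and `kubectl get nodes` would not return the Node. `--require-kubeconfig` was removed in 1.10; see the PR.

- `--cluster-dns` sets the Service IP of kubedns (it can be reserved now and assigned when the kubedns service is created later), and `--cluster-domain` sets the domain suffix; they only take effect when both are set.

- `--cluster-domain` sets the search domain in each Pod's `/etc/resolv.conf`. We originally set it to `cluster.local.`: Service DNS names resolved correctly, but FQDN pod names in a headless service did not. Changing it to `cluster.local` (without the trailing dot) fixed the problem. For more on domain and service name resolution in Kubernetes, see my other article.

- The `kubelet.kubeconfig` file given by `--kubeconfig=/etc/kubernetes/kubelet.kubeconfig` does not exist before kubelet starts for the first time; as described below, it is generated automatically once the CSR is approved. If your node already has a `~/.kube/config` file, you can copy it to that path and rename it `kubelet.kubeconfig`. All nodes can share the same kubelet.kubeconfig file, so newly added nodes join the cluster automatically without creating a CSR. Likewise, on any host that can reach the cluster, `kubectl --kubeconfig` with the `~/.kube/config` file passes authentication, because that file already holds credentials that identify you as the admin user with full access to the cluster.

- `KUBELET_POD_INFRA_CONTAINER` is the infrastructure (pause) container image. I use a private registry address here; change it to your own image when you deploy. You can use Google's pause image `gcr.io/google_containers/pause-amd64:3.0`, which is only just over 300 KB.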
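To make `--cluster-dns` and `--cluster-domain` concrete: for a Pod with the default ClusterFirst DNS policy, kubelet writes a resolv.conf whose nameserver is the cluster DNS IP and whose search list is built from the Pod's namespace and the cluster domain. The sketch prints the expected file for this document's values; it is illustrative only, since kubelet may also append the node's own search domains and the options line can vary by version:

```bash
#!/usr/bin/env bash
# Print the resolv.conf a ClusterFirst Pod is expected to receive (illustrative).
pod_resolv_conf() {
  local namespace=$1 domain=$2 dns_ip=$3
  printf 'nameserver %s\n' "$dns_ip"
  printf 'search %s.svc.%s svc.%s %s\n' "$namespace" "$domain" "$domain" "$domain"
  printf 'options ndots:5\n'
}

# Values from --cluster-dns and --cluster-domain above, for the default namespace.
pod_resolv_conf default cluster.local 10.10.10.2
```

Compare the output with `kubectl exec <pod> -- cat /etc/resolv.conf` on a running Pod.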

2) Create the kubelet service unit file at /usr/lib/systemd/system/kubelet.service.

[Unit]

Description=Kubernetes Kubelet Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/kubelet

ExecStart=/usr/local/bin/kubelet \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBELET_API_SERVER \

$KUBELET_ADDRESS \

$KUBELET_PORT \

$KUBELET_HOSTNAME \

$KUBE_ALLOW_PRIV \

$KUBELET_POD_INFRA_CONTAINER \

$KUBELET_ARGS

Restart=on-failure

[Install]

WantedBy=multi-user.target

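Note: either method creates the kubelet service; I recommend the script, which does everything in one step. With the second method you must create the working directory /var/lib/kubelet manually, because systemd refuses to start a unit whose WorkingDirectory does not exist. This is not shown here.

(5) Approve kubelet's TLS certificate requests

The first time kubelet starts, it sends a certificate signing request to kube-apiserver; only after the request is approved does Kubernetes add the Node to the cluster.

1) On the master node, list the pending CSRs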

[root@k8s-master ~]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-4799pnHJjREEcWDGgSFvNaoyfcn4HiOML9cpEI1IbMs 3h6m kubelet-bootstrap Pending

node-csr-e3mql7Dm878tLhPUxu2pzg8e8eM17Togc6lHQX-mXZs 3h kubelet-bootstrap Pending

2) Approve the CSRs

[root@k8s-master ~]# kubectl certificate approve node-csr-4799pnHJjREEcWDGgSFvNaoyfcn4HiOML9cpEI1IbMs

certificatesigningrequest.certificates.k8s.io/node-csr-4799pnHJjREEcWDGgSFvNaoyfcn4HiOML9cpEI1IbMs approved

[root@k8s-master ~]# kubectl certificate approve node-csr-e3mql7Dm878tLhPUxu2pzg8e8eM17Togc6lHQX-mXZs

certificatesigningrequest.certificates.k8s.io/node-csr-e3mql7Dm878tLhPUxu2pzg8e8eM17Togc6lHQX-mXZs approved

# The CSRs of both nodes are now approved.
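When several nodes bootstrap at once, the pending requests can be selected from `kubectl get csr` by their CONDITION column. A sketch assuming the column layout shown above, run here on a copy of that output; the function name is hypothetical, and you should approve only requests you recognize:

```bash
#!/usr/bin/env bash
# Print the names of CSRs whose CONDITION (last column) is Pending.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

sample='NAME AGE REQUESTOR CONDITION
node-csr-4799pnHJjREEcWDGgSFvNaoyfcn4HiOML9cpEI1IbMs 3h6m kubelet-bootstrap Pending
node-csr-e3mql7Dm878tLhPUxu2pzg8e8eM17Togc6lHQX-mXZs 3h kubelet-bootstrap Pending'

printf '%s\n' "$sample" | pending_csrs

# On the master:
#   kubectl get csr | pending_csrs | xargs -r -n1 kubectl certificate approve
```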

3) The kubelet kubeconfig file and key pair are generated automatically

[root@k8s-node1 ~]# ls -l /etc/kubernetes/kubelet.kubeconfig

-rw------- 1 root root 2294 Jun 14 15:19 /etc/kubernetes/kubelet.kubeconfig

[root@k8s-node1 ~]# ls -l /etc/kubernetes/ssl/kubelet*

-rw------- 1 root root 1273 Jun 14 15:19 /etc/kubernetes/ssl/kubelet-client-2019-06-14-15-19-10.pem

lrwxrwxrwx 1 root root 58 Jun 14 15:19 /etc/kubernetes/ssl/kubelet-client-current.pem -> /etc/kubernetes/ssl/kubelet-client-2019-06-14-15-19-10.pem

-rw-r--r-- 1 root root 2177 Jun 14 11:50 /etc/kubernetes/ssl/kubelet.crt

-rw------- 1 root root 1679 Jun 14 11:50 /etc/kubernetes/ssl/kubelet.key

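If you update the Kubernetes certificates but leave `token.csv` unchanged, the node rejoins the cluster automatically when kubelet restarts: it does not send a new certificate request, and you do not need to run `kubectl certificate approve` on the master. This only works if the `/etc/kubernetes/ssl/kubelet*` and `/etc/kubernetes/kubelet.kubeconfig` files on the node have not been deleted; otherwise kubelet fails at startup because it cannot find its certificates.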

[root@k8s-master ~]# scp /etc/kubernetes/token.csv root@172.16.4.13:/etc/kubernetes/

[root@k8s-master ~]# scp /etc/kubernetes/token.csv root@172.16.4.14:/etc/kubernetes/
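Since the automatic rejoin depends on those files surviving, a quick check before restarting kubelet can avoid a failed start. A sketch assuming this document's paths, with a root prefix argument so it can be tried on a scratch directory; the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Check that the files kubelet needs to rejoin without a new CSR are present.
# $1 is an optional root prefix for testing; leave it empty on a real node.
check_kubelet_files() {
  local root=${1:-} missing=0 f
  for f in "$root/etc/kubernetes/kubelet.kubeconfig" \
           "$root/etc/kubernetes/ssl/kubelet-client-current.pem"; do
    # -e follows symlinks, so a dangling kubelet-client-current.pem counts as missing.
    if [[ ! -e "$f" ]]; then
      echo "missing: $f" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Demo on a scratch tree that lacks the client certificate.
scratch=$(mktemp -d)
mkdir -p "$scratch/etc/kubernetes/ssl"
touch "$scratch/etc/kubernetes/kubelet.kubeconfig"
if check_kubelet_files "$scratch"; then
  echo "ok: kubelet can restart without a new CSR"
else
  echo "kubelet would need a new CSR"
fi
```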

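**Note:** If kubelet reports certificate-related errors at startup, one workaround is to copy the `~/.kube/config` file from the master (generated automatically in the [Install the kubectl command-line tool] step) to `/etc/kubernetes/kubelet.kubeconfig` on the node. No CSR is then needed, and kubelet joins the cluster automatically when it starts. Make sure the content of `.kube/config` replaces the original content of `/etc/kubernetes/kubelet.kubeconfig`.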

[root@k8s-master ~]# cat .kube/config

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUR2akNDQXFhZ0F3SUJBZ0lVZkJtL2lzNG1EcHdqa0M0aVFFTWF5SVJaVHVjd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pURUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbAphV3BwYm1jeEREQUtCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HVTNsemRHVnRNUk13RVFZRFZRUURFd3ByCmRXSmxjbTVsZEdWek1CNFhEVEU1TURZeE1qQXpNRFF3TUZvWERUSTVNRFl3T1RBek1EUXdNRm93WlRFTE1Ba0cKQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbGFXcHBibWN4RERBSwpCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HVTNsemRHVnRNUk13RVFZRFZRUURFd3ByZFdKbGNtNWxkR1Z6Ck1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBOEZQK2p0ZUZseUNPVDc0ZzRmd1UKeDl0bDY3dGVabDVwTDg4ZStESzJMclBJZDRXMDRvVDdiWTdKQVlLT3dPTkM4RjA5MzNqSjVBdmxaZmppTkJCaQp2OTlhYU5tSkdxeWozMkZaaDdhTkYrb3Fab3BYdUdvdmNpcHhYTWlXbzNlVHpWVUh3d2FBeUdmTS9BQnE0WUY0ClprSVV5UkJaK29OVXduY0tNaStOR2p6WVJyc2owZEJRR0ROZUJ6OEgzbCtjd1U1WmpZdEdFUFArMmFhZ1k5bG0KbjhyOUFna2owcW9uOEdQTFlRb2RDYzliSWZqQmVNaGIzaHJGMjJqMDhzWTczNzh3MzN5VWRHdjg1YWpuUlp6UgpIYkN6UytYRGJMTTh2aGh6dVZoQmt5NXNrWXB6M0hCNGkrTnJPR1Fmdm4yWkY0ZFh4UVUyek1Dc2NMSVppdGg0Ckt3SURBUUFCbzJZd1pEQU9CZ05WSFE4QkFmOEVCQU1DQVFZd0VnWURWUjBUQVFIL0JBZ3dCZ0VCL3dJQkFqQWQKQmdOVkhRNEVGZ1FVeTVmVncxK0s2N1dvblRuZVgwL2dFSTVNM3FJd0h3WURWUjBqQkJnd0ZvQVV5NWZWdzErSwo2N1dvblRuZVgwL2dFSTVNM3FJd0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFOb3ZXa1ovd3pEWTZSNDlNNnpDCkhoZlZtVGk2dUZwS24wSmtvMVUzcHA5WTlTTDFMaXVvK3VwUjdJOCsvUXd2Wm95VkFWMTl4Y2hRQ25RSWhRMEgKVWtybXljS0crdWtsSUFUS3ZHenpzNW1aY0NQOGswNnBSSHdvWFhRd0ZhSFBpNnFZWDBtaW10YUc4REdzTk01RwpQeHdZZUZncXBLQU9Tb0psNmw5bXErQnhtWEoyZS8raXJMc3N1amlPKzJsdnpGOU5vU29Yd1RqUGZndXhRU3VFCnZlSS9pTXBGV1o0WnlCYWJKYkw5dXBldm53RTA2RXQrM2g2N3JKOU5mZ2N5MVhNSU0xeGo1QXpzRXgwVE5ETGkKWGlOQ0Zram9zWlA3U3dZdE5ncHNuZmhEandHRUJLbXV1S3BXR280ZWNac2lMQXgwOTNaeTdKM2dqVDF6dGlFUwpzQlE9Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

server: https://172.16.4.12:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: admin

name: kubernetes

current-context: kubernetes

kind: Config

preferences: {}

users:

- name: admin

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUQzVENDQXNXZ0F3SUJBZ0lVVmlPdjZ6aFlHMzIzdWRZS2RFWEcvRVJENW8wd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pURUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbAphV3BwYm1jeEREQUtCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HVTNsemRHVnRNUk13RVFZRFZRUURFd3ByCmRXSmxjbTVsZEdWek1CNFhEVEU1TURZeE1qQTJORGd3TUZvWERUSTVNRFl3T1RBMk5EZ3dNRm93YXpFTE1Ba0cKQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFVcHBibWN4RURBT0JnTlZCQWNUQjBKbGFVcHBibWN4RnpBVgpCZ05WQkFvVERuTjVjM1JsYlRwdFlYTjBaWEp6TVE4d0RRWURWUVFMRXdaVGVYTjBaVzB4RGpBTUJnTlZCQU1UCkJXRmtiV2x1TUlJQklqQU5CZ2txaGtpRzl3MEJBUUVGQUFPQ0FROEFNSUlCQ2dLQ0FRRUFuL29MQVpCcENUdWUKci95eU15a1NYelBpWk9mVFdZQmEwNjR6c2Y1Y1Z0UEt2cnlCSjVHVlVSUlFUc2F3eWdFdnFBSXI3TUJrb21GOQpBeFVNaFNxdlFjNkFYemQzcjRMNW1CWGQxZ3FoWVNNR2lJL3hEMG5RaEF1azBFbVVONWY5ZENZRmNMMTVBVnZSCituN2wwaVcvVzlBRjRqbXRtYUtLVUdsUU9vNzQ3anNCYWRndU9SVHBMSkwxUGw3SlVLZnFBWktEbFVXZnpwZXcKOE1ETVMzN1FodmVQc24va2RwUVZ0bzlJZWcwSFhBcXlmZHNaZjZKeGdaS1FmUUNyYlJEMkd2L29OVVRlYnpWMwpWVm9ueEpUYmFrZFNuOHR0cCtLWFlzTUYvQy8wR29sL1JkS1Mrc0t4Z2hUUWdJMG5CZXJBM0x0dGp6WVpySWJBClo0RXBRNmc0ZFFJREFRQUJvMzh3ZlRBT0JnTlZIUThCQWY4RUJBTUNCYUF3SFFZRFZSMGxCQll3RkFZSUt3WUIKQlFVSEF3RUdDQ3NHQVFVRkJ3TUNNQXdHQTFVZEV3RUIvd1FDTUFBd0hRWURWUjBPQkJZRUZCQThrdnFaVDhRRApaSnIvTUk2L2ZWalpLdVFkTUI4R0ExVWRJd1FZTUJhQUZNdVgxY05maXV1MXFKMDUzbDlQNEJDT1RONmlNQTBHCkNTcUdTSWIzRFFFQkN3VUFBNElCQVFDMnZzVDUwZVFjRGo3RVUwMmZQZU9DYmJ6cFZWazEzM3NteGI1OW83YUgKRDhONFgvc3dHVlYzU0V1bVNMelJYWDJSYUsyUU04OUg5ZDlpRkV2ZzIvbjY3VThZeVlYczN0TG9Ua29NbzlUZgpaM0FNN0NyM0V5cWx6OGZsM3p4cmtINnd1UFp6VWNXV29vMUJvR1VCbEM1Mi9EbFpQMkZCbHRTcWtVL21EQ3IxCnJJWkFYYjZDbXNNZG1SQzMrYWwxamVUak9MZEcwMUd6dlBZdEdsQ0p2dHRJNzBuVkR3Nkh3QUpkRVN0UUh0cWsKakpCK3NZU2NSWDg1YTlsUXVIU21DY0kyQWxZQXFkK0t2NnNKNUVFZnpwWHNUVXdya0tKbjJ0UTN2UVNLaEgyawpabUx2N0MvcWV6YnJvc3pGeHNZWEtRelZiODVIVkxBbXo2UVhYV1I2Q0ZzMAotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb2dJQkFBS0NBUUVBbi9vTEFaQnBDVHVlci95eU15a1NYelBpWk9mVFdZQmEwNjR6c2Y1Y1Z0UEt2cnlCCko1R1ZVUlJRVHNhd3lnRXZxQUlyN01Ca29tRjlBeFVNaFNxdlFjNkFYemQzcjRMNW1CWGQxZ3FoWVNNR2lJL3gKRDBuUWhBdWswRW1VTjVmOWRDWUZjTDE1QVZ2UituN2wwaVcvVzlBRjRqbXRtYUtLVUdsUU9vNzQ3anNCYWRndQpPUlRwTEpMMVBsN0pVS2ZxQVpLRGxVV2Z6cGV3OE1ETVMzN1FodmVQc24va2RwUVZ0bzlJZWcwSFhBcXlmZHNaCmY2SnhnWktRZlFDcmJSRDJHdi9vTlVUZWJ6VjNWVm9ueEpUYmFrZFNuOHR0cCtLWFlzTUYvQy8wR29sL1JkS1MKK3NLeGdoVFFnSTBuQmVyQTNMdHRqellackliQVo0RXBRNmc0ZFFJREFRQUJBb0lCQUE1cXFDZEI3bFZJckNwTAo2WHMyemxNS0IvTHorVlh0ZlVIcVJ2cFpZOVRuVFRRWEpNUitHQ2l3WGZSYmIzOGswRGloeVhlU2R2OHpMZUxqCk9MZWZleC9CRGt5R1lTRE4rdFE3MUR2L3hUOU51cjcveWNlSTdXT1k4UWRjT2lFd2IwVFNVRmN5bS84RldVenIKdHFaVGhJVXZuL2dkSG9uajNmY1ZKb2ZBYnFwNVBrLzVQd2hFSU5Pdm1FTFZFQWl6VnBWVmwxNzRCSGJBRHU1Sgp2Nm9xc0h3SUhwNC9ZbGo2NHhFVUZ1ZFA2Tkp0M1B5Uk14dW5RcWd3SWZ1bktuTklRQmZEVUswSklLK1luZmlJClgrM1lQam5sWFU3UnhYRHRFa3pVWTFSTTdVOHJndHhiNWRQWnhocGgyOFlFVnJBVW5RS2RSTWdCVVNad3hWRUYKeFZqWmVwa0NnWUVBeEtHdXExeElHNTZxL2RHeGxDODZTMlp3SkxGajdydTkrMkxEVlZsL2h1NzBIekJ6dFFyNwpMUGhUZnl2SkVqNTcwQTlDbk4ybndjVEQ2U1dqbkNDbW9ESk10Ti9iZlJaMThkZTU4b0JCRDZ5S0JGbmV1eWkwCk1oVWFmSzN5M091bGkxMjBKS3lQb2hvN1lyWUxNazc1UzVEeVRGMlEyV3JYY0VQaTlVRzNkNzhDZ1lFQTBFY3YKTUhDbE9XZ1hJUVNXNCtreFVEVXRiOFZPVnpwYjd3UWZCQ3RmSTlvTDBnVWdBd1M2U0lub2tET3ozdEl4aXdkQQpWZTVzMklHbVAzNS9qdm5FbThnaE1XbEZ3eHB5ZUxKK0hraTl1dFNPblJGWHYvMk9JdjBYbE01RlY5blBmZ01NCkMxQ09zZklKaVREaXJFOGQrR2cxV010dWxkVGo4Z0JKazRQRXZNc0NnWUJoNHA4aWZVa0VQdU9lZ1hJbWM3QlEKY3NsbTZzdjF2NDVmQTVaNytaYkxwRTd3Njl6ZUJuNXRyNTFaVklHL1RFMjBrTFEzaFB5TE1KbmFpYnM5OE44aQpKb2diRHNta0pyZEdVbjhsNG9VQStZS25rZG1ZVURZTUxJZElCQXcvd0N0a0NweXdHUnRUdGoxVDhZMzNXR3N3CkhCTVN3dzFsdnBOTE52Qlg2WVFjM3dLQmdHOHAvenJJZExjK0lsSWlJL01EREtuMXFBbW04cGhGOHJtUXBvbFEKS05oMjBhWkh5LzB3Y2NpenFxZ0VvSFZHRk9GU2Zua2U1NE5yTjNOZUxmRCt5SHdwQmVaY2ZMcVVqQkoxbWpESgp2RkpTanNld2NQaHMrWWNkTkkvY3hGQU9WZHU0L3Aydlltb0JlQ3Q4SncrMnJwVmQ4Vk15U1JTNWF1eElVUHpsCjhJU2ZBb0dBVituYjJ3UGtwOVJ0NFVpdmR
0MEdtRjErQ052YzNzY3JYb3RaZkt0TkhoT0o2UTZtUkluc2tpRWgKVnFQRjZ6U1BnVmdrT1hmU0xVQ3Y2cGdWR2J5d0plRWo1SElQRHFuU25vNFErZFl2TXozcWN5d1hLbFEyUjZpcAo3VE0wWHNJaGFMRDFmWUNjaDhGVHNiZHNrQUNZUHpzeEdBa1l2TnRDcDI5WExCRmZWbkE9Ci0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

# Distribute .kube/config to each node.

[root@k8s-master ~]# scp .kube/config root@172.16.4.13:/etc/kubernetes/

[root@k8s-master ~]# scp .kube/config root@172.16.4.14:/etc/kubernetes/

# For example, a config file now appears in /etc/kubernetes/ on node2.

[root@k8s-node2 ~]# ls /etc/kubernetes/

bin bootstrap.kubeconfig config kubelet kubelet.kubeconfig kube-proxy.kubeconfig ssl token.csv

Configure kube-proxy

Configuration via script

(1) Create the kube-proxy.sh script on each node with the following content:

#!/bin/bash

NODE_ADDRESS=${1:-"172.16.4.13"}

cat <<EOF >/etc/kubernetes/kube-proxy

KUBE_PROXY_ARGS="--logtostderr=true \

--v=4 \

--hostname-override=${NODE_ADDRESS} \

--kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=-/etc/kubernetes/kube-proxy

ExecStart=/usr/local/bin/kube-proxy \$KUBE_PROXY_ARGS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload && systemctl enable kube-proxy

systemctl restart kube-proxy && systemctl status kube-proxy

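- The `--hostname-override` value must match kubelet's; otherwise kube-proxy cannot find the Node after starting and will not create any iptables rules.

- kube-proxy uses `--cluster-cidr` to tell cluster-internal traffic from external traffic; it only SNATs requests to Service IPs when `--cluster-cidr` or `--masquerade-all` is specified.

- The configuration file given by `--kubeconfig` embeds the kube-apiserver address, username, certificate, key and other request and authentication information.

- The predefined ClusterRoleBinding `system:node-proxier` binds the user `system:kube-proxy` to the ClusterRole `system:node-proxier`, which grants permission to call the Proxy-related kube-apiserver APIs.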

See kube-proxy.service for the complete unit.
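The first point (matching `--hostname-override`) is easy to check mechanically. A sketch that extracts the flag from the environment files generated by kubelet.sh and kube-proxy.sh and compares them; it uses scratch copies with the values from this document, and the helper name is hypothetical:

```bash
#!/usr/bin/env bash
# Extract the value of --hostname-override from an env file such as
# /etc/kubernetes/kubelet or /etc/kubernetes/kube-proxy.
hostname_override() {
  grep -o -- '--hostname-override=[^ "\\]*' "$1" | head -n1 | cut -d= -f2
}

# Scratch copies of the two generated files (values from node2 above).
dir=$(mktemp -d)
cat > "$dir/kubelet" <<'EOF'
KUBELET_ARGS="--logtostderr=true \
--hostname-override=172.16.4.14 \
--cluster-domain=cluster.local"
EOF
cat > "$dir/kube-proxy" <<'EOF'
KUBE_PROXY_ARGS="--logtostderr=true --v=4 --hostname-override=172.16.4.14 --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig"
EOF

a=$(hostname_override "$dir/kubelet")
b=$(hostname_override "$dir/kube-proxy")
if [[ "$a" == "$b" ]]; then
  echo "match: $a"
else
  echo "MISMATCH: kubelet=$a kube-proxy=$b"
fi
```

On a node, point the function at /etc/kubernetes/kubelet and /etc/kubernetes/kube-proxy instead.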

(2) Run the script

# First copy the kube-proxy binary from the master to each node.

[root@k8s-master ~]# scp /usr/local/bin/kube-proxy root@172.16.4.13:/usr/local/bin/

[root@k8s-master ~]# scp /usr/local/bin/kube-proxy root@172.16.4.14:/usr/local/bin/

# Then make the script executable and run it on each node.

chmod +x kube-proxy.sh

[root@k8s-node2 ~]# ./kube-proxy.sh 172.16.4.14

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

● kube-proxy.service - Kubernetes Proxy

Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2019-06-14 16:01:47 CST; 39ms ago

Main PID: 117068 (kube-proxy)

Tasks: 10

Memory: 8.8M

CGroup: /system.slice/kube-proxy.service

└─117068 /usr/local/bin/kube-proxy --logtostderr=true --v=4 --hostname-override=172.16.4.14 --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

Jun 14 16:01:47 k8s-node2 systemd[1]: Started Kubernetes Proxy.

(3) As explained in the kubelet section, the `kubelet.kubeconfig` file referenced by `--kubeconfig=/etc/kubernetes/kubelet.kubeconfig` is only generated after the CSR is approved. Alternatively, copy the master's `~/.kube/config` to each node and rename it `kubelet.kubeconfig`: all nodes can share this file, so new nodes join the cluster without a CSR, and because the file carries admin credentials it also works with `kubectl --kubeconfig` on any host that can reach the cluster.

[root@k8s-master ~]# scp .kube/config root@172.16.4.13:/etc/kubernetes/

[root@k8s-node1 ~]# mv /etc/kubernetes/config /etc/kubernetes/kubelet.kubeconfig

[root@k8s-master ~]# scp .kube/config root@172.16.4.14:/etc/kubernetes/

[root@k8s-node2 ~]# mv /etc/kubernetes/config /etc/kubernetes/kubelet.kubeconfig

Verification

# Run the following on the master node.

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

172.16.4.13 Ready <none> 66s v1.14.3

172.16.4.14 Ready <none> 7m14s v1.14.3

[root@k8s-master ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}
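Instead of reading the STATUS column by eye, you can check the outputs above mechanically. The sketch below assumes the default table layout of `kubectl get nodes` and `kubectl get cs`, where STATUS is the second column. `check_status` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# check_status EXPECTED < TABLE
# Reads "kubectl get nodes" or "kubectl get cs" output on stdin, skips the
# header row, and prints every row whose STATUS (2nd column) is not
# EXPECTED. Returns 1 if any such row was found, 0 otherwise.
check_status() {
  awk -v want="$1" '
    NR > 1 && NF > 0 && $2 != want { print; bad = 1 }
    END { exit bad }
  '
}
```

For example: `kubectl get nodes | check_status Ready && kubectl get cs | check_status Healthy`. A cordoned node reports `Ready,SchedulingDisabled` and would also be printed.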

# Test cluster availability with an nginx deployment

[root@k8s-master ~]# kubectl run nginx --replicas=3 --labels="run=load-balancer-example" --image=nginx --port=80

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/nginx created

[root@k8s-master ~]# kubectl expose deployment nginx --type=NodePort --name=example-service

service/example-service exposed

[root@k8s-master ~]# kubectl describe svc example-service

Name: example-service

Namespace: default

Labels: run=load-balancer-example

Annotations: <none>

Selector: run=load-balancer-example

Type: NodePort

IP: 10.10.10.222

Port: <unset> 80/TCP

TargetPort: 80/TCP

NodePort: <unset> 40905/TCP

Endpoints: 172.17.0.2:80,172.17.0.2:80,172.17.0.3:80

Session Affinity: None

External Traffic Policy: Cluster

Events: <none>

# Access the service's ClusterIP from a node

[root@k8s-node1 ~]# curl "10.10.10.222:80"

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

# Test access from outside the cluster

[root@k8s-master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

example-service NodePort 10.10.10.222 <none> 80:40905/TCP 6m26s

kubernetes ClusterIP 10.10.10.1 <none> 443/TCP 21h

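# The output above shows that the service is exposed on NodePort 40905. Open <node IP>:40905 (for example 172.16.4.13:40905) in a browser to access it; the NodePort is served by kube-proxy, which in this setup runs on the node hosts.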
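You can also extract the NodePort from the `kubectl get svc` table with a small parser. The sketch below assumes the column layout shown above, with PORT(S) as the fifth column. `nodeport_of` is a hypothetical helper. kubectl can also print the value directly with `kubectl get svc example-service -o jsonpath='{.spec.ports[0].nodePort}'`, which does not depend on the table layout.

```shell
#!/usr/bin/env bash
# nodeport_of SERVICE < TABLE
# Reads "kubectl get svc" output on stdin and prints the NodePort(s) of
# SERVICE, taken from the PORT(S) column ("80:40905/TCP" -> 40905).
nodeport_of() {
  awk -v svc="$1" '$1 == svc {
    n = split($5, ports, ",")               # a Service may expose several ports
    for (i = 1; i <= n; i++) {
      # "80:40905/TCP" splits into 3 parts; a plain "443/TCP" only into 2
      if (split(ports[i], parts, "[:/]") == 3) print parts[2]
    }
  }'
}
```

Usage: `kubectl get svc | nodeport_of example-service`.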

Setting up and configuring the DNS service

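Starting with Kubernetes v1.11, cluster DNS is provided by CoreDNS. CoreDNS is a CNCF project: a high-performance, plugin-based and easily extensible DNS server written in Go. It fixes several problems of KubeDNS, such as the security vulnerabilities in dnsmasq and the inability of ExternalName services to work with stubDomains settings.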

Installing the CoreDNS add-on

Official YAML file directory:

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dns/coredns

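Deploying CoreDNS requires at least three resource objects: a ConfigMap, a Deployment and a Service. In a cluster with RBAC enabled, you can also create a ServiceAccount, a ClusterRole and a ClusterRoleBinding to restrict the permissions of the CoreDNS container.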
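The upstream directory above appears to provide a template, `coredns.yaml.base`, in which the cluster domain and the DNS Service IP are left as placeholders. The sketch below fills them in with sed. It assumes the placeholders are named `__PILLAR__DNS__DOMAIN__` and `__PILLAR__DNS__SERVER__`, so verify this against the file you download. `render_coredns` is a hypothetical helper, and the DNS IP you pass must equal the value given to kubelet's `--cluster-dns`.

```shell
#!/usr/bin/env bash
# render_coredns DOMAIN DNS_IP < coredns.yaml.base > coredns.yaml
# Replaces the (assumed) template placeholders with concrete values.
render_coredns() {
  sed -e "s/__PILLAR__DNS__DOMAIN__/$1/g" \
      -e "s/__PILLAR__DNS__SERVER__/$2/g"
}
```

Example: `render_coredns cluster.local 10.10.10.2 < coredns.yaml.base > coredns.yaml`. Here `10.10.10.2` is an assumed address that must lie in the cluster's Service IP range (the Services above use 10.10.10.x addresses). Afterwards, `grep -n __PILLAR__ coredns.yaml` should print nothing. If it prints anything, the template uses additional placeholders that also need values.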

(1) To speed up image pulls, first point Docker's registry mirrors at domestic (Alibaba Cloud) mirrors on each host that runs Docker.

cat << EOF > /etc/docker/daemon.json

{

"registry-mirrors":["https://registry.docker-cn.com","https://h23rao59.mirror.aliyuncs.com"]

}

EOF

# Reload systemd and restart Docker so the new daemon.json takes effect

[root@k8s-master ~]# systemctl daemon-reload && systemctl restart docker
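The heredoc above overwrites any existing `/etc/docker/daemon.json`. The sketch below keeps a backup and builds the mirror list from its arguments. `write_daemon_json` is a hypothetical helper. The `h23rao59.mirror.aliyuncs.com` host looks like a per-account Alibaba Cloud accelerator address, so you will most likely need to substitute your own.

```shell
#!/usr/bin/env bash
# write_daemon_json FILE MIRROR...
# Writes FILE as a Docker daemon.json that sets only "registry-mirrors".
# An existing FILE is copied to FILE.bak first, since it is overwritten.
write_daemon_json() {
  local file=$1 list="" m
  shift
  if [ -f "$file" ]; then
    cp -- "$file" "$file.bak" || return 1
  fi
  for m in "$@"; do
    # Append each mirror as a quoted JSON string, comma-separated
    list+="${list:+,}\"${m}\""
  done
  printf '{\n  "registry-mirrors": [%s]\n}\n' "$list" > "$file"
}
```

Example: `write_daemon_json /etc/docker/daemon.json https://registry.docker-cn.com https://<your-id>.mirror.aliyuncs.com && systemctl restart docker`, where `<your-id>` is a placeholder for your own accelerator ID.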

(2) Here the Service, ConfigMap, ServiceAccount and the other objects are all written into a single YAML file. The contents of coredns.yaml are shown below.

[root@k8s-master ~]# cat coredns.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          pods insecure
          upstream
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        proxy . /etc/resolv.conf
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: kube-dns
  name: coredns
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kube-dns
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      containers:
      - args:
        - -conf
        - /etc/coredns/Corefile
        image: docker.io/fengyunpan/coredns:1.2.6
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
        name: coredns
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        resources:
          limits:
            memory: 170Mi