Daily Maximum Temperature Prediction

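We will complete three tasks:

- Build a basic model with the random forest algorithm

The basic task covers processing the data, inspecting the features, building the model, and analyzing the results with visualizations.

- Examine how the amount of data and the number of features affect the results

Keeping the algorithm unchanged, increase the number of samples and observe how the results change. Then revisit feature engineering, add new features, and observe how the results trend.

- Tune the random forest's hyperparameters to find the most suitable values

Learn two classic hyperparameter-tuning methods in machine learning and apply them to the current model.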

# 数据读取

import pandas as pd

features = pd.read_csv('data/temps.csv')

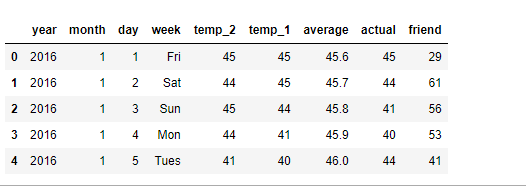

features.head()

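The columns of the data table are:

- year, month, day, week: the date of each record (week is the day of the week)

- temp_2: the maximum temperature two days before

- temp_1: the maximum temperature the day before

- average: the historical average maximum temperature for this day of the year

- actual: the label, i.e. the actual maximum temperature of the day

- friend: a guess from your friend, included just for fun; we can ignore it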

```python
print('Data shape:', features.shape)
features.describe()
```

```
Data shape: (348, 9)
```

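The summary includes the count of non-missing values in each column. A column with missing data would have a lower count. There are no missing values here, so every column has a count of 348. The mean, standard deviation, minimum, maximum, and quartiles are shown as well.

The date columns can also be converted, because some libraries expect a standard datetime format when plotting or computing: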
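The `count` row of `describe()` is one way to spot missing values. A more direct check is `DataFrame.isnull().sum()`. The following is a minimal sketch on a small made-up frame. The column values are invented for illustration and are not taken from `temps.csv`.

```python
import numpy as np
import pandas as pd

# A tiny hypothetical frame with one missing value in 'temp_1'
df = pd.DataFrame({
    'temp_1': [45, np.nan, 44],
    'actual': [45, 44, 41],
})

# describe() counts only the non-missing values in each column
counts = df.describe().loc['count']
print(counts)

# isnull().sum() reports the number of missing values per column directly
missing = df.isnull().sum()
print(missing)
```

On the full `temps.csv` frame, `features.isnull().sum()` should be all zeros, which matches the counts of 348 above.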

```python
# Process the date data
import datetime

# Extract the year, month, and day columns
years = features['year']
months = features['month']
days = features['day']

# Convert to datetime objects
dates = [str(int(year)) + '-' + str(int(month)) + '-' + str(int(day)) for year, month, day in zip(years, months, days)]
dates = [datetime.datetime.strptime(date, '%Y-%m-%d') for date in dates]
print(dates[:5])
```

```
[datetime.datetime(2016, 1, 1, 0, 0), datetime.datetime(2016, 1, 2, 0, 0), datetime.datetime(2016, 1, 3, 0, 0), datetime.datetime(2016, 1, 4, 0, 0), datetime.datetime(2016, 1, 5, 0, 0)]
```
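Building strings and parsing them works. As an alternative, pandas can assemble dates directly from columns named `year`, `month`, and `day`. The following is a minimal sketch that uses a hypothetical three-row frame in place of `features`.

```python
import pandas as pd

# Hypothetical stand-in for the year/month/day columns of temps.csv
df = pd.DataFrame({'year': [2016, 2016, 2016],
                   'month': [1, 1, 1],
                   'day': [1, 2, 3]})

# pd.to_datetime accepts a DataFrame whose columns are named year, month, day
dates = pd.to_datetime(df[['year', 'month', 'day']])
print(dates.tolist())
```

The result is a Series of pandas `Timestamp` objects. `Timestamp` is a subclass of `datetime.datetime`, so matplotlib can plot these values directly.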

Data visualization

```python
# Prepare to plot
import matplotlib.pyplot as plt
%matplotlib inline

# Set the default style
plt.style.use('fivethirtyeight')

# Create a 2x2 grid of subplots
fig, ((ax1, ax2), (ax3, ax4)) = plt.subplots(nrows=2, ncols=2, figsize=(10, 10))
fig.autofmt_xdate(rotation=45)

# Label: the actual maximum temperature
ax1.plot(dates, features['actual'])
ax1.set_xlabel('')
ax1.set_ylabel('Temperature')
ax1.set_title('Max Temp')

# Yesterday's maximum temperature
ax2.plot(dates, features['temp_1'])
ax2.set_xlabel('')
ax2.set_ylabel('Temperature')
ax2.set_title('Previous Max Temp')

# Maximum temperature two days before
ax3.plot(dates, features['temp_2'])
ax3.set_xlabel('Date')
ax3.set_ylabel('Temperature')
ax3.set_title('Two Days Prior Max Temp')

# My donkey friend's guess
ax4.plot(dates, features['friend'])
ax4.set_xlabel('Date')
ax4.set_ylabel('Temperature')
ax4.set_title('Donkey Estimate')
```

Data preprocessing

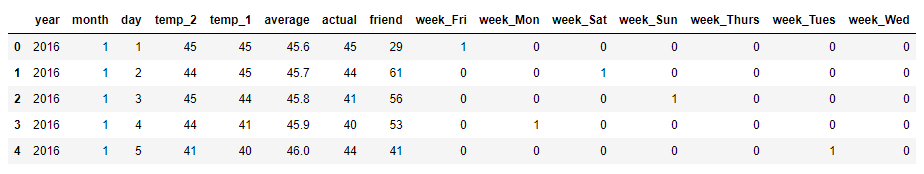

One-Hot Encoding

Original data:

| week |
|---|
| Mon |
| Tue |
| Wed |
| Thu |
| Fri |

After encoding:

| Mon | Tue | Wed | Thu | Fri |
|---|---|---|---|---|
| 1 | 0 | 0 | 0 | 0 |
| 0 | 1 | 0 | 0 | 0 |
| 0 | 0 | 1 | 0 | 0 |
| 0 | 0 | 0 | 1 | 0 |
| 0 | 0 | 0 | 0 | 1 |
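You can reproduce the table above with `pd.get_dummies`. Each category becomes its own column, named `<column>_<value>`. The following is a minimal sketch that uses the five illustrative weekdays from the table. It passes `dtype=int` so the output shows 0/1 instead of True/False, which recent pandas versions produce by default.

```python
import pandas as pd

week = pd.DataFrame({'week': ['Mon', 'Tue', 'Wed', 'Thu', 'Fri']})

# One indicator column per category; dtype=int gives 0/1 instead of booleans
encoded = pd.get_dummies(week, dtype=int)
print(encoded)
```

pandas orders the new columns alphabetically by category (`week_Fri`, `week_Mon`, ...), not in the order the values first appear. Each row has exactly one 1.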

```python
# One-hot encoding
print(features.dtypes)
features = pd.get_dummies(features)
print('Shape of features after one-hot encoding:', features.shape)
features.head(5)
```

```
year         int64
month        int64
day          int64
week        object
temp_2       int64
temp_1       int64
average    float64
actual       int64
friend       int64
dtype: object
Shape of features after one-hot encoding: (348, 15)
```

Separating labels and converting the data format

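```python
# Separate the features and the labels
import numpy as np

# Labels
labels = np.array(features['actual'])

# Remove the label column from the features
features = features.drop('actual', axis=1)

# Save the feature names separately for later use
features_list = list(features.columns)

# Convert to a NumPy array
features = np.array(features)
```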

Training and test sets

```python
# Split the dataset
from sklearn.model_selection import train_test_split

train_features, test_features, train_labels, test_labels = train_test_split(
    features, labels, test_size=0.25, random_state=42)

print('Training features shape:', train_features.shape)
print('Training labels shape:', train_labels.shape)
print('Test features shape:', test_features.shape)
print('Test labels shape:', test_labels.shape)
```

```
Training features shape: (261, 14)
Training labels shape: (261,)
Test features shape: (87, 14)
Test labels shape: (87,)
```
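With `test_size=0.25`, scikit-learn rounds the test-set size up: ceil(0.25 × 348) = 87 test rows. That leaves 261 rows for training, which matches the shapes above. The following sketch reproduces the arithmetic with a random array of the same shape. The values are placeholders, not the weather data.

```python
import math

import numpy as np
from sklearn.model_selection import train_test_split

n_samples, n_features = 348, 14
X = np.random.default_rng(0).normal(size=(n_samples, n_features))
y = np.arange(n_samples)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=42)

# The test size is ceil(test_size * n_samples); training gets the remainder
expected_test = math.ceil(0.25 * n_samples)
print(expected_test, X_train.shape, X_test.shape)
```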

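Building a baseline random forest model

With everything in place, we can build the random forest model. First import the library and try 1,000 trees, leaving the other parameters at their defaults. We will dig into hyperparameter tuning later: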

```python
# Import the algorithm
from sklearn.ensemble import RandomForestRegressor

# Build the model
rf = RandomForestRegressor(n_estimators=1000, random_state=42)

# Train
rf.fit(train_features, train_labels)
```
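A random forest regressor predicts by averaging the predictions of its individual trees. The sketch below checks this on synthetic data, using a small forest of 20 trees on made-up features rather than the temperature data.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(42)
X = rng.uniform(0, 10, size=(200, 3))
y = 2 * X[:, 0] + rng.normal(scale=0.5, size=200)

forest = RandomForestRegressor(n_estimators=20, random_state=42)
forest.fit(X, y)

# The fitted trees are stored in forest.estimators_
tree_preds = np.array([tree.predict(X[:5]) for tree in forest.estimators_])

# Averaging the trees' outputs reproduces the forest's prediction
manual = tree_preds.mean(axis=0)
print(np.allclose(manual, forest.predict(X[:5])))
```

Because each tree is trained on a different bootstrap sample, averaging many of them reduces variance. This is the motivation for using a large `n_estimators` such as 1000.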

Testing

```python
# Predict on the test set
predictions = rf.predict(test_features)

# Absolute errors
errors = abs(predictions - test_labels)

# Mean absolute percentage error (MAPE)
mape = 100 * (errors / test_labels)
print('MAPE:', np.mean(mape))
```

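The printed value is the mean absolute percentage error (MAPE) on the test set. MAPE is the average absolute error expressed as a percentage of the true temperature; lower values are better.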
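You can wrap the same computation in a small helper. This is a sketch. The function name `mean_abs_pct_error` and the toy numbers are illustrative and do not come from the original code.

```python
import numpy as np

def mean_abs_pct_error(y_true, y_pred):
    """MAPE in percent: 100 * mean(|y_pred - y_true| / y_true).

    Divides by y_true, so it is undefined if any true value is 0.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return 100 * np.mean(np.abs(y_pred - y_true) / y_true)

# Both predictions are off by 10% of the true value, so MAPE is 10%
print(mean_abs_pct_error([100, 50], [110, 45]))
```

scikit-learn 0.24 and later also provide `sklearn.metrics.mean_absolute_percentage_error`. It returns the same quantity as a fraction rather than a percentage.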

Visualizing a tree

Install Graphviz first. The code below also uses the `pydot` package:

```python
# Import the required tools
from sklearn.tree import export_graphviz
import pydot

# Take one tree from the forest (index 5, i.e. the sixth tree)
tree = rf.estimators_[5]

# Export it to a .dot file
export_graphviz(tree, out_file='tree.dot', feature_names=features_list, rounded=True, precision=1)

# Load the graph from the .dot file
(graph,) = pydot.graph_from_dot_file('tree.dot')

# Save it as a PNG image
graph.write_png('tree.png')
```
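If Graphviz is not available, scikit-learn's `export_text` can print a tree's decision rules as plain text. The following sketch uses a small synthetic forest. The names `temp_1` and `average` are reused only as labels, and the data are made up.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.tree import export_text

rng = np.random.default_rng(0)
X = rng.uniform(30, 90, size=(100, 2))
y = 0.7 * X[:, 0] + 0.3 * X[:, 1]

forest = RandomForestRegressor(n_estimators=10, max_depth=2, random_state=42)
forest.fit(X, y)

# Text rendering of one tree; no Graphviz or pydot needed
rules = export_text(forest.estimators_[5], feature_names=['temp_1', 'average'])
print(rules)
```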

That tree is too large to read comfortably, so let's build a smaller one:

```python
# Limit the size of the trees
rf_small = RandomForestRegressor(n_estimators=10, max_depth=3, random_state=42)
rf_small.fit(train_features, train_labels)

# Extract one tree
tree_small = rf_small.estimators_[5]

# Export and save it as an image
export_graphviz(tree_small, out_file='small_tree.dot', feature_names=features_list, rounded=True, precision=1)
(graph,) = pydot.graph_from_dot_file('small_tree.dot')
graph.write_png('small_tree.png')
```
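`max_depth=3` limits every tree to three levels of splits, so each tree has at most 2³ = 8 leaves. The fitted trees' `get_depth()` and `get_n_leaves()` methods can confirm this. The following sketch uses synthetic data with made-up values, not `temps.csv`.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(1)
X = rng.normal(size=(300, 4))
y = X[:, 0] + 0.5 * X[:, 1] + rng.normal(scale=0.1, size=300)

small = RandomForestRegressor(n_estimators=10, max_depth=3, random_state=42)
small.fit(X, y)

# Depth and leaf count of every tree in the small forest
depths = [t.get_depth() for t in small.estimators_]
leaves = [t.get_n_leaves() for t in small.estimators_]
print(depths, leaves)
```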

Feature importance

```python
# Get the feature importances
importances = list(rf.feature_importances_)

# Pair each feature name with its importance, rounded to 2 decimals
feature_importances = [(feature, round(importance, 2)) for feature, importance in zip(features_list, importances)]

# Sort by importance, highest first
feature_importances = sorted(feature_importances, key=lambda x: x[1], reverse=True)

# Print each pair
for pair in feature_importances:
    print('Variable:{:20} Importance:{}'.format(*pair))
```

```
Variable:temp_1               Importance:0.7
Variable:average              Importance:0.19
Variable:day                  Importance:0.03
Variable:temp_2               Importance:0.02
Variable:friend               Importance:0.02
Variable:month                Importance:0.01
Variable:year                 Importance:0.0
Variable:week_Fri             Importance:0.0
Variable:week_Mon             Importance:0.0
Variable:week_Sat             Importance:0.0
Variable:week_Sun             Importance:0.0
Variable:week_Thurs           Importance:0.0
Variable:week_Tues            Importance:0.0
Variable:week_Wed             Importance:0.0
```
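The impurity-based importances in `feature_importances_` are normalized to sum to 1, so you can read each value as a share of the total. The following sketch uses synthetic data in which only the first feature drives the target. The data and feature names are made up.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(0)
X = rng.normal(size=(500, 3))
y = 5 * X[:, 0] + rng.normal(scale=0.1, size=500)

model = RandomForestRegressor(n_estimators=50, random_state=42).fit(X, y)

# Rank the hypothetical features by importance, highest first
names = ['signal', 'noise_a', 'noise_b']
ranked = sorted(zip(names, model.feature_importances_), key=lambda p: p[1], reverse=True)
for name, imp in ranked:
    print(f'{name:10} {imp:.3f}')
```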

Trying again with only the most important features

```python
# Retrain using only the two most important features
rf_most_important = RandomForestRegressor(n_estimators=1000, random_state=42)

# Column indices of these two features
important_indices = [features_list.index('temp_1'), features_list.index('average')]
train_important = train_features[:, important_indices]
test_important = test_features[:, important_indices]

# Retrain the model
rf_most_important.fit(train_important, train_labels)

# Predict
predictions = rf_most_important.predict(test_important)
errors = abs(predictions - test_labels)

# Evaluate
mape = np.mean(100 * (errors / test_labels))
print('mape', mape)
```

```
mape 6.229055723613811
```
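scikit-learn can also select features by importance automatically with `SelectFromModel`. The following sketch keeps features whose importance is at least 0.1. The threshold and the synthetic data are assumptions chosen for illustration.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.feature_selection import SelectFromModel

rng = np.random.default_rng(0)
X = rng.normal(size=(400, 4))
# Only the first two columns carry signal; the last two are noise
y = 3 * X[:, 0] + 2 * X[:, 1] + rng.normal(scale=0.1, size=400)

selector = SelectFromModel(RandomForestRegressor(n_estimators=50, random_state=42), threshold=0.1)
selector.fit(X, y)

# Indices of the kept columns, and the reduced feature matrix
kept = selector.get_support(indices=True)
X_reduced = selector.transform(X)
print(kept, X_reduced.shape)
```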

```python
# x positions for the bars
x_values = list(range(len(importances)))

# Bar chart of the importances
plt.bar(x_values, importances)

# Label the x-axis ticks with the feature names
plt.xticks(x_values, features_list, rotation='vertical')

# Title and axis labels
plt.ylabel('Importance')
plt.xlabel('Variable')
plt.title('Variable Importances')
```

Comparing predicted and actual values

```python
# Date columns for the full dataset
months = features[:, features_list.index('month')]
days = features[:, features_list.index('day')]
years = features[:, features_list.index('year')]

# Convert to datetime objects
dates = [str(int(year)) + '-' + str(int(month)) + '-' + str(int(day)) for year, month, day in zip(years, months, days)]
dates = [datetime.datetime.strptime(date, '%Y-%m-%d') for date in dates]

# Table of dates and actual values
true_data = pd.DataFrame(data={'date': dates, 'actual': labels})

# Likewise, a table of test-set dates and model predictions
# (`predictions` currently holds the two-feature model's output from the previous step)
months = test_features[:, features_list.index('month')]
days = test_features[:, features_list.index('day')]
years = test_features[:, features_list.index('year')]
test_dates = [str(int(year)) + '-' + str(int(month)) + '-' + str(int(day)) for year, month, day in zip(years, months, days)]
test_dates = [datetime.datetime.strptime(date, '%Y-%m-%d') for date in test_dates]
predictions_data = pd.DataFrame(data={'date': test_dates, 'prediction': predictions})

# Actual values
plt.plot(true_data['date'], true_data['actual'], 'b-', label='actual')

# Predicted values
plt.plot(predictions_data['date'], predictions_data['prediction'], 'ro', label='prediction')
plt.xticks(rotation=60)
plt.legend()

# Title and axis labels
plt.xlabel('Date')
plt.ylabel('Maximum Temperature (F)')
plt.title('Actual and Predicted Values')
```

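This looks reasonable: the model already captures the overall trend. Next, we will dig deeper into the data and consider a few questions:

1. If more data becomes available, how will it affect the results?

2. Will adding new features improve the model? And how will that affect training time?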