目录

问题描述:

使用keras中的顺序模型来分类keras电影评论数据集的二分类问题

代码实现:

1.引入依赖,加载数据

from cProfile import label

from keras.datasets import imdb

import numpy as np

from keras import models

from keras import layers

from keras import optimizers,optimizer_v1,optimizer_v2

import matplotlib.pyplot as plt

from keras import losses

from keras import metrics

#仅保留数据中前10000单词

(train_data,train_labels),(test_data,test_labels) = imdb.load_data(num_words=10000)

2.数据处理和数据编码

word_index = imdb.get_word_index()

#键值颠倒,将整数索引映射为单词

reverse_word_index = dict(

[(value,key) for (key,value) in word_index.items()]

)

decoded_review = ''.join([reverse_word_index.get(i-3,'?')for i in train_data[0]])

#编码成为二进制矩阵

def vectorize_sequences(sequences,dimension=10000):

results = np.zeros((len(sequences),dimension))

for i ,sequence in enumerate(sequences):

results[i,sequence] = 1

return results

x_train = vectorize_sequences(train_data)

x_test = vectorize_sequences(test_data)

#标签向量化

y_train = np.asarray(train_labels).astype('float32')

y_test = np.asarray(test_labels).astype('float32')

3.构建网络

'''

参考

1.#编译模型

#bianary_crossentropy----二元交叉熵

model.compile(optimizer='rmsprop',loss="binary_crossentropy",metrics=['accuracy'])

2.#配置优化器

model.compile(optimizer=optimizer_v1.RMSprop(lr = 0.001),loss = 'binary_crossentropy',metrics=['accuracy'])

3.#使用自定义得损失和指标

model.compile(optimizer=optimizer_v1.RMSprop(lr = 0.001),loss = losses.binary_crossentropy,metrics=[metrics.binary_accuracy])

'''

#构建为网络

model = models.Sequential()

model.add(layers.Dense(16,activation='relu',input_shape = (10000,)))

model.add(layers.Dense(16,activation='relu'))

model.add(layers.Dense(1,activation='sigmoid'))

4.编译模型

#留出验证集

x_val = x_train[:10000]

partial_x_train = x_train[10000:]

y_val = y_train[:10000]

partial_y_train = y_train[10000:]

#训练模型

model.compile(optimizer='rmsprop',loss="binary_crossentropy",metrics=['acc'])

5.数据可视化

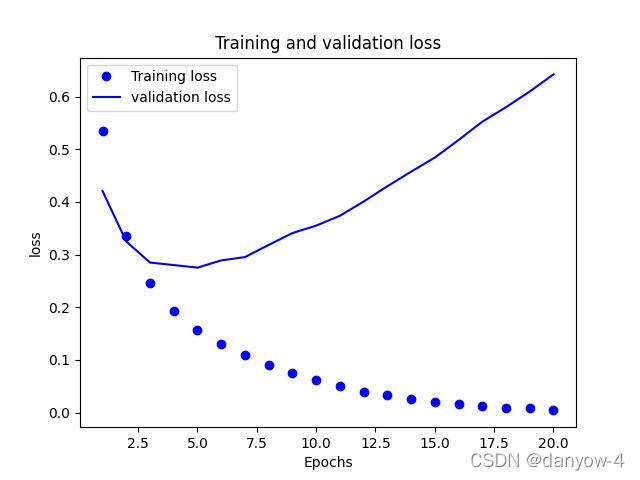

# 1.绘制训练损失和验证损失

history_dict = history.history

loss_value = history_dict['loss']

val_loss_values = history_dict['val_loss']

epochs = range(1,len(loss_value) +1)

plt.plot(epochs,loss_value,'bo',label='Training loss')

plt.plot(epochs,val_loss_values,'b',label="validation loss")

plt.title('Training and validation loss')

plt.xlabel('Epochs')

plt.ylabel("loss")

plt.legend()

plt.show()

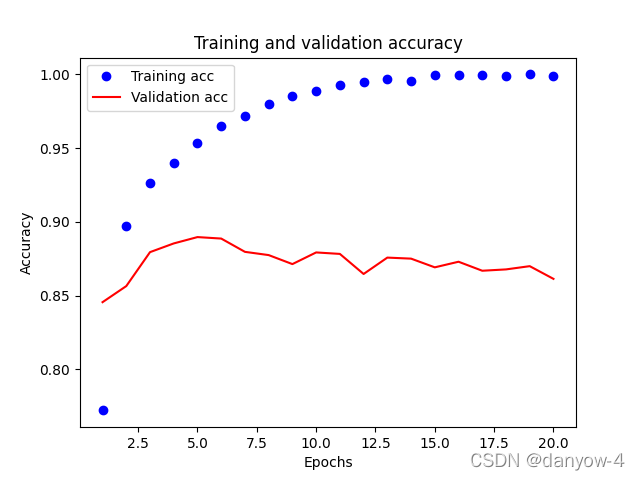

#2. 绘制训练精度和验证精度

plt.clf()#清空图像

acc = history_dict['acc']

val_acc = history_dict['val_acc']

plt.plot(epochs,acc,'bo',label = 'Training acc')

plt.plot(epochs,val_acc,'r',label='Validation acc')

plt.title('Training and validation accuracy')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend()

plt.show()

代码展示:

from keras.datasets import imdb

import numpy as np

from keras import models

from keras import layers

from keras import optimizers,optimizer_v1,optimizer_v2

import matplotlib.pyplot as plt

from keras import losses

from keras import metrics

#仅保留数据中前10000单词

(train_data,train_labels),(test_data,test_labels) = imdb.load_data(num_words=10000)

word_index = imdb.get_word_index()

#键值颠倒,将整数索引映射为单词

reverse_word_index = dict(

[(value,key) for (key,value) in word_index.items()]

)

decoded_review = ''.join([reverse_word_index.get(i-3,'?')for i in train_data[0]])

#编码成为二进制矩阵

def vectorize_sequences(sequences,dimension=10000):

results = np.zeros((len(sequences),dimension))

for i ,sequence in enumerate(sequences):

results[i,sequence] = 1

return results

x_train = vectorize_sequences(train_data)

x_test = vectorize_sequences(test_data)

#标签向量化

y_train = np.asarray(train_labels).astype('float32')

y_test = np.asarray(test_labels).astype('float32')

#构建为网络

model = models.Sequential()

model.add(layers.Dense(16,activation='relu',input_shape = (10000,)))

model.add(layers.Dense(16,activation='relu'))

model.add(layers.Dense(1,activation='sigmoid'))

#留出验证集

x_val = x_train[:10000]

partial_x_train = x_train[10000:]

y_val = y_train[:10000]

partial_y_train = y_train[10000:]

#训练模型

model.compile(optimizer='rmsprop',loss="binary_crossentropy",metrics=['acc'])

'''

history_dict.keys()

>>>dict_keys(['val_acc', 'acc', 'val_loss', 'loss'])

'''

history = model.fit(partial_x_train,partial_y_train,epochs = 20,batch_size =512,validation_data=(x_val,y_val))

#绘制训练损失和验证损失

history_dict = history.history

loss_value = history_dict['loss']

val_loss_values = history_dict['val_loss']

epochs = range(1,len(loss_value) +1)

plt.plot(epochs,loss_value,'bo',label='Training loss')

plt.plot(epochs,val_loss_values,'b',label="validation loss")

plt.title('Training and validation loss')

plt.xlabel('Epochs')

plt.ylabel("loss")

plt.legend()

plt.show()

#绘制训练精度和验证精度

plt.clf()#清空图像

acc = history_dict['acc']

val_acc = history_dict['val_acc']

plt.plot(epochs,acc,'bo',label = 'Training acc')

plt.plot(epochs,val_acc,'r',label='Validation acc')

plt.title('Training and validation accuracy')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend()

plt.show()

实现截图:

训练过程

可视化

参考:

《Python深度学习》

版权声明:本文为dannnnnnnnnnnn原创文章,遵循 CC 4.0 BY-SA 版权协议,转载请附上原文出处链接和本声明。