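When a web project faces high concurrency, load balancing is one way to handle it. The hash slot allocation in Redis Cluster follows the same idea: it spreads keys, and therefore requests, across several nodes.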

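Replication in Redis Cluster

(1) Each node in a Redis cluster takes one of two roles: master node or slave node. A master stores data, while a slave is a replica of one particular master.

(2) When users need to serve more read requests, adding slaves scales the read capacity of the system. Redis Cluster reuses the replication code of standalone Redis, so replication in a cluster behaves the same way as replication in standalone Redis.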

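Failover in Redis Cluster

(1) The masters of a Redis cluster have failure detection and automatic failover built in, similar to Redis Sentinel. When a master in the cluster goes offline, the other online masters notice and fail over the offline master.

(2) Failover in a cluster works much like failover under Redis Sentinel: in both cases a slave of the failed master is promoted to master. The difference is that in a cluster the other online masters detect the failure and vote on the promotion, rather than separate Sentinel processes, so a cluster does not need Redis Sentinel alongside it.

(3) Failover process: when more than half of the masters that hold slots have sent ping messages to node B (also a master) and none of them has received B's pong reply, B is marked as objectively down. This triggers failover among B's slaves: after an election, one of the slaves becomes master, takes over the slots that B was responsible for, and broadcasts its own pong message to the whole cluster to tell every node that it is now a master.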
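The majority rule in step (3) can be sketched in a few lines of Python. This is only an illustration of the counting, not Redis code: the function names are hypothetical, and the addresses are the three masters and two of the slaves used later in this article.

```python
# Hypothetical helpers illustrating the majority rule; not part of Redis.
SLOT_MASTERS = {
    "192.168.100.151:6371",
    "192.168.100.152:6372",
    "192.168.100.153:6373",
}


def majority(master_count):
    """Smallest number of masters that is more than half of master_count."""
    return master_count // 2 + 1


def is_objectively_down(reporting_masters, slot_masters):
    """True when more than half of the slot-holding masters report the node as unreachable."""
    reports = set(reporting_masters) & set(slot_masters)
    return len(reports) >= majority(len(slot_masters))


def elect(votes, slot_masters):
    """votes maps a voting master to the slave it voted for; return the winner or None."""
    tally = {}
    for master, slave in votes.items():
        if master in slot_masters:  # only slot-holding masters may vote
            tally[slave] = tally.get(slave, 0) + 1
    needed = majority(len(slot_masters))
    for slave, count in tally.items():
        if count >= needed:
            return slave
    return None


# Master 192.168.100.152:6372 stops answering; the other two masters report it.
reports = {"192.168.100.151:6371", "192.168.100.153:6373"}
print(is_objectively_down(reports, SLOT_MASTERS))

# Both remaining masters vote for the same slave of the failed master.
votes = {
    "192.168.100.151:6371": "192.168.100.153:6383",
    "192.168.100.153:6373": "192.168.100.153:6383",
}
print(elect(votes, SLOT_MASTERS))
```

With three masters the majority is two, so both surviving masters have to agree before the node is marked down and before a slave can win the election.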

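Overview of Redis slot allocation

In the official Redis cluster design, data is distributed by slot. A hash function maps the key of each item to a slot (CRC16 of the key modulo 16384). Since redis-3.0.0 there are 16384 slots in total; this number is fixed and cannot be configured. When a client sets or gets a key, the slot for that key is computed first, then the node responsible for that slot is looked up, and only then is the command executed on that node. This spreads the data across the nodes of the cluster, roughly evenly when the slots are divided evenly, so the load on the nodes is balanced and the whole cluster is put to use.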
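The key-to-slot mapping can be reproduced outside Redis. The sketch below is a plain Python implementation of CRC16 (XMODEM variant: polynomial 0x1021, initial value 0) followed by the modulo step. The function names are my own, and hash tags (the `{...}` part of a key) are deliberately not handled, so it only applies to keys without braces.

```python
SLOT_COUNT = 16384  # fixed number of hash slots in Redis Cluster


def crc16_xmodem(data: bytes) -> int:
    """Bitwise CRC16, polynomial 0x1021, initial value 0, no reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Slot for a key without hash tags: CRC16(key) mod 16384."""
    return crc16_xmodem(key.encode("utf-8")) % SLOT_COUNT


print(hex(crc16_xmodem(b"123456789")))  # 0x31c3, the standard check value
print(key_slot("foo"))                  # 12182
```

Running `key_slot` on the keys used in the test session later in this article is a quick way to compare the result with the slot numbers that the MOVED replies report.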

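Building a Redis cluster

A Redis cluster consists of multiple Redis servers and forms a distributed network service.

Each Redis server is called a node. Nodes communicate with one another, and every pair of nodes is connected.

A Redis cluster has no central node.

Outline of the setup:

Create several master nodes, assign slots to each of them, and connect the nodes to form a cluster. Once all slots have been assigned, the cluster goes online.

The smallest highly available layout has 6 nodes: 3 masters, each with one slave.

A Redis cluster needs at least 3 master nodes, because the voting-based fault tolerance only treats a node as down when more than half of the masters agree that it is down. With only 2 masters, a single failure leaves no majority, so 2 nodes cannot form a working cluster.

To keep the cluster highly available, every master also needs a slave as its backup, so a Redis cluster needs at least 6 Redis instances, ideally spread over separate servers.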
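The sizing argument above comes down to simple arithmetic. A minimal sketch, with hypothetical function names, assuming the majority is counted over all masters including the failed one:

```python
def instances_needed(masters, replicas_per_master):
    """Total Redis instances for a given number of masters and slaves per master."""
    return masters * (1 + replicas_per_master)


def masters_from(total_instances, replicas_per_master):
    """How many masters a set of instances yields for a given replica count."""
    return total_instances // (1 + replicas_per_master)


def can_fail_over_after_one_loss(masters):
    """True if the surviving masters still form a majority after one master fails."""
    return masters - 1 >= masters // 2 + 1


print(instances_needed(3, 1))           # 6: the minimal highly available layout
print(instances_needed(3, 2))           # 9: the layout built in this article
print(masters_from(9, 2))               # 3
print(can_fail_over_after_one_loss(2))  # False: one survivor is not a majority of 2
print(can_fail_over_after_one_loss(3))  # True: two survivors are a majority of 3
```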

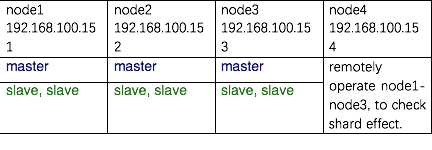

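I use redis 3.0 here to demonstrate how to build a cluster. I recorded the problems I ran into along the way so that readers can avoid them, and I tested the finished cluster to observe how read and write requests from a client are redirected to the node that owns the relevant slot.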

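Cluster plan (3 hosts, 3 redis-server instances on each):

node1  192.168.100.151  ports 6371, 6381, 6391

node2  192.168.100.152  ports 6372, 6382, 6392

node3  192.168.100.153  ports 6373, 6383, 6393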

Start by installing redis on the 3 hosts (node1 to node3) and preparing the redis runtime environment.

1. Download the tar package for redis 3.0 or later

http://download.redis.io/releases/

2. Extract it: tar -zxf redis-3.0.0.tar.gz -C /bigdata/

3. Install gcc and tcl on every host

yum install gcc tcl -y

4. Compile on every host (run in the extracted source directory)

make

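5. Install the compiled binaries on every host

According to the README, the location of the generated bin directory can be customized: make install PREFIX=/bigdata/redis-3.0.0/

6. Copy the configuration file into the bin directory under the install location

cp /bigdata/redis-3.0.0/redis.conf /bigdata/redis-3.0.0/bin/

Following the cluster plan above, prepare 9 installation directories in total, 3 on each of the 3 hosts: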

node1:

cd /bigdata/ && mv redis-3.0.0 redis6371 && cp -r redis6371 redis6381 && cp -r redis6371 redis6391

node2:

cd /bigdata/ && mv redis-3.0.0 redis6372 && cp -r redis6372 redis6382 && cp -r redis6372 redis6392

node3:

cd /bigdata/ && mv redis-3.0.0 redis6373 && cp -r redis6373 redis6383 && cp -r redis6373 redis6393

Edit each of the 9 redis.conf files:

set port to the port of that instance, change daemonize to yes, and change cluster-enabled to yes
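Editing nine files by hand is error-prone, so the three changes can also be applied by a small script. The following is a rough Python sketch, not a complete config editor: `apply_overrides` is a hypothetical helper, `SAMPLE` is an assumed three-line excerpt rather than a real redis.conf, and the matching is naive (the first line that starts with the directive name, commented out or not, is rewritten).

```python
import re


def apply_overrides(conf_text, overrides):
    """Return conf_text with each directive in overrides set to the given value."""
    remaining = dict(overrides)
    result = []
    for line in conf_text.splitlines():
        # Matches "key value" as well as a commented-out "# key value".
        match = re.match(r"^\s*#?\s*([a-z][a-z0-9-]*)\s+\S", line)
        key = match.group(1) if match else None
        if key in remaining:
            result.append(f"{key} {remaining.pop(key)}")
        else:
            result.append(line)
    # Directives that never appeared in the file are appended at the end.
    result.extend(f"{key} {value}" for key, value in remaining.items())
    return "\n".join(result) + "\n"


# Assumed excerpt for illustration; check the defaults in your own redis.conf.
SAMPLE = "daemonize no\nport 6379\n# cluster-enabled yes\n"

print(apply_overrides(
    SAMPLE,
    {"port": 6371, "daemonize": "yes", "cluster-enabled": "yes"},
))
```

For real use, the text would be read from and written back to each instance's redis.conf, with the port taken from the cluster plan.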

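On each host, write a script that starts its redis-server instances (the future masters and slaves), 9 instances in total across the 3 hosts

[root@node1 bigdata]# cat allStartRedisServer.node1 # must be written in this form, otherwise the three instances cannot all be started on one host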

cd /bigdata/redis6371/bin && ./redis-server /bigdata/redis6371/redis.conf

cd /bigdata/redis6381/bin && ./redis-server /bigdata/redis6381/redis.conf

cd /bigdata/redis6391/bin && ./redis-server /bigdata/redis6391/redis.conf

[root@node2 bigdata]# cat allStartRedisServer.node2

cd /bigdata/redis6372/bin && ./redis-server /bigdata/redis6372/redis.conf

cd /bigdata/redis6382/bin && ./redis-server /bigdata/redis6382/redis.conf

cd /bigdata/redis6392/bin && ./redis-server /bigdata/redis6392/redis.conf

[root@node3 bigdata]# cat allStartRedisServer.node3

cd /bigdata/redis6373/bin && ./redis-server /bigdata/redis6373/redis.conf

cd /bigdata/redis6383/bin && ./redis-server /bigdata/redis6383/redis.conf

cd /bigdata/redis6393/bin && ./redis-server /bigdata/redis6393/redis.conf

[root@node4 bin]# cat shutdownAllredisInstance # shuts down all 9 instances on the 3 hosts

cd /bigdata/redis-3.0.0/bin

for port in {71,81,91}; do

redis-cli -h 192.168.100.151 -p 63"${port}" shutdown

done

for port in {72,82,92}; do

redis-cli -h 192.168.100.152 -p 63"${port}" shutdown

done

for port in {73,83,93}; do

redis-cli -h 192.168.100.153 -p 63"${port}" shutdown

done

After starting all 9 redis-server instances on the 3 hosts, check the processes and the ports they listen on

[root@node1 bigdata]# ps aux | grep -v grep | grep redis

root 5230 0.0 0.4 137456 7532 ? Ssl 01:24 0:00 ./redis-server *:6371 [cluster]

root 5232 0.0 0.4 137456 7528 ? Ssl 01:24 0:00 ./redis-server *:6381 [cluster]

root 5236 0.0 0.4 137456 7524 ? Ssl 01:24 0:00 ./redis-server *:6391 [cluster]

[root@node2 bigdata]# ps aux | grep -v grep | grep redis

root 4723 0.2 0.4 137456 7528 ? Ssl 01:24 0:00 ./redis-server *:6372 [cluster]

root 4725 0.1 0.4 137456 7532 ? Ssl 01:24 0:00 ./redis-server *:6382 [cluster]

root 4731 0.1 0.4 137456 7528 ? Ssl 01:24 0:00 ./redis-server *:6392 [cluster]

[root@node3 bigdata]# ps aux | grep -v grep | grep redis

root 4735 0.2 0.4 137456 7528 ? Ssl 01:24 0:00 ./redis-server *:6373 [cluster]

root 4737 0.2 0.4 137456 7532 ? Ssl 01:24 0:00 ./redis-server *:6383 [cluster]

root 4739 0.2 0.4 137456 7524 ? Ssl 01:24 0:00 ./redis-server *:6393 [cluster]

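Next, use the redis-trib.rb script to build the highly available master-slave cluster (IP addresses must be used, not host names). Note the order of the arguments: list the ip:port of the intended masters first, then the ip:port of the slaves. Here the intended masters are 6371 on node1, 6372 on node2, and 6373 on node3, and --replicas 2 gives each master two slaves.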
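The argument order described above can be generated instead of typed by hand. This is a small Python sketch under the assumptions of this article's cluster plan (ports 637x for intended masters, 638x and 639x for slaves); `create_args` is a hypothetical helper and only builds the argument list, it does not run redis-trib.rb.

```python
HOSTS = ["192.168.100.151", "192.168.100.152", "192.168.100.153"]


def create_args(hosts, replicas=2):
    """Build the redis-trib.rb arguments: intended masters first, then slaves."""
    masters = [f"{host}:{6371 + i}" for i, host in enumerate(hosts)]
    slaves = [
        f"{host}:{base + i}"
        for i, host in enumerate(hosts)
        for base in (6381, 6391)
    ]
    return ["create", "--replicas", str(replicas)] + masters + slaves


print(" ".join(create_args(HOSTS)))
```

The printed string can be appended to the path of redis-trib.rb; note that the option is spelled with two ASCII hyphens.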

[root@node1 ~]# /bigdata/redis6371/src/redis-trib.rb create --replicas 2 192.168.100.151:6371 192.168.100.152:6372 192.168.100.153:6373 192.168.100.151:6381 192.168.100.151:6391 192.168.100.152:6382 192.168.100.152:6392 192.168.100.153:6383 192.168.100.153:6393

Can I set the above configuration? (type 'yes' to accept): yes

/usr/lib/ruby/gems/1.8/gems/redis-3.0.0/lib/redis/client.rb:79:in `call': ERR Slot 16011 is already busy (Redis::CommandError)

Cause: the slot is already occupied. Old redis data and configuration had not been cleaned up before the cluster was built.

Fix: delete the dump.rdb files, then connect to every instance with redis-cli and run flushall and cluster reset.

[root@node4 bin]# cat flushAllInstencesAndResetCluster # with all 9 instances running, flushes all data and resets the cluster

#!/bin/bash

cd /bigdata/redis-3.0.0/bin

for port in {71,81,91}; do

redis-cli -h 192.168.100.151 -p 63${port} flushall

redis-cli -h 192.168.100.151 -p 63${port} cluster reset

done

for port in {72,82,92}; do

redis-cli -h 192.168.100.152 -p 63${port} flushall

redis-cli -h 192.168.100.152 -p 63${port} cluster reset

done

for port in {73,83,93}; do

redis-cli -h 192.168.100.153 -p 63${port} flushall

redis-cli -h 192.168.100.153 -p 63${port} cluster reset

done

Shut down the 9 instances and delete the old data files

[root@node1 bigdata]# for h in `seq 3`; do ssh node$h …

[root@node1 bigdata]# for h in `seq 3`; do ssh node$h ls -l /bigdata/dump.rdb; done

Then start the 9 instances and run the script again to build the redis cluster:

[root@node1 bigdata]# /bigdata/redis6371/src/redis-trib.rb create --replicas 2 192.168.100.151:6371 192.168.100.152:6372 192.168.100.153:6373 192.168.100.151:6381 192.168.100.151:6391 192.168.100.152:6382 192.168.100.152:6392 192.168.100.153:6383 192.168.100.153:6393

Connecting to node 192.168.100.151:6371: OK

Connecting to node 192.168.100.152:6372: OK

Connecting to node 192.168.100.153:6373: OK

Connecting to node 192.168.100.151:6381: OK

Connecting to node 192.168.100.151:6391: OK

Connecting to node 192.168.100.152:6382: OK

Connecting to node 192.168.100.152:6392: OK

Connecting to node 192.168.100.153:6383: OK

Connecting to node 192.168.100.153:6393: OK

>>> Performing hash slots allocation on 9 nodes...

Using 3 masters:

192.168.100.153:6373

192.168.100.152:6372

192.168.100.151:6371

Adding replica 192.168.100.152:6382 to 192.168.100.153:6373

Adding replica 192.168.100.151:6381 to 192.168.100.153:6373

Adding replica 192.168.100.153:6383 to 192.168.100.152:6372

Adding replica 192.168.100.153:6393 to 192.168.100.152:6372

Adding replica 192.168.100.152:6392 to 192.168.100.151:6371

Adding replica 192.168.100.151:6391 to 192.168.100.151:6371

M: 8e738bced4a402ae26a06db4da97135a5d2cb3b8 192.168.100.151:6371

slots:10923-16383 (5461 slots) master

M: e6114843d6d3408b60a56876041f567efbb83ba8 192.168.100.152:6372

slots:5461-10922 (5462 slots) master

M: 81d86fe9624b1719a1474f6b2e93c2a171a013eb 192.168.100.153:6373

slots:0-5460 (5461 slots) master

S: 827f48d471221589718a46070960de3ec563b079 192.168.100.151:6381

replicates 81d86fe9624b1719a1474f6b2e93c2a171a013eb

S: 0743f14942f57c9e3f75652063dc5c7afd9f3f1d 192.168.100.151:6391

replicates 8e738bced4a402ae26a06db4da97135a5d2cb3b8

S: 52162c0ffdfbc56030480e30af2eaa7c3d87e582 192.168.100.152:6382

replicates 81d86fe9624b1719a1474f6b2e93c2a171a013eb

S: c7c436a7cf8f66a16e253f796c7e0af88b953a19 192.168.100.152:6392

replicates 8e738bced4a402ae26a06db4da97135a5d2cb3b8

S: 51b944e003ea74702923e63c2796b3d0adaeff10 192.168.100.153:6383

replicates e6114843d6d3408b60a56876041f567efbb83ba8

S: 8d0636954e0877c7019782a856f40c7d0260ef81 192.168.100.153:6393

replicates e6114843d6d3408b60a56876041f567efbb83ba8

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join....

>>> Performing Cluster Check (using node 192.168.100.151:6371)

M: 8e738bced4a402ae26a06db4da97135a5d2cb3b8 192.168.100.151:6371

slots:10923-16383 (5461 slots) master

M: e6114843d6d3408b60a56876041f567efbb83ba8 192.168.100.152:6372

slots:5461-10922 (5462 slots) master

M: 81d86fe9624b1719a1474f6b2e93c2a171a013eb 192.168.100.153:6373

slots:0-5460 (5461 slots) master

M: 827f48d471221589718a46070960de3ec563b079 192.168.100.151:6381

slots: (0 slots) master

replicates 81d86fe9624b1719a1474f6b2e93c2a171a013eb

M: 0743f14942f57c9e3f75652063dc5c7afd9f3f1d 192.168.100.151:6391

slots: (0 slots) master

replicates 8e738bced4a402ae26a06db4da97135a5d2cb3b8

M: 52162c0ffdfbc56030480e30af2eaa7c3d87e582 192.168.100.152:6382

slots: (0 slots) master

replicates 81d86fe9624b1719a1474f6b2e93c2a171a013eb

M: c7c436a7cf8f66a16e253f796c7e0af88b953a19 192.168.100.152:6392

slots: (0 slots) master

replicates 8e738bced4a402ae26a06db4da97135a5d2cb3b8

M: 51b944e003ea74702923e63c2796b3d0adaeff10 192.168.100.153:6383

slots: (0 slots) master

replicates e6114843d6d3408b60a56876041f567efbb83ba8

M: 8d0636954e0877c7019782a856f40c7d0260ef81 192.168.100.153:6393

slots: (0 slots) master

replicates e6114843d6d3408b60a56876041f567efbb83ba8

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
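The three ranges in the output above (0-5460, 5461-10922, 10923-16383) are what an even split of 16384 slots over 3 masters looks like. A minimal Python sketch that reproduces them, assuming a rounding-based split where the last master takes whatever remains; `split_slots` is a hypothetical helper, not a redis-trib.rb function:

```python
import math

SLOT_COUNT = 16384


def split_slots(master_count):
    """Divide slots 0..16383 into contiguous ranges, one per master."""
    per_master = SLOT_COUNT / master_count
    ranges = []
    first = 0
    cursor = 0.0
    for index in range(master_count):
        # Round the running boundary to the nearest whole slot.
        last = math.floor(cursor + per_master - 1 + 0.5)
        if index == master_count - 1:
            last = SLOT_COUNT - 1  # the last master takes the remainder
        ranges.append((first, last))
        first = last + 1
        cursor += per_master
    return ranges


for first, last in split_slots(3):
    print(f"slots:{first}-{last} ({last - first + 1} slots)")
```

For 3 masters this prints ranges of 5461, 5462 and 5461 slots, matching the sizes reported for 6373, 6372 and 6371 above.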

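The redis cluster has been built successfully. Now let's test it.

From node4, which runs no redis instance, connect to the cluster with the redis-cli client, log in to one of the cluster nodes, and run a few commands to verify the sharding: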

[root@node4 bin]# redis-cli -h node3 -p 6373

node3:6373> keys *

(empty list or set)

node3:6373> LPUSH listFromNode4 hello tony how you ?

(error) MOVED 10146 192.168.100.152:6372

node3:6373> exit

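Because a Redis cluster has no central node, a client may send a request to any master. A master only executes commands for the slots it is responsible for. For a command on any other slot, it returns a redirection error (MOVED) to the client, and the client has to resend the command to the master that owns the slot, using the address and port contained in the error.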
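A client that wants to follow the redirect first has to pull the slot, host and port out of the error text. A minimal Python sketch of that parsing step, using the reply seen in the session above; `Moved` and `parse_moved` are hypothetical names, not part of any Redis client library:

```python
from typing import NamedTuple, Optional


class Moved(NamedTuple):
    slot: int
    host: str
    port: int


def parse_moved(error_text: str) -> Optional[Moved]:
    """Parse 'MOVED <slot> <host>:<port>'; return None for any other error text."""
    parts = error_text.split()
    if len(parts) != 3 or parts[0] != "MOVED":
        return None
    host, _, port = parts[2].rpartition(":")
    return Moved(int(parts[1]), host, int(port))


target = parse_moved("MOVED 10146 192.168.100.152:6372")
print(target.host, target.port)  # 192.168.100.152 6372
```

redis-cli can also follow these redirects by itself when it is started in cluster mode with the -c option, which avoids the manual reconnects shown in this walkthrough.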

[root@node4 bin]# redis-cli -h node2 -p 6372

node2:6372> keys *

(empty list or set)

node2:6372> LPUSH listFromNode4 hello tony how you ?

(integer) 5

node2:6372> keys *

1) "listFromNode4"

node2:6372> get listFromNodes

(nil)

node2:6372> LRANGE listFromNode4 0 -1

1) "?"

2) "you"

3) "how"

4) "tony"

5) "hello"

Continue writing key-value pairs on node2:6372

node2:6372> set str_key1 nihao

OK

node2:6372> keys *

1) "str_key1"

2) "listFromNode4"

node2:6372> get str_key1

"nihao"

node2:6372> set strkeyFromNode4 "SAY HI FROM node4"

(error) MOVED 2223 192.168.100.153:6373

node2:6372> exit

[root@node4 bin]# redis-cli -h node3 -p 6373

node3:6373> keys *

(empty list or set)

node3:6373> set strkeyFromNode4 "SAY HI FROM node4"

OK

node3:6373> keys *

1) "strkeyFromNode4"

The cluster we just built shards data by slot, so the stored key-value pairs are spread across different nodes, and the key we want to read may live on another node.

node3:6373> keys *

1) "strkeyFromNode4"

node3:6373> get listFromNode4

(error) MOVED 10146 192.168.100.152:6372

node3:6373> exit

[root@node4 bin]# redis-cli -s 192.168.100.152:6372

Could not connect to Redis at 192.168.100.152:6372: No such file or directory

not connected> exit

[root@node4 bin]# redis-cli -h 192.168.100.152 -p 6372

192.168.100.152:6372> keys *

1) "str_key1"

2) "listFromNode4"

192.168.100.152:6372> get listFromNode4

(error) WRONGTYPE Operation against a key holding the wrong kind of value

192.168.100.152:6372> LRANGE listFromNode4 0 -1

1) "?"

2) "you"

3) "how"

4) "tony"

5) "hello"
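The manual reconnects in the sessions above are what a cluster-aware client does automatically. The following Python sketch simulates that loop in memory, without talking to Redis. Everything in it is illustrative: `FakeNode`, `MovedError` and `cluster_set` are hypothetical names, the slot ranges are copied from the redis-trib.rb output, and the two key slots are taken from the MOVED replies rather than computed.

```python
# Slot ownership as reported by redis-trib.rb when the cluster was created.
SLOT_OWNERS = [
    (0, 5460, "192.168.100.153:6373"),
    (5461, 10922, "192.168.100.152:6372"),
    (10923, 16383, "192.168.100.151:6371"),
]

# Slots of the two test keys, as reported by the MOVED replies in the sessions.
KEY_SLOTS = {"listFromNode4": 10146, "strkeyFromNode4": 2223}


class MovedError(Exception):
    def __init__(self, slot, address):
        super().__init__(f"MOVED {slot} {address}")
        self.slot = slot
        self.address = address


def owner_of(slot):
    for first, last, address in SLOT_OWNERS:
        if first <= slot <= last:
            return address
    raise ValueError(f"slot {slot} is out of range")


class FakeNode:
    """In-memory stand-in for a master that only accepts keys in its own slots."""

    def __init__(self, address):
        self.address = address
        self.data = {}

    def set(self, key, value):
        slot = KEY_SLOTS[key]
        owner = owner_of(slot)
        if owner != self.address:
            raise MovedError(slot, owner)
        self.data[key] = value
        return "OK"


def cluster_set(nodes, start_address, key, value):
    """Send SET to start_address and follow MOVED redirects; return the nodes visited."""
    address = start_address
    visited = [address]
    while True:
        try:
            nodes[address].set(key, value)
            return visited
        except MovedError as moved:
            address = moved.address
            visited.append(address)


nodes = {address: FakeNode(address) for _, _, address in SLOT_OWNERS}
print(cluster_set(nodes, "192.168.100.153:6373", "listFromNode4", "hello"))
print(cluster_set(nodes, "192.168.100.152:6372", "strkeyFromNode4", "SAY HI FROM node4"))
```

Each call returns the list of nodes it contacted: the node that was asked first, followed by the owner it was redirected to, which mirrors the two redirects seen in the walkthrough.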

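Conclusion

Using redis-3.0.0 as the example, this article showed how to build a highly available redis cluster that spreads load across its nodes automatically. Later versions are set up in a similar way (from Redis 5 on, the create step is done with redis-cli --cluster create instead of redis-trib.rb); feel free to try it yourself.

If I have missed anything, or if you have any comments, please leave me a message!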