1. Versions

elasticsearch:7.14.2

kibana:7.14.2

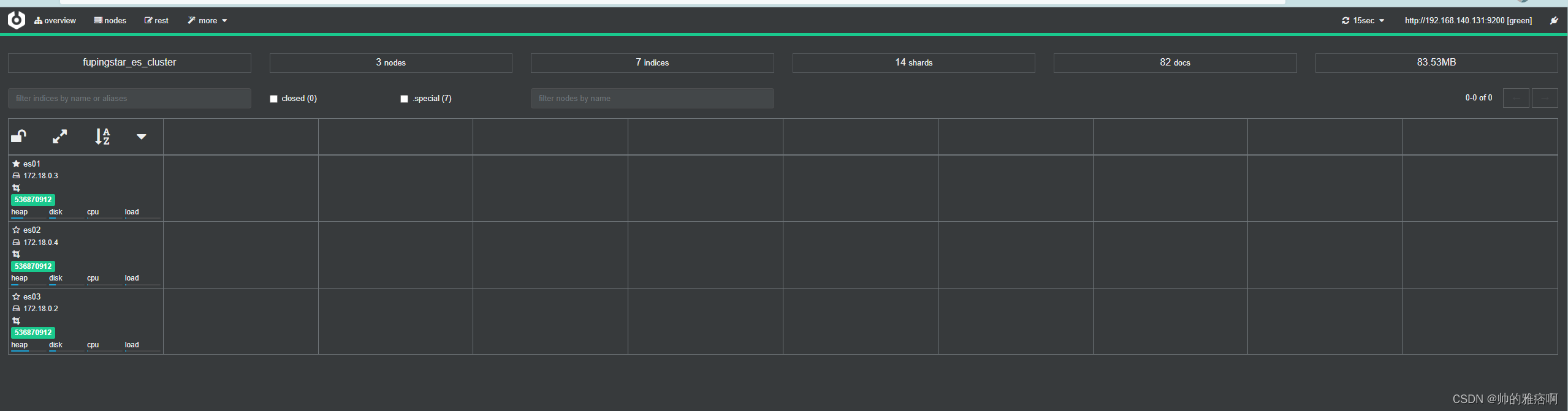

cerebro:0.9.4

logstash:7.14.0

2. Creating the files and getting the data

1)es

This cluster has 3 nodes, so create three folders, es01, es02 and es03, and inside each one create data, conf and plugins folders.
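The folder layout above can be created in one go. This is a minimal sketch: `make_es_dirs` and `ELK_ROOT` are names made up for illustration, and the default of the current directory is an assumption (the compose file later in this post mounts /learn/docker/elk).

```shell
#!/bin/sh
# Create the data, conf and plugins folders for each Elasticsearch node.
# make_es_dirs and ELK_ROOT are illustrative names, not part of any tool.
make_es_dirs() {
  root="$1"
  for node in es01 es02 es03; do
    mkdir -p "$root/$node/data" "$root/$node/conf" "$root/$node/plugins"
  done
}

# Defaults to the current directory; run it from your ELK working
# directory or set ELK_ROOT (for example /learn/docker/elk).
make_es_dirs "${ELK_ROOT:-.}"
```

If a node later fails to write to its data folder, the bind-mounted directory may need to be writable by the user the container runs as (uid 1000 in the official image).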

In each es0x/conf folder, create elasticsearch.yml with the following content:

es01/conf/elasticsearch.yml

cluster.name: fupingstar_es_cluster # cluster name; nodes with the same cluster name form one cluster automatically

node.name: es01 # node name

network.host: 0.0.0.0 # sets both bind_host and publish_host

http.port: 9200 # port for REST clients

transport.tcp.port: 9300 # port for node-to-node communication inside the cluster

node.master: true # master-eligible role

node.data: true # data role

node.ingest: true # ingest role: pre-processes documents through pipelines before indexing, acting like a filter

bootstrap.memory_lock: false

node.max_local_storage_nodes: 1

http.cors.enabled: true # CORS setting

http.cors.allow-origin: /.*/ # CORS setting

es02/conf/elasticsearch.yml

cluster.name: fupingstar_es_cluster

node.name: es02

network.host: 0.0.0.0

http.port: 9201

transport.tcp.port: 9300

node.master: true

node.data: true

node.ingest: true

bootstrap.memory_lock: false

node.max_local_storage_nodes: 1

http.cors.enabled: true

http.cors.allow-origin: /.*/

es03/conf/elasticsearch.yml

cluster.name: fupingstar_es_cluster

node.name: es03

network.host: 0.0.0.0

http.port: 9202

transport.tcp.port: 9300

node.master: true

node.data: true

node.ingest: true

bootstrap.memory_lock: false

node.max_local_storage_nodes: 1

http.cors.enabled: true

http.cors.allow-origin: /.*/

2)kibana

Create a kibana folder and, inside it, a kibana.yml file with the following content.

Note: change the IP address to your own host's.

#

# ** THIS IS AN AUTO-GENERATED FILE **

#

# Default Kibana configuration for docker target

server.name: kibana

server.host: "0"

elasticsearch.hosts: [ "http://192.168.140.131:9200" ]

xpack.monitoring.ui.container.elasticsearch.enabled: true

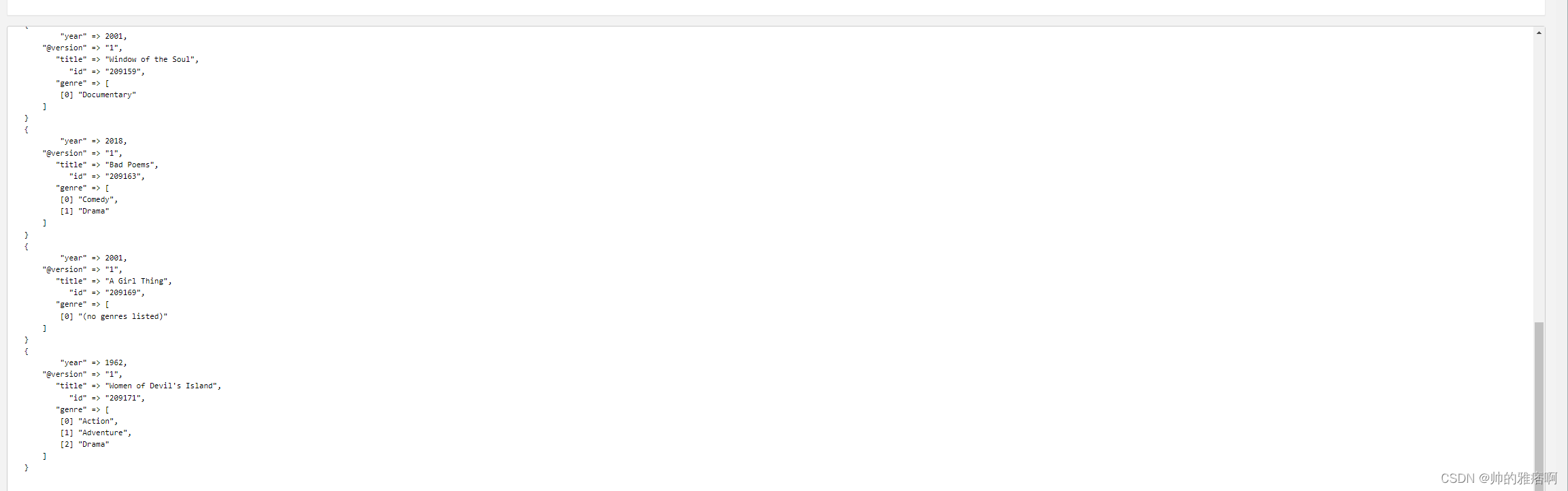

3)logstash

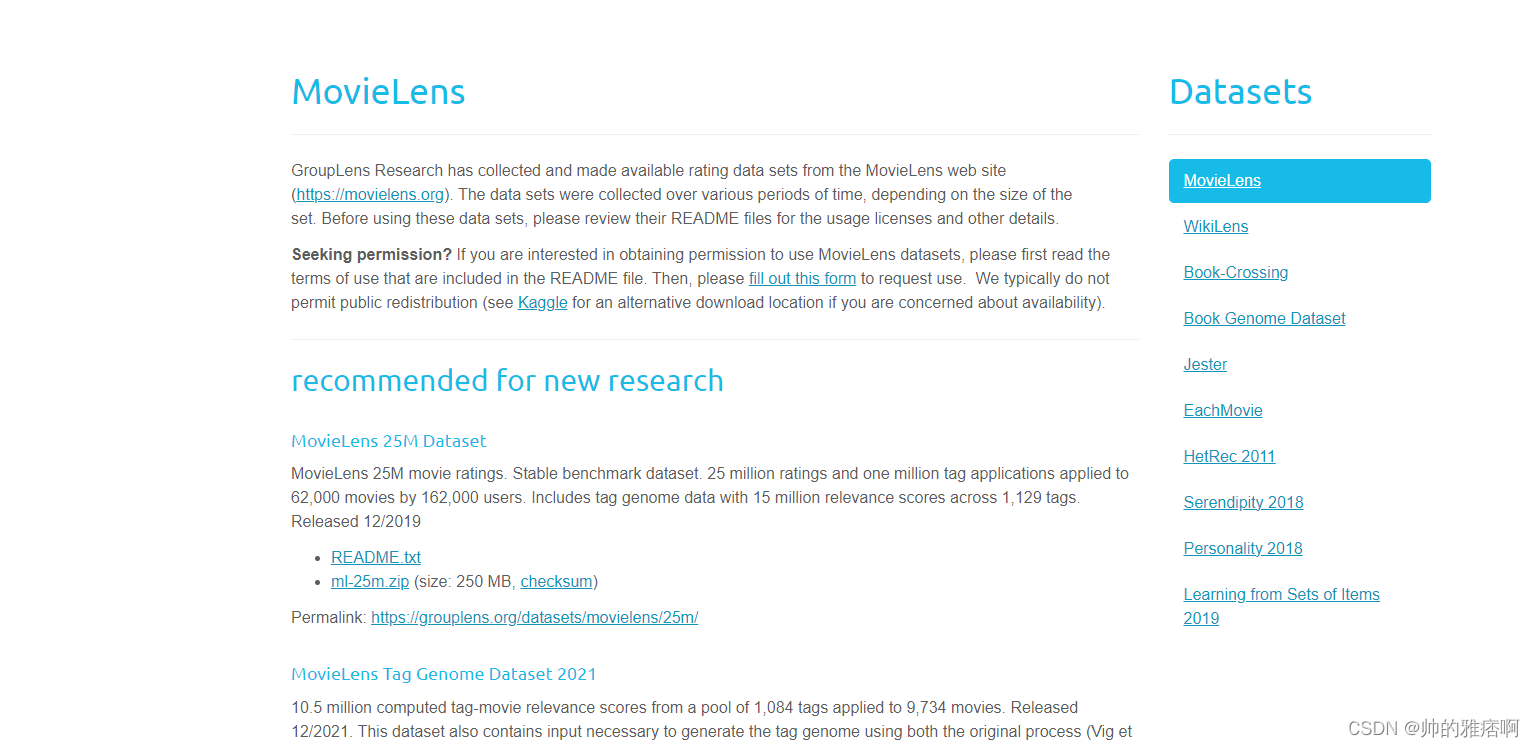

Download the data for logstash from https://grouplens.org/datasets/movielens/

After downloading, unzip the archive:

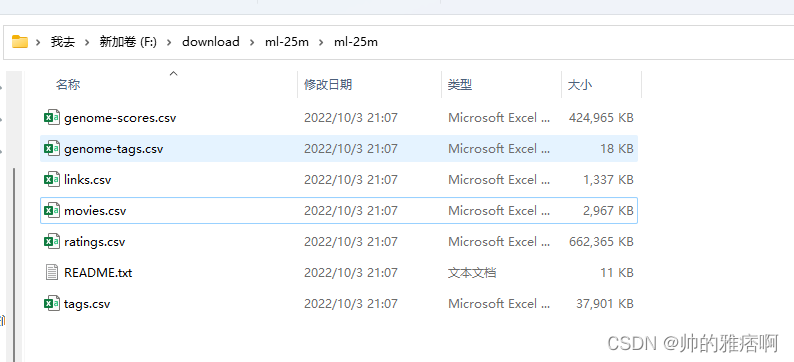

Create a logstash folder with a conf folder and a mydata folder inside it, then put the extracted movies.csv into mydata.

In the conf folder, create the following two files with the content shown:

logstash.yml

http.host: "0.0.0.0"

logstash.conf

input {
  file {
    path => "/usr/share/logstash/data/movies.csv"
    start_position => "beginning"
    sincedb_path => "/dev/null"
  }
}
filter {
  csv {
    separator => ","
    columns => ["id","content","genre"]
  }
  mutate {
    split => { "genre" => "|" }
    remove_field => ["path", "host","@timestamp","message"]
  }
  mutate {
    split => ["content", "("]
    add_field => { "title" => "%{[content][0]}"}
    add_field => { "year" => "%{[content][1]}"}
  }
  mutate {
    convert => {
      "year" => "integer"
    }
    strip => ["title"]
    remove_field => ["path", "host","@timestamp","message","content"]
  }
}
output {
  elasticsearch {
    hosts => "http://192.168.140.131:9200"
    index => "movies"
    document_id => "%{id}"
  }
  stdout {}
}
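To see what the filter section does to one line of movies.csv, here is a rough shell imitation. It is only a sketch, not how Logstash works internally: `parse_movie_row` is a made-up helper, the sample row is for illustration, and unlike the csv filter it does not handle quoted fields that contain commas.

```shell
#!/bin/sh
# Rough imitation of the filter steps for a single CSV row.
# parse_movie_row is a hypothetical helper for illustration only.
parse_movie_row() {
  # csv filter: split the row into id, content, genre
  IFS=, read -r id content genre <<EOF
$1
EOF
  # split content on "(": the first piece is the title
  title="${content%%"("*}"
  # strip: drop trailing spaces from the title
  trailing="${title##*[! ]}"
  title="${title%"$trailing"}"
  # the piece after the first "(" is the year plus a closing ")";
  # keep only the leading digits, similar in spirit to the integer conversion
  year_raw="${content#*"("}"
  year="${year_raw%%[!0-9]*}"
  # split genre on "|" (shown here as a comma-separated list)
  genres=$(printf '%s' "$genre" | tr '|' ',')
  printf 'id=%s title=%s year=%s genre=%s\n' "$id" "$title" "$year" "$genres"
}

parse_movie_row '1,Toy Story (1995),Adventure|Animation|Children|Comedy|Fantasy'
# prints: id=1 title=Toy Story year=1995 genre=Adventure,Animation,Children,Comedy,Fantasy
```

Because the split uses the first "(", a title that itself contains parentheses will probably not yield a usable year, in this sketch and in the pipeline above alike.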

3. Write the docker-compose.yml file.

Adjust the ports, mounted directories and so on to match your environment.

version: '3'
services:
  es01:
    image: elasticsearch:7.14.2
    container_name: es01
    environment:
      - discovery.seed_hosts=es02,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - /learn/docker/elk/es01/data:/usr/share/elasticsearch/data
      - /learn/docker/elk/es01/conf/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - /learn/docker/elk/es01/plugins:/usr/share/elasticsearch/plugins
    ports:
      - 9200:9200
  es02:
    image: elasticsearch:7.14.2
    container_name: es02
    environment:
      - discovery.seed_hosts=es01,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - /learn/docker/elk/es02/data:/usr/share/elasticsearch/data
      - /learn/docker/elk/es02/conf/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - /learn/docker/elk/es02/plugins:/usr/share/elasticsearch/plugins
    ports:
      - 9201:9201
  es03:
    image: elasticsearch:7.14.2
    container_name: es03
    environment:
      - discovery.seed_hosts=es01,es02
      - cluster.initial_master_nodes=es01,es02,es03
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - /learn/docker/elk/es03/data:/usr/share/elasticsearch/data
      - /learn/docker/elk/es03/conf/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - /learn/docker/elk/es03/plugins:/usr/share/elasticsearch/plugins
    ports:
      - 9202:9202
  kibana:
    image: kibana:7.14.2
    container_name: kibana
    depends_on:
      - es01
    environment:
      ELASTICSEARCH_URL: http://es01:9200
      ELASTICSEARCH_HOSTS: http://es01:9200
    volumes:
      - /learn/docker/elk/kibana/kibana.yml:/usr/share/kibana/config/kibana.yml
    ports:
      - 5601:5601
  cerebro:
    image: lmenezes/cerebro:0.9.4
    container_name: cerebro
    ports:
      - "9801:9000"
    command:
      - -Dhosts.0.host=http://192.168.140.131:9200
  logstash:
    image: logstash:7.14.0
    container_name: elk_logstash
    restart: always
    volumes:
      - /learn/docker/elk/logstash/conf/logstash.conf:/usr/share/logstash/pipeline/logstash.conf:rw
      - /learn/docker/elk/logstash/conf/logstash.yml:/usr/share/logstash/config/logstash.yml
      - /learn/docker/elk/logstash/mydata/movies.csv:/usr/share/logstash/data/movies.csv
    depends_on:
      - es01
    ports:
      - 4560:4560

4. Start the cluster by running the following command in the directory that contains the docker-compose file:

docker-compose up -d
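If the Elasticsearch containers exit shortly after starting, one common cause on Linux hosts is a `vm.max_map_count` value below 262144, which Elasticsearch checks at startup when it binds to a non-loopback address as in the configuration above. The sketch below only inspects the host setting. `map_count_ok` and `REQUIRED_MAP_COUNT` are illustrative names, and whether this applies to your host is an assumption to verify against the output of `docker-compose logs es01`.

```shell
#!/bin/sh
# Check the host's vm.max_map_count against the minimum Elasticsearch expects.
# map_count_ok and REQUIRED_MAP_COUNT are illustrative names.
REQUIRED_MAP_COUNT=262144

map_count_ok() {
  [ "$1" -ge "$REQUIRED_MAP_COUNT" ]
}

# Fall back to 0 when sysctl is unavailable (for example on non-Linux hosts).
current=$(sysctl -n vm.max_map_count 2>/dev/null || echo 0)

if map_count_ok "$current"; then
  echo "vm.max_map_count=$current looks sufficient"
else
  echo "vm.max_map_count=$current is below $REQUIRED_MAP_COUNT"
  echo "try: sudo sysctl -w vm.max_map_count=$REQUIRED_MAP_COUNT"
fi
```

After adjusting the value, run `docker-compose up -d` again and use `docker-compose ps` to confirm that all containers stay up.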

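5. Once everything has started, visit the services: Elasticsearch at http://192.168.140.131:9200, Kibana on port 5601 and cerebro on port 9801 of the same host.

Check the logstash container's logs; you can see the data import running.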

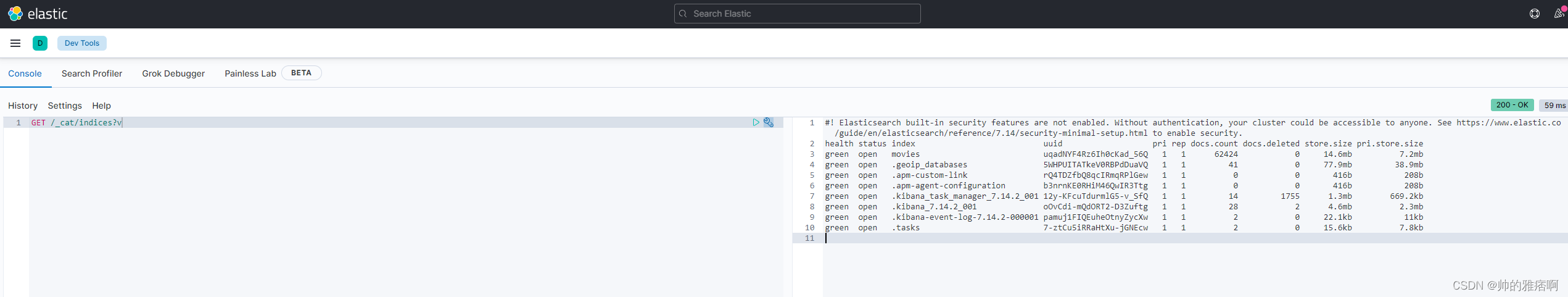

In Kibana's Dev Tools, run GET /_cat/indices?v. The movies index appears in the output, which shows that the data was imported successfully.
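The same check can be done from a terminal with curl. This is a minimal sketch: `es_url` and `check_cluster` are made-up helper names, and the default address is the one used in this post, so replace it with your own host IP.

```shell
#!/bin/sh
# Query the cluster over HTTP instead of Kibana Dev Tools.
# es_url and check_cluster are illustrative helpers; ES_URL defaults to
# the address used in this post.
ES_URL="${ES_URL:-http://192.168.140.131:9200}"

# Build a full URL from a request path.
es_url() {
  printf '%s%s' "$ES_URL" "$1"
}

check_cluster() {
  # List the nodes that joined the cluster (es01, es02, es03 are expected)
  curl -s --max-time 5 "$(es_url '/_cat/nodes?v')"
  # Show the movies index
  curl -s --max-time 5 "$(es_url '/_cat/indices/movies?v')"
  # Count the imported documents
  curl -s --max-time 5 "$(es_url '/movies/_count?pretty')"
}
```

Call `check_cluster` once the containers are up. If the movies index is missing, the logstash import may still be running.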