basic

1. description

basic files

<home>/app

├── CMakeLists.txt
├── prj.conf
└── src
    └── main.c

# SPDX-License-Identifier: Apache-2.0

# CMakeLists.txt

cmake_minimum_required(VERSION 3.13.1)

find_package(Zephyr HINTS $ENV{ZEPHYR_BASE})

project(blinky)

target_sources(app PRIVATE src/main.c)

# prj.conf

CONFIG_GPIO=y

/*
 * main.c
 * Copyright (c) 2016 Intel Corporation
 *
 * SPDX-License-Identifier: Apache-2.0
 */

#include <zephyr.h>

#include <device.h>

#include <devicetree.h>

#include <drivers/gpio.h>

/* 1000 msec = 1 sec */

#define SLEEP_TIME_MS 1000

/* The devicetree node identifier for the "led0" alias. */

#define LED0_NODE DT_ALIAS(led0)

#if DT_HAS_NODE(LED0_NODE)

#define LED0 DT_GPIO_LABEL(LED0_NODE, gpios)

#define PIN DT_GPIO_PIN(LED0_NODE, gpios)

#if DT_PHA_HAS_CELL(LED0_NODE, gpios, flags)

#define FLAGS DT_GPIO_FLAGS(LED0_NODE, gpios)

#endif

#else

/* A build error here means your board isn't set up to blink an LED. */

#error "Unsupported board: led0 devicetree alias is not defined"

#define LED0 ""

#define PIN 0

#endif

#ifndef FLAGS

#define FLAGS 0

#endif

void main(void)
{
    struct device *dev;
    bool led_is_on = true;
    int ret;

    dev = device_get_binding(LED0);
    if (dev == NULL) {
        return;
    }

    ret = gpio_pin_configure(dev, PIN, GPIO_OUTPUT_ACTIVE | FLAGS);
    if (ret < 0) {
        return;
    }

    while (1) {
        gpio_pin_set(dev, PIN, (int)led_is_on);
        led_is_on = !led_is_on;
        k_msleep(SLEEP_TIME_MS);
    }
}

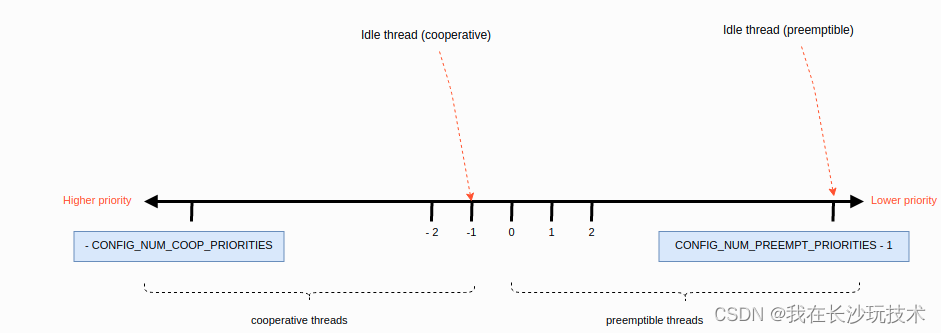

2. threads

priority

stack

K_KERNEL_STACK_* and K_THREAD_STACK_* macros are distinct only when CONFIG_USERSPACE=y

when CONFIG_USERSPACE=n, K_THREAD_STACK_* is the same as K_KERNEL_STACK_*

define a thread stack with K_THREAD_STACK_DEFINE

differences from other OSes:

- the thread entry function always takes 3 parameters (p1, p2, p3)

- a thread can be given a startup delay; pass K_NO_WAIT for no delay
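The three-parameter convention can be tried on a host machine without Zephyr. The sketch below is a plain C model, not kernel code: `entry_fn_t` has the same shape as `k_thread_entry_t`, while `struct blink_cfg`, `blink_entry`, and `run_entry` are hypothetical names made up for the illustration. It shows a context pointer packed into `p1` with the unused slots passed as NULL.

```c
#include <assert.h>
#include <stddef.h>

/* Same shape as k_thread_entry_t: always three void pointers. */
typedef void (*entry_fn_t)(void *p1, void *p2, void *p3);

/* Hypothetical per-thread context, passed through p1. */
struct blink_cfg {
    int pin;
    int period_ms;
    int runs;   /* incremented each time the entry function runs */
};

/* Hypothetical entry function: p1 carries the context, p2 and p3 are unused. */
void blink_entry(void *p1, void *p2, void *p3)
{
    struct blink_cfg *cfg = p1;

    (void)p2;
    (void)p3;
    assert(cfg != NULL);
    cfg->runs++;
}

/* Stand-in for the kernel invoking the entry point when the thread starts. */
void run_entry(entry_fn_t entry, void *p1, void *p2, void *p3)
{
    assert(entry != NULL);
    entry(p1, p2, p3);
}
```

With the real API, the same entry function would presumably be handed to `k_thread_create()` or `K_THREAD_DEFINE()` with `&cfg, NULL, NULL` as `p1, p2, p3`.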

functions

// macro, static creation

// options: K_ESSENTIAL, K_FP_REGS, K_SSE_REGS; combine with '|'

#define K_THREAD_DEFINE(name, stack_size, entry, p1, p2, p3, prio, options, delay)

// dynamic

k_tid_t k_thread_create(struct k_thread *new_thread,
                        k_thread_stack_t *stack,
                        size_t stack_size,
                        k_thread_entry_t entry,
                        void *p1, void *p2, void *p3,
                        int prio, u32_t options, k_timeout_t delay);

// start/resume/abort/join

void k_thread_start(k_tid_t thread);

void k_thread_resume(k_tid_t thread);

int k_thread_join(struct k_thread *thread, k_timeout_t timeout);

void k_thread_abort(k_tid_t thread);

// sleep

s32_t k_msleep(s32_t ms);

s32_t k_usleep(s32_t us);

s32_t k_sleep(k_timeout_t timeout);

3. thread sync

# special timeout values

K_NO_WAIT and K_FOREVER

# calls made from an ISR must use K_NO_WAIT

# irq lock

unsigned int irq_lock(void);

void irq_unlock(unsigned int key);

# sem

int k_sem_take(struct k_sem *sem, k_timeout_t timeout);

void k_sem_give(struct k_sem *sem);

void k_sem_reset(struct k_sem *sem);

unsigned int k_sem_count_get(struct k_sem *sem);

// static create

#define K_SEM_DEFINE(name, initial_count, count_limit) \
    Z_STRUCT_SECTION_ITERABLE(k_sem, name) = \
        Z_SEM_INITIALIZER(name, initial_count, count_limit); \
    BUILD_ASSERT(((count_limit) != 0) && \
                 ((initial_count) <= (count_limit)));

// dynamic create

int k_sem_init(struct k_sem *sem, unsigned int initial_count,
               unsigned int limit);
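The counting rules behind `initial_count` and `count_limit` can be modelled on a host machine. The sketch below is not Zephyr code: `struct model_sem` and the `model_sem_*` functions are hypothetical, nothing blocks, and the error codes are picked for the model. It shows that a give never pushes the count past the limit and that a no-wait take fails when the count is zero.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical host-side model of a counting semaphore (no blocking). */
struct model_sem {
    unsigned int count;
    unsigned int limit;
};

/* Same constraints that K_SEM_DEFINE checks with BUILD_ASSERT. */
int model_sem_init(struct model_sem *sem, unsigned int initial_count,
                   unsigned int limit)
{
    assert(sem != NULL);
    if (limit == 0 || initial_count > limit) {
        return -EINVAL;
    }
    sem->count = initial_count;
    sem->limit = limit;
    return 0;
}

/* Give: the count goes up by one but never past the limit. */
void model_sem_give(struct model_sem *sem)
{
    assert(sem != NULL);
    if (sem->count < sem->limit) {
        sem->count++;
    }
}

/* Take without waiting: succeeds only if the count is non-zero. */
int model_sem_take_no_wait(struct model_sem *sem)
{
    assert(sem != NULL);
    if (sem->count == 0) {
        return -EBUSY;
    }
    sem->count--;
    return 0;
}
```

A limit of 1 makes the model behave like a binary semaphore, which is a common way to signal a thread from an ISR.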

# mutex

// static create

#define K_MUTEX_DEFINE(name) \
    Z_STRUCT_SECTION_ITERABLE(k_mutex, name) = \
        _K_MUTEX_INITIALIZER(name)

// dynamic create

int k_mutex_init(struct k_mutex *mutex);

// A thread is permitted to lock a mutex it has already locked. The operation

// completes immediately and the lock count is increased by 1.

int k_mutex_lock(struct k_mutex *mutex, k_timeout_t timeout);

// The mutex cannot be claimed by another thread until it has been unlocked by

// the calling thread as many times as it was previously locked by that

// thread.

int k_mutex_unlock(struct k_mutex *mutex);
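The lock-count rule described in the comments above can be checked with a small host-side model. This is a sketch under assumptions, not the kernel implementation: `struct model_mutex` and the `model_mutex_*` functions are hypothetical, threads are plain integer ids, nothing blocks, priority inheritance is ignored, and the error codes are picked for the model.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical model: owner is an integer thread id, 0 means "no owner". */
struct model_mutex {
    int owner;
    unsigned int lock_count;
};

/* Lock without waiting: a free mutex or a re-lock by the owner succeeds. */
int model_mutex_lock(struct model_mutex *m, int thread_id)
{
    assert(m != NULL && thread_id != 0);
    if (m->lock_count == 0) {
        m->owner = thread_id;
        m->lock_count = 1;
        return 0;
    }
    if (m->owner == thread_id) {
        m->lock_count++;    /* recursive lock by the owner */
        return 0;
    }
    return -EBUSY;          /* held by another thread */
}

/* Unlock: the mutex becomes free only when the count drops back to zero. */
int model_mutex_unlock(struct model_mutex *m, int thread_id)
{
    assert(m != NULL);
    if (m->lock_count == 0) {
        return -EINVAL;     /* not locked */
    }
    if (m->owner != thread_id) {
        return -EPERM;      /* caller is not the owner */
    }
    if (--m->lock_count == 0) {
        m->owner = 0;
    }
    return 0;
}
```

In the model, a thread that locks twice must unlock twice before another thread can take the mutex.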

# queue

void k_queue_init(struct k_queue *queue);

void k_queue_cancel_wait(struct k_queue *queue);

extern void k_queue_append(struct k_queue *queue, void *data);

s32_t k_queue_alloc_append(struct k_queue *queue, void *data);

extern void k_queue_prepend(struct k_queue *queue, void *data);

s32_t k_queue_alloc_prepend(struct k_queue *queue, void *data);

void k_queue_insert(struct k_queue *queue, void *prev, void *data);

int k_queue_append_list(struct k_queue *queue, void *head, void *tail);

int k_queue_merge_slist(struct k_queue *queue, sys_slist_t *list);

void *k_queue_get(struct k_queue *queue, k_timeout_t timeout);

bool k_queue_remove(struct k_queue *queue, void *data);

bool k_queue_unique_append(struct k_queue *queue, void *data);

int k_queue_is_empty(struct k_queue *queue);

void *k_queue_peek_head(struct k_queue *queue);

void *k_queue_peek_tail(struct k_queue *queue);

#define K_QUEUE_DEFINE(name) \
    Z_STRUCT_SECTION_ITERABLE(k_queue, name) = \
        _K_QUEUE_INITIALIZER(name)

# msgq

// static create

#define K_MSGQ_DEFINE(q_name, q_msg_size, q_max_msgs, q_align) \
    static char __noinit __aligned(q_align) \
        _k_fifo_buf_##q_name[(q_max_msgs) * (q_msg_size)]; \
    Z_STRUCT_SECTION_ITERABLE(k_msgq, q_name) = \
        _K_MSGQ_INITIALIZER(q_name, _k_fifo_buf_##q_name, \
                            q_msg_size, q_max_msgs)

// dynamic create

void k_msgq_init(struct k_msgq *q, char *buffer, size_t msg_size,
                 u32_t max_msgs);

int k_msgq_alloc_init(struct k_msgq *msgq, size_t msg_size,
                      u32_t max_msgs);

int k_msgq_cleanup(struct k_msgq *msgq);

int k_msgq_put(struct k_msgq *msgq, void *data, k_timeout_t timeout);

int k_msgq_get(struct k_msgq *msgq, void *data, k_timeout_t timeout);

// read the message at the head of the queue without removing it

int k_msgq_peek(struct k_msgq *msgq, void *data);

void k_msgq_purge(struct k_msgq *msgq);

u32_t k_msgq_num_free_get(struct k_msgq *msgq);

u32_t k_msgq_num_used_get(struct k_msgq *msgq);
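Unlike k_queue, a msgq copies fixed-size messages into its own buffer, which is why K_MSGQ_DEFINE reserves `q_max_msgs * q_msg_size` bytes. The sketch below is a host-side model of that ring of slots, not the kernel code: `struct model_msgq`, the `model_msgq_*` functions, and the two size constants are hypothetical, nothing blocks, and the error code is picked for the model.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MODEL_MSG_SIZE sizeof(int32_t)  /* bytes per message (hypothetical) */
#define MODEL_MAX_MSGS 2                /* queue capacity (hypothetical) */

/* Hypothetical model of a message queue: a ring of fixed-size slots. */
struct model_msgq {
    char buffer[MODEL_MAX_MSGS * MODEL_MSG_SIZE];
    size_t read_idx;    /* slot holding the oldest message */
    size_t used;        /* number of queued messages */
};

/* Put without waiting: the message bytes are copied into the ring. */
int model_msgq_put(struct model_msgq *q, const void *data)
{
    assert(q != NULL && data != NULL);
    if (q->used == MODEL_MAX_MSGS) {
        return -ENOMSG; /* full */
    }
    size_t slot = (q->read_idx + q->used) % MODEL_MAX_MSGS;

    memcpy(&q->buffer[slot * MODEL_MSG_SIZE], data, MODEL_MSG_SIZE);
    q->used++;
    return 0;
}

/* Peek: copy the oldest message out but leave it queued. */
int model_msgq_peek(const struct model_msgq *q, void *data)
{
    assert(q != NULL && data != NULL);
    if (q->used == 0) {
        return -ENOMSG; /* empty */
    }
    memcpy(data, &q->buffer[q->read_idx * MODEL_MSG_SIZE], MODEL_MSG_SIZE);
    return 0;
}

/* Get without waiting: peek, then drop the oldest message. */
int model_msgq_get(struct model_msgq *q, void *data)
{
    int ret = model_msgq_peek(q, data);

    if (ret == 0) {
        q->read_idx = (q->read_idx + 1) % MODEL_MAX_MSGS;
        q->used--;
    }
    return ret;
}
```

Because the data is copied, the sender's variable can be reused as soon as the put returns.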

# spin_lock

k_spinlock_key_t k_spin_lock(struct k_spinlock *l);

void k_spin_unlock(struct k_spinlock *l, k_spinlock_key_t key);

# poll_event

extern void k_poll_event_init(struct k_poll_event *event, u32_t type,
                              int mode, void *obj);

int k_poll(struct k_poll_event *events, int num_events,
           k_timeout_t timeout);

void k_poll_signal_init(struct k_poll_signal *signal);

void k_poll_signal_reset(struct k_poll_signal *signal);

void k_poll_signal_check(struct k_poll_signal *signal,
                         unsigned int *signaled, int *result);

int k_poll_signal_raise(struct k_poll_signal *signal, int result);

# atomic

// compare and set

bool atomic_cas(atomic_t *target, atomic_val_t old_value,
                atomic_val_t new_value);

bool atomic_ptr_cas(atomic_ptr_t *target, void *old_value,
                    void *new_value);

atomic_val_t atomic_add(atomic_t *target, atomic_val_t value);

atomic_val_t atomic_sub(atomic_t *target, atomic_val_t value);

atomic_val_t atomic_inc(atomic_t *target);

atomic_val_t atomic_dec(atomic_t *target);

atomic_val_t atomic_get(const atomic_t *target);

void *atomic_ptr_get(const atomic_ptr_t *target);

atomic_val_t atomic_set(atomic_t *target, atomic_val_t value);

void *atomic_ptr_set(atomic_ptr_t *target, void *value);

atomic_val_t atomic_clear(atomic_t *target);

void *atomic_ptr_clear(atomic_ptr_t *target);

atomic_val_t atomic_or(atomic_t *target, atomic_val_t value);

atomic_val_t atomic_xor(atomic_t *target, atomic_val_t value);

atomic_val_t atomic_nand(atomic_t *target, atomic_val_t value);
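Compare-and-set is the building block for updates that the fixed operations above do not cover, for example "increment, but only up to a maximum". The sketch below uses C11 `<stdatomic.h>` as a stand-in so it runs on a host machine; it does not use Zephyr's atomic API, and `bounded_inc` is a hypothetical helper.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

/*
 * Hypothetical helper: increment *target only while it is below max.
 * Returns true if the increment happened. C11 atomics stand in for
 * the get and compare-and-set calls listed above.
 */
bool bounded_inc(atomic_long *target, long max)
{
    assert(target != NULL);
    long old = atomic_load(target);

    while (old < max) {
        /* On failure, old is refreshed with the current value. */
        if (atomic_compare_exchange_weak(target, &old, old + 1)) {
            return true;
        }
    }
    return false;
}
```

One difference to keep in mind: the C11 call rewrites `old` on failure, whereas `atomic_cas()` as declared above only returns a bool, so a Zephyr version of this loop would presumably call `atomic_get()` again before retrying.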

# more to learn

notify, mbox, work_q

4. timer

void k_timer_init(struct k_timer *timer,
                  k_timer_expiry_t expiry_fn,
                  k_timer_stop_t stop_fn);

void k_timer_start(struct k_timer *timer,
                   k_timeout_t duration, k_timeout_t period);

void k_timer_stop(struct k_timer *timer);

u32_t k_timer_status_get(struct k_timer *timer);

u32_t k_timer_status_sync(struct k_timer *timer);

k_ticks_t k_timer_expires_ticks(struct k_timer *timer);

k_ticks_t k_timer_remaining_ticks(struct k_timer *timer);

u32_t k_timer_remaining_get(struct k_timer *timer);

s64_t k_uptime_ticks(void);

s64_t k_uptime_get(void); // unit ms

u32_t k_cycle_get_32(void);
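The `duration` and `period` arguments of k_timer_start, and the "expirations since the last read" behaviour of a status read, can be worked through with a small model. This is a host-side sketch under assumptions, not kernel code: `struct model_timer` and the `model_timer_*` functions are hypothetical, the timer is taken to start at t = 0, a period of 0 stands for a one-shot timer, and tick rounding is ignored.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model of a timer started at t = 0. */
struct model_timer {
    uint32_t duration_ms;   /* delay before the first expiry */
    uint32_t period_ms;     /* interval between later expiries, 0 = one-shot */
    uint32_t reported;      /* expirations already returned to the caller */
};

/* Total expirations of an ideal timer up to and including now_ms. */
uint32_t model_timer_expired(const struct model_timer *t, uint32_t now_ms)
{
    assert(t != NULL);
    if (now_ms < t->duration_ms) {
        return 0;           /* first expiry not reached yet */
    }
    if (t->period_ms == 0) {
        return 1;           /* one-shot: expires exactly once */
    }
    return 1 + (now_ms - t->duration_ms) / t->period_ms;
}

/* Status read: return expirations since the previous read, then reset. */
uint32_t model_timer_status_get(struct model_timer *t, uint32_t now_ms)
{
    uint32_t total = model_timer_expired(t, now_ms);
    uint32_t fresh = total - t->reported;

    t->reported = total;
    return fresh;
}
```

For a duration of 100 ms and a period of 50 ms, the model expires at 100, 150, 200, 250 ms and so on.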

5. utils

# fifo: a thin wrapper around k_queue, thread safe

#define Z_FIFO_INITIALIZER(obj) \
    { \
    ._queue = _K_QUEUE_INITIALIZER(obj._queue) \
    }

#define k_fifo_init(fifo) \
    k_queue_init(&(fifo)->_queue)

#define k_fifo_is_empty(fifo) \
    k_queue_is_empty(&(fifo)->_queue)

#define k_fifo_peek_head(fifo) \
    k_queue_peek_head(&(fifo)->_queue)

#define k_fifo_peek_tail(fifo) \
    k_queue_peek_tail(&(fifo)->_queue)

#define K_FIFO_DEFINE(name) \
    Z_STRUCT_SECTION_ITERABLE(k_fifo, name) = \
        Z_FIFO_INITIALIZER(name)
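Because the fifo only wraps a linked-list queue, items are linked in place rather than copied: the first word of each item is reserved for the queue's link pointer. The sketch below is a host-side model of that intrusive layout, not the kernel code: `struct model_item`, `struct model_fifo`, and the `model_fifo_*` functions are hypothetical, and nothing blocks or locks.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical item: the first member is reserved for the queue's link. */
struct model_item {
    void *reserved; /* used by the queue as the "next" pointer */
    int value;      /* application payload */
};

/* Hypothetical model of a FIFO built on a singly linked list. */
struct model_fifo {
    void *head;
    void *tail;
};

/* Append at the tail; the queue writes its link into the item's first word. */
void model_fifo_put(struct model_fifo *fifo, void *item)
{
    assert(fifo != NULL && item != NULL);
    *(void **)item = NULL;
    if (fifo->tail != NULL) {
        *(void **)fifo->tail = item;
    } else {
        fifo->head = item;
    }
    fifo->tail = item;
}

/* Remove from the head without waiting; NULL means the FIFO is empty. */
void *model_fifo_get(struct model_fifo *fifo)
{
    assert(fifo != NULL);
    void *item = fifo->head;

    if (item != NULL) {
        fifo->head = *(void **)item;
        if (fifo->head == NULL) {
            fifo->tail = NULL;
        }
    }
    return item;
}
```

A consequence of this layout is that an item must stay valid while it is queued and must not be put on two queues at once.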

# lifo: also a thin wrapper around k_queue

same pattern as the fifo macros above

# rbtree

void rb_insert(struct rbtree *tree, struct rbnode *node);

void rb_remove(struct rbtree *tree, struct rbnode *node);

struct rbnode *rb_get_min(struct rbtree *tree);

struct rbnode *rb_get_max(struct rbtree *tree);

bool rb_contains(struct rbtree *tree, struct rbnode *node);

void rb_walk(struct rbtree *tree, rb_visit_t visit_fn,
             void *cookie);

struct rbnode *z_rb_foreach_next(struct rbtree *tree, struct _rb_foreach *f);

#define RB_FOR_EACH(tree, node) \
    for (struct _rb_foreach __f = _RB_FOREACH_INIT(tree, node); \
         (node = z_rb_foreach_next(tree, &__f)); \
         /**/)

# list

dlist and slist: utility containers, used much like the rbtree above

6. interrupt

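Interrupts that are outside the kernel's control (zero-latency interrupts) require CONFIG_ZERO_LATENCY_IRQS to be enabled, and their handlers must not use system calls.

A regular interrupt is set up as follows.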

The following code defines and enables an ISR.

#define MY_DEV_IRQ 24 /* device uses IRQ 24 */

#define MY_DEV_PRIO 2 /* device uses interrupt priority 2 */

/* argument passed to my_isr(), in this case a pointer to the device */

#define MY_ISR_ARG DEVICE_GET(my_device)

#define MY_IRQ_FLAGS 0 /* IRQ flags */

void my_isr(void *arg)
{
    ... /* ISR code */
}

void my_isr_installer(void)
{
    ...
    IRQ_CONNECT(MY_DEV_IRQ, MY_DEV_PRIO, my_isr, MY_ISR_ARG, MY_IRQ_FLAGS);
    irq_enable(MY_DEV_IRQ);
    ...
}

Since the IRQ_CONNECT macro requires that all its parameters be known at build time, in some cases this may not be acceptable. It is also possible to install interrupts at runtime with irq_connect_dynamic(). It is used in exactly the same way as IRQ_CONNECT:

void my_isr_installer(void)
{
    ...
    irq_connect_dynamic(MY_DEV_IRQ, MY_DEV_PRIO, my_isr, MY_ISR_ARG,
                        MY_IRQ_FLAGS);
    irq_enable(MY_DEV_IRQ);
    ...
}

Dynamic interrupts require the CONFIG_DYNAMIC_INTERRUPTS option to be enabled. Removing or re-configuring a dynamic interrupt is currently unsupported.

7. memory

// system heap, size set by CONFIG_HEAP_MEM_POOL_SIZE

// user heap

k_heap_xxx

// user pool

k_mem_pool

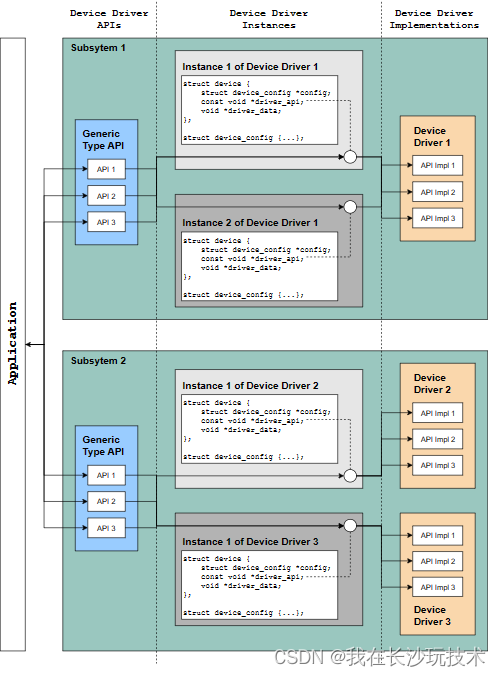

8. device

struct device {
    const char *name;
    const void *config;  /* read-only configuration struct */
    const void *api;     /* API function table; see the subsystem the driver belongs to */
    void * const data;
};
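How the `api` and `data` pointers are meant to be used can be sketched with a host-side model. Every name below is hypothetical (`struct model_device` mirrors the struct above under a different name, and the LED "subsystem" is made up). The point is the pattern: a subsystem wrapper casts `dev->api` to its function table and dispatches to the driver, which keeps its mutable state behind `dev->data`.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical mirror of the struct above, renamed to avoid clashing with Zephyr. */
struct model_device {
    const char *name;
    const void *config;
    const void *api;
    void * const data;
};

/* Hypothetical subsystem API: one function pointer per operation. */
struct model_led_api {
    int (*set)(const struct model_device *dev, int on);
};

/* Hypothetical driver pieces: read-only config and mutable runtime data. */
struct model_led_config {
    int pin;
};

struct model_led_data {
    int state;
};

/* Driver implementation: runtime state lives behind dev->data. */
int model_led_set_impl(const struct model_device *dev, int on)
{
    struct model_led_data *data = dev->data;

    data->state = on ? 1 : 0;
    return 0;
}

/* Subsystem wrapper: callers use this and never touch dev->api directly. */
int model_led_set(const struct model_device *dev, int on)
{
    assert(dev != NULL && dev->api != NULL);
    const struct model_led_api *api = dev->api;

    return api->set(dev, on);
}
```

This matches how the blinky sample reads: the application calls the GPIO subsystem functions with a device pointer and never sees the driver's own types.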