1. Environment (configure the IP address and hostname during installation)

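| No. | Host IP | Hostname | OS | Notes |
| --- | --- | --- | --- | --- |
| 1 | 192.168.3.102 | master1 | Rocky Linux 8.6 (minimal install) | Control-plane node |
| 2 | 192.168.3.103 | master2 | Rocky Linux 8.6 (minimal install) | Control-plane node |
| 3 | 192.168.3.104 | master3 | Rocky Linux 8.6 (minimal install) | Control-plane node |
| 4 | 192.168.3.105 | node1 | Rocky Linux 8.6 (minimal install) | Worker node |
| 5 | 192.168.3.106 | node2 | Rocky Linux 8.6 (minimal install) | Worker node |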

2. Disable SELinux, firewalld, and swap (run on all five machines)

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

systemctl disable firewalld

swapoff -a

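reboot

Note: swapoff -a only disables swap temporarily, so swap comes back after a reboot. To disable it permanently, comment out the swap line in /etc/fstab (vi /etc/fstab) before rebooting.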
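The permanent change to /etc/fstab described in the note can also be scripted. The following is a minimal sketch, not part of the original procedure: comment_swap is a hypothetical helper name, and it assumes the swap entry has the filesystem type "swap" in a whitespace-separated field.

```shell
# comment_swap (hypothetical helper): comment out every active swap entry
# in an fstab-style file. The path is an argument so the function can be
# tried on a copy before touching /etc/fstab.
comment_swap() {
  local fstab="$1"
  # Prefix uncommented lines that contain a whitespace-delimited "swap"
  # field with '#'. The original file is kept as <file>.bak.
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Usage (as root, on each machine):
#   comment_swap /etc/fstab
```

Running the function on a copy of /etc/fstab first makes it easy to confirm that only the swap line changes.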

3. Edit /etc/hosts and add the following five lines (on all five machines)

cat <<EOF >> /etc/hosts

192.168.3.102 master1

192.168.3.103 master2

192.168.3.104 master3

192.168.3.105 node1

192.168.3.106 node2

EOF
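Because the heredoc above appends with >>, running it twice duplicates the entries. A minimal sketch of an idempotent alternative follows; add_host_entry is a hypothetical helper name, and the file argument exists only so the function can be tried on a copy.

```shell
# add_host_entry (hypothetical helper): append "IP NAME" to a hosts file
# only if NAME is not already present as a whole word.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" \
    || echo "$ip $name" >> "$file"
}

# Usage (as root, on each machine):
#   add_host_entry 192.168.3.102 master1
#   add_host_entry 192.168.3.103 master2
#   add_host_entry 192.168.3.104 master3
#   add_host_entry 192.168.3.105 node1
#   add_host_entry 192.168.3.106 node2
```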

4. Switch the yum repositories to the Aliyun mirror (run on all five machines)

sed -e 's|^mirrorlist=|#mirrorlist=|g' \
    -e 's|^#baseurl=http://dl.rockylinux.org/$contentdir|baseurl=https://mirrors.aliyun.com/rockylinux|g' \
    -i.bak \
    /etc/yum.repos.d/Rocky-*.repo

dnf makecache

5. Install command completion and vim (run on all five machines)

dnf install -y wget bash-completion vim

6. Set up passwordless SSH login (run on the three masters; optional)

ssh-keygen

for host in master1 master2 master3 node1 node2; do ssh-copy-id "$host"; done

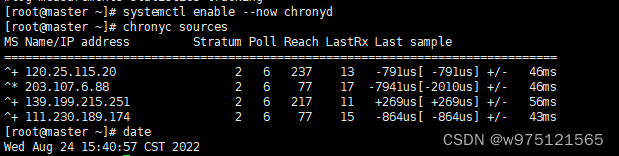

7. Configure time synchronization (run on all five machines)

dnf install -y chrony

Edit the /etc/chrony.conf configuration file and replace the line

pool 2.pool.ntp.org iburst

with

server ntp1.aliyun.com iburst

server ntp2.aliyun.com iburst

server ntp1.tencent.com iburst

server ntp2.tencent.com iburst

systemctl enable --now chronyd

chronyc sources

for host in master1 master2 master3 node1 node2; do ssh "$host" date; done
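The manual edit of /etc/chrony.conf in this step can be scripted. This is a minimal sketch under stated assumptions: use_cn_ntp_servers is a hypothetical helper name, it comments out every line that starts with "pool" rather than one specific line, and it is not idempotent (a second run appends the servers again).

```shell
# use_cn_ntp_servers (hypothetical helper): comment out the "pool" lines in
# a chrony.conf-style file and append the four servers used in this guide.
use_cn_ntp_servers() {
  local conf="$1"
  # Keep the original as <file>.bak and disable every pool directive.
  sed -i.bak -E 's/^(pool[[:space:]])/#\1/' "$conf"
  cat >> "$conf" <<'EOF'
server ntp1.aliyun.com iburst
server ntp2.aliyun.com iburst
server ntp1.tencent.com iburst
server ntp2.tencent.com iburst
EOF
}

# Usage (as root, before "systemctl enable --now chronyd"):
#   use_cn_ntp_servers /etc/chrony.conf
```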

8. Set kernel parameters (run on all five machines)

modprobe br_netfilter

lsmod | grep br_netfilter

cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl -p /etc/sysctl.d/k8s.conf

9. Install dependencies and configure the Docker and Kubernetes repositories (run on all five machines)

dnf install -y yum-utils device-mapper-persistent-data lvm2 ipvsadm net-tools

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

dnf makecache

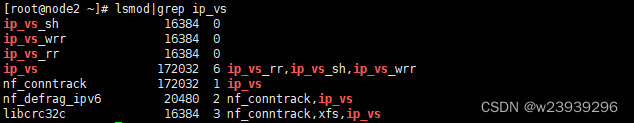

10. Enable IPVS (run on all five machines)

lsmod|grep ip_vs

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

lsmod|grep ip_vs

modprobe br_netfilter

echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

echo 1 > /proc/sys/net/ipv4/ip_forward
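Modules loaded with modprobe are gone after a reboot. One common way to reload them at boot is a file under /etc/modules-load.d, which systemd reads at startup. The sketch below is an addition to the original steps: write_modules_conf and the file name k8s.conf are assumptions chosen for illustration.

```shell
# write_modules_conf (hypothetical helper): list the kernel modules used in
# steps 8 and 10 in a modules-load.d file so they are loaded at every boot.
# The directory is an argument so the function can be tried in a temp dir.
write_modules_conf() {
  local dir="${1:-/etc/modules-load.d}"
  printf '%s\n' br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh > "$dir/k8s.conf"
}

# Usage (as root, on each machine):
#   write_modules_conf
```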

11. Install containerd (run on all five machines)

dnf install -y containerd

containerd config default > /etc/containerd/config.toml

Update the configuration file

sed -i 's#SystemdCgroup = false#SystemdCgroup = true#g' /etc/containerd/config.toml

sed -i "s#k8s.gcr.io/pause#registry.cn-hangzhou.aliyuncs.com/google_containers/pause#g" /etc/containerd/config.toml

Configure a registry mirror

sed -i '/registry.mirrors]/a\ \ \ \ \ \ \ \ [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]' /etc/containerd/config.toml

sed -i '/registry.mirrors."docker.io"]/a\ \ \ \ \ \ \ \ \ \ endpoint = ["https://0x3urqgf.mirror.aliyuncs.com"]' /etc/containerd/config.toml

Start containerd

systemctl enable --now containerd.service

systemctl status containerd.service

12. Install kubelet, kubeadm, and kubectl

Run on the three masters:

dnf install -y kubelet-1.24.6 kubeadm-1.24.6 kubectl-1.24.6

systemctl enable kubelet

Run on node1 and node2:

dnf install -y kubelet-1.24.6 kubeadm-1.24.6

systemctl enable kubelet

13. Install keepalived and nginx

1) nginx configuration (run on the three masters)

yum install -y nginx keepalived nginx-mod-stream

cat << 'EOF' > /etc/nginx/nginx.conf

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.3.102:6443 weight=5 max_fails=3 fail_timeout=30s;

server 192.168.3.103:6443 weight=5 max_fails=3 fail_timeout=30s;

server 192.168.3.104:6443 weight=5 max_fails=3 fail_timeout=30s;

}

server {

listen 16443; # nginx shares the host with the master node, so this port must not be 6443 or it would conflict with the API server

proxy_pass k8s-apiserver;

}

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

server {

listen 80 default_server;

server_name _;

location / {

}

}

}

EOF

2) keepalived configuration (master1)

cat << EOF > /etc/keepalived/keepalived.conf

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

interface ens160 # change to the actual NIC name

virtual_router_id 80

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass password

}

virtual_ipaddress {

192.168.3.101/24

}

track_script {

check_nginx

}

}

EOF

cat << 'EOF' > /etc/keepalived/check_nginx.sh

#!/bin/bash

counter=`ps -C nginx --no-header | wc -l`

if [ $counter -eq 0 ]; then

service nginx start

sleep 2

counter=`ps -C nginx --no-header | wc -l`

if [ $counter -eq 0 ]; then

service keepalived stop

fi

fi

EOF

chmod +x /etc/keepalived/check_nginx.sh

systemctl enable keepalived.service

systemctl enable nginx.service

systemctl start nginx.service

systemctl start keepalived.service

2) keepalived configuration (master2)

cat << EOF > /etc/keepalived/keepalived.conf

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state BACKUP

interface ens160 # change to the actual NIC name

virtual_router_id 80

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass password

}

virtual_ipaddress {

192.168.3.101/24

}

track_script {

check_nginx

}

}

EOF

cat << 'EOF' > /etc/keepalived/check_nginx.sh

#!/bin/bash

counter=`ps -C nginx --no-header | wc -l`

if [ $counter -eq 0 ]; then

service nginx start

sleep 2

counter=`ps -C nginx --no-header | wc -l`

if [ $counter -eq 0 ]; then

service keepalived stop

fi

fi

EOF

chmod +x /etc/keepalived/check_nginx.sh

systemctl enable keepalived.service

systemctl enable nginx.service

systemctl start nginx.service

systemctl start keepalived.service

3) keepalived configuration (master3)

cat << EOF > /etc/keepalived/keepalived.conf

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state BACKUP

interface ens160 # change to the actual NIC name

virtual_router_id 80

priority 50

advert_int 1

authentication {

auth_type PASS

auth_pass password

}

virtual_ipaddress {

192.168.3.101/24

}

track_script {

check_nginx

}

}

EOF

cat << 'EOF' > /etc/keepalived/check_nginx.sh

#!/bin/bash

counter=`ps -C nginx --no-header | wc -l`

if [ $counter -eq 0 ]; then

service nginx start

sleep 2

counter=`ps -C nginx --no-header | wc -l`

if [ $counter -eq 0 ]; then

service keepalived stop

fi

fi

EOF

chmod +x /etc/keepalived/check_nginx.sh

systemctl enable keepalived.service

systemctl enable nginx.service

systemctl start nginx.service

systemctl start keepalived.service

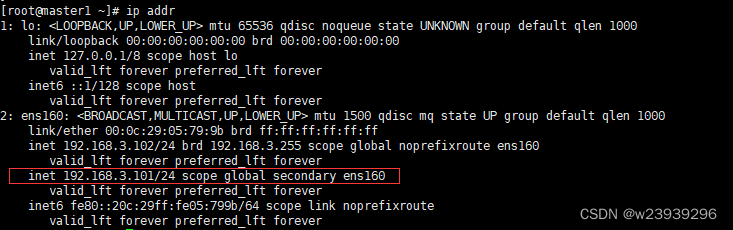

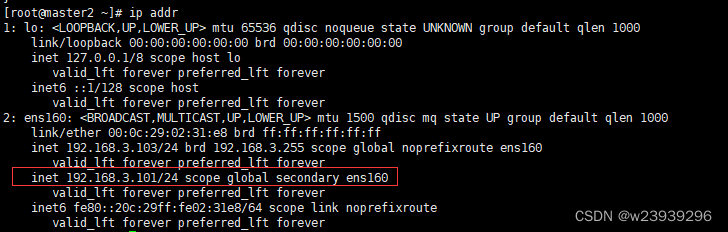

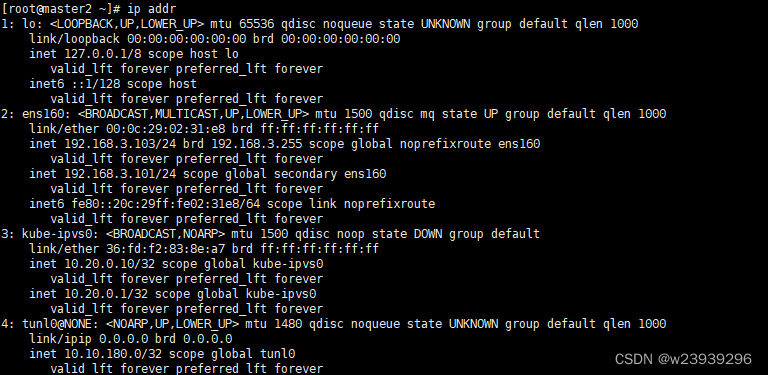
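The three keepalived.conf files above are identical except for a few per-node values such as the priority. As a sketch of how the shared vrrp_instance block could be generated instead of copied, the function below prints it from three parameters. render_vrrp_instance is a hypothetical helper name; the fixed values (virtual_router_id, password, VIP) are copied from the configurations above.

```shell
# render_vrrp_instance (hypothetical helper): print the vrrp_instance block
# for one node. Arguments: STATE (MASTER or BACKUP), PRIORITY, INTERFACE.
render_vrrp_instance() {
  local state="$1" priority="$2" iface="$3"
  cat <<EOF
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 80
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass password
    }
    virtual_ipaddress {
        192.168.3.101/24
    }
    track_script {
        check_nginx
    }
}
EOF
}

# Usage, for example on master2:
#   render_vrrp_instance BACKUP 80 ens160
```

The output still has to be combined with the global_defs and vrrp_script sections before it is written to /etc/keepalived/keepalived.conf.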

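4) Test failover

On master1, check that the VIP is present, then stop keepalived:

ip addr

systemctl stop keepalived

On master2, the VIP 192.168.3.101 should now be listed:

ip addr

On master1, start keepalived again and confirm that the VIP has moved back:

systemctl start keepalived

ip addr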
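Reading the ip addr output by eye works, but the check is easy to script when repeating the failover test. The sketch below is an illustration only: has_vip is a hypothetical helper that reads ip addr style output on standard input and succeeds if the given address is assigned.

```shell
# has_vip (hypothetical helper): exit 0 if the address given as $1 appears
# as an "inet ADDRESS/prefix" entry in the `ip addr` output read from stdin.
has_vip() {
  local vip="$1"
  grep -qF "inet ${vip}/"
}

# Usage on any master:
#   ip -4 addr show | has_vip 192.168.3.101 && echo "VIP is on this node"
```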

14. Initialize the Kubernetes cluster (run on master1)

Configuration file

cat > kubeadm-config.yaml <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.24.6
controlPlaneEndpoint: 192.168.3.101:16443
imageRepository: registry.aliyuncs.com/google_containers
apiServer:
  certSANs:
  - 192.168.3.102
  - 192.168.3.103
  - 192.168.3.104
  - 192.168.3.101
networking:
  podSubnet: 10.10.0.0/16
  serviceSubnet: 10.20.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF

Initialize the cluster

kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=SystemVerification | tee $HOME/k8s.abt

Then set up kubectl access for the current user

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Enable kubectl command completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

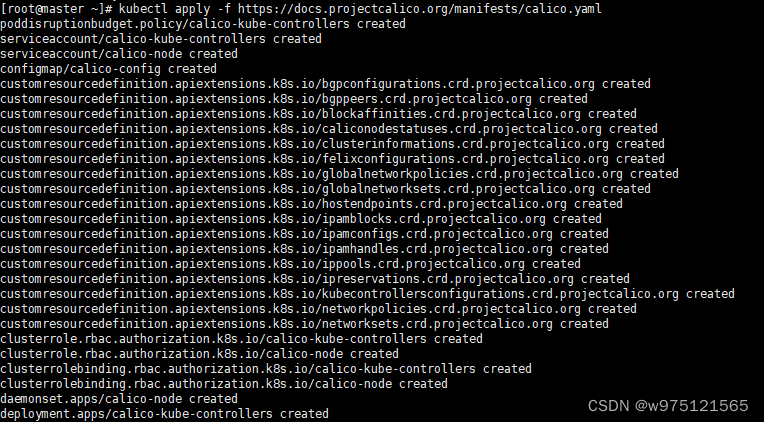

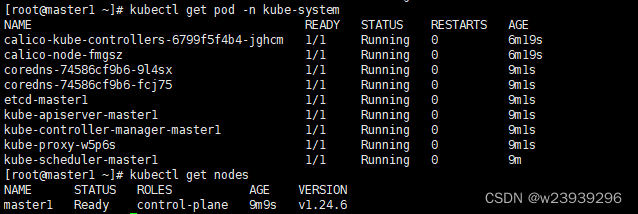

14. Install the network add-on

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

Watch the pod status and wait until all pods are Running

kubectl get pods -n kube-system -w

kubectl get nodes
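Instead of watching the output by eye, the node list can be summarized with a small filter. This is a sketch, not part of the original procedure: count_not_ready is a hypothetical helper that reads the default kubectl get nodes table on standard input and counts rows whose STATUS column is anything other than exactly Ready (so Ready,SchedulingDisabled is also counted).

```shell
# count_not_ready (hypothetical helper): print how many nodes in the
# `kubectl get nodes` table on stdin have a STATUS other than "Ready".
count_not_ready() {
  # NR > 1 skips the header row; n + 0 prints 0 when nothing matched.
  awk 'NR > 1 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Usage:
#   kubectl get nodes | count_not_ready
```

kubectl also has a built-in alternative, kubectl wait --for=condition=Ready nodes --all --timeout=300s, which blocks until every node reports Ready or the timeout expires.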

15. Join master2 and master3 to the cluster

On master2 and master3:

mkdir -p /etc/kubernetes/pki/etcd

mkdir -p ~/.kube/

On master1:

scp /etc/kubernetes/pki/ca.crt master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/ca.key master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.key master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.pub master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.crt master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.key master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/etcd/ca.crt master2:/etc/kubernetes/pki/etcd/

scp /etc/kubernetes/pki/etcd/ca.key master2:/etc/kubernetes/pki/etcd/

scp /etc/kubernetes/pki/ca.crt master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/ca.key master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.key master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.pub master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.crt master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.key master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/etcd/ca.crt master3:/etc/kubernetes/pki/etcd/

scp /etc/kubernetes/pki/etcd/ca.key master3:/etc/kubernetes/pki/etcd/

kubeadm token create --print-join-command
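The sixteen scp commands above follow one pattern: the same eight files for each additional master. A minimal sketch of generating them with a loop is shown below. print_cert_copy_cmds and PKI_FILES are hypothetical names; the function only prints the commands, so nothing is copied until its output is piped to sh.

```shell
# Files a joining control-plane node needs, relative to /etc/kubernetes/pki
# (the same list as in the scp commands above).
PKI_FILES="ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key etcd/ca.crt etcd/ca.key"

# print_cert_copy_cmds (hypothetical helper): print one scp command per
# file and per host given as argument. This is a dry run by design.
print_cert_copy_cmds() {
  local host f
  for host in "$@"; do
    for f in $PKI_FILES; do
      echo "scp /etc/kubernetes/pki/$f $host:/etc/kubernetes/pki/$f"
    done
  done
}

# Usage on master1 (review the output first, then pipe it to sh):
#   print_cert_copy_cmds master2 master3
#   print_cert_copy_cmds master2 master3 | sh
```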


On master2 and master3 (use the token and hash printed by the previous command):

kubeadm join 192.168.3.101:16443 --token ifto9q.urmyl2uw8nz9x95c \
    --discovery-token-ca-cert-hash sha256:be7b61236f41d5d75b331be0b282a29ab57ff3e8925bd3b065ca23dc9f71c220 \
    --control-plane --ignore-preflight-errors=SystemVerification

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

15. Join the worker nodes to the cluster

Run on a master node:

kubeadm token create --print-join-command


Run on both worker nodes:

kubeadm join 192.168.3.101:16443 --token ifto9q.urmyl2uw8nz9x95c --discovery-token-ca-cert-hash sha256:be7b61236f41d5d75b331be0b282a29ab57ff3e8925bd3b065ca23dc9f71c220

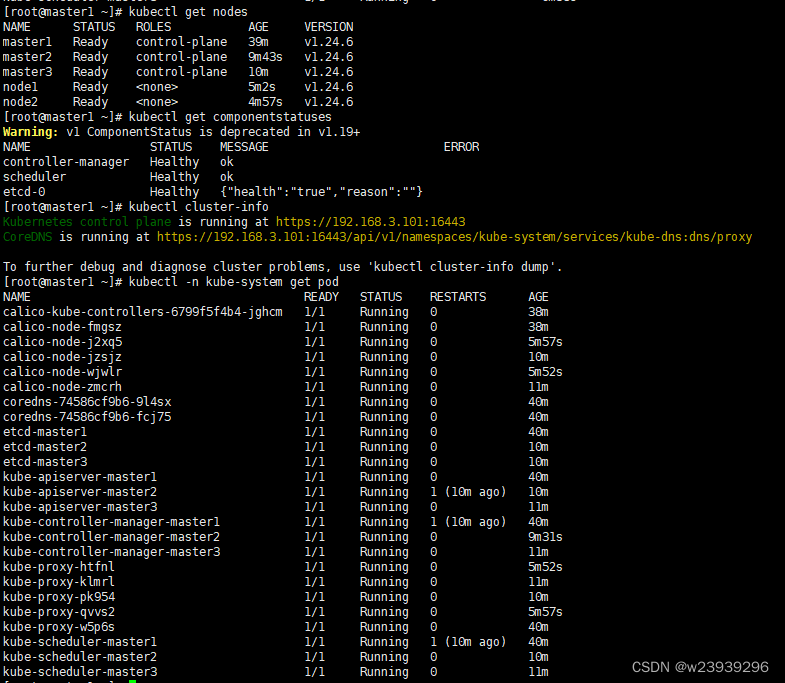

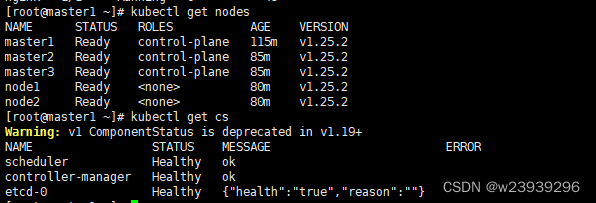

16. Verification

Check on master1:

Watch the pod status and wait until all pods are Running

kubectl get nodes

kubectl get componentstatuses

kubectl cluster-info

kubectl -n kube-system get pod

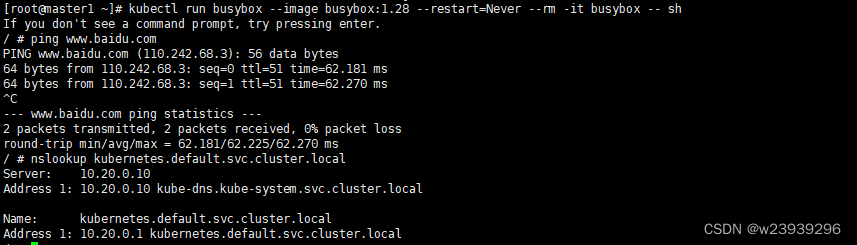

17. Testing

1) Test the network

kubectl run busybox --image busybox:1.28 --restart=Never --rm -it busybox -- sh

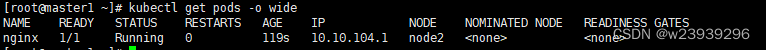

2) Test a pod

cat > mypod.yaml << EOF
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.14.2
    ports:
    - containerPort: 80
EOF

kubectl apply -f mypod.yaml

kubectl get pods -o wide

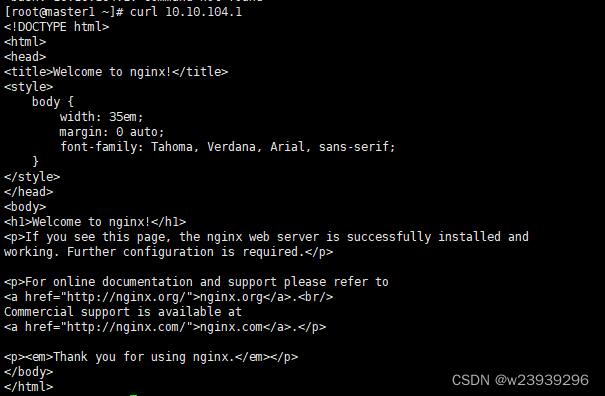

curl 10.10.104.1

3) Test high availability

Shut down master1.

On master2:

kubectl get pods -o wide

curl 10.10.104.1

kubectl get componentstatuses

kubectl cluster-info

kubectl get nodes

18. Upgrade

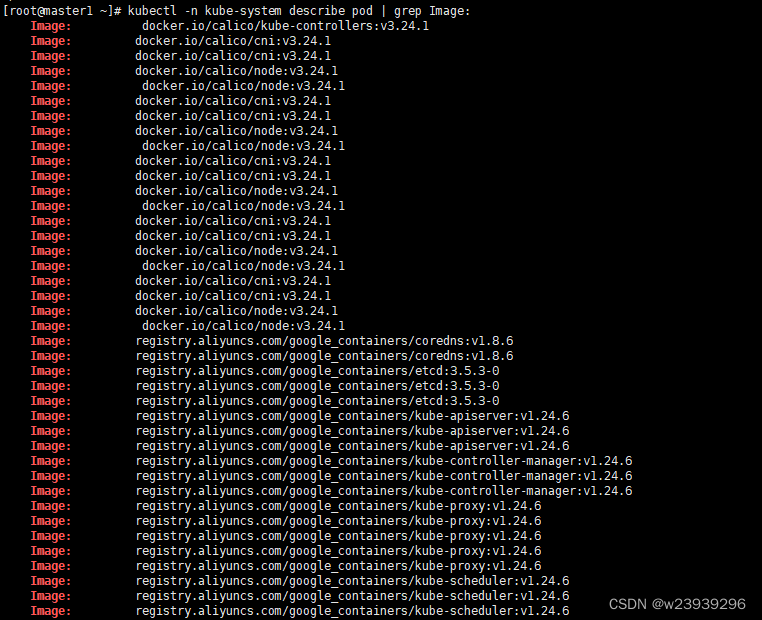

1) Check the system component versions

kubectl -n kube-system describe pod | grep Image:

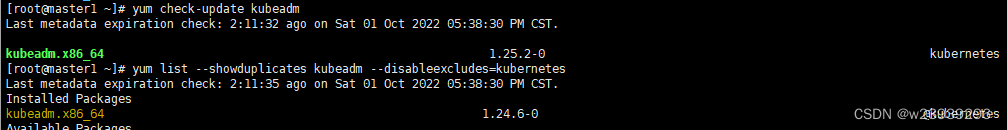

2) Check the available upgrade versions

yum check-update kubeadm

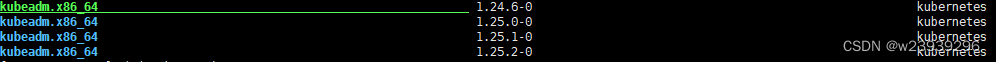

yum list --showduplicates kubeadm --disableexcludes=kubernetes

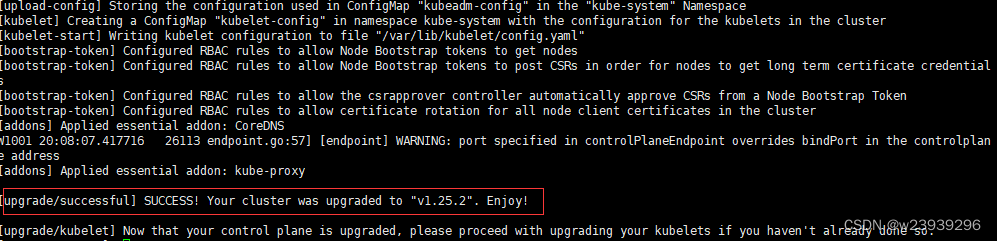

yum install -y kubeadm-1.25.2-0 --disableexcludes=kubernetes

3) Verify that the download succeeded, that the kubeadm version is correct, and review the upgrade plan

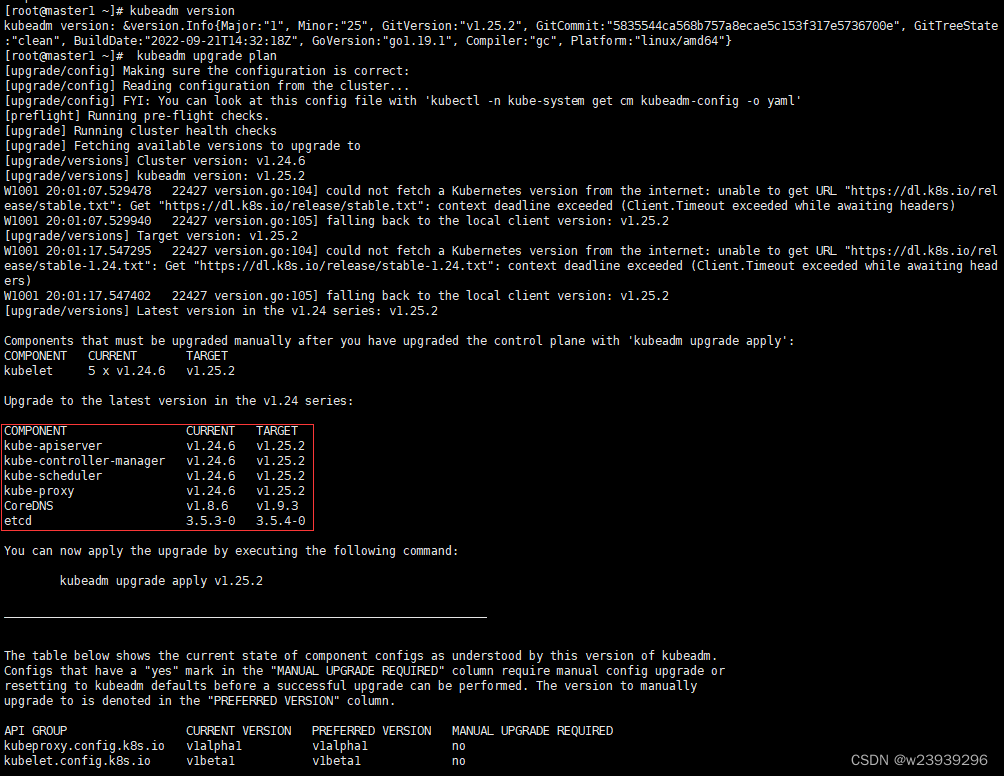

kubeadm version

kubeadm upgrade plan

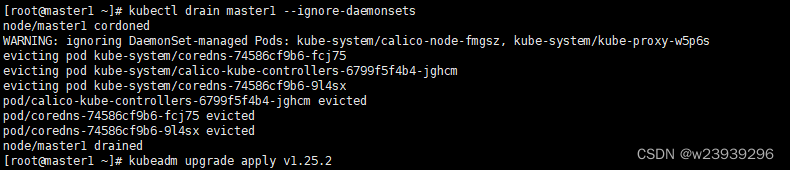

4) Drain master1 and upgrade it

kubectl drain master1 --ignore-daemonsets

kubeadm upgrade apply v1.25.2

5) Uncordon master1

kubectl uncordon master1

6) Upgrade master2

yum install -y kubeadm-1.25.2-0 --disableexcludes=kubernetes

Verify that the download succeeded, that the kubeadm version is correct, and review the upgrade plan

kubeadm version

kubeadm upgrade plan

Drain master2 and upgrade it

kubectl drain master2 --ignore-daemonsets

kubeadm upgrade node

Uncordon master2

kubectl uncordon master2

7) Upgrade master3

yum install -y kubeadm-1.25.2-0 --disableexcludes=kubernetes

Verify that the download succeeded, that the kubeadm version is correct, and review the upgrade plan

kubeadm version

kubeadm upgrade plan

Drain master3 and upgrade it

kubectl drain master3 --ignore-daemonsets

kubeadm upgrade node

Uncordon master3

kubectl uncordon master3

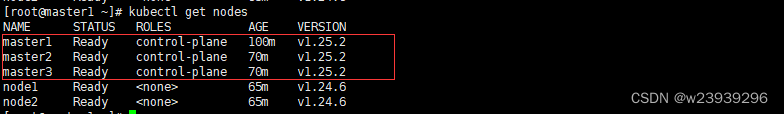

8) Upgrade kubelet and kubectl (run on the three masters)

yum install -y kubelet-1.25.2-0 kubectl-1.25.2-0 --disableexcludes=kubernetes

systemctl daemon-reload

systemctl restart kubelet

kubectl get nodes

The control-plane nodes are now upgraded.

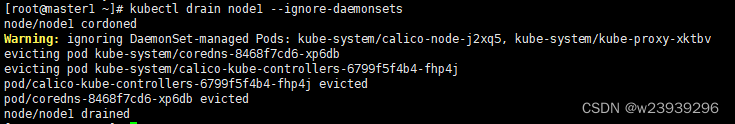

9) Upgrade worker node node1

Upgrade kubeadm on the worker node (run on node1)

yum install -y kubeadm-1.25.2-0 --disableexcludes=kubernetes

Drain all workloads to prepare node1 for maintenance (run on master1)

kubectl drain node1 --ignore-daemonsets

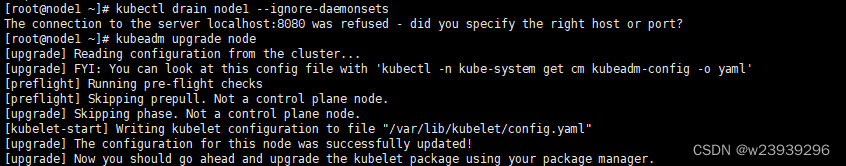

Run "kubeadm upgrade" (run on node1)

kubeadm upgrade node

Upgrade kubelet (run on node1)

yum install -y kubelet-1.25.2-0 --disableexcludes=kubernetes

systemctl daemon-reload

systemctl restart kubelet

Uncordon the node (run on master1)

kubectl uncordon node1

10) Upgrade worker node node2

Upgrade kubeadm on the worker node (run on node2)

yum install -y kubeadm-1.25.2-0 --disableexcludes=kubernetes

Drain all workloads to prepare node2 for maintenance (run on master1)

kubectl drain node2 --ignore-daemonsets

Run "kubeadm upgrade" (run on node2)

kubeadm upgrade node

Upgrade kubelet (run on node2)

yum install -y kubelet-1.25.2-0 --disableexcludes=kubernetes

systemctl daemon-reload

systemctl restart kubelet

Uncordon the node (run on master1)

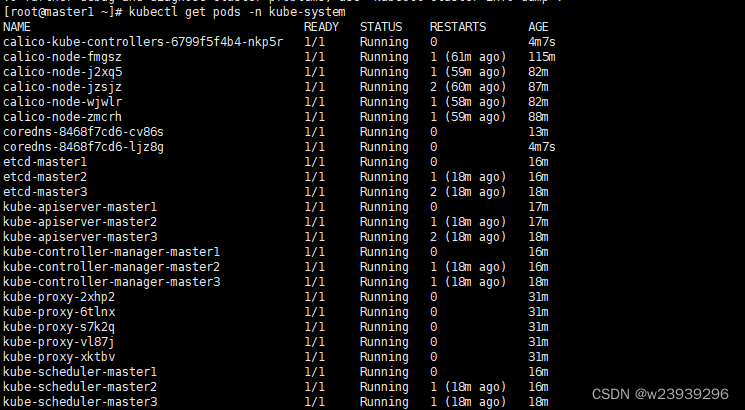

kubectl uncordon node2

11) Verification

kubectl get nodes

kubectl get cs

kubectl get pods -n kube-system

Note: if CoreDNS reports errors, upgrade its image

kubectl edit deployment coredns -n kube-system

Change image: registry.aliyuncs.com/google_containers/coredns:v1.8.6

to image: registry.aliyuncs.com/google_containers/coredns:v1.9.3
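The worker upgrades in steps 9) and 10) run the same sequence and differ only in the node name. As a reading aid, the sketch below prints that sequence for any node and version, with a prefix showing where each command runs. print_worker_upgrade_steps is a hypothetical helper; it prints the commands and executes nothing.

```shell
# print_worker_upgrade_steps (hypothetical helper): print the ordered
# upgrade commands for one worker node. Arguments: NODE, VERSION (for
# example 1.25.2). The bracketed prefix names the host to run each line on.
print_worker_upgrade_steps() {
  local node="$1" version="$2"
  cat <<EOF
[$node] yum install -y kubeadm-$version-0 --disableexcludes=kubernetes
[master1] kubectl drain $node --ignore-daemonsets
[$node] kubeadm upgrade node
[$node] yum install -y kubelet-$version-0 --disableexcludes=kubernetes
[$node] systemctl daemon-reload
[$node] systemctl restart kubelet
[master1] kubectl uncordon $node
EOF
}

# Usage:
#   print_worker_upgrade_steps node1 1.25.2
```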