Dataset overview

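The Cora dataset consists of machine learning papers. Each paper belongs to exactly one of the following seven classes:

- Case-based

- Genetic algorithms

- Neural networks

- Probabilistic methods

- Reinforcement learning

- Rule learning

- Theory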

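The papers were selected so that, in the final corpus, every paper cites or is cited by at least one other paper. The whole corpus contains 2708 papers.

After stemming and stop-word removal, 1433 unique words remain. All words with a document frequency below 10 were removed.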
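To make the vocabulary filtering concrete, here is a minimal sketch that turns already stemmed, stop-word-free token lists into binary word-presence vectors and drops rare words. The function name `binary_bag_of_words`, the toy documents, and the threshold `min_df=2` are assumptions made for this example (Cora used a threshold of 10); it is not the original preprocessing pipeline.

```python
from collections import Counter

import numpy as np


def binary_bag_of_words(docs, min_df):
    """Return (vocab, matrix) where matrix[i, j] is 1 if vocab[j] occurs in docs[i]."""
    # Document frequency: in how many documents each word appears at least once.
    doc_freq = Counter(word for doc in docs for word in set(doc))
    # Keep only words that appear in at least min_df documents.
    vocab = sorted(word for word, count in doc_freq.items() if count >= min_df)
    column = {word: j for j, word in enumerate(vocab)}

    matrix = np.zeros((len(docs), len(vocab)), dtype=np.int8)
    for i, doc in enumerate(docs):
        for word in set(doc):
            if word in column:
                matrix[i, column[word]] = 1
    return vocab, matrix


# Toy corpus (hypothetical): "kernel" occurs in one document only and is dropped.
docs = [["graph", "neural", "graph"], ["neural", "kernel"], ["graph", "neural"]]
vocab, matrix = binary_bag_of_words(docs, min_df=2)
print(vocab)
print(matrix)
```

Repeated words inside one document still produce a single 1, which matches the present/absent encoding used by the dataset.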

Dataset file description

The dataset consists of two files, cora.cites and cora.content.

cora.content

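The .content file describes the papers in the following format: <paper_id> <word_attributes>+ <class_label>

Each line (that is, one node of the graph) starts with the paper's unique string identifier, followed by 1433 binary fields, one per vocabulary word, indicating whether that word is present (1) or absent (0) in the paper. The last field of the line is the paper's class label (one of the 7 classes). Each node therefore has a 1433-dimensional feature vector, and each line has 1433 + 2 fields once the leading identifier and the trailing label are counted.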
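The line format above can be read with a few lines of Python. This is a minimal sketch, assuming the fields are separated by whitespace; the helper name `parse_content_line` and the shortened sample line (5 word attributes instead of 1433) are made up for illustration.

```python
import numpy as np


def parse_content_line(line):
    """Split one .content line into (paper_id, binary feature vector, class label)."""
    fields = line.split()  # assumption: whitespace-separated fields
    paper_id = fields[0]  # kept as a string, as described above
    features = np.array([int(v) for v in fields[1:-1]], dtype=np.int8)
    label = fields[-1]
    return paper_id, features, label


# Shortened, hypothetical sample line: id, 5 word attributes, label.
paper_id, features, label = parse_content_line("31336 0 1 0 0 1 Neural_Networks")
print(paper_id, features, label)
```

On the real file, `features` would have length 1433 for every line.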

cora.cites

The .cites file contains the citation graph of the corpus.

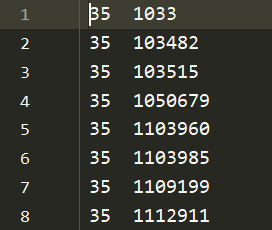

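Each line (that is, one edge of the graph) describes one citation in the following format: <ID of cited paper> <ID of citing paper>

Each line contains two paper IDs. The first field identifies the cited paper and the second identifies the citing paper, so the citation points from right to left: a line "paper1 paper2" means that paper2 cites paper1, i.e. the link is "paper2 -> paper1". The adjacency matrix adj can be built from these citation links.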
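A minimal sketch of building the adjacency matrix from such edges follows. The function name `build_adjacency`, the three toy paper IDs, and the choice to also return a symmetrized (undirected) matrix are assumptions for this example.

```python
import numpy as np
import scipy.sparse as sp


def build_adjacency(edges, paper_ids):
    """edges: list of (cited_id, citing_id) pairs in the .cites column order."""
    index_of = {pid: i for i, pid in enumerate(paper_ids)}
    rows = [index_of[citing] for cited, citing in edges]  # source: citing paper
    cols = [index_of[cited] for cited, citing in edges]   # target: cited paper
    n = len(paper_ids)
    directed = sp.coo_matrix((np.ones(len(edges)), (rows, cols)), shape=(n, n)).tocsr()
    # Symmetrize so that a citation connects both papers, then clip entries to 1
    # in case two papers cite each other.
    undirected = directed + directed.T
    undirected.data[:] = 1.0
    return directed, undirected


# Toy example: B cites A, and C cites A.
paper_ids = ["A", "B", "C"]
edges = [("A", "B"), ("A", "C")]
directed, undirected = build_adjacency(edges, paper_ids)
print(directed.toarray())
print(undirected.toarray())
```

Row i of `directed` lists the papers that paper i cites; `undirected` ignores the direction of each citation.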

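The loading code below does not read cora.content and cora.cites directly; it reads the following pickled files:

ind.cora.x : feature vectors of the training nodes, stored as scipy.sparse.csr.csr_matrix; dense shape (140, 1433)

ind.cora.tx : feature vectors of the test nodes, stored as scipy.sparse.csr.csr_matrix; dense shape (1000, 1433)

ind.cora.allx : feature vectors of both labeled and unlabeled training nodes, stored as scipy.sparse.csr.csr_matrix; dense shape (1708, 1433). It can be read as the features of every node outside the test set; the training set is a subset of it

ind.cora.y : one-hot labels of the training nodes, stored as numpy.ndarray

ind.cora.ty : one-hot labels of the test nodes, stored as numpy.ndarray

ind.cora.ally : one-hot labels of the nodes in ind.cora.allx, stored as numpy.ndarray

ind.cora.graph : the edges between nodes, stored in the format { index : [ index_of_neighbor_nodes ] }

ind.cora.test.index : indices of the test nodes in the graph, stored as a list; used later for the inductive setting.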
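The loader further down stacks allx and tx and then uses the test indices to move each test row to its position in the graph. Here is a small sketch of that reordering step on toy arrays. The helper name `restore_test_order` and the toy values are assumptions, and the sketch assumes that the test indices form a contiguous range directly after the allx rows (the sizes listed above, 1708 + 1000 = 2708, fit this pattern for cora).

```python
import numpy as np


def restore_test_order(allx, tx, test_idx_reorder):
    """Stack allx and tx, then place row i of tx at graph index test_idx_reorder[i]."""
    test_idx_reorder = np.asarray(test_idx_reorder)
    test_idx_range = np.sort(test_idx_reorder)
    features = np.vstack((allx, tx))
    # The right-hand side is copied before assignment, so this is a safe permutation.
    features[test_idx_reorder, :] = features[test_idx_range, :]
    return features


# Toy data: 3 non-test rows, then 3 test rows stored in the order of nodes 5, 3, 4.
allx = np.array([[0], [1], [2]])
tx = np.array([[50], [30], [40]])
features = restore_test_order(allx, tx, [5, 3, 4])
print(features.ravel())  # rows now follow the graph order: 0, 1, 2, 30, 40, 50
```

After this step, row k of the feature matrix belongs to node k of the graph, which is what the adjacency matrix expects.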

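import pickle as pkl
import sys

import networkx as nx
import numpy as np
import scipy.sparse as sp
# eigsh is exported by the public scipy.sparse.linalg namespace. The older path
# scipy.sparse.linalg.eigen.arpack is a private module that newer SciPy versions
# no longer expose, which is why importing from it fails.
from scipy.sparse.linalg import eigsh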

def parse_index_file(filename):
    """Parse index file (one integer node index per line)."""
    index = []
    with open(filename) as f:  # the context manager closes the file afterwards
        for line in f:
            index.append(int(line.strip()))
    return index

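def sample_mask(idx, l):
    """Create a boolean mask of length l that is True at the positions in idx."""
    mask = np.zeros(l)
    mask[idx] = 1
    # np.bool was removed in NumPy 1.24; the builtin bool is the equivalent dtype.
    return np.array(mask, dtype=bool)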

def load_data(dataset_str):
    """
    Loads input data from gcn/data directory

    ind.dataset_str.x => the feature vectors of the training instances as scipy.sparse.csr.csr_matrix object;
    ind.dataset_str.tx => the feature vectors of the test instances as scipy.sparse.csr.csr_matrix object;
    ind.dataset_str.allx => the feature vectors of both labeled and unlabeled training instances
        (a superset of ind.dataset_str.x) as scipy.sparse.csr.csr_matrix object;
    ind.dataset_str.y => the one-hot labels of the labeled training instances as numpy.ndarray object;
    ind.dataset_str.ty => the one-hot labels of the test instances as numpy.ndarray object;
    ind.dataset_str.ally => the labels for instances in ind.dataset_str.allx as numpy.ndarray object;
    ind.dataset_str.graph => a dict in the format {index: [index_of_neighbor_nodes]} as collections.defaultdict
        object;
    ind.dataset_str.test.index => the indices of test instances in graph, for the inductive setting as list object.

    All objects above must be saved using python pickle module.

    :param dataset_str: Dataset name
    :return: All data input files loaded (as well the training/test data).
    """
    names = ['x', 'y', 'tx', 'ty', 'allx', 'ally', 'graph']
    objects = []
    for i in range(len(names)):  # read each file in turn
        with open("data/ind.{}.{}".format(dataset_str, names[i]), 'rb') as f:
            if sys.version_info > (3, 0):  # Python 3 or later
                data = pkl.load(f, encoding='latin1')
                if names[i].find('graph') == -1:  # every file except .graph
                    print(f)
                    # Expected shapes for cora:
                    #   x:    (140, 1433)   140 training nodes, each a 1433-dimensional vector
                    #   y:    (140, 7)      training targets of those 140 nodes, 7-dimensional one-hot
                    #   tx:   (1000, 1433)  1000 test nodes
                    #   ty:   (1000, 7)
                    #   allx: (1708, 1433)
                    #   ally: (1708, 7)
                    print(data.shape)
                    print(type(data))
                    # >>> <class 'scipy.sparse._csr.csr_matrix'> for x, tx, allx (numpy.ndarray for y, ty, ally)
                    print(type(data[0]))
                    # >>> <class 'scipy.sparse._csr.csr_matrix'> for x, tx, allx
                    for j in range(data.shape[0]):  # iterate over the rows of the matrix
                        # x: data[j] is the feature vector of node j
                        # y: data[j] is the label of node j, shape (7,)
                        print('********', names[i], j, data[j].shape, '**********')
                        print(data[j])
                        print('\n')
                else:
                    print(f)
                    print(type(data))
                    # >>> <class 'collections.defaultdict'>
                    print(data)
                objects.append(data)
            else:
                objects.append(pkl.load(f))

    x, y, tx, ty, allx, ally, graph = tuple(objects)

    # Training set
    # x is a sparse matrix (it stores only the positions of the 1s): 140 instances, 1433 features each -> (140, 1433)
    print(x[0][0], x.shape, type(x))
    # y holds the one-hot labels: 140 instances, 7 classes -> (140, 7)
    print(y[0], y.shape)

    # Test set
    # tx is a sparse matrix: 1000 instances, 1433 features each -> (1000, 1433)
    print(tx[0][0], tx.shape, type(tx))
    # ty holds the one-hot labels: 1000 instances, 7 classes -> (1000, 7)
    print(ty[0], ty.shape)

    # allx and ally have the same form as above
    # allx is a sparse matrix: 1708 instances, 1433 features each -> (1708, 1433)
    print(allx[0][0], allx.shape, type(allx))
    # ally holds the one-hot labels: 1708 instances, 7 classes -> (1708, 7)
    print(ally[0], ally.shape)

    # graph is a dict; the full graph has 2708 nodes in total
    for i in graph:
        print(i, graph[i])

    # dataset_str is the dataset name ('cora' here); str.format() replaces each {} with its arguments.
    # Read all indices stored in test.index.
    test_idx_reorder = parse_index_file("data/ind.{}.test.index".format(dataset_str))
    test_idx_range = np.sort(test_idx_reorder)
    print(test_idx_range.size)
    print(type(test_idx_range))
    print(test_idx_range)

    if dataset_str == 'citeseer':
        # Fix citeseer dataset (there are some isolated nodes in the graph)
        # Find isolated nodes, add them as zero-vecs into the right position
        test_idx_range_full = range(min(test_idx_reorder), max(test_idx_reorder) + 1)
        tx_extended = sp.lil_matrix((len(test_idx_range_full), x.shape[1]))
        tx_extended[test_idx_range - min(test_idx_range), :] = tx
        tx = tx_extended
        ty_extended = np.zeros((len(test_idx_range_full), y.shape[1]))
        ty_extended[test_idx_range - min(test_idx_range), :] = ty
        ty = ty_extended

    # Stack non-test and test rows, then move each test row to its graph index.
    features = sp.vstack((allx, tx)).tolil()
    features[test_idx_reorder, :] = features[test_idx_range, :]
    adj = nx.adjacency_matrix(nx.from_dict_of_lists(graph))
    # print(adj,adj.shape)

    labels = np.vstack((ally, ty))
    labels[test_idx_reorder, :] = labels[test_idx_range, :]

    idx_test = test_idx_range.tolist()
    idx_train = range(len(y))
    idx_val = range(len(y), len(y) + 500)

    train_mask = sample_mask(idx_train, labels.shape[0])
    val_mask = sample_mask(idx_val, labels.shape[0])
    test_mask = sample_mask(idx_test, labels.shape[0])

    y_train = np.zeros(labels.shape)
    y_val = np.zeros(labels.shape)
    y_test = np.zeros(labels.shape)
    y_train[train_mask, :] = labels[train_mask, :]
    y_val[val_mask, :] = labels[val_mask, :]
    y_test[test_mask, :] = labels[test_mask, :]

    return adj, features, y_train, y_val, y_test, train_mask, val_mask, test_mask

def sparse_to_tuple(sparse_mx):
    """Convert sparse matrix to tuple representation."""
    def to_tuple(mx):
        if not sp.isspmatrix_coo(mx):
            mx = mx.tocoo()
        coords = np.vstack((mx.row, mx.col)).transpose()
        values = mx.data
        shape = mx.shape
        return coords, values, shape

    if isinstance(sparse_mx, list):
        for i in range(len(sparse_mx)):
            sparse_mx[i] = to_tuple(sparse_mx[i])
    else:
        sparse_mx = to_tuple(sparse_mx)

    return sparse_mx

def preprocess_features(features):
    """Row-normalize feature matrix and convert to tuple representation"""
    rowsum = np.array(features.sum(1))
    r_inv = np.power(rowsum, -1).flatten()
    r_inv[np.isinf(r_inv)] = 0.
    r_mat_inv = sp.diags(r_inv)
    features = r_mat_inv.dot(features)
    return sparse_to_tuple(features)

def normalize_adj(adj):
    """Symmetrically normalize adjacency matrix."""
    adj = sp.coo_matrix(adj)
    rowsum = np.array(adj.sum(1))
    d_inv_sqrt = np.power(rowsum, -0.5).flatten()
    d_inv_sqrt[np.isinf(d_inv_sqrt)] = 0.
    d_mat_inv_sqrt = sp.diags(d_inv_sqrt)
    return adj.dot(d_mat_inv_sqrt).transpose().dot(d_mat_inv_sqrt).tocoo()

def preprocess_adj(adj):
    """Preprocessing of adjacency matrix for simple GCN model and conversion to tuple representation."""
    adj_normalized = normalize_adj(adj + sp.eye(adj.shape[0]))
    return sparse_to_tuple(adj_normalized)

def construct_feed_dict(features, support, labels, labels_mask, placeholders):
    """Construct feed dictionary."""
    feed_dict = dict()
    feed_dict.update({placeholders['labels']: labels})
    feed_dict.update({placeholders['labels_mask']: labels_mask})
    feed_dict.update({placeholders['features']: features})
    feed_dict.update({placeholders['support'][i]: support[i] for i in range(len(support))})
    feed_dict.update({placeholders['num_features_nonzero']: features[1].shape})
    return feed_dict

def chebyshev_polynomials(adj, k):
    """Calculate Chebyshev polynomials up to order k. Return a list of sparse matrices (tuple representation)."""
    print("Calculating Chebyshev polynomials up to order {}...".format(k))

    adj_normalized = normalize_adj(adj)
    laplacian = sp.eye(adj.shape[0]) - adj_normalized
    largest_eigval, _ = eigsh(laplacian, 1, which='LM')
    scaled_laplacian = (2. / largest_eigval[0]) * laplacian - sp.eye(adj.shape[0])

    t_k = list()
    t_k.append(sp.eye(adj.shape[0]))
    t_k.append(scaled_laplacian)

    def chebyshev_recurrence(t_k_minus_one, t_k_minus_two, scaled_lap):
        s_lap = sp.csr_matrix(scaled_lap, copy=True)
        return 2 * s_lap.dot(t_k_minus_one) - t_k_minus_two

    for i in range(2, k + 1):
        t_k.append(chebyshev_recurrence(t_k[-1], t_k[-2], scaled_laplacian))

    return sparse_to_tuple(t_k)

load_data('cora')
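To see what the symmetric normalization in normalize_adj and preprocess_adj computes, here is a small self-contained sketch on a 3-node path graph. The function name `symmetric_normalize` and the toy graph are assumptions for illustration; the sketch applies D^(-1/2) (A + I) D^(-1/2), where D is the diagonal degree matrix of A + I, and is not a replacement for the functions above.

```python
import numpy as np
import scipy.sparse as sp


def symmetric_normalize(adj):
    """Return D^(-1/2) * adj * D^(-1/2), where D holds the row sums of adj."""
    adj = sp.csr_matrix(adj, dtype=float)
    degree = np.asarray(adj.sum(axis=1)).flatten()
    with np.errstate(divide="ignore"):  # isolated nodes have degree 0
        d_inv_sqrt = np.power(degree, -0.5)
    d_inv_sqrt[np.isinf(d_inv_sqrt)] = 0.0
    d_mat = sp.diags(d_inv_sqrt)
    return (d_mat @ adj @ d_mat).tocsr()


# Path graph 0 - 1 - 2 with self-loops added, as preprocess_adj does.
a = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]])
a_hat = symmetric_normalize(a + np.eye(3)).toarray()
print(np.round(a_hat, 3))
```

With self-loops the degrees are 2, 3 and 2, so the entry between nodes 0 and 1 is 1 / sqrt(2 * 3) and the diagonal entries are 1/2, 1/3 and 1/2. The result stays symmetric, which is the point of scaling on both sides.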