1 Objectives

- Implement the FGSM attack in PyTorch

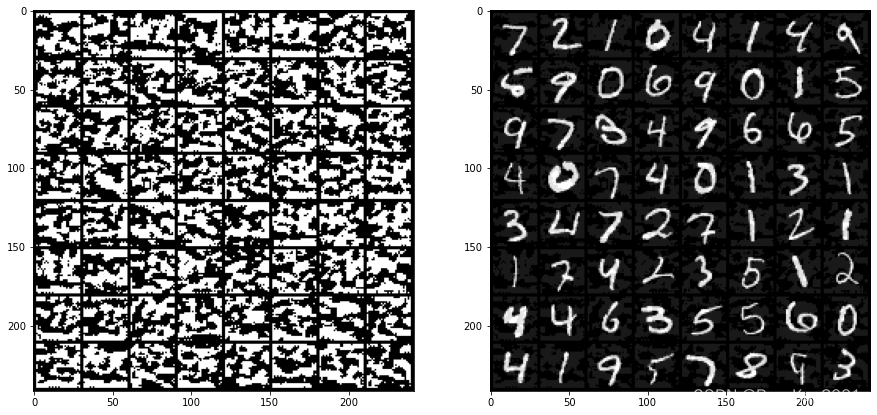

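- Visualize the original samples, the adversarial samples, and the adversarial perturbations

- Investigate how different epsilon values affect classification accuracy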
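For reference, the standard FGSM update moves every pixel by ε in the direction of the sign of the loss gradient with respect to the input, then clips the result to the valid pixel range. Here x is an input image, y its label, θ the model parameters and J the training loss (cross-entropy in this experiment). The clip to [0, 1] assumes inputs scaled to that range, which is what `transforms.ToTensor()` produces:

$$
x_{\text{adv}} = \operatorname{clip}_{[0,1]}\left(x + \epsilon \cdot \operatorname{sign}\left(\nabla_x J(\theta, x, y)\right)\right)
$$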

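2 Experimental procedure

- Build a LeNet network, train it as an MNIST classifier, and measure its test accuracy.

- Generate adversarial examples for a range of epsilon values, feed them to the trained model, and measure its accuracy again.

2.1 Building and training LeNet, and measuring its accuracy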

Import the required PyTorch libraries:

```python
import os.path

import torch
import torchvision
import torchvision.transforms as transforms
from torch import nn
from torch.utils.data import DataLoader
import numpy as np
import matplotlib.pyplot as plt
```

Load the MNIST dataset from torchvision:

```python
train_data = torchvision.datasets.MNIST(
    root='data',
    train=True,
    download=True,
    transform=transforms.ToTensor()
)
test_data = torchvision.datasets.MNIST(
    root='data',
    train=False,
    download=True,
    transform=transforms.ToTensor()
)

batch_size = 64
# Shuffle the training set every epoch; keep the test set in a fixed order
train_dataloader = DataLoader(dataset=train_data, batch_size=batch_size, shuffle=True)
test_dataloader = DataLoader(dataset=test_data, batch_size=batch_size)
```

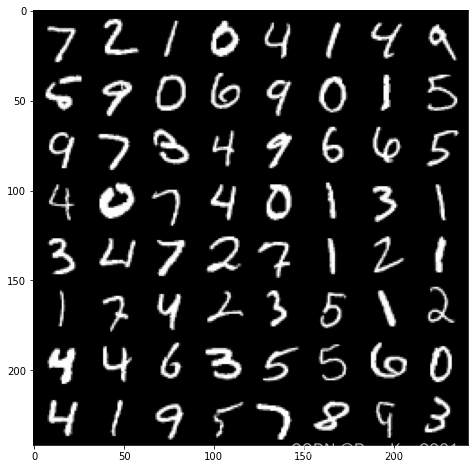
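The clamp to [0, 1] in the FGSM step later relies on the range produced by `transforms.ToTensor()`, which turns a uint8 image with values in [0, 255] into a float tensor in C×H×W order scaled to [0.0, 1.0]. Below is a minimal numpy sketch of that conversion for one grayscale image; `to_tensor_like` is a hypothetical helper for illustration, not part of torchvision:

```python
import numpy as np

def to_tensor_like(img_uint8: np.ndarray) -> np.ndarray:
    """Mimic transforms.ToTensor() for one grayscale image:
    H x W uint8 in [0, 255] -> 1 x H x W float32 in [0.0, 1.0]."""
    arr = img_uint8.astype(np.float32) / 255.0
    return arr[np.newaxis, :, :]  # add the channel dimension in front

# Hypothetical 28x28 image containing a bright vertical stroke
img = np.zeros((28, 28), dtype=np.uint8)
img[4:24, 13:15] = 255
x = to_tensor_like(img)
print(x.shape, x.min(), x.max())
```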

Display MNIST images with matplotlib:

```python
plt.figure(figsize=(8, 8))
iter_dataloader = iter(test_dataloader)
n = 1
# Visualize n * batch_size images
for i in range(n):
    images, labels = next(iter_dataloader)
    image_grid = torchvision.utils.make_grid(images)
    plt.subplot(1, n, i + 1)
    # make_grid returns (C, H, W); imshow expects (H, W, C)
    plt.imshow(np.transpose(image_grid.numpy(), (1, 2, 0)))
```
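`make_grid` lays a batch out as one (C, H, W) image, while `plt.imshow` expects (H, W, C), which is why the code applies `np.transpose(..., (1, 2, 0))`. The sketch below estimates the grid shape for a batch of 64 MNIST images, assuming `make_grid`'s defaults (`nrow=8`, `padding=2`) and its expansion of single-channel batches to 3 channels. `grid_shape` is a hypothetical helper, and a zero array stands in for the real grid:

```python
import math
import numpy as np

def grid_shape(n, c=1, h=28, w=28, nrow=8, padding=2):
    """Estimated (C, H, W) shape of make_grid's output for n images of size c x h x w."""
    xmaps = min(nrow, n)                 # images per row
    ymaps = math.ceil(n / xmaps)         # number of rows
    channels = 3 if c == 1 else c        # grayscale batches are expanded to 3 channels
    return (channels, ymaps * (h + padding) + padding, xmaps * (w + padding) + padding)

shape = grid_shape(64)
grid_chw = np.zeros(shape, dtype=np.float32)   # stand-in for image_grid.numpy()
grid_hwc = np.transpose(grid_chw, (1, 2, 0))   # (C, H, W) -> (H, W, C) for imshow
print(shape, grid_hwc.shape)
```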

Train on the GPU if one is available:

```python
device = 'cuda:0' if torch.cuda.is_available() else 'cpu'
print(device)
```

Build the LeNet network:

```python
class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()
        self.conv = nn.Sequential(
            nn.Conv2d(1, 6, 3, stride=1, padding=1),   # 1x28x28 -> 6x28x28
            nn.MaxPool2d(2, 2),                        # -> 6x14x14
            nn.Conv2d(6, 16, 5, stride=1, padding=1),  # -> 16x12x12
            nn.MaxPool2d(2, 2)                         # -> 16x6x6
        )
        self.fc = nn.Sequential(
            nn.Linear(576, 120),  # 576 = 16 * 6 * 6
            nn.Linear(120, 84),
            nn.Linear(84, 10)
        )

    def forward(self, x):
        out = self.conv(x)
        out = out.view(out.size(0), -1)  # flatten to (batch, 576)
        out = self.fc(out)
        return out
```

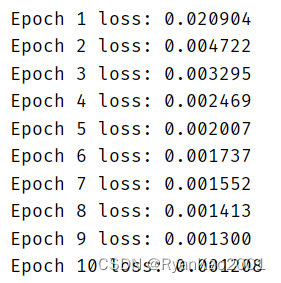
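The input size of 576 for the first `nn.Linear` follows from the spatial size after each layer. Here is a short sketch of that arithmetic, using the usual output-size formula for `Conv2d`/`MaxPool2d` with dilation 1 and floor rounding (`conv_out` is a hypothetical helper):

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of Conv2d / MaxPool2d (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1

s = 28
s = conv_out(s, kernel=3, stride=1, padding=1)  # conv1: 28 -> 28
s = conv_out(s, kernel=2, stride=2)             # pool:  28 -> 14
s = conv_out(s, kernel=5, stride=1, padding=1)  # conv2: 14 -> 12
s = conv_out(s, kernel=2, stride=2)             # pool:  12 -> 6
flat = 16 * s * s                               # 16 channels after conv2
print(s, flat)  # 6 576
```

Changing any kernel size or padding in `self.conv` changes this number, so it is worth redoing the calculation before editing `nn.Linear(576, 120)`.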

定义训练函数

def train(network):

losses = []

iteration = 0

epochs = 10

for epoch in range(epochs):

loss_sum = 0

for i, (X, y) in enumerate(train_dataloader):

X, y = X.to(device), y.to(device)

pred = network(X)

loss = loss_fn(pred, y)

loss_sum += loss.item()

optimizer.zero_grad()

loss.backward()

optimizer.step()

mean_loss = loss_sum / len(train_dataloader.dataset)

losses.append(mean_loss)

iteration += 1

print(f"Epoch {epoch+1} loss: {mean_loss:>7f}")

# 训练完毕保存最后一轮训练的模型

torch.save(network.state_dict(), "model.pth")

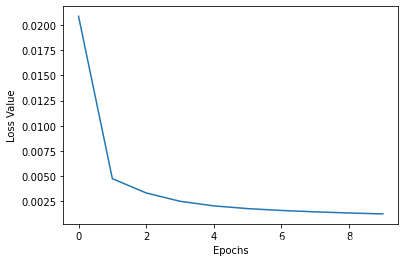

# 绘制损失函数曲线

plt.xlabel("Epochs")

plt.ylabel("Loss Value")

plt.plot(list(range(iteration)), losses)

network = LeNet()

network.to(device)

loss_fn = nn.CrossEntropyLoss()

optimizer = torch.optim.SGD(params=network.parameters(), lr=0.001, momentum=0.9)

if os.path.exists('model.pth'):

network.load_state_dict(torch.load('model.pth'))

else:

train(network)

得到损失值与损失值图像

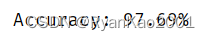
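`nn.CrossEntropyLoss()` averages over the batch by default (`reduction='mean'`), so each `loss.item()` is already a per-sample mean. The epoch average is therefore the summed batch losses divided by the number of batches. That equals the exact per-sample mean only when every batch has the same size. MNIST's 60,000 training images split into 937 full batches of 64 plus one batch of 32, so the gap is small. Here is a pure-Python sketch with made-up loss values (`epoch_mean_loss` is hypothetical):

```python
def epoch_mean_loss(batch_losses, batch_sizes):
    """Return (sum of batch means / number of batches, exact per-sample mean)."""
    mean_of_batch_means = sum(batch_losses) / len(batch_losses)
    # Undo the per-batch averaging to recover the total loss over all samples
    total_loss = sum(loss * n for loss, n in zip(batch_losses, batch_sizes))
    per_sample_mean = total_loss / sum(batch_sizes)
    return mean_of_batch_means, per_sample_mean

# Made-up batch losses: two full batches of 64 and a final batch of 32
print(epoch_mean_loss([0.9, 0.6, 0.3], [64, 64, 32]))
```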

Test the model to measure its accuracy:

```python
network.eval()
positive = 0
negative = 0
with torch.no_grad():
    for X, y in test_dataloader:
        X, y = X.to(device), y.to(device)
        pred = network(X)
        # Count correct and incorrect predictions in this batch
        for item in zip(pred, y):
            if torch.argmax(item[0]) == item[1]:
                positive += 1
            else:
                negative += 1
acc = positive / (positive + negative)
print(f"{acc * 100}%")
```
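The per-sample counting loop can also be written as one comparison over the class dimension. In PyTorch the per-batch equivalent would be `(pred.argmax(dim=1) == y).sum().item()`. Here is a numpy sketch of the same idea on hypothetical logits:

```python
import numpy as np

def accuracy(logits: np.ndarray, labels: np.ndarray) -> float:
    """Fraction of samples whose highest-scoring class equals the label."""
    return float((logits.argmax(axis=1) == labels).mean())

# Hypothetical logits for 4 samples and 3 classes
logits = np.array([[2.0, 0.1, -1.0],
                   [0.3, 0.2, 0.9],
                   [0.0, 1.5, 0.2],
                   [1.0, 3.0, 0.0]])
labels = np.array([0, 2, 1, 0])
print(accuracy(logits, labels))  # 0.75
```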

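2.2 Generating adversarial examples with FGSM

```python
# Generate adversarial examples for one test batch and visualize them
eps = [0.01, 0.05, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
for X, y in test_dataloader:
    X, y = X.to(device), y.to(device)
    X.requires_grad = True  # track gradients with respect to the input
    pred = network(X)
    network.zero_grad()
    loss = loss_fn(pred, y)
    loss.backward()  # X.grad now holds dLoss/dX

    plt.figure(figsize=(15, 8))
    plt.subplot(121)
    # Perturbation direction; clamping to [0, 1] shows only the +1 entries of the sign
    image_grid = torchvision.utils.make_grid(torch.clamp(X.grad.sign(), 0, 1))
    plt.imshow(np.transpose(image_grid.cpu().numpy(), (1, 2, 0)))

    # FGSM step with epsilon = eps[2] = 0.1; detach so the result can be converted to numpy
    X_adv = (X + eps[2] * X.grad.sign()).detach()
    X_adv = torch.clamp(X_adv, 0, 1)
    plt.subplot(122)
    image_grid = torchvision.utils.make_grid(X_adv)
    plt.imshow(np.transpose(image_grid.cpu().numpy(), (1, 2, 0)))
    break
```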

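The left panel shows the adversarial perturbation and the right panel shows the adversarial examples (epsilon = 0.1).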

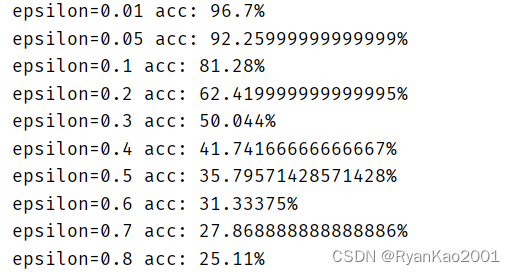

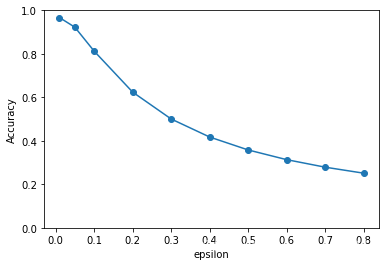
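To make the FGSM step explicit without autograd, here is a framework-free sketch on a hypothetical logistic-regression model (weights `w`, bias `b`) instead of LeNet. For that model, the gradient of the binary cross-entropy with respect to the input has the closed form (σ(w·x + b) - y)·w, which plays the role of `X.grad` in the PyTorch code. All names and values here are illustrative assumptions:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce_loss(x, y, w, b):
    """Binary cross-entropy of the logistic model on a single input, computed stably."""
    z = w @ x + b
    # -log(sigmoid(z)) = log(1 + e^-z) and -log(1 - sigmoid(z)) = log(1 + e^z)
    return y * np.logaddexp(0.0, -z) + (1 - y) * np.logaddexp(0.0, z)

def fgsm(x, y, w, b, epsilon):
    """One FGSM step: move each pixel by epsilon along the sign of dLoss/dx."""
    grad_x = (sigmoid(w @ x + b) - y) * w   # closed-form input gradient of the BCE loss
    x_adv = x + epsilon * np.sign(grad_x)
    return np.clip(x_adv, 0.0, 1.0)         # stay inside the valid pixel range

rng = np.random.default_rng(0)
w = rng.normal(size=16)    # hypothetical weights for a 16-pixel input
b = 0.0
x = np.full(16, 0.5)       # hypothetical mid-gray input
y = 1.0                    # true label
x_adv = fgsm(x, y, w, b, epsilon=0.1)
print(bce_loss(x, y, w, b), bce_loss(x_adv, y, w, b))  # second value is larger
```

Because only the sign of the gradient is used, every pixel moves by exactly ε (before clipping). The perturbation is therefore bounded by ε in the L∞ norm regardless of how large the gradient is.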

2.3 Effect of different epsilon values on classification accuracy

```python
# Replace the original samples with adversarial examples and measure accuracy,
# to see how epsilon affects LeNet's classification accuracy
acc_list = []
for epsilon in eps:
    # Reset the counters for each epsilon so accuracies do not accumulate across epsilons
    positive = 0
    negative = 0
    for X, y in test_dataloader:
        X, y = X.to(device), y.to(device)
        X.requires_grad = True
        pred = network(X)
        network.zero_grad()
        loss = loss_fn(pred, y)
        loss.backward()

        # FGSM: step along the gradient sign, then clip to the valid pixel range
        X_adv = torch.clamp(X + epsilon * X.grad.sign(), 0, 1).detach()
        with torch.no_grad():
            pred = network(X_adv)
        for item in zip(pred, y):
            if torch.argmax(item[0]) == item[1]:
                positive += 1
            else:
                negative += 1
    acc = positive / (positive + negative)
    print(f"epsilon={epsilon} acc: {acc * 100}%")
    acc_list.append(acc)

plt.xlabel("epsilon")
plt.ylabel("Accuracy")
plt.ylim(0, 1)
plt.plot(eps, acc_list, marker='o')
```
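Here is a toy version of the sweep, using a hypothetical logistic-regression model (not LeNet), that shows the structure of the loop. The correct/total counters are re-initialized for every epsilon, and accuracy is accumulated over mini-batches. The data are random and the labels come from the model itself, so clean accuracy is 100% by construction. For a linear model like this one, FGSM accuracy can only stay the same or drop as epsilon grows:

```python
import numpy as np

def fgsm_batch(X, y, w, b, epsilon):
    """FGSM for a batch of inputs (rows of X) on a logistic-regression model."""
    p = 1.0 / (1.0 + np.exp(-(X @ w + b)))
    grad_X = (p - y)[:, None] * w[None, :]   # dLoss/dX for each row
    return np.clip(X + epsilon * np.sign(grad_X), 0.0, 1.0)

def accuracy_vs_epsilon(X, y, w, b, eps_list, batch_size=100):
    accs = []
    for epsilon in eps_list:
        correct, total = 0, 0   # reset for every epsilon
        for start in range(0, len(y), batch_size):
            Xb, yb = X[start:start + batch_size], y[start:start + batch_size]
            X_adv = fgsm_batch(Xb, yb, w, b, epsilon)
            pred = (X_adv @ w + b > 0).astype(float)
            correct += int((pred == yb).sum())
            total += len(yb)
        accs.append(correct / total)
    return accs

rng = np.random.default_rng(0)
w = rng.normal(size=20)                      # hypothetical classifier weights
b = 0.0
X = rng.uniform(0.0, 1.0, size=(500, 20))    # hypothetical inputs in [0, 1]
y = (X @ w + b > 0).astype(float)            # labels the clean model predicts
accs = accuracy_vs_epsilon(X, y, w, b, [0.0, 0.05, 0.1, 0.2, 0.3])
print([round(a, 3) for a in accs])
```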

3 Results

For a given classification model, classification accuracy on FGSM adversarial examples decreases as epsilon increases.

4 Complete code

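Copyright notice: this is an original article by gaowencheng01, licensed under CC 4.0 BY-SA. When reposting, please include a link to the original source and this notice.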