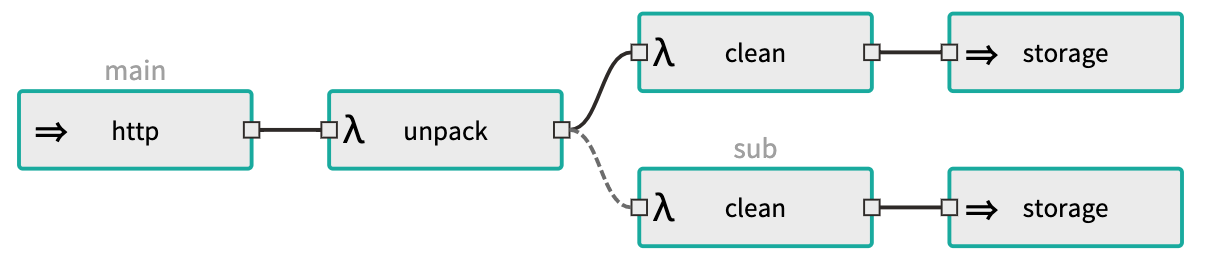

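SpringCloud DataFlow - 5. Multi-Branch Load Sharing

1. Purpose

Deploy a branch stream alongside the main stream so that both consume from the same topic and share the load, which raises processing throughput.

2. Example

2.1 Import the jars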

app import --uri https://dataflow.spring.io/kafka-maven-latest

app register --type processor --name unpack --uri maven://etl.dmt.quick:unpack-processor-kafka:0.0.1-SNAPSHOT

app register --type processor --name clean --uri maven://etl.dmt.quick:clean-processor-kafka:0.0.1-SNAPSHOT

app register --type sink --name storage --uri maven://etl.dmt.quick:storage-sink-kafka:0.0.1-SNAPSHOT

3. Create the streams

stream create --name main --definition "http --path-pattern=/quick --port=9000 | unpack | clean | storage"

stream create --name sub --definition ":main.unpack > clean | storage"

4. Deploy the streams

stream deploy --name main --properties "app.clean.spring.cloud.stream.bindings.input.group=quick,app.unpack.spring.cloud.stream.bindings.output.producer.partitionCount=2"

stream deploy --name sub --properties "app.clean.spring.cloud.stream.bindings.input.group=quick"
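Both `clean` apps read the `main.unpack` topic with the same binding group (`quick`), so Kafka should treat them as members of one consumer group and divide the two partitions between them. A minimal sketch for checking this from the Kafka side follows; the function name `describe_group` is hypothetical, and the broker address `localhost:9092` is an assumption that is not stated in the original setup.

```shell
#!/usr/bin/env bash
# Hypothetical helper: show the members and partition assignment of a consumer group.
# Assumes it is run from the Kafka installation directory and that the broker
# listens on localhost:9092 (an assumption, adjust to your environment).
describe_group() {
  local group="${1:-quick}"
  local broker="${2:-localhost:9092}"
  bin/kafka-consumer-groups.sh --bootstrap-server "$broker" --describe --group "$group"
}
```

Calling `describe_group quick` after both streams are deployed should list the partitions of `main.unpack` together with the consumer that owns each one.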

5. Test

5.1 Inspect the Kafka topic

bin/kafka-topics.sh --zookeeper localhost:2181 --topic main.unpack --describe

Topic:main.unpack PartitionCount:2 ReplicationFactor:1 Configs:

Topic: main.unpack Partition: 0 Leader: 0 Replicas: 0 Isr: 0

Topic: main.unpack Partition: 1 Leader: 0 Replicas: 0 Isr: 0
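The output above confirms that `main.unpack` was created with two partitions, matching the `partitionCount=2` deployment property. As a small sketch, the check can be scripted by counting the per-partition lines of the `--describe` output; the function name `count_partitions` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count the per-partition lines in `kafka-topics.sh --describe`
# output read from stdin. The summary line ("PartitionCount:2") is not counted
# because it does not contain "Partition: <number>".
count_partitions() {
  grep -c 'Partition: [0-9]'
}
```

Usage, assuming the same ZooKeeper address as above: `bin/kafka-topics.sh --zookeeper localhost:2181 --topic main.unpack --describe | count_partitions`.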

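5.2 Send an HTTP request and check the logs

1. Open the dashboard (http://localhost:9393/dashboard)

2. Switch to the Runtime tab

3. Click "main.clean-v1-0". The stdout entry gives the location of the log file; follow it with tail -f

4. Do the same for "sub.clean-v1-0"

5. Send an HTTP request, for example with curl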

curl -H "Content-Type:application/json" -X POST --data '{"message": "hello world"}' http://localhost:9000/quick
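A single request lands on only one partition, so it shows up in only one of the two `clean` logs. To watch the load being shared, it may help to send a batch of requests. The sketch below wraps the curl command above in a loop; the function names `make_payload` and `send_batch` are hypothetical, and the sequence number added to the message is only there to make the log lines distinguishable.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a JSON payload carrying a sequence number.
make_payload() {
  printf '{"message": "hello world %s"}' "$1"
}

# Hypothetical helper: POST <count> messages to the http source of the main stream.
# The default URL is the endpoint configured in the stream definition above.
send_batch() {
  local count="$1"
  local url="${2:-http://localhost:9000/quick}"
  local i
  for i in $(seq 1 "$count"); do
    curl -s -H "Content-Type:application/json" -X POST \
      --data "$(make_payload "$i")" "$url"
  done
}
```

Running `send_batch 10` while tailing both logs should show messages arriving in "main.clean-v1-0" and "sub.clean-v1-0". How records are spread over the two partitions depends on the producer's partitioning, so an even split is not guaranteed.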

Reposted from: https://my.oschina.net/tianshl/blog/3097725