1. Overview

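Relational databases are the main place where enterprises store business data, and MySQL is a typical example. Big-data jobs rarely talk to MySQL directly while processing, but the final results have to be consumed by external applications, and the store those applications read from is very often MySQL.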

2. Usage

2.1 Add dependencies (pay attention to the driver version)

    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-connector-jdbc_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
    </dependency>
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
        <version>5.1.47</version>
    </dependency>

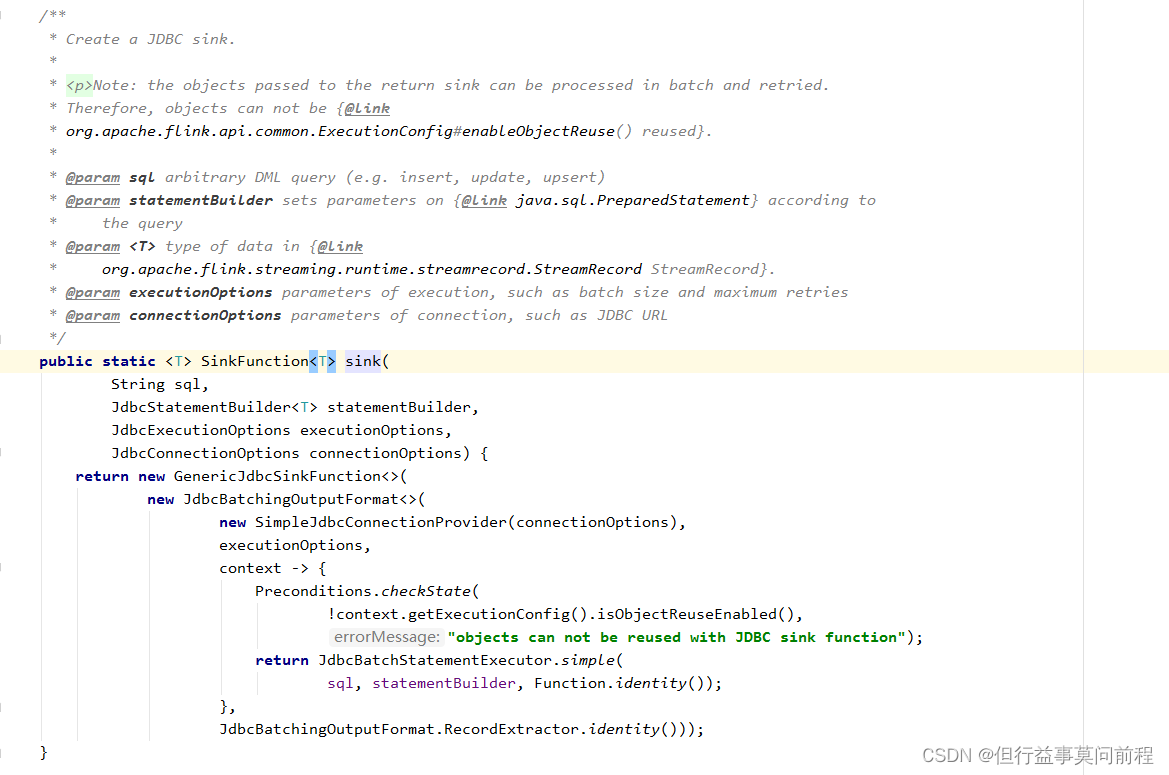

2.2 Source code

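Data is written in batches. The following parameters must be provided:

sql: the DML statement (e.g. insert, update, upsert)

statementBuilder: sets the values of the placeholder parameters

executionOptions: sets the batch size, the maximum number of retries, and similar options

connectionOptions: sets the connection parameters, such as the JDBC URL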
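
To make the maximum-retries setting in executionOptions more concrete, here is a minimal sketch of a bounded retry loop that uses only the Java standard library. `RetryingExecutor` and `execute` are hypothetical names invented for this illustration; this is not the connector's implementation, which may additionally reconnect or wait between attempts.

```java
import java.util.concurrent.Callable;

// Hypothetical helper for illustration only; not part of Flink.
class RetryingExecutor {

    // Runs the action once and, on failure, retries it up to maxRetries more times.
    static <T> T execute(Callable<T> action, int maxRetries) throws Exception {
        Exception last = null;
        for (int attempt = 0; attempt <= maxRetries; attempt++) {
            try {
                return action.call();
            } catch (Exception e) {
                // Remember the failure and try again if attempts remain.
                last = e;
            }
        }
        throw new Exception("Failed after " + (maxRetries + 1) + " attempts", last);
    }
}
```

With `maxRetries = 5`, an action that fails twice and then succeeds is called three times in total; an action that never succeeds is called six times before the exception is propagated.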

Core data-processing logic:

The GenericJdbcSinkFunction class hands every record to the output format:

    @Override
    public void invoke(T value, Context context) throws IOException {
        outputFormat.writeRecord(value);
    }

The JdbcBatchingOutputFormat class checks whether batchCount has reached the configured batch size and, if it has, calls flush():

    @Override
    public final synchronized void writeRecord(In record) throws IOException {
        checkFlushException();
        try {
            addToBatch(record, jdbcRecordExtractor.apply(record));
            batchCount++;
            if (executionOptions.getBatchSize() > 0
                    && batchCount >= executionOptions.getBatchSize()) {
                flush();
            }
        } catch (Exception e) {
            throw new IOException("Writing records to JDBC failed.", e);
        }
    }
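
The size-based trigger can be tried out without Flink. The following is a minimal standard-library sketch of the same idea: buffer each record, then flush once the count reaches the threshold. `SizeTriggeredBuffer`, `write`, `pending`, and the `Consumer` callback are hypothetical names chosen for illustration, not Flink classes or methods.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical illustration class; not part of Flink.
class SizeTriggeredBuffer<T> {
    private final int batchSize;
    private final Consumer<List<T>> flusher;
    private final List<T> batch = new ArrayList<>();

    SizeTriggeredBuffer(int batchSize, Consumer<List<T>> flusher) {
        this.batchSize = batchSize;
        this.flusher = flusher;
    }

    // Same shape as writeRecord(): add to the batch first, then flush when the threshold is reached.
    synchronized void write(T record) {
        batch.add(record);
        if (batchSize > 0 && batch.size() >= batchSize) {
            flush();
        }
    }

    // Hands a copy of the buffered records to the callback and clears the buffer.
    synchronized void flush() {
        if (batch.isEmpty()) {
            return;
        }
        flusher.accept(new ArrayList<>(batch));
        batch.clear();
    }

    synchronized int pending() {
        return batch.size();
    }
}
```

With a batch size of 2, writing five records triggers two flushes and leaves one record pending. That leftover record is the reason a second, time-based trigger is useful.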

Besides flushing by batch size, the open() method of JdbcBatchingOutputFormat also schedules a periodic task that flushes the buffered data every BatchInterval:

    @Override
    public void open(int taskNumber, int numTasks) throws IOException {
        super.open(taskNumber, numTasks);
        jdbcStatementExecutor = createAndOpenStatementExecutor(statementExecutorFactory);
        if (executionOptions.getBatchIntervalMs() != 0 && executionOptions.getBatchSize() != 1) {
            this.scheduler =
                    Executors.newScheduledThreadPool(
                            1, new ExecutorThreadFactory("jdbc-upsert-output-format"));
            this.scheduledFuture =
                    this.scheduler.scheduleWithFixedDelay(
                            () -> {
                                synchronized (JdbcBatchingOutputFormat.this) {
                                    if (!closed) {
                                        try {
                                            flush();
                                        } catch (Exception e) {
                                            flushException = e;
                                        }
                                    }
                                }
                            },
                            executionOptions.getBatchIntervalMs(),
                            executionOptions.getBatchIntervalMs(),
                            TimeUnit.MILLISECONDS);
        }
    }
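
The interval-based trigger can also be sketched with the standard library alone. The class below is a simplified illustration, assuming a single background thread and a `Consumer` callback in place of the JDBC executor. `IntervalFlushingBuffer` and its members are hypothetical names, not Flink APIs.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

// Hypothetical illustration class; not part of Flink.
class IntervalFlushingBuffer<T> implements AutoCloseable {
    private final List<T> batch = new ArrayList<>();
    private final Consumer<List<T>> flusher;
    private final ScheduledExecutorService scheduler;
    private boolean closed = false;
    private volatile RuntimeException flushException;

    IntervalFlushingBuffer(long intervalMs, Consumer<List<T>> flusher) {
        this.flusher = flusher;
        // Daemon thread so a forgotten close() does not keep the JVM alive.
        this.scheduler = Executors.newScheduledThreadPool(1, r -> {
            Thread t = new Thread(r, "interval-flush");
            t.setDaemon(true);
            return t;
        });
        this.scheduler.scheduleWithFixedDelay(() -> {
            synchronized (this) {
                if (!closed) {
                    try {
                        flush();
                    } catch (RuntimeException e) {
                        // Keep the failure so the next write() can report it.
                        flushException = e;
                    }
                }
            }
        }, intervalMs, intervalMs, TimeUnit.MILLISECONDS);
    }

    synchronized void write(T record) {
        if (flushException != null) {
            throw new IllegalStateException("Periodic flush failed", flushException);
        }
        batch.add(record);
    }

    synchronized void flush() {
        if (batch.isEmpty()) {
            return;
        }
        flusher.accept(new ArrayList<>(batch));
        batch.clear();
    }

    // Stops the timer and flushes whatever is still buffered.
    @Override
    public synchronized void close() {
        closed = true;
        scheduler.shutdownNow();
        flush();
    }
}
```

Both the writer and the timer thread synchronize on the same object, so a timed flush never interleaves with a write. Storing the exception instead of throwing it from the timer thread lets the failure surface on the thread that writes records.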

2.3 Code

POJO class

    import java.sql.Timestamp;

    // Flink POJO: public class, public no-argument constructor, accessible fields.
    public class Event {
        public String user;
        public String url;
        public long timestamp;

        public Event() {
        }

        public Event(String user, String url, long timestamp) {
            this.user = user;
            this.url = url;
            this.timestamp = timestamp;
        }

        public String getUser() {
            return user;
        }

        public void setUser(String user) {
            this.user = user;
        }

        public String getUrl() {
            return url;
        }

        public void setUrl(String url) {
            this.url = url;
        }

        public long getTimestamp() {
            return timestamp;
        }

        public void setTimestamp(long timestamp) {
            this.timestamp = timestamp;
        }

        @Override
        public String toString() {
            return "Event{" +
                    "user='" + user + '\'' +
                    ", url='" + url + '\'' +
                    ", timestamp=" + new Timestamp(timestamp) +
                    '}';
        }
    }

Writing to MySQL from Flink

(1) Read from Kafka

(2) Map each record to an Event with flatMap

(3) Write to MySQL with a sink

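    // Imports required by the job below. FlinkKafkaConsumer also needs the
    // flink-connector-kafka dependency in addition to the two listed in 2.1.
    import org.apache.flink.api.common.functions.FlatMapFunction;
    import org.apache.flink.api.common.serialization.SimpleStringSchema;
    import org.apache.flink.connector.jdbc.JdbcConnectionOptions;
    import org.apache.flink.connector.jdbc.JdbcExecutionOptions;
    import org.apache.flink.connector.jdbc.JdbcSink;
    import org.apache.flink.connector.jdbc.JdbcStatementBuilder;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;
    import org.apache.flink.util.Collector;

    import java.sql.PreparedStatement;
    import java.sql.SQLException;
    import java.util.Properties;

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env =
                StreamExecutionEnvironment.getExecutionEnvironment();

        // (1) Read from Kafka
        Properties properties = new Properties();
        properties.setProperty("bootstrap.servers", "192.168.42.102:9092,192.168.42.103:9092,192.168.42.104:9092");
        properties.setProperty("group.id", "consumer-group");
        properties.setProperty("key.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        properties.setProperty("value.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        properties.setProperty("auto.offset.reset", "latest");

        DataStreamSource<String> stream = env.addSource(
                new FlinkKafkaConsumer<String>(
                        "clicks",
                        new SimpleStringSchema(),
                        properties));

        // (2) Turn each "user,url,timestamp" line into an Event
        SingleOutputStreamOperator<Event> flatMap = stream.flatMap(new FlatMapFunction<String, Event>() {
            @Override
            public void flatMap(String s, Collector<Event> collector) throws Exception {
                String[] split = s.split(",");
                collector.collect(new Event(split[0], split[1], Long.parseLong(split[2])));
            }
        });

        // Binds the fields of an Event to the three placeholders of the INSERT statement
        JdbcStatementBuilder<Event> jdbcStatementBuilder = new JdbcStatementBuilder<Event>() {
            @Override
            public void accept(PreparedStatement preparedStatement, Event event) throws SQLException {
                preparedStatement.setString(1, event.getUser());
                preparedStatement.setString(2, event.getUrl());
                preparedStatement.setLong(3, event.getTimestamp());
            }
        };

        JdbcConnectionOptions jdbcConnectionOptions = new JdbcConnectionOptions.JdbcConnectionOptionsBuilder()
                .withUrl("jdbc:mysql://192.168.0.23/demo")
                // For MySQL 5.7 with mysql-connector-java 5.1.x (as declared in 2.1), use
                // "com.mysql.jdbc.Driver"; Connector/J 8.x uses "com.mysql.cj.jdbc.Driver".
                .withDriverName("com.mysql.jdbc.Driver")
                .withUsername("root")
                .withPassword("C/hEz8ygYA4g459j/TsQHg==htdata")
                .build();

        // Flush after 1000 records or every 200 ms, whichever comes first
        JdbcExecutionOptions executionOptions = JdbcExecutionOptions.builder()
                .withBatchSize(1000)
                .withBatchIntervalMs(200)
                .withMaxRetries(5)
                .build();

        // (3) Write to MySQL
        flatMap.addSink(JdbcSink.sink(
                "INSERT INTO event (user, url, timestamp) VALUES (?, ?, ?)",
                jdbcStatementBuilder,
                executionOptions,
                jdbcConnectionOptions));

        env.execute();
    }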
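
The flatMap step in the job assumes that every Kafka record contains exactly three comma-separated fields and that the third one is a number; a malformed line would make the function throw. As a sketch of a more defensive variant, the parsing can be pulled into a small helper that returns an empty result for bad input. `ClickLineParser` and `ParsedClick` are hypothetical names used only for this example, and the code depends only on the Java standard library.

```java
import java.util.Optional;

// Hypothetical value class standing in for Event, so the example is self-contained.
class ParsedClick {
    final String user;
    final String url;
    final long timestamp;

    ParsedClick(String user, String url, long timestamp) {
        this.user = user;
        this.url = url;
        this.timestamp = timestamp;
    }
}

// Hypothetical helper; not part of Flink.
class ClickLineParser {

    // Parses "user,url,timestamp"; returns Optional.empty() for malformed input.
    static Optional<ParsedClick> parse(String line) {
        if (line == null) {
            return Optional.empty();
        }
        String[] parts = line.split(",");
        if (parts.length != 3) {
            return Optional.empty();
        }
        try {
            long timestamp = Long.parseLong(parts[2].trim());
            return Optional.of(new ParsedClick(parts[0].trim(), parts[1].trim(), timestamp));
        } catch (NumberFormatException e) {
            // The third field is not a valid long.
            return Optional.empty();
        }
    }
}
```

Inside a flatMap function, the result could be forwarded with `ClickLineParser.parse(s).ifPresent(...)`, so that malformed lines are skipped instead of failing the job. Whether to skip, log, or fail on bad records is a design decision that depends on the pipeline.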

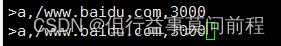

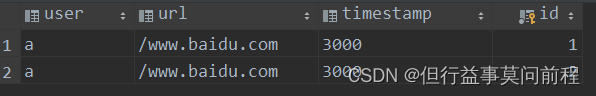

Result

./kafka-console-producer.sh --broker-list 192.168.42.102:9092,192.168.42.103:9092,192.168.42.104:9092 --topic clicks

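Copyright notice: this is an original article by javahelpyou, licensed under CC 4.0 BY-SA. When reposting, please include a link to the original source and this notice.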