Scraping Rolling News with a Python Crawler

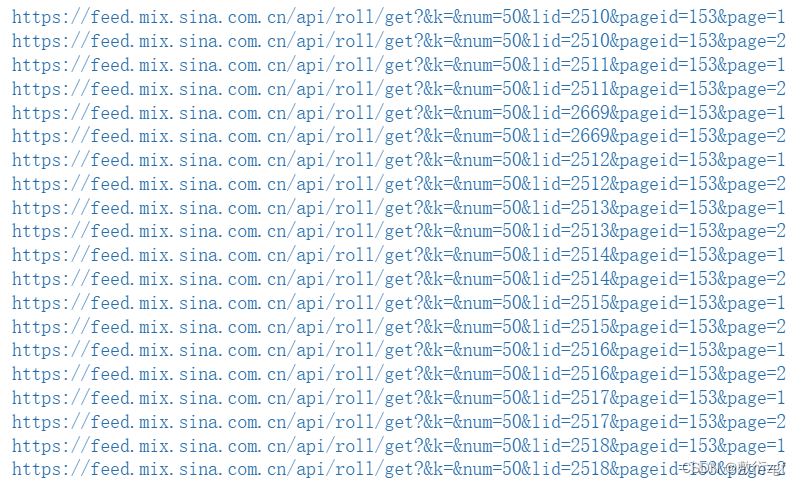

1. Examine the URL pattern of the target site

https://news.sina.com.cn/roll/#pageid=153&lid=2509&k=&num=50&page=1

https://news.sina.com.cn/roll/#pageid=153&lid=2510&k=&num=50&page=1 国内 (domestic)

https://news.sina.com.cn/roll/#pageid=153&lid=2511&k=&num=50&page=1 国际 (international)

https://news.sina.com.cn/roll/#pageid=153&lid=2669&k=&num=50&page=1 社会 (society)

https://news.sina.com.cn/roll/#pageid=153&lid=2512&k=&num=50&page=1 体育 (sports)

…

The news categories differ only in their lid value. num=50 means each page shows 50 items, and page is the current page number.
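As a minimal sketch of how these parameters combine, the snippet below assembles a request URL with `urllib.parse.urlencode`. The endpoint is the feed API that the crawler in this article requests; the helper name `build_roll_url` and the constant `API_BASE` are hypothetical and exist only for illustration.

```python
from urllib.parse import urlencode

# Feed API endpoint requested by the crawler in this article.
API_BASE = "https://feed.mix.sina.com.cn/api/roll/get"


def build_roll_url(lid, page, pageid=153, num=50):
    """Build a rolling-news API URL (hypothetical helper)."""
    params = {"pageid": pageid, "lid": lid, "k": "", "num": num, "page": page}
    return API_BASE + "?" + urlencode(params)


# Page 1 of the 国内 (domestic) category, lid=2510
print(build_roll_url(2510, 1))
```

Building the query from a dictionary keeps the parameter names in one place, so switching category or page only changes the arguments.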

2. Define a dictionary of lid values

Based on this, we can first define a dictionary that maps each news category label to its lid value.

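import pandas as pd
import requests
import json
import re
import numpy as np

# Note: in a single script, this block must come after the get_data class from section 3.
if __name__ == "__main__":
    # Category label -> lid value
    data_dic = {"国内": 2510,  # domestic
                "国际": 2511,  # international
                "社会": 2669,  # society
                "体育": 2512,  # sports
                "娱乐": 2513,  # entertainment
                "军事": 2514,  # military
                "科技": 2515,  # technology
                "财经": 2516,  # finance
                "股市": 2517,  # stock market
                "美股": 2518}  # US stocks
    url = "https://feed.mix.sina.com.cn/api/roll/get?&k=&num=50"  # num=50: each page contains 50 items
    get = get_data(data_dic, url)
    get.main()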

3. Define a class to fetch the data

class get_data():
    def __init__(self, dic, url):
        self.url = url  # base API URL
        self.dic = dic  # category label -> lid

    def get_url(self, lid, page, pageid=153):  # pageid is always 153
        # Corresponding page: https://news.sina.com.cn/roll/#pageid=153&lid=2510&k=&num=50&page=2
        return self.url + "&lid=" + str(lid) + "&pageid=" + str(pageid) + "&page=" + str(page)

    def get_json_url(self, url):
        # Return [url, intro, title] for every item on one API page
        out = []
        json_req = requests.get(url)
        user_dict = json.loads(json_req.text)
        print(url)
        for dic in user_dict["result"]["data"]:
            out.append([dic["url"], dic['intro'], dic['title']])
        return out

    def getfind_data(self, list1, label):
        # Download each article and return [date, title, intro, content, label] rows
        out_date = []
        for line in list1:
            try:
                req = requests.get(line[0])
                req.encoding = "utf-8"
                req = req.text
                # Article paragraphs. Alternative patterns tried earlier, kept for reference:
                # re.findall(r'<font cms-style="font-L strong-Bold">(.*?)</font>', req, re.S)
                # re.findall('<!-- 行情图end -->.*<!-- news_keyword_pub', req, re.S)
                # re.findall('<!-- 正文 start -->.*<!--', req, re.S)
                # re.findall('<!--新增众测推广文案-->.*?<!-- ', req, re.S)
                content = re.findall('<p cms-style="font-L">(.*?)</p>', req, re.S)
                # Treat the first 10-character path segment (positions 3 to 6) as the date
                for part in line[0].split("/")[3:7]:
                    if len(part) == 10:
                        out_date.append([part, line[2], line[1], content, label])
                        break
            except Exception:
                # Skip articles that fail to download
                pass
        return out_date

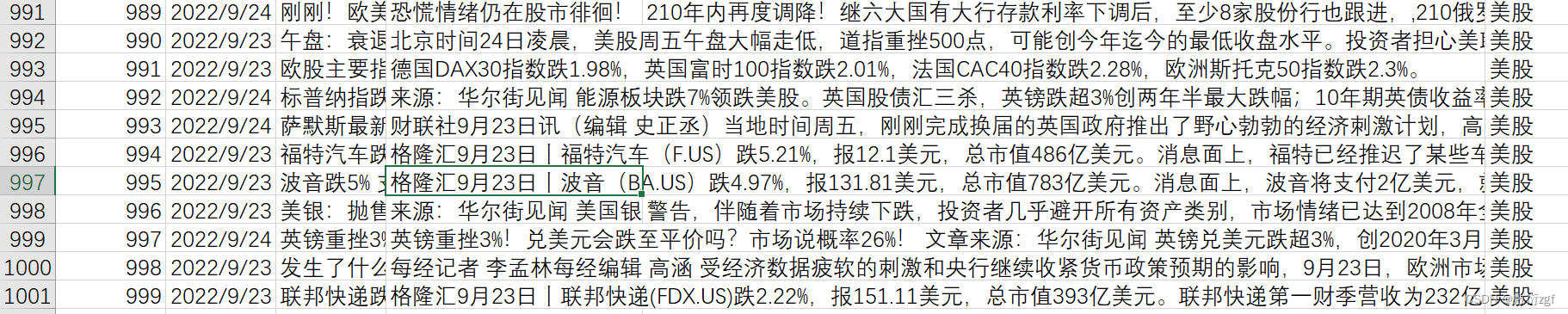
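The extraction logic in `getfind_data` can be tried offline. The sketch below runs the same paragraph regex on a made-up HTML fragment and picks the date out of a made-up URL. It assumes the date appears as a `YYYY-MM-DD` path segment, which the length-10 check suggests but does not confirm; `find_date`, `sample_url`, and `sample_html` are hypothetical names and data.

```python
import re

# Hypothetical article URL and HTML fragment, made up for this example.
sample_url = "https://news.sina.com.cn/c/2022-05-01/doc-example.shtml"
sample_html = (
    '<p cms-style="font-L">First paragraph.</p>\n'
    '<p cms-style="font-L">Second\nparagraph.</p>'
)

DATE_RE = re.compile(r"\d{4}-\d{2}-\d{2}")


def find_date(url):
    """Return the first path segment shaped like YYYY-MM-DD, or None."""
    for part in url.split("/"):
        if DATE_RE.fullmatch(part):
            return part
    return None


# re.S lets "." match newlines, so paragraphs spanning several lines are captured.
paragraphs = re.findall(r'<p cms-style="font-L">(.*?)</p>', sample_html, re.S)

print(find_date(sample_url))
print(paragraphs)
```

Matching the segment's shape rather than only its length avoids picking up other 10-character path segments.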

    def main(self):
        out_data_list = []  # collected rows
        for label, lid in self.dic.items():
            for page in range(1, 3):  # crawl the first 2 pages
                he_url = self.get_url(lid, page)
                json_url_list = self.get_json_url(he_url)
                output_data = self.getfind_data(json_url_list, label)
                out_data_list += output_data

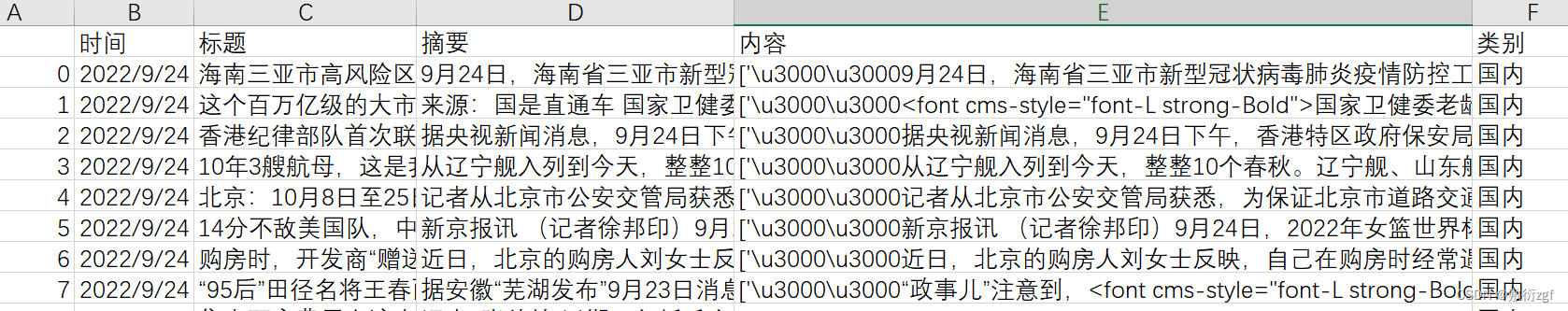

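        # Columns: date, title, summary, content, category.
        # The list is passed directly; np.array can fail on recent NumPy versions when the content lists differ in length.
        data = pd.DataFrame(out_data_list, columns=['时间', '标题', '摘要', '内容', '类别'])
        data.to_csv("data.csv", encoding="utf_8_sig")  # plain encoding='utf-8' still shows garbled text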
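The difference between the two encodings is the byte order mark: `utf_8_sig` writes the three bytes `EF BB BF` at the start of the file, which spreadsheet programs such as Excel typically rely on to recognize UTF-8. The sketch below writes a made-up one-row frame to a temporary file and inspects those bytes; the file name `demo.csv` and the sample row are illustrative only.

```python
import os
import tempfile

import pandas as pd

# Made-up row using two of the article's column names.
df = pd.DataFrame({"标题": ["示例"], "类别": ["国内"]})

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "demo.csv")
    df.to_csv(path, encoding="utf_8_sig")

    # The first three bytes of the file are the UTF-8 byte order mark.
    with open(path, "rb") as f:
        head = f.read(3)

    # Reading the file back still yields the original column names.
    restored = pd.read_csv(path, index_col=0)

print(head == b"\xef\xbb\xbf")
print(list(restored.columns))
```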

4. Data cleaning

import pandas as pd
import re

data = pd.read_csv("data.csv", index_col=0)

def function_(x):
    # True when the cleaned content has more than 2 characters
    return len(x) > 2

def function1(x):
    # Keep runs of Chinese characters ([\u4e00-\u9fa5]) that end in one of the listed
    # punctuation marks or a digit; x[20:] skips the first 20 characters
    res1 = ''.join(re.findall('[\u4e00-\u9fa5]*[",。!”%“:0-9]', x[20:]))
    res1 = res1.replace("\"", "")
    res1 = res1.replace("引文", "")  # "quotation"
    res1 = res1.replace("正文", "")  # "body text"
    res1 = res1.replace("30003000", "")  # likely residue of two escaped \u3000 (ideographic space) characters
    return res1

# Extract the article content
data["内容"] = data["内容"].apply(function1)

# Remove duplicate rows
data = data.drop_duplicates()

# Slicing the URL does not always yield a date, so drop the rows where it did not
def out_data(x):
    return "2022" in x or "2021" in x or "2020" in x

data = data[[out_data(i) for i in data["时间"].astype(str)]]
data.to_csv("pre_data.csv", encoding="utf_8_sig")

# Number of articles per category whose cleaned content is non-trivial
print(data[data["内容"].apply(function_)]["类别"].value_counts())
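The year check only looks for the substrings 2020 to 2022, so it has to be updated every year and accepts any string that happens to contain those digits. One possible alternative, sketched here under the assumption that valid values have the form `YYYY-MM-DD`, is to let `pd.to_datetime` decide which values are dates. The sample values are made up.

```python
import pandas as pd

# Made-up values standing in for the "时间" (date) column.
dates = pd.Series(["2022-05-01", "zhongguo12", "2021-13-40", "2019-12-31"])

# Values that do not parse as YYYY-MM-DD become NaT and are filtered out.
parsed = pd.to_datetime(dates, format="%Y-%m-%d", errors="coerce")
mask = parsed.notna()

print(dates[mask].tolist())
```

Unlike the substring check, this keeps every valid date regardless of year, so a year restriction would have to be added separately if it is wanted.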

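Copyright notice: This is an original article by qq_45556665, licensed under CC 4.0 BY-SA. When reposting, include a link to the original source and this notice.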