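I. Common Libraries

1. requests: used for sending HTTP requests.

requests.get(url)

2. selenium: used for browser automation.

3. lxml: an HTML/XML parsing library.

4. beautifulsoup: an HTML/XML parsing library.

5. pyquery: a web-page parsing library, said to be easier to use than BeautifulSoup; its syntax is very similar to jQuery's.

6. pymysql: a storage library for working with MySQL databases.

7. pymongo: for working with MongoDB databases.

8. redis: client for Redis, a non-relational (NoSQL) database.

9. jupyter: an interactive notebook that runs in the browser.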

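II. What Is urllib

urllib is Python's built-in HTTP request library. It consists of four modules:

urllib.request: the request module, used to send requests the way a browser would.

urllib.error: the exception-handling module.

urllib.parse: the URL-parsing module, a utility module for tasks such as splitting and joining URLs.

urllib.robotparser: the robots.txt parsing module.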
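As a small illustration of the last module, the sketch below feeds a made-up robots.txt to urllib.robotparser. The rules and the `MySpider` user-agent name are hypothetical; because parse() takes the file's lines directly, nothing is downloaded.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""

rp = RobotFileParser()
# parse() accepts an iterable of lines, so no network access is needed.
rp.parse(ROBOTS_TXT.splitlines())

print(rp.can_fetch('MySpider', 'http://www.example.com/index.html'))      # True
print(rp.can_fetch('MySpider', 'http://www.example.com/private/a.html'))  # False
```

In real use you would call set_url() and read() instead of parse(), which fetches the site's robots.txt over the network.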

Differences between Python 2 and Python 3

Python 2:

import urllib2

response = urllib2.urlopen('http://www.baidu.com')

Python 3:

import urllib.request

response = urllib.request.urlopen('http://www.baidu.com')

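Usage:

urlopen sends a request to the server and returns the response.

urllib.request.urlopen(url, data=None, [timeout, ]*, cafile=None, capath=None, cadefault=False, context=None)

data is the request body (bytes), timeout is in seconds, and context takes an ssl.SSLContext for HTTPS. The cafile, capath and cadefault parameters have been deprecated since Python 3.6 and were removed in Python 3.13; use context instead.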

Examples:

Example 1:

import urllib.request

response = urllib.request.urlopen('http://www.baidu.com')

print(response.read().decode('utf-8'))

Example 2:

import urllib.request

import urllib.parse

data = bytes(urllib.parse.urlencode({'word': 'hello'}), encoding='utf8')

response = urllib.request.urlopen('http://httpbin.org/post', data=data)

print(response.read())

Note: passing data sends the request as a POST; without data it is sent as a GET.
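This rule can be checked without sending anything: urlopen wraps a URL string in a urllib.request.Request, and Request.get_method() reports POST when data is not None and GET otherwise. A minimal sketch; the httpbin.org URLs are only labels here and are never contacted.

```python
import urllib.parse
import urllib.request

# Encode the form as in Example 2: urlencode() returns str, the body must be bytes.
data = bytes(urllib.parse.urlencode({'word': 'hello'}), encoding='utf8')

get_req = urllib.request.Request('http://httpbin.org/get')
post_req = urllib.request.Request('http://httpbin.org/post', data=data)

# Building a Request sends nothing, so this runs offline.
print(get_req.get_method())   # GET
print(post_req.get_method())  # POST
print(post_req.data)          # b'word=hello'
```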

Example 3:

Timeout test

import urllib.request

response = urllib.request.urlopen('http://httpbin.org/get', timeout=1)

print(response.read())

With a 1-second timeout this completes normally.

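import socket

import urllib.request

import urllib.error

try:

    response = urllib.request.urlopen('http://httpbin.org/get', timeout=0.1)

except urllib.error.URLError as e:

    # a timeout while connecting is wrapped in URLError

    if isinstance(e.reason, socket.timeout):

        print('TIME OUT')

except socket.timeout:

    # a timeout while waiting for the response is raised directly

    print('TIME OUT')

This prints TIME OUT, because 0.1 seconds is not enough time to get a response.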
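Since httpbin.org may be slow or unreachable, this sketch reproduces the timeout against a local socket that accepts the connection but never replies (an assumption made so it runs offline). It catches both cases because, in current CPython as far as I know, only connect-time timeouts are wrapped in URLError; a timeout while waiting for the response surfaces as a bare socket.timeout. The `fetch` helper is hypothetical.

```python
import socket
import urllib.error
import urllib.request

def fetch(url, timeout):
    """Hypothetical helper: return the body, or 'TIME OUT' on a timeout."""
    # ProxyHandler({}) ignores proxy environment variables, so the
    # local address below is contacted directly.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as response:
            return response.read()
    except urllib.error.URLError as e:
        # Timeout while connecting: wrapped in URLError.
        if isinstance(e.reason, socket.timeout):
            return 'TIME OUT'
        raise
    except socket.timeout:
        # Timeout while waiting for the response: raised as-is.
        return 'TIME OUT'

# A local socket that completes the TCP handshake but never answers.
silent = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
silent.bind(('127.0.0.1', 0))  # port 0 = let the OS pick a free port
silent.listen(1)
port = silent.getsockname()[1]

result = fetch(f'http://127.0.0.1:{port}/', timeout=0.2)
print(result)  # TIME OUT
silent.close()
```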

Response

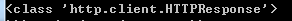

Response type

import urllib.request

response = urllib.request.urlopen('https://www.python.org')

print(type(response))

Output: <class 'http.client.HTTPResponse'>

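Status code and response headers

import urllib.request

response = urllib.request.urlopen('http://www.python.org')

print(response.status)  # 200 on success

print(response.getheaders())  # all response headers as (name, value) tuples

print(response.getheader('Server'))  # value of the Server response header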
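To look at these response attributes without depending on python.org, the sketch below starts a throwaway local server with http.server. The `HelloHandler` class and its plain-text body are made up for the demo; BaseHTTPRequestHandler adds the Server and Date headers on its own.

```python
import http.client
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

class HelloHandler(BaseHTTPRequestHandler):
    # Hypothetical handler: always answers 200 with a short text body.
    def do_GET(self):
        body = b'hello'
        self.send_response(200)  # also sends Server and Date headers
        self.send_header('Content-Type', 'text/plain')
        self.send_header('Content-Length', str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the console quiet

server = HTTPServer(('127.0.0.1', 0), HelloHandler)  # port 0 = pick a free port
threading.Thread(target=server.serve_forever, daemon=True).start()

# Bypass proxy environment variables so the local server is reached directly.
opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
with opener.open(f'http://127.0.0.1:{server.server_port}/') as response:
    is_http_response = isinstance(response, http.client.HTTPResponse)
    status = response.status
    headers = response.getheaders()           # list of (name, value) tuples
    server_name = response.getheader('Server')
    body = response.read().decode('utf-8')

server.shutdown()
server.server_close()
print(is_http_response, status, server_name, body)
```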

III. Request: Adding Headers

import urllib.request

request = urllib.request.Request('https://python.org')

response = urllib.request.urlopen(request)

print(response.read().decode('utf-8'))

Example:

from urllib import request, parse

url = 'http://httpbin.org/post'

headers = {

    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.75 Safari/537.36',

    'Host': 'httpbin.org'

}

form = {

    'name': 'Germey'

}

data = bytes(parse.urlencode(form), encoding='utf8')

req = request.Request(url=url, data=data, headers=headers, method='POST')

response = request.urlopen(req)

print(response.read().decode('utf-8'))
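A Request can be inspected before it is sent, which helps when debugging headers. This offline sketch uses a shortened User-Agent string (an assumption for brevity) and adds one extra header with add_header(). Note that Request stores header names via str.capitalize(), so 'User-Agent' is kept as 'User-agent' and get_header() must use that spelling.

```python
from urllib import parse, request

url = 'http://httpbin.org/post'
# Shortened User-Agent string, for illustration only.
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64)'}
data = bytes(parse.urlencode({'name': 'Germey'}), encoding='utf8')

req = request.Request(url=url, data=data, headers=headers, method='POST')
# Headers can also be added one at a time after the Request is built.
req.add_header('Accept', 'application/json')

# Nothing has been sent yet, so the request can be inspected offline.
print(req.get_method())               # POST
print(req.data)                       # b'name=Germey'
print(req.get_header('User-agent'))   # Mozilla/5.0 (Windows NT 6.1; WOW64)
print(req.get_header('User-Agent'))   # None (names are stored capitalized)
print(sorted(req.header_items()))
```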

IV. Proxies

import urllib.request

# assumes a proxy is actually listening locally on port 9743

proxy_handler = urllib.request.ProxyHandler({

    'http': 'http://127.0.0.1:9743',

    'https': 'http://127.0.0.1:9743',

})

opener = urllib.request.build_opener(proxy_handler)

response = opener.open('http://httpbin.org/get')

print(response.read())

V. Cookies

import http.cookiejar, urllib.request

cookie = http.cookiejar.CookieJar()

handler = urllib.request.HTTPCookieProcessor(cookie)

opener = urllib.request.build_opener(handler)

response = opener.open('http://www.baidu.com')

for item in cookie:

    print(item.name + '=' + item.value)
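To watch HTTPCookieProcessor fill the jar without relying on www.baidu.com, this sketch points the same code at a local server. The `CookieHandler` class and its two cookies (session, theme) are hypothetical stand-ins for whatever a real site sends in its Set-Cookie headers.

```python
import http.cookiejar
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

class CookieHandler(BaseHTTPRequestHandler):
    # Hypothetical handler that sets two cookies on every GET.
    def do_GET(self):
        self.send_response(200)
        self.send_header('Set-Cookie', 'session=abc123; Path=/')
        self.send_header('Set-Cookie', 'theme=dark; Path=/')
        self.send_header('Content-Length', '0')
        self.end_headers()

    def log_message(self, *args):
        pass  # keep the console quiet

server = HTTPServer(('127.0.0.1', 0), CookieHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

cookie = http.cookiejar.CookieJar()
opener = urllib.request.build_opener(
    urllib.request.ProxyHandler({}),             # reach the local server directly
    urllib.request.HTTPCookieProcessor(cookie),  # store Set-Cookie headers in the jar
)
with opener.open(f'http://127.0.0.1:{server.server_port}/') as response:
    response.read()

server.shutdown()
server.server_close()

for item in cookie:
    print(item.name + '=' + item.value)
```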

Saving cookies, method 1: MozillaCookieJar (Mozilla/Netscape cookies.txt format)

import http.cookiejar, urllib.request

filename = 'cookie.txt'

cookie = http.cookiejar.MozillaCookieJar(filename)

handler = urllib.request.HTTPCookieProcessor(cookie)

opener = urllib.request.build_opener(handler)

response = opener.open('http://www.baidu.com')

cookie.save(ignore_discard=True, ignore_expires=True)  # also keep session and expired cookies

Saving cookies, method 2: LWPCookieJar (libwww-perl format)

import http.cookiejar, urllib.request

filename = 'cookie.txt'

cookie = http.cookiejar.LWPCookieJar(filename)

handler = urllib.request.HTTPCookieProcessor(cookie)

opener = urllib.request.build_opener(handler)

response = opener.open('http://www.baidu.com')

cookie.save(ignore_discard=True, ignore_expires=True)  # also keep session and expired cookies
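The two jar classes write the same cookies in different text formats, and ignore_discard matters because session cookies (no expiry date) are marked to be discarded and are left out of the file by default. This offline sketch builds a cookie by hand with http.cookiejar.Cookie; the `session_cookie` helper and the BAIDUID/demo values are hypothetical, and files go to a temporary directory.

```python
import http.cookiejar
import os
import tempfile

def session_cookie(name, value, domain):
    # Hypothetical helper: a hand-built session cookie (no expiry, so discard=True).
    return http.cookiejar.Cookie(
        version=0, name=name, value=value,
        port=None, port_specified=False,
        domain=domain, domain_specified=False, domain_initial_dot=False,
        path='/', path_specified=True,
        secure=False, expires=None, discard=True,
        comment=None, comment_url=None, rest={},
    )

def read_text(path):
    with open(path) as f:
        return f.read()

tmpdir = tempfile.mkdtemp()
results = {}
for jar_class in (http.cookiejar.MozillaCookieJar, http.cookiejar.LWPCookieJar):
    filename = os.path.join(tmpdir, jar_class.__name__ + '.txt')
    jar = jar_class(filename)
    jar.set_cookie(session_cookie('BAIDUID', 'demo', 'www.baidu.com'))

    jar.save()  # default ignore_discard=False: the session cookie is skipped
    kept_by_default = 'BAIDUID' in read_text(filename)

    jar.save(ignore_discard=True, ignore_expires=True)
    text = read_text(filename)
    results[jar_class.__name__] = (text.splitlines()[0], kept_by_default, 'BAIDUID' in text)

for name, (first_line, by_default, with_flags) in results.items():
    print(f'{name}: first line {first_line!r}, saved by default={by_default}, with flags={with_flags}')
```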

Loading cookies

import http.cookiejar, urllib.request

# the file was saved in LWP format, so it must be loaded with LWPCookieJar

cookie = http.cookiejar.LWPCookieJar()

cookie.load('cookie.txt', ignore_discard=True, ignore_expires=True)

handler = urllib.request.HTTPCookieProcessor(cookie)

opener = urllib.request.build_opener(handler)

response = opener.open('http://www.baidu.com')

print(response.read().decode('utf-8'))
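A self-contained check of the save/load round trip, again with a hand-built session cookie (the helper, cookie name and value are hypothetical). It also shows that the loading class must match the saved format: LWPCookieJar rejects a Mozilla-format file with http.cookiejar.LoadError.

```python
import http.cookiejar
import os
import tempfile

def session_cookie(name, value, domain):
    # Hypothetical helper: a hand-built session cookie (no expiry, so discard=True).
    return http.cookiejar.Cookie(
        version=0, name=name, value=value,
        port=None, port_specified=False,
        domain=domain, domain_specified=False, domain_initial_dot=False,
        path='/', path_specified=True,
        secure=False, expires=None, discard=True,
        comment=None, comment_url=None, rest={},
    )

tmpdir = tempfile.mkdtemp()
lwp_file = os.path.join(tmpdir, 'cookie.txt')
moz_file = os.path.join(tmpdir, 'cookie_mozilla.txt')

saved = http.cookiejar.LWPCookieJar(lwp_file)
saved.set_cookie(session_cookie('BAIDUID', 'demo', 'www.baidu.com'))
saved.save(ignore_discard=True, ignore_expires=True)

# ignore_discard=True is needed on load too, or the session cookie is dropped.
loaded = http.cookiejar.LWPCookieJar()
loaded.load(lwp_file, ignore_discard=True, ignore_expires=True)
print([(c.name, c.value) for c in loaded])  # [('BAIDUID', 'demo')]

# A Mozilla-format file cannot be read by LWPCookieJar.
moz = http.cookiejar.MozillaCookieJar(moz_file)
moz.set_cookie(session_cookie('BAIDUID', 'demo', 'www.baidu.com'))
moz.save(ignore_discard=True, ignore_expires=True)
try:
    http.cookiejar.LWPCookieJar().load(moz_file, ignore_discard=True, ignore_expires=True)
    mismatch = 'loaded'
except http.cookiejar.LoadError:
    mismatch = 'LoadError'
print(mismatch)  # LoadError
```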

VI. Exception Handling

Example 1:

from urllib import request, error

try:

    response = request.urlopen('http://cuiqingcai.com/index.htm')

except error.URLError as e:

    print(e.reason)  # catch URL errors

Example 2:

from urllib import request, error

try:

    response = request.urlopen('http://cuiqingcai.com/index.htm')

except error.HTTPError as e:

    # HTTPError is a subclass of URLError, so it must be caught first

    print(e.reason, e.code, e.headers, sep='\n')

except error.URLError as e:

    print(e.reason)

else:

    print('Request Successfully')
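The two failure modes can be reproduced locally: a server that answers with an error status raises HTTPError, while no answer at all (here, a port nothing listens on) raises plain URLError. Because HTTPError subclasses URLError, the except clauses are ordered from specific to general. `NotFoundHandler` and `classify` are hypothetical names for this sketch.

```python
import socket
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

class NotFoundHandler(BaseHTTPRequestHandler):
    # Hypothetical handler: every path answers 404.
    def do_GET(self):
        self.send_error(404, 'Not Found')

    def log_message(self, *args):
        pass  # keep the console quiet

def classify(url):
    """Hypothetical helper: describe how the request ended."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=5) as response:
            return 'OK', response.status
    except urllib.error.HTTPError as e:
        # The server answered, but with an error status (4xx/5xx).
        return 'HTTPError', e.code
    except urllib.error.URLError as e:
        # No usable answer at all, e.g. connection refused or DNS failure.
        return 'URLError', type(e.reason).__name__

server = HTTPServer(('127.0.0.1', 0), NotFoundHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
not_found = classify(f'http://127.0.0.1:{server.server_port}/index.htm')
server.shutdown()
server.server_close()
print(not_found)  # ('HTTPError', 404)

# Grab a free port and close it again, so nothing is listening there.
probe = socket.socket()
probe.bind(('127.0.0.1', 0))
closed_port = probe.getsockname()[1]
probe.close()
refused = classify(f'http://127.0.0.1:{closed_port}/')
print(refused)
```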

7、URL解析

urlparse //url 拆分

urllib.parse.urlparse(urlstring,scheme=’’,allow_fragments=True)

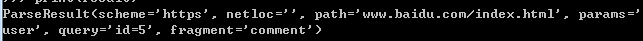

例子:

from urllib.parse import urlparse //url 拆分

result = urlparse(‘http://www.baidu.com/index.html;user?id=5#comment’)

print(type(result),result)

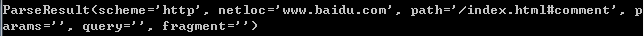

Result:

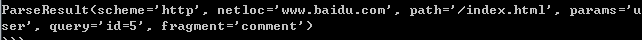
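The URL splits into six fields, scheme://netloc/path;params?query#fragment, so the example gives ParseResult(scheme='http', netloc='www.baidu.com', path='/index.html', params='user', query='id=5', fragment='comment'). Since ParseResult is a named tuple, each field can be read by name or by index, as this sketch shows.

```python
from urllib.parse import urlparse

result = urlparse('http://www.baidu.com/index.html;user?id=5#comment')

# ParseResult is a named tuple: access by field name or by index.
print(result.scheme, result[0])   # http http
print(result.netloc)              # www.baidu.com
print(result.path)                # /index.html
print(result.params)              # user
print(result.query)               # id=5
print(result.fragment)            # comment

# _replace() returns a modified copy; geturl() rebuilds the URL string.
print(result._replace(query='id=6').geturl())  # http://www.baidu.com/index.html;user?id=6#comment
```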

例子2:

from urllib.parse import urlparse //没有http

result = urlparse(‘www.baidu.com/index.html;user?id=5#comment’,scheme=‘https’)

print(result)

例子3:

from urllib.parse import urlparse

result = urlparse(‘http://www.baidu.com/index.html;user?id=5#comment’,scheme=‘https’)

print(result)

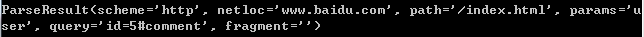

例子4:

from urllib.parse import urlparse

result = urlparse(‘http://www.baidu.com/index.html;user?id=5#comment’,allow_fragments=False)

print(result)

例子5:

from urllib.parse import urlparse

result = urlparse(‘http://www.baidu.com/index.html#comment’,allow_fragments=False)

print(result)
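What these variations do, in one runnable sketch: the scheme argument is only a default, a URL without '//' has no netloc, and with allow_fragments=False the '#...' part is absorbed by whichever component precedes it.

```python
from urllib.parse import urlparse

url = 'http://www.baidu.com/index.html;user?id=5#comment'

# No scheme in the URL: the default is used, and without '//'
# the host ends up in the path rather than in netloc.
r2 = urlparse('www.baidu.com/index.html;user?id=5#comment', scheme='https')
print(r2.scheme, repr(r2.netloc), r2.path)   # https '' www.baidu.com/index.html

# The URL already has a scheme, so scheme='https' is ignored.
r3 = urlparse(url, scheme='https')
print(r3.scheme)                             # http

# allow_fragments=False: '#comment' stays in the query.
r4 = urlparse(url, allow_fragments=False)
print(r4.query, repr(r4.fragment))           # id=5#comment ''

# With no query, '#comment' stays in the path instead.
r5 = urlparse('http://www.baidu.com/index.html#comment', allow_fragments=False)
print(r5.path, repr(r5.fragment))            # /index.html#comment ''
```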

VIII. URL Joining

urlunparse

Example:

from urllib.parse import urlunparse

data = ['http', 'www.baidu.com', 'index.html', 'user', 'a=6', 'comment']

print(urlunparse(data))

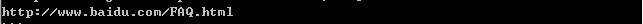
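urlunparse is the inverse of urlparse: it takes exactly six components in the order scheme, netloc, path, params, query, fragment, and adds the leading '/' to the path when a host is present. A short sketch:

```python
from urllib.parse import urlparse, urlunparse

data = ['http', 'www.baidu.com', 'index.html', 'user', 'a=6', 'comment']
joined = urlunparse(data)
print(joined)  # http://www.baidu.com/index.html;user?a=6#comment

# For a URL like this one, urlunparse undoes urlparse.
url = 'http://www.baidu.com/index.html;user?id=5#comment'
round_trip = urlunparse(urlparse(url))
print(round_trip == url)  # True

# The iterable must have exactly six items; five raises ValueError.
try:
    urlunparse(data[:5])
    five_ok = True
except ValueError:
    five_ok = False
print(five_ok)  # False
```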

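urljoin

from urllib.parse import urljoin

print(urljoin('http://www.baidu.com', 'FAQ.html'))

The second argument is resolved against the first (base) URL: components present in the second URL override those of the base, and a missing scheme, host, or path is filled in from the base.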
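A few cases that show the override rule; cuiqingcai.com is reused from the exception-handling examples purely as a second host.

```python
from urllib.parse import urljoin

base = 'http://www.baidu.com'

# A bare file name is resolved against the base host.
a = urljoin(base, 'FAQ.html')
# A full URL in the second argument replaces the base entirely.
b = urljoin(base, 'https://cuiqingcai.com/FAQ.html')
# Only a query and fragment: the base scheme and host are kept.
c = urljoin(base, '?category=2#comment')
# Relative paths follow the usual '..' rules.
d = urljoin('http://www.baidu.com/a/b/c.html', '../d.html')

print(a)  # http://www.baidu.com/FAQ.html
print(b)  # https://cuiqingcai.com/FAQ.html
print(c)  # http://www.baidu.com?category=2#comment
print(d)  # http://www.baidu.com/a/d.html
```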

urlencode

from urllib.parse import urlencode

params = {

    'name': 'gemey',

    'age': 22

}

base_url = 'http://www.baidu.com?'

url = base_url + urlencode(params)

print(url)

Output: http://www.baidu.com?name=gemey&age=22
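urlencode also percent-encodes non-ASCII values as UTF-8, and parse_qs goes the other way, turning a query string back into a dict whose values are lists of strings. A sketch; the search term 壁纸 ("wallpaper") is just a sample value.

```python
from urllib.parse import parse_qs, unquote, urlencode

params = {'name': 'gemey', 'age': 22}
query = urlencode(params)
print(query)    # name=gemey&age=22

# Decoding: every value comes back as a list of strings.
parsed = parse_qs(query)
print(parsed)   # {'name': ['gemey'], 'age': ['22']}

# Non-ASCII values are percent-encoded as UTF-8 bytes.
zh = urlencode({'wd': '壁纸'})
print(zh)                             # wd=%E5%A3%81%E7%BA%B8
print(unquote('%E5%A3%81%E7%BA%B8'))  # 壁纸
```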