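Lab environment: three freshly installed CentOS 7.6 systems.

How the Kubernetes components relate to each other:

1. A Kubernetes cluster consists of one master and multiple minions (nodes). The master exposes its services through an API and accepts requests from kubectl to schedule and manage the whole cluster; kubectl is the command-line management tool of the k8s platform.

2. A replication controller defines how many pods (or containers) should be running. If the number of pods running in the cluster falls short of the configured count, the replication controller schedules containers onto the minions to bring the pod count back to the desired value.

3. A service defines what is actually offered to clients; one service maps to multiple containers running in the backend.

4. Kubernetes is a management platform. kube-proxy on each minion holds the public IP that exposes the real service, so clients that use a service provided by Kubernetes connect directly to kube-proxy.

5. The pod is the basic unit in Kubernetes. A pod can consist of several containers that provide the same function, and these containers are deployed on the same minion. A minion is a machine (typically a virtual machine) that runs kubelet and the containers it manages; it receives instructions from the master to create pods or containers.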

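Building the Kubernetes container cluster management system

Platform layout:

Here master and etcd share the same machine.

Role IP address CPU Memory

master 192.168.10.111 4 cores 4 GB

etcd 192.168.10.111 4 cores 4 GB

node1 192.168.10.112 4 cores 4 GB

node2 192.168.10.113 4 cores 4 GB

Note: a full setup would normally use four machines; if memory is limited, master and etcd can run on the same machine, as they do here.

Configuring the Kubernetes yum repository

First add the yum repository for the k8s components on every node.

Online installation: the yum repositories that ship with CentOS can already install kubernetes.

To configure your own repository, create the following file:

[root@master ~]# vim /etc/yum.repos.d/CentOS-Base.repo #insert the following content, which uses the aliyun mirror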

[base]

name=CentOS-$releasever - Base

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/7.6.1804/os/x86_64/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

http://mirrors.aliyuncs.com/centos/RPM-GPG-KEY-CentOS-7

#released updates

[updates]

name=CentOS-$releasever - Updates

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/7.6.1804/updates/x86_64/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

http://mirrors.aliyuncs.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that may be useful

[extras]

name=CentOS-$releasever - Extras

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/7.6.1804/extras/x86_64/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

http://mirrors.aliyuncs.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that extend functionality of existing packages

[centosplus]

name=CentOS-$releasever - Plus

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/7.6.1804/centosplus/x86_64/

gpgcheck=1

enabled=0

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

http://mirrors.aliyuncs.com/centos/RPM-GPG-KEY-CentOS-7

#contrib - packages by Centos Users

[contrib]

name=CentOS-$releasever - Contrib

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/7.6.1804/contrib/x86_64/

gpgcheck=1

enabled=0

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

http://mirrors.aliyuncs.com/centos/RPM-GPG-KEY-CentOS-7

[epel]

name=Extra Packages for Enterprise Linux 7 - $basearch

baseurl=http://mirrors.aliyun.com/epel/7/x86_64/

failovermethod=priority

enabled=1

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

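Run on every node:

yum clean all && yum makecache

There are other ways to do this; only the one I used is covered here.

Installing the Kubernetes components on each node

Set up master as both the etcd node and the master node

[root@master ~]# yum install -y kubernetes etcd flannel ntp

Notes on the packages:

1. Flannel gives Docker a configurable virtual overlay network so that containers on different physical hosts can reach each other directly. It runs an agent, flanneld, on every host.

2. flanneld allocates subnet leases out of a preconfigured address space. Flannel stores its network configuration in etcd.

3. ntp synchronizes the time on all nodes of the container cloud platform; the clocks of all nodes must agree.

4. The kubernetes package contains both the server-side and the client-side software.

5. The etcd package provides the etcd service.

node1:

[root@node1 ~]# yum install -y kubernetes flannel ntp

node2:

[root@node2 ~]# yum install -y kubernetes flannel ntp #install the same packages on every node

Tuning the Linux system: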

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config &> /dev/null

setenforce 0

systemctl stop firewalld &> /dev/null

systemctl disable firewalld &> /dev/null

iptables -F

yum install -y iptables-services

service iptables save

systemctl stop NetworkManager &> /dev/null

systemctl disable NetworkManager &> /dev/null

echo 1 > /proc/sys/net/ipv4/ip_forward
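
The tuning steps above can be verified before moving on. The following is a minimal sketch and not part of the original procedure: check_prereqs is a hypothetical helper name, and both file paths are passed as arguments so the function can also be run against test files. On a real node they would be /etc/selinux/config and /proc/sys/net/ipv4/ip_forward.

```shell
# check_prereqs SELINUX_CONFIG IP_FORWARD_FILE
# Returns 0 when SELinux is disabled in the config file and IP forwarding is on.
check_prereqs() {
    selinux_cfg="$1"
    ip_forward_file="$2"
    status=0
    if ! grep -q '^SELINUX=disabled' "$selinux_cfg"; then
        echo "SELinux is not set to disabled in $selinux_cfg"
        status=1
    fi
    if [ "$(cat "$ip_forward_file")" != "1" ]; then
        echo "IP forwarding is not enabled according to $ip_forward_file"
        status=1
    fi
    return "$status"
}

# Usage on a node (not executed here):
#   check_prereqs /etc/selinux/config /proc/sys/net/ipv4/ip_forward
```

Keep in mind that writing 1 to /proc/sys/net/ipv4/ip_forward only lasts until the next reboot; a net.ipv4.ip_forward = 1 entry in a file under /etc/sysctl.d/ is the usual way to make it permanent.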

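Installation is now complete; the next step is configuring kubernetes.

Configuring the etcd and master node

Set the hostname, IP address, and hosts file on every node

1. Set the hostnames:

[root@master ~]# echo master > /etc/hostname #set the hostname

[root@etcd ~]# echo etcd > /etc/hostname #not needed here because there are only three machines; run it only in a four-machine setup

[root@node1 ~]# echo node1 > /etc/hostname

[root@node2 ~]# echo node2 > /etc/hostname

2. Edit the hosts file: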

[root@master ~]# vim /etc/hosts

192.168.10.111 master

192.168.10.111 etcd

192.168.10.112 node1

192.168.10.113 node2
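
If the node list changes, the hosts entries above can be generated instead of typed by hand. This is only an illustrative sketch: render_hosts is a hypothetical helper, and the "ip:name" argument format is an assumption made for this example.

```shell
# render_hosts "ip:name" ...
# Prints one hosts-file line per argument.
render_hosts() {
    for pair in "$@"; do
        # ${pair%%:*} is the part before the first colon, ${pair#*:} the part after it
        printf '%s %s\n' "${pair%%:*}" "${pair#*:}"
    done
}

# Usage on master (not executed here):
#   render_hosts 192.168.10.111:master 192.168.10.111:etcd \
#       192.168.10.112:node1 192.168.10.113:node2 >> /etc/hosts
```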

[root@master ~]# scp /etc/hosts 192.168.10.112:/etc/

The authenticity of host '192.168.10.112 (192.168.10.112)' can't be established.

ECDSA key fingerprint is SHA256:PKnxewvWHj24RTEPD8kvS0U1PsEbEF+5LqnnJZlG/Xk.

ECDSA key fingerprint is MD5:66:fd:81:28:e2:2d:56:a4:3b:16:fe:67:42:2c:b0:6a.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.10.112' (ECDSA) to the list of known hosts.

root@192.168.10.112's password:

hosts 100% 243 81.1KB/s 00:00

[root@master ~]# scp /etc/hosts 192.168.10.113:/etc/

The authenticity of host '192.168.10.113 (192.168.10.113)' can't be established.

ECDSA key fingerprint is SHA256:PKnxewvWHj24RTEPD8kvS0U1PsEbEF+5LqnnJZlG/Xk.

ECDSA key fingerprint is MD5:66:fd:81:28:e2:2d:56:a4:3b:16:fe:67:42:2c:b0:6a.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.10.113' (ECDSA) to the list of known hosts.

root@192.168.10.113's password:

hosts 100% 243 81.6KB/s 00:00

Configuring the etcd server on master

[root@master ~]# vim /etc/etcd/etcd.conf #edit the existing file

Change line 9: ETCD_NAME=default

To: ETCD_NAME="etcd"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

Change line 6: ETCD_LISTEN_CLIENT_URLS="http://localhost:2379"

To: ETCD_LISTEN_CLIENT_URLS="http://localhost:2379,http://192.168.10.111:2379"

Change line 21: ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379"

To: ETCD_ADVERTISE_CLIENT_URLS="http://192.168.10.111:2379"
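
The same kind of KEY="value" edit recurs in every configuration file in this walkthrough, so it can be scripted rather than done in vim. The following is a minimal sketch under stated assumptions: set_conf_value is a hypothetical helper, it relies on GNU sed (as shipped with CentOS), and it assumes the value contains neither | nor &.

```shell
# set_conf_value FILE KEY VALUE
# Replaces an existing KEY=... line (commented out or not) with KEY="VALUE",
# or appends the line when the key is missing.
set_conf_value() {
    local file="$1" key="$2" value="$3"
    if grep -q "^#\{0,1\}${key}=" "$file"; then
        sed -i "s|^#\{0,1\}${key}=.*|${key}=\"${value}\"|" "$file"
    else
        printf '%s="%s"\n' "$key" "$value" >> "$file"
    fi
}

# Usage on master (not executed here):
#   set_conf_value /etc/etcd/etcd.conf ETCD_NAME etcd
#   set_conf_value /etc/etcd/etcd.conf ETCD_ADVERTISE_CLIENT_URLS http://192.168.10.111:2379
```

Editing by key rather than by line number also avoids surprises when a package update shifts the lines in the shipped file.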

What the settings in /etc/etcd/etcd.conf mean:

ETCD_NAME="etcd"

Name of the etcd node; the default name is default.

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

Directory in which etcd stores its data.

ETCD_LISTEN_CLIENT_URLS="http://localhost:2379,http://192.168.10.111:2379"

Addresses on which etcd listens for client requests, normally on port 2379; 0.0.0.0 makes it listen on all interfaces.

ETCD_ARGS=""

Any extra arguments you want to pass; all etcd options are listed by etcd -h.

Start the service

[root@master ~]# systemctl start etcd

[root@master ~]# systemctl status etcd

[root@master ~]# systemctl enable etcd

etcd communicates on port 2379.

Check:

[root@master ~]# netstat -antup | grep 2379

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 2575/etcd

tcp 0 0 192.168.10.111:2379 0.0.0.0:* LISTEN 2575/etcd

Check the etcd cluster member list; there is only one member here.

[root@master ~]# etcdctl member list

8e9e05c52164694d: name=etcd peerURLs=http://localhost:2380 clientURLs=http://192.168.10.111:2379 isLeader=true

The etcd node is now configured.

Configuring the master server on master

Edit the Kubernetes configuration file

[root@master ~]# vim /etc/kubernetes/config

Change line 22: KUBE_MASTER="--master=http://127.0.0.1:8080"

To: KUBE_MASTER="--master=http://192.168.10.111:8080"

#the master listens on port 8080 at IP 192.168.10.111

What the settings in /etc/kubernetes/config mean:

KUBE_LOGTOSTDERR="--logtostderr=true" #whether logs go to stderr (true) or to a file (false)

KUBE_LOG_LEVEL="--v=0" #log verbosity level

KUBE_ALLOW_PRIV="--allow_privileged=false" #whether privileged containers may run; false means they are not allowed

Edit the apiserver configuration file

[root@master ~]# vim /etc/kubernetes/apiserver

Change line 8: KUBE_API_ADDRESS="--insecure-bind-address=127.0.0.1"

To: KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

Change line 17: KUBE_ETCD_SERVERS="--etcd-servers=http://127.0.0.1:2379"

To: KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.10.111:2379"

Change line 23:

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

To: KUBE_ADMISSION_CONTROL="--admission-control=AlwaysAdmit" #this value must be set correctly

What the settings in /etc/kubernetes/apiserver mean:

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0" #interface to listen on: 127.0.0.1 listens on localhost only, 0.0.0.0 listens on all interfaces; 0.0.0.0 is used here

KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.10.111:2379" #address of the etcd service, which was started earlier

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16" #range of virtual IPs that Kubernetes can hand out to services; every service gets its cluster IP from this range (pod IPs come from the flannel network configured later)

KUBE_ADMISSION_CONTROL="--admission-control=AlwaysAdmit" #no admission restrictions: every request that reaches the apiserver is admitted

Aside:

Overview of admission control: an admission controller is essentially a piece of code. A request to the Kubernetes API first passes authentication and authorization, then goes through admission control, and only then is the operation applied to the target object.

Edit the kube-controller-manager configuration file. The defaults should be sufficient:

[root@master ~]# cat /etc/kubernetes/controller-manager #no changes needed

Edit the kube-scheduler configuration file

[root@master ~]# vim /etc/kubernetes/scheduler

Change line 7: KUBE_SCHEDULER_ARGS=""

To: KUBE_SCHEDULER_ARGS="--address=0.0.0.0"

#makes the scheduler listen on 0.0.0.0, that is, on all interfaces

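Configuring etcd with the IP range for Docker in the container cloud (run on master)

etcd is a non-relational key-value store. How do you add and delete data in it?

1. Aside: using the etcdctl command

etcdctl is a command-line client for the etcd store. It offers a set of concise commands that let users work with etcd directly.

etcdctl commands fall into two broad groups: database operations and non-database operations.

Database operations cover the full CRUD life cycle of keys and directories.

Note: CRUD stands for Create, Read, Update, Delete.

2. etcd organizes keys in a hierarchical namespace, similar to directories in a file system. A key can be a bare name such as testkey, in which case it is stored under the root directory /, or it can include a directory path such as cluster1/node2/testkey, in which case the corresponding directories are created.

set: set the value of a key

For example: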

[root@master ~]# etcdctl set weme "legend"

legend

[root@master ~]# etcdctl set /testdir/testkey "hello world"

hello world

get: read the value of a key

[root@master ~]# etcdctl get /testdir/testkey

hello world

update: change the value of a key that already exists

[root@master ~]# etcdctl update /testdir/testkey legend666

legend666

[root@master ~]# etcdctl get /testdir/testkey

legend666

rm: delete a key

[root@master ~]# etcdctl rm /testdir/testkey

PrevNode.Value: legend666

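#How etcdctl mk differs from etcdctl set:

#etcdctl mk creates the key only if it does not exist yet; if the key already exists, it reports an error and creates nothing.

#etcdctl set writes the value whether or not the key exists: it creates the key if it is missing and overwrites the value otherwise.

For example: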

[root@master ~]# etcdctl mk /testdir/testkey "Hello World"

Hello World

[root@master ~]# etcdctl mk /testdir/testkey "abc"

Error: 105: Key already exists (/testdir/testkey) [8]

etcdctl mkdir #create a directory

[root@master ~]# etcdctl mkdir testdir1

[root@master ~]# etcdctl ls

/weme

/testdir

/testdir1

[root@master ~]# etcdctl ls /testdir

/testdir/testkey

3. Non-database operations

etcdctl member takes the subcommands list, add, and remove, which list, add, and remove etcd instances in the etcd cluster.

For example, after starting an etcd instance locally, you can inspect it with:

[root@master ~]# etcdctl member list

8e9e05c52164694d: name=etcd peerURLs=http://localhost:2380 clientURLs=http://192.168.10.111:2379 isLeader=true

4. Configure the etcd network

[root@master ~]# etcdctl mkdir /k8s/network #create the directory /k8s/network to hold the flannel network information

[root@master ~]# etcdctl set /k8s/network/config '{"Network":"10.255.0.0/16"}'

{"Network":"10.255.0.0/16"}

#assigns the string '{"Network": "10.255.0.0/16"}' to /k8s/network/config

[root@master ~]# etcdctl get /k8s/network/config #check the value

{"Network":"10.255.0.0/16"}

Note: before flannel starts, a network configuration record must be added to etcd. flannel uses it to allocate the virtual IP range for Docker on each host, which determines the Docker IP addresses on the minions.

Because flannel overrides the address on docker0, the flannel service has to start before the docker service. If docker is already running, stop docker first, start flannel, and then start docker again.
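
To get a feel for how many hosts the 10.255.0.0/16 network can serve, the arithmetic can be written down as a small sketch. It assumes that flannel leases one /24 subnet per host, which matches the /24 leases that appear later in this walkthrough; subnet_count is a hypothetical helper name.

```shell
# subnet_count NETWORK_PREFIX [SUBNET_LEN]
# Number of per-host subnets of length SUBNET_LEN (default 24) that fit
# into a network with the given prefix length: 2^(SUBNET_LEN - NETWORK_PREFIX).
subnet_count() {
    network_prefix="$1"
    subnet_len="${2:-24}"
    echo $(( 1 << (subnet_len - network_prefix) ))
}

subnet_count 16 24   # /24 leases available inside a /16 network
```

With a /16 network and /24 leases this gives 2^8 = 256 subnets, far more than the three hosts used here.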

5. The flannel startup sequence

(1) Read the value of /k8s/network/config from etcd;

(2) Carve out a subnet and register it in etcd;

(3) Write the subnet information to /run/flannel/subnet.env.

6. Configure the flannel service

[root@master ~]# vim /etc/sysconfig/flanneld

Change line 4: FLANNEL_ETCD_ENDPOINTS="http://127.0.0.1:2379"

To: FLANNEL_ETCD_ENDPOINTS="http://192.168.10.111:2379"

Change line 8: FLANNEL_ETCD_PREFIX="/atomic.io/network"

To: FLANNEL_ETCD_PREFIX="/k8s/network"

#/k8s/network matches the network directory created in etcd above

Change line 11: #FLANNEL_OPTIONS=""

To: FLANNEL_OPTIONS="--iface=ens33" #physical NIC used for communication

[root@master ~]# systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; disabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/docker.service.d

└─flannel.conf

Active: inactive (dead)

Docs: http://docs.docker.com

[root@master ~]# systemctl restart flanneld

[root@master ~]# systemctl enable flanneld

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

Created symlink from /etc/systemd/system/docker.service.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

[root@master ~]# systemctl status flanneld

● flanneld.service - Flanneld overlay address etcd agent

Loaded: loaded (/usr/lib/systemd/system/flanneld.service; enabled; vendor preset: disabled)

Active: active (running) since 五 2022-12-02 16:51:59 CST; 19s ago

Main PID: 13539 (flanneld)

CGroup: /system.slice/flanneld.service

└─13539 /usr/bin/flanneld -etcd-endpoints=http://192.168.10.111:2379 -etcd-prefix=/k8...

12月 02 16:51:59 master systemd[1]: Starting Flanneld overlay address etcd agent...

12月 02 16:51:59 master flanneld-start[13539]: I1202 16:51:59.122592 13539 main.go:132] In...ers

12月 02 16:51:59 master flanneld-start[13539]: I1202 16:51:59.126130 13539 manager.go:149]...111

12月 02 16:51:59 master flanneld-start[13539]: I1202 16:51:59.126276 13539 manager.go:166]...11)

12月 02 16:51:59 master flanneld-start[13539]: I1202 16:51:59.135228 13539 local_manager.g...ing

12月 02 16:51:59 master flanneld-start[13539]: I1202 16:51:59.140744 13539 manager.go:250].../24

12月 02 16:51:59 master flanneld-start[13539]: I1202 16:51:59.142097 13539 network.go:98] ...ses

12月 02 16:51:59 master systemd[1]: Started Flanneld overlay address etcd agent.

Hint: Some lines were ellipsized, use -l to show in full.

Check:

[root@master ~]# ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.10.111 netmask 255.255.255.0 broadcast 192.168.10.255

inet6 fe80::161:8036:f582:e5e4 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:c8:91:b5 txqueuelen 1000 (Ethernet)

RX packets 161152 bytes 209811259 (200.0 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 40018 bytes 4081455 (3.8 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472

inet 10.255.2.0 netmask 255.255.0.0 destination 10.255.2.0

inet6 fe80::acf3:6444:d21d:14cc prefixlen 64 scopeid 0x20<link>

unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3 bytes 144 (144.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1 (Local Loopback)

RX packets 7942 bytes 1507168 (1.4 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 7942 bytes 1507168 (1.4 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

7. Inspect the subnet information in /run/flannel/subnet.env. The file is created only once flannel is up; before that it does not exist.

[root@master ~]# cat /run/flannel/subnet.env

FLANNEL_NETWORK=10.255.0.0/16

FLANNEL_SUBNET=10.255.2.1/24

FLANNEL_MTU=1472

FLANNEL_IPMASQ=false

Afterwards a script converts subnet.env into a Docker environment file, /run/flannel/docker.

The address of flannel0 is determined by the FLANNEL_SUBNET value in /run/flannel/subnet.env.

[root@master ~]# cat /run/flannel/docker

DOCKER_OPT_BIP="--bip=10.255.2.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=true"

DOCKER_OPT_MTU="--mtu=1472"

DOCKER_NETWORK_OPTIONS=" --bip=10.255.2.1/24 --ip-masq=true --mtu=1472"
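
The conversion from subnet.env to Docker options can be sketched as follows. This is a simplified illustration written for this walkthrough, not the script that the flannel package ships, and the function name is hypothetical. It assumes, consistent with the two files shown above, that Docker's --ip-masq is the inverse of FLANNEL_IPMASQ.

```shell
# docker_opts_from_subnet_env FILE
# Prints the Docker network options derived from a flannel subnet.env file.
docker_opts_from_subnet_env() {
    env_file="$1"
    (
        # Load FLANNEL_SUBNET, FLANNEL_MTU and FLANNEL_IPMASQ in a subshell
        # so the caller's environment is left untouched.
        . "$env_file"
        if [ "$FLANNEL_IPMASQ" = "true" ]; then
            ipmasq=false   # flannel already masquerades, so Docker should not
        else
            ipmasq=true
        fi
        echo "--bip=${FLANNEL_SUBNET} --ip-masq=${ipmasq} --mtu=${FLANNEL_MTU}"
    )
}

# Usage on a host where flanneld is running (not executed here):
#   docker_opts_from_subnet_env /run/flannel/subnet.env
```

The point of the sketch is the mapping itself: docker0 takes FLANNEL_SUBNET as its bridge IP, which is why every host ends up with a docker0 address inside its own flannel lease.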

Start the four services on master

[root@master ~]# systemctl restart kube-apiserver kube-controller-manager kube-scheduler flanneld

[root@master ~]# systemctl status kube-apiserver kube-controller-manager kube-scheduler flanneld

[root@master ~]# systemctl enable kube-apiserver kube-controller-manager kube-scheduler flanneld

The etcd and master node is now configured.

Configuring the node1 network with flannel

Configure the flanneld service:

[root@node1 ~]# vim /etc/sysconfig/flanneld

Change line 4: FLANNEL_ETCD_ENDPOINTS="http://127.0.0.1:2379"

To: FLANNEL_ETCD_ENDPOINTS="http://192.168.10.111:2379"

Change line 8: FLANNEL_ETCD_PREFIX="/atomic.io/network"

To: FLANNEL_ETCD_PREFIX="/k8s/network"

#note that /k8s/network matches the network directory created in etcd above

Change line 11: #FLANNEL_OPTIONS=""

To: FLANNEL_OPTIONS="--iface=ens33" #physical NIC used for communication

Configure the master address and kube-proxy on node1

1. Configure the master address on node1

[root@node1 ~]# vim /etc/kubernetes/config

Change line 22: KUBE_MASTER="--master=http://127.0.0.1:8080"

To: KUBE_MASTER="--master=http://192.168.10.111:8080"

2. kube-proxy is mainly responsible for implementing services; specifically, it implements access from pods to services inside the cluster.

[root@node1 ~]# grep -v '^#' /etc/kubernetes/proxy

KUBE_PROXY_ARGS=""

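#no changes needed; by default it listens on all IPs

Note: if a service fails to start, follow the log live with tail -f /var/log/messages.

Configuring kubelet on node1

kubelet runs on the minion nodes. It manages pods, the containers inside them, and the containers' images and volumes.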

[root@node1 ~]# vim /etc/kubernetes/kubelet

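Change line 5: KUBELET_ADDRESS="--address=127.0.0.1"

To: KUBELET_ADDRESS="--address=0.0.0.0" #the default listens on 127.0.0.1 only; change it to 0.0.0.0

Later on, kubectl has to reach the kubelet service remotely to inspect pods and the containers inside them; if kubelet listens on 127.0.0.1 only, remote connections to it are impossible.

Change line 11: KUBELET_HOSTNAME="--hostname-override=127.0.0.1"

To: KUBELET_HOSTNAME="--hostname-override=node1"

#hostname of the minion; set it to this machine's hostname so the node is easy to identify

Change line 14: KUBELET_API_SERVER="--api-servers=http://127.0.0.1:8080"

To: KUBELET_API_SERVER="--api-servers=http://192.168.10.111:8080" #address of the apiserver

Aside: what line 17 means:

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

KUBELET_POD_INFRA_CONTAINER sets the image of the pod infrastructure container. This is a base container, and one of them is started whenever a pod starts. If the image is not available locally, kubelet downloads it from the Internet. The image originally lived on Google's registry, which cannot be reached from mainland China because of the GFW, so pods failed to start there. The Kubernetes packages used here point this setting at Red Hat's registry instead. You can also push the image to your own registry in advance and use this setting to point at your own copy.

Note: https://access.redhat.com/containers/ is Red Hat's container download site.

Start the services on node1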

[root@node1 ~]# systemctl restart flanneld kube-proxy kubelet docker

[root@node1 ~]# systemctl enable flanneld kube-proxy kubelet docker

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

Created symlink from /etc/systemd/system/docker.service.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@node1 ~]# systemctl status flanneld kube-proxy kubelet docker

● flanneld.service - Flanneld overlay address etcd agent

Loaded: loaded (/usr/lib/systemd/system/flanneld.service; enabled; vendor preset: disabled)

Active: active (running) since 五 2022-12-02 17:22:48 CST; 22s ago

Main PID: 13095 (flanneld)

CGroup: /system.slice/flanneld.service

└─13095 /usr/bin/flanneld -etcd-endpoints=http://192.168.10.111:2379 -etcd-prefix=/k8...

12月 02 17:22:48 node1 systemd[1]: Starting Flanneld overlay address etcd agent...

12月 02 17:22:48 node1 flanneld-start[13095]: I1202 17:22:48.894736 13095 main.go:132] Ins...ers

12月 02 17:22:48 node1 flanneld-start[13095]: I1202 17:22:48.896722 13095 manager.go:149] ...112

12月 02 17:22:48 node1 flanneld-start[13095]: I1202 17:22:48.896762 13095 manager.go:166] ...12)

12月 02 17:22:48 node1 flanneld-start[13095]: I1202 17:22:48.901691 13095 local_manager.go...5.0

12月 02 17:22:48 node1 flanneld-start[13095]: I1202 17:22:48.963494 13095 manager.go:250] .../24

12月 02 17:22:48 node1 flanneld-start[13095]: I1202 17:22:48.964374 13095 network.go:98] W...ses

12月 02 17:22:48 node1 flanneld-start[13095]: I1202 17:22:48.969156 13095 network.go:191] .../24

12月 02 17:22:48 node1 systemd[1]: Started Flanneld overlay address etcd agent.

● kube-proxy.service - Kubernetes Kube-Proxy Server

Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)

Active: active (running) since 五 2022-12-02 17:22:48 CST; 22s ago

Docs: https://github.com/GoogleCloudPlatform/kubernetes

Main PID: 13102 (kube-proxy)

CGroup: /system.slice/kube-proxy.service

└─13102 /usr/bin/kube-proxy --logtostderr=true --v=0 --master=http://192.168.10.111:8...

12月 02 17:22:49 node1 kube-proxy[13102]: E1202 17:22:49.122526 13102 server.go:421] Can't...und

12月 02 17:22:49 node1 kube-proxy[13102]: I1202 17:22:49.124223 13102 server.go:215] Using...er.

12月 02 17:22:49 node1 kube-proxy[13102]: W1202 17:22:49.125856 13102 server.go:468] Faile...und

12月 02 17:22:49 node1 kube-proxy[13102]: W1202 17:22:49.125917 13102 proxier.go:248] inva...eIP

12月 02 17:22:49 node1 kube-proxy[13102]: W1202 17:22:49.125926 13102 proxier.go:253] clus...fic

12月 02 17:22:49 node1 kube-proxy[13102]: I1202 17:22:49.125954 13102 server.go:227] Teari...es.

12月 02 17:22:49 node1 kube-proxy[13102]: I1202 17:22:49.187634 13102 conntrack.go:81] Set...072

12月 02 17:22:49 node1 kube-proxy[13102]: I1202 17:22:49.188190 13102 conntrack.go:66] Set...768

12月 02 17:22:49 node1 kube-proxy[13102]: I1202 17:22:49.188344 13102 conntrack.go:81] Set...400

12月 02 17:22:49 node1 kube-proxy[13102]: I1202 17:22:49.188377 13102 conntrack.go:81] Set...600

● kubelet.service - Kubernetes Kubelet Server

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Active: active (running) since 五 2022-12-02 17:22:50 CST; 21s ago

Docs: https://github.com/GoogleCloudPlatform/kubernetes

Main PID: 13316 (kubelet)

CGroup: /system.slice/kubelet.service

├─13316 /usr/bin/kubelet --logtostderr=true --v=0 --api-servers=http://192.168.10.111...

└─13365 journalctl -k -f

12月 02 17:22:51 node1 kubelet[13316]: W1202 17:22:51.353771 13316 manager.go:247] Registr...sed

12月 02 17:22:51 node1 kubelet[13316]: I1202 17:22:51.353782 13316 factory.go:54] Register...ory

12月 02 17:22:51 node1 kubelet[13316]: I1202 17:22:51.354066 13316 factory.go:86] Register...ory

12月 02 17:22:51 node1 kubelet[13316]: I1202 17:22:51.354226 13316 manager.go:1106] Starte...ger

12月 02 17:22:51 node1 kubelet[13316]: I1202 17:22:51.355822 13316 oomparser.go:185] oompa...emd

12月 02 17:22:51 node1 kubelet[13316]: I1202 17:22:51.361141 13316 manager.go:288] Startin...ers

12月 02 17:22:51 node1 kubelet[13316]: I1202 17:22:51.411388 13316 manager.go:293] Recover...ted

12月 02 17:22:51 node1 kubelet[13316]: I1202 17:22:51.439701 13316 kubelet_node_status.go:...ach

12月 02 17:22:51 node1 kubelet[13316]: I1202 17:22:51.441492 13316 kubelet_node_status.go:...de1

12月 02 17:22:51 node1 kubelet[13316]: I1202 17:22:51.446394 13316 kubelet_node_status.go:...de1

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/docker.service.d

└─flannel.conf

Active: active (running) since 五 2022-12-02 17:22:50 CST; 21s ago

Docs: http://docs.docker.com

Main PID: 13177 (dockerd-current)

CGroup: /system.slice/docker.service

├─13177 /usr/bin/dockerd-current --add-runtime docker-runc=/usr/libexec/docker/docker...

└─13198 /usr/bin/docker-containerd-current -l unix:///var/run/docker/libcontainerd/do...

12月 02 17:22:49 node1 dockerd-current[13177]: time="2022-12-02T17:22:49.180095532+08:00" le...nd"

12月 02 17:22:49 node1 dockerd-current[13177]: time="2022-12-02T17:22:49.182485905+08:00" le...98"

12月 02 17:22:50 node1 dockerd-current[13177]: time="2022-12-02T17:22:50.375073747+08:00" le...ds"

12月 02 17:22:50 node1 dockerd-current[13177]: time="2022-12-02T17:22:50.376745887+08:00" le...t."

12月 02 17:22:50 node1 dockerd-current[13177]: time="2022-12-02T17:22:50.417794287+08:00" le...se"

12月 02 17:22:50 node1 dockerd-current[13177]: time="2022-12-02T17:22:50.505054728+08:00" le...e."

12月 02 17:22:50 node1 dockerd-current[13177]: time="2022-12-02T17:22:50.538967699+08:00" le...on"

12月 02 17:22:50 node1 dockerd-current[13177]: time="2022-12-02T17:22:50.539095482+08:00" le...3.1

12月 02 17:22:50 node1 dockerd-current[13177]: time="2022-12-02T17:22:50.547110177+08:00" le...ck"

12月 02 17:22:50 node1 systemd[1]: Started Docker Application Container Engine.

Hint: Some lines were ellipsized, use -l to show in full.

[root@node1 ~]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 10.255.79.1 netmask 255.255.255.0 broadcast 0.0.0.0

ether 02:42:00:50:de:03 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.10.112 netmask 255.255.255.0 broadcast 192.168.10.255

inet6 fe80::161:8036:f582:e5e4 prefixlen 64 scopeid 0x20<link>

inet6 fe80::4723:2048:b65b:3121 prefixlen 64 scopeid 0x20<link>

ether 00:50:56:24:1c:ae txqueuelen 1000 (Ethernet)

RX packets 152266 bytes 198896270 (189.6 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 34100 bytes 2543039 (2.4 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472

inet 10.255.79.0 netmask 255.255.0.0 destination 10.255.79.0

inet6 fe80::ec84:2291:26be:ccc9 prefixlen 64 scopeid 0x20<link>

unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3 bytes 144 (144.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1 (Local Loopback)

RX packets 76 bytes 5952 (5.8 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 76 bytes 5952 (5.8 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@node1 ~]# netstat -antup | grep proxy

tcp 0 0 127.0.0.1:10249 0.0.0.0:* LISTEN 13102/kube-proxy

tcp 0 0 192.168.10.112:56042 192.168.10.111:8080 ESTABLISHED 13102/kube-proxy

tcp 0 0 192.168.10.112:56046 192.168.10.111:8080 ESTABLISHED 13102/kube-proxy

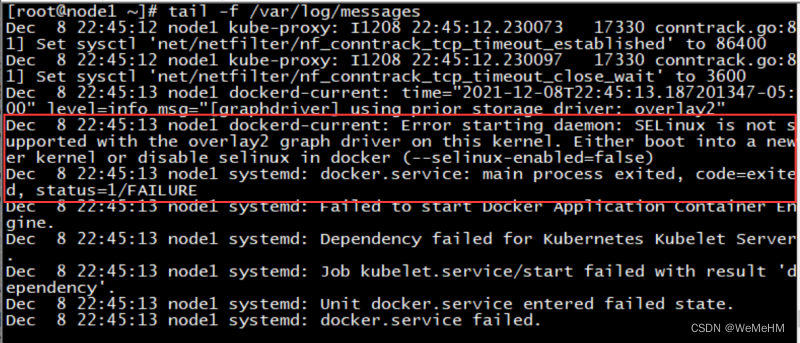

You may run into the following error:

Dec 8 22:49:50 node1 dockerd-current: Error starting daemon: SELinux is not supported with the overlay2 graph driver on this kernel. Either boot into a newer kernel or disable selinux in docker (--selinux-enabled=false)

Dec 8 22:49:50 node1 systemd: docker.service: main process exited, code=exited, status=1/FAILURE

Error starting daemon: SELinux is not supported with the overlay2 graph driver

Dec 08 22:49:50 node1 systemd[1]: docker.service: main process exited, code=exited, status=1/FAILURE

Dec 08 22:49:50 node1 systemd[1]: Failed to start Docker Application Container Engine.

-- Subject: Unit docker.service has failed

Fix:

[root@node1 ~]# vim /etc/sysconfig/docker

Comment out the following line:

#OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false'

[root@node1 ~]# systemctl restart docker

[root@node1 ~]# systemctl restart flanneld kube-proxy kubelet docker

Alternatively, install a newer kernel and reboot:

yum install -y kernel

reboot

Configuring the node2 network with flannel

The procedure is the same as for node1.

[root@node1 ~]# scp /etc/sysconfig/flanneld 192.168.10.113:/etc/sysconfig/

The authenticity of host '192.168.10.113 (192.168.10.113)' can't be established.

ECDSA key fingerprint is SHA256:PKnxewvWHj24RTEPD8kvS0U1PsEbEF+5LqnnJZlG/Xk.

ECDSA key fingerprint is MD5:66:fd:81:28:e2:2d:56:a4:3b:16:fe:67:42:2c:b0:6a.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.10.113' (ECDSA) to the list of known hosts.

root@192.168.10.113's password:

flanneld 100% 371 94.0KB/s 00:00

#Check:

[root@node2 ~]# grep -v '^#' /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://192.168.10.111:2379"

FLANNEL_ETCD_PREFIX="/k8s/network"

FLANNEL_OPTIONS="--iface=ens33"

[root@node2 ~]# systemctl start flanneld

[root@node2 ~]# ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.10.113 netmask 255.255.255.0 broadcast 192.168.10.255

inet6 fe80::161:8036:f582:e5e4 prefixlen 64 scopeid 0x20<link>

inet6 fe80::4187:adb5:434b:c4c8 prefixlen 64 scopeid 0x20<link>

inet6 fe80::4723:2048:b65b:3121 prefixlen 64 scopeid 0x20<link>

ether 00:50:56:2e:5c:3c txqueuelen 1000 (Ethernet)

RX packets 152483 bytes 198741495 (189.5 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 38098 bytes 2661094 (2.5 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472

inet 10.255.96.0 netmask 255.255.0.0 destination 10.255.96.0

inet6 fe80::8ae9:ee24:d40e:4806 prefixlen 64 scopeid 0x20<link>

unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2 bytes 96 (96.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1 (Local Loopback)

RX packets 72 bytes 5712 (5.5 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 72 bytes 5712 (5.5 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Configure the master address and kube-proxy on node2

[root@node1 ~]# scp /etc/kubernetes/config 192.168.10.113:/etc/kubernetes/

root@192.168.10.113's password:

config 100% 660 263.7KB/s 00:00

[root@node1 ~]# scp /etc/kubernetes/proxy 192.168.10.113:/etc/kubernetes/

root@192.168.10.113's password:

proxy 100% 103 33.9KB/s 00:00

[root@node2 ~]# systemctl start kube-proxy

[root@node2 ~]# systemctl enable kube-proxy

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

[root@node2 ~]# systemctl status kube-proxy

● kube-proxy.service - Kubernetes Kube-Proxy Server

Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)

Active: active (running) since 五 2022-12-02 17:32:18 CST; 21s ago

Docs: https://github.com/GoogleCloudPlatform/kubernetes

Main PID: 13156 (kube-proxy)

CGroup: /system.slice/kube-proxy.service

└─13156 /usr/bin/kube-proxy --logtostderr=true --v=0 --master=http://192.168.10.111:8...

12月 02 17:32:19 node2 kube-proxy[13156]: E1202 17:32:19.211578 13156 server.go:421] Can't...und

12月 02 17:32:19 node2 kube-proxy[13156]: I1202 17:32:19.215203 13156 server.go:215] Using...er.

12月 02 17:32:19 node2 kube-proxy[13156]: W1202 17:32:19.216912 13156 server.go:468] Faile...und

12月 02 17:32:19 node2 kube-proxy[13156]: W1202 17:32:19.216972 13156 proxier.go:248] inva...eIP

12月 02 17:32:19 node2 kube-proxy[13156]: W1202 17:32:19.216978 13156 proxier.go:253] clus...fic

12月 02 17:32:19 node2 kube-proxy[13156]: I1202 17:32:19.217009 13156 server.go:227] Teari...es.

12月 02 17:32:19 node2 kube-proxy[13156]: I1202 17:32:19.300118 13156 conntrack.go:81] Set...072

12月 02 17:32:19 node2 kube-proxy[13156]: I1202 17:32:19.300608 13156 conntrack.go:66] Set...768

12月 02 17:32:19 node2 kube-proxy[13156]: I1202 17:32:19.301257 13156 conntrack.go:81] Set...400

12月 02 17:32:19 node2 kube-proxy[13156]: I1202 17:32:19.301310 13156 conntrack.go:81] Set...600

Hint: Some lines were ellipsized, use -l to show in full.

Configuring kubelet on node2

[root@node1 ~]# scp /etc/kubernetes/kubelet 192.168.10.113:/etc/kubernetes/

root@192.168.10.113's password:

kubelet 100% 614 649.7KB/s 00:00

[root@node2 ~]# vim /etc/kubernetes/kubelet

Change line 11: KUBELET_HOSTNAME="--hostname-override=node1"

To: KUBELET_HOSTNAME="--hostname-override=node2" #change this to node2
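
Because the kubelet file is copied from node1, the hostname override is the only value that differs per node, and the manual edit can be scripted. This is a minimal sketch assuming GNU sed; set_hostname_override is a hypothetical helper name.

```shell
# set_hostname_override FILE NODE_NAME
# Rewrites the --hostname-override value in a kubelet config file in place.
set_hostname_override() {
    local file="$1" node_name="$2"
    # [^"]* matches the old node name up to the closing double quote
    sed -i "s|--hostname-override=[^\"]*|--hostname-override=${node_name}|" "$file"
}

# Usage on node2 (not executed here):
#   set_hostname_override /etc/kubernetes/kubelet node2
```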

[root@node2 ~]# systemctl restart kubelet

[root@node2 ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@node2 ~]# systemctl status kubelet

● kubelet.service - Kubernetes Kubelet Server

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Active: active (running) since 五 2022-12-02 17:34:36 CST; 19s ago

Docs: https://github.com/GoogleCloudPlatform/kubernetes

Main PID: 14767 (kubelet)

CGroup: /system.slice/kubelet.service

├─14767 /usr/bin/kubelet --logtostderr=true --v=0 --api-servers=http://192.168.10.111...

└─14816 journalctl -k -f

12月 02 17:34:36 node2 kubelet[14767]: W1202 17:34:36.681568 14767 manager.go:247] Registr...sed

12月 02 17:34:36 node2 kubelet[14767]: I1202 17:34:36.681577 14767 factory.go:54] Register...ory

12月 02 17:34:36 node2 kubelet[14767]: I1202 17:34:36.681995 14767 factory.go:86] Register...ory

12月 02 17:34:36 node2 kubelet[14767]: I1202 17:34:36.682337 14767 manager.go:1106] Starte...ger

12月 02 17:34:36 node2 kubelet[14767]: I1202 17:34:36.683565 14767 oomparser.go:185] oompa...emd

12月 02 17:34:36 node2 kubelet[14767]: I1202 17:34:36.685672 14767 manager.go:288] Startin...ers

12月 02 17:34:36 node2 kubelet[14767]: I1202 17:34:36.730679 14767 manager.go:293] Recover...ted

12月 02 17:34:36 node2 kubelet[14767]: I1202 17:34:36.770421 14767 kubelet_node_status.go:...ach

12月 02 17:34:36 node2 kubelet[14767]: I1202 17:34:36.772143 14767 kubelet_node_status.go:...de2

12月 02 17:34:36 node2 kubelet[14767]: I1202 17:34:36.774454 14767 kubelet_node_status.go:...de2

Hint: Some lines were ellipsized, use -l to show in full.

#Check: the connections have been established

[root@node2 ~]# netstat -antup | grep 8080

tcp 0 0 192.168.10.113:47096 192.168.10.111:8080 ESTABLISHED 14767/kubelet

tcp 0 0 192.168.10.113:47092 192.168.10.111:8080 ESTABLISHED 14767/kubelet

tcp 0 0 192.168.10.113:47098 192.168.10.111:8080 ESTABLISHED 14767/kubelet

tcp 0 0 192.168.10.113:47093 192.168.10.111:8080 ESTABLISHED 14767/kubelet

tcp 0 0 192.168.10.113:47084 192.168.10.111:8080 ESTABLISHED 13156/kube-proxy

tcp 0 0 192.168.10.113:47086 192.168.10.111:8080 ESTABLISHED 13156/kube-proxy

tcp 0 0 192.168.10.113:47100 192.168.10.111:8080 ESTABLISHED 14767/kubelet

Start all services on node2

[root@node2 ~]# systemctl restart flanneld kube-proxy kubelet docker

[root@node2 ~]# systemctl enable flanneld kube-proxy kubelet docker

[root@node2 ~]# systemctl status flanneld kube-proxy kubelet docker

Check the docker0 IP: node1 and node2 get different addresses.

[root@node2 ~]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 10.255.96.1 netmask 255.255.255.0 broadcast 0.0.0.0

ether 02:42:be:65:0d:d4 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Test:

[root@node2 ~]# netstat -antup | grep proxy

tcp 0 0 127.0.0.1:10249 0.0.0.0:* LISTEN 16211/kube-proxy

tcp 0 0 192.168.10.113:47104 192.168.10.111:8080 ESTABLISHED 16211/kube-proxy

tcp 0 0 192.168.10.113:47102 192.168.10.111:8080 ESTABLISHED 16211/kube-proxy

Note: kube-proxy listens on port 10249.

Note: kube-proxy communicates with port 8080 on master.

Log in to master and check the status of the whole cluster:

[root@master ~]# kubectl get nodes

NAME STATUS AGE

node1 Ready 19m

node2 Ready 7m
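
For scripted checks, the table printed by kubectl get nodes can be evaluated automatically. The following is a minimal sketch that assumes the three-column layout shown above (NAME, STATUS, AGE); all_nodes_ready is a hypothetical helper that reads the table from standard input.

```shell
# all_nodes_ready
# Reads `kubectl get nodes` output on stdin; succeeds only if at least one
# node is listed and every node reports the status Ready.
all_nodes_ready() {
    awk 'NR > 1 { total++; if ($2 != "Ready") bad++ }
         END { exit (total == 0 || bad > 0) ? 1 : 0 }'
}

# Usage on master (not executed here):
#   kubectl get nodes | all_nodes_ready && echo "cluster is ready"
```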

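The whole Kubernetes cluster is now up and running.

Services to start and ports to open on each Kubernetes node

In this walkthrough the four Kubernetes roles (etcd, master, node1, node2) start 13 services in total and use 6 port numbers.

Details:

etcd: 1 service, communicating on port 2379

Start the service: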

[root@master ~]# systemctl restart etcd

master: 4 services, communicating on port 8080

[root@master ~]# systemctl restart kube-apiserver kube-controller-manager kube-scheduler flanneld

node1: 4 services

kube-proxy listens on port 10249; kubelet listens on three ports: 10248, 10250, and 10255

[root@node1 ~]# systemctl restart flanneld kube-proxy kubelet docker

node2: 4 services

[root@node2 ~]# systemctl restart flanneld kube-proxy kubelet docker