2 矩阵相加

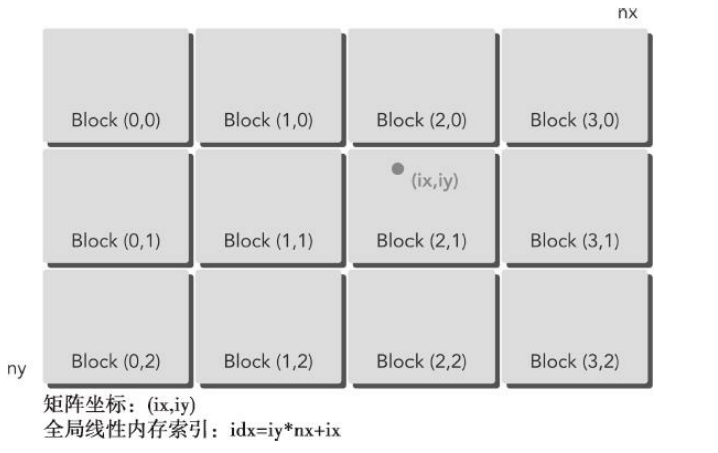

使用二维网格以及二维块来编写矩阵加法核函数。

其中进行矩阵相加的核函数的最关键步骤是将每个线程从它的线程索引映射到全局线性内存索引中。

注意到,ix实际上表示的是列的变化,iy实际上是表示行的变化,nx和ny表示的是矩阵的维度。说明一行

所以idx=iy*nx+ix;

每一行有nx个数字,iy表示行的变化,ix表示在这行的某个位置,所以idx表示的是在整个数据中的索引。

-

在CPU上实现矩阵相加

其实就是定义三个矩阵的数组,以全局索引的形式保存数据,ic=ia+ib;

就是对于数组中的每一个位置上的数,进行一对一相加。当一行的数据都加和之后,那么在第二行数据中,ia,ib,ic指针的位置需要指向第二行,因为一行的数据为nx,所以指针同步增加nx

void sumMatrixOnHost(float* a, float* b, float* c, const int nx, const int ny) { float* ia = a; float* ib = b; float* ic = c; for (int iy = 0; iy < ny; iy++) { for (int ix = 0; ix < nx; ix++) { ic[ix] = ia[ix] + ib[ix]; } ia += nx; ib += nx; ic += nx; } } -

在GPU上实现矩阵相加

对于每个线程,计算出它在全局索引中的位置,实现矩阵的加法操作。

__global__ void sumMatrixOnGPU2D(float* a, float* b, float* c, const int nx, const int ny) { int ix = threadIdx.x + blockDim.x * blockIdx.x; int iy = threadIdx.y + blockDim.y * blockIdx.y; unsigned int idx = iy * nx + ix; if (ix < nx && iy < ny) { c[idx] = a[idx] + b[idx]; } }

全代码:

#include "cuda_runtime.h"

#include "device_launch_parameters.h"

#include<stdio.h>

#include<stdlib.h>

#include<malloc.h>

#include<time.h>

#include<algorithm>

//随机初始化数组

void init(float* ip, float size) {

for (int i = 0; i < size; i++) {

ip[i] = float(rand() & 0xff) / 66.6;

}

}

//打印数组

void printMatrix(float* a, float* b, float* c, const int nx, const int ny) {

float* ia = a;

float* ib = b;

float* ic = c;

printf("\nMatric:(%d,%d)\n", nx, ny);

for (int iy = 0; iy < ny; iy++) {

for (int ix = 0; ix < nx; ix++) {

printf("%f+%f=%f", ia[ix], ib[ix], ic[ix]);

}

ia += nx;

ib += nx;

ic += nx;

printf("\n");

}

printf("\n");

}

//打印矩阵之差

void printResult(float* c, float* cc, const int nx, const int ny) {

float* ic = c;

float* icc = cc;

for (int iy = 0; iy < ny; iy++) {

for (int ix = 0; ix < nx; ix++) {

printf("%f", ic[ix] - icc[ix]);

}

ic += nx;

icc += nx;

printf("\n");

}

printf("\n");

}

//验证结果

void checkResult(float* hostRef, float* gpuRef, const int N) {

double epsilon = 1.0E-8;

int match = 1;

for (int i = 0; i < N; i++) {

if (abs(hostRef[i] - gpuRef[i]) > epsilon) {

match = 0;

printf("Array don't match");

printf("host %5.2f gpu %5.2f at current %d\n", hostRef[i], gpuRef[i], i);

break;

}

}

if (match) {

printf("Array match.\n\n");

return;

}

}

//CPU上两个矩阵相加

void sumMatrixOnHost(float* a, float* b, float* c, const int nx, const int ny) {

float* ia = a;

float* ib = b;

float* ic = c;

for (int iy = 0; iy < ny; iy++) {

for (int ix = 0; ix < nx; ix++) {

ic[ix] = ia[ix] + ib[ix];

}

ia += nx;

ib += nx;

ic += nx;

}

}

__global__ void sumMatrixOnGPU2D(float* a, float* b, float* c, const int nx, const int ny) {

int ix = threadIdx.x + blockDim.x * blockIdx.x;

int iy = threadIdx.y + blockDim.y * blockIdx.y;

unsigned int idx = iy * nx + ix;

if (ix < nx && iy < ny) {

c[idx] = a[idx] + b[idx];

}

}

int main() {

int dev = 0;

cudaDeviceProp deviceprop;

cudaGetDeviceProperties(&deviceprop, dev);

printf("using Device :%d %s\n\n", dev, deviceprop.name);

//设置矩阵维度

int nx = 1 << 12;

int ny = 1 << 12;

int nxy = nx * ny;

int nBytes = nxy * sizeof(float);

//分配CPU的相关数据内存

float* h_a, *h_b, *h_c, *h_cc;

h_a = (float*)malloc(nBytes);

h_b = (float*)malloc(nBytes);

h_c = (float*)malloc(nBytes);

h_cc = (float*)malloc(nBytes);

//初始化数据

init(h_a, nxy);

init(h_b, nxy);

//开始计时

clock_t cpuStart = clock();

sumMatrixOnHost(h_a, h_b, h_c, nx, ny);

clock_t cpuEnd = clock();

float cpuTime = (float)(cpuEnd - cpuStart) / CLOCKS_PER_SEC;

printf("cpu time %f\n", cpuTime);

//分配gpu内存

float* d_a, *d_b, *d_c;

cudaMalloc((void**)&d_a, nBytes);

cudaMalloc((void**)&d_b, nBytes);

cudaMalloc((void**)&d_c, nBytes);

//初始化网格以及块大小

dim3 block(128,1);

dim3 grid((nx + block.x - 1) / block.x, (ny + block.y - 1) / block.y);

//数据从cpu拷贝gpu

cudaMemcpy(d_a, h_a, nBytes, cudaMemcpyHostToDevice);

cudaMemcpy(d_b, h_b, nBytes, cudaMemcpyHostToDevice);

//gpu调用核函数

clock_t gpuStart = clock();

sumMatrixOnGPU2D << <grid, block >> > (d_a, d_b, d_c, nx, ny);

cudaDeviceSynchronize();

clock_t gpuEnd = clock();

float gpuTime = (float)(gpuEnd - gpuStart) / CLOCKS_PER_SEC;

printf("GPU time:%f\n", gpuTime);

// 结果从gpu再拷贝回cpu

cudaMemcpy(h_cc, d_c, nBytes, cudaMemcpyDeviceToHost);

checkResult(h_c, h_cc, nxy);

//释放内存

cudaFree(d_a);

cudaFree(d_b);

cudaFree(d_c);

free(h_a);

free(h_b);

free(h_c);

free(h_cc);

return 0;

}

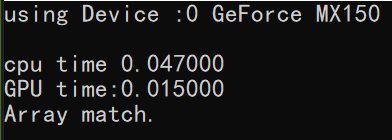

执行结果:

打印array match,说明cpu上的结果与gpu并行的结果一致。

注意:此时的gpu的时间效率比gpu高,但是在windows下无法使用gettimeofday获取精确时间,使用的是clock()函数获取当前时间,在计算gpu的并行时间的时候可能有误差

版权声明:本文为weixin_44911248原创文章,遵循 CC 4.0 BY-SA 版权协议,转载请附上原文出处链接和本声明。