首先需要对测试集做批量测试,即需要将每个测试图像输入到模型中,得到测试结果。然后统计测试结果;

本文用的事darknet中v

alid

接口函数,这里valid可以作为训练时候,使用验证集检测模型训练情况,这里使用

valid对训练好的模型做测试

;(即用来批量统计输入测试图像经过模型得到的结果)

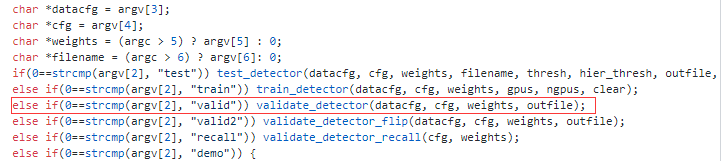

看下源码

detector.c

中run_detector函数中

valid接口

用法

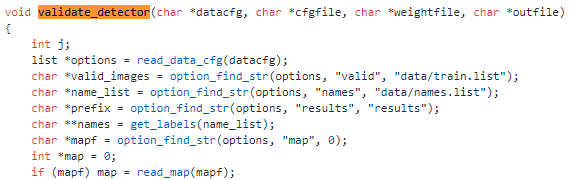

具体用法:

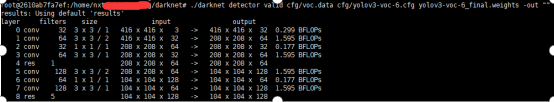

./darknet detector valid cfg/voc.data cfg/yolov3-voc-6.cfg yolov3-voc-6_final.weights -out ""

注意

voc.data 中vailde改成测试集路径

![]()

数据集路径格式

![]()

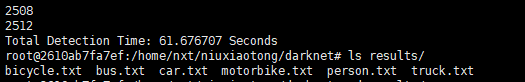

测试结果默认保存在当前路径下的./results文件夹下,如果没有,新建;

输出测试图像数

vim bicycle.txt

008153 0.005640 231.401428 410.399536 375.000000 490.319397

按列,分别为:图像名称 | 置信度 | xmin,ymin,xmax,ymax

计算各类的MAP

python reval_voc_py3.py --year 2007 --classes data/coco-6.names --image_set test --voc_dir /home/nxt/xxx/darknet/VOCdevkit --output_dir results

部分输出结果:

resultsEvaluating detections

VOC07 metric? Yes

devkit_path= /home/nxt/xx/darknet/VOCdevkit , year = 2007

!!! cachefile = /home/nxt/xxx/darknet/VOCdevkit/annotations_cache/annots.pkl

AP for bicycle = 0.8458

!!! cachefile = /home/nxt/xxx/darknet/VOCdevkit/annotations_cache/annots.pkl

AP for bus = 0.8877

!!! cachefile = /home/nxt/xxx/darknet/VOCdevkit/annotations_cache/annots.pkl

AP for car = 0.8566

.....

reval_voc_py3.py

为

非官方

计算方法,从google了解,官方使用的MATALB的工具箱计算法,需自行了解,此处代码从github找到的,时间就有点忘,后期想起来,补从地址

# reval_voc_py3.py

# !/usr/bin/env python

# Adapt from ->

# --------------------------------------------------------

# Fast R-CNN

# Copyright (c) 2015 Microsoft

# Licensed under The MIT License [see LICENSE for details]

# Written by Ross Girshick

# --------------------------------------------------------

# <- Written by Yaping Sun

"""Reval = re-eval. Re-evaluate saved detections."""

import os, sys, argparse

import numpy as np

import _pickle as cPickle

#import cPickle

from voc_eval_py3 import voc_eval

def parse_args():

"""

Parse input arguments

"""

parser = argparse.ArgumentParser(description='Re-evaluate results')

parser.add_argument('output_dir', nargs=1, help='results directory',

type=str)

parser.add_argument('--voc_dir', dest='voc_dir', default='data/VOCdevkit', type=str)

parser.add_argument('--year', dest='year', default='2017', type=str)

parser.add_argument('--image_set', dest='image_set', default='test', type=str)

parser.add_argument('--classes', dest='class_file', default='data/voc.names', type=str)

if len(sys.argv) == 1:

parser.print_help()

sys.exit(1)

args = parser.parse_args()

return args

def get_voc_results_file_template(image_set, out_dir = 'results'):

#filename = 'comp4_det_' + image_set + '_{:s}.txt'

filename = '{:s}.txt'

path = os.path.join(out_dir, filename)

return path

def do_python_eval(devkit_path, year, image_set, classes, output_dir = 'results'):

annopath = os.path.join(

devkit_path,

'VOC' + year+'_test', # voc2007_test

'Annotations',

'{}.xml')

imagesetfile = os.path.join(

devkit_path,

'VOC' + year+'_test',

'ImageSets',

'Main',

image_set + '.txt')

cachedir = os.path.join(devkit_path, 'annotations_cache')

aps = []

# The PASCAL VOC metric changed in 2010

use_07_metric = True if int(year) < 2010 else False

print('VOC07 metric? ' + ('Yes' if use_07_metric else 'No'))

print('devkit_path=',devkit_path,', year = ',year)

if not os.path.isdir(output_dir):

os.mkdir(output_dir)

for i, cls in enumerate(classes):

if cls == '__background__':

continue

filename = get_voc_results_file_template(image_set).format(cls)

rec, prec, ap = voc_eval(

filename, annopath, imagesetfile, cls, cachedir, ovthresh=0.5,

use_07_metric=use_07_metric)

print('rec:', rec.shape)

#np.savetxt('%s.txt',i, rec)

print('prec:', prec.shape)

#print(prec)

aps += [ap]

print('AP for {} = {:.4f}'.format(cls, ap))

cls_prec = cls+'_prec'

np.savetxt(cls,rec)

np.savetxt(cls_prec,prec)

with open(os.path.join(output_dir, cls + '_pr.pkl'), 'wb') as f:

cPickle.dump({'rec': rec, 'prec': prec, 'ap': ap}, f)

print('Mean AP = {:.4f}'.format(np.mean(aps)))

print('~~~~~~~~')

print('Results:')

for ap in aps:

print('{:.3f}'.format(ap))

print('{:.3f}'.format(np.mean(aps)))

print('~~~~~~~~')

print('')

print('--------------------------------------------------------------')

print('Results computed with the **unofficial** Python eval code.')

print('Results should be very close to the official MATLAB eval code.')

print('-- Thanks, The Management')

print('--------------------------------------------------------------')

if __name__ == '__main__':

args = parse_args() # input parameter

output_dir = os.path.abspath(args.output_dir[0]) # output dir

with open(args.class_file, 'r') as f:

lines = f.readlines()

classes = [t.strip('\n') for t in lines] # class names

print('Evaluating detections')

do_python_eval(args.voc_dir, args.year, args.image_set, classes, output_dir)

voc_eval.py

代码原味python2这里我更为python3版本

#!/usr/bin/env python

# Adapt from ->

# --------------------------------------------------------

# Fast R-CNN

# Copyright (c) 2015 Microsoft

# Licensed under The MIT License [see LICENSE for details]

# Written by Ross Girshick

# --------------------------------------------------------

# <- Written by Yaping Sun

"""Reval = re-eval. Re-evaluate saved detections."""

import os, sys, argparse

import numpy as np

import _pickle as cPickle

#import cPickle

from voc_eval_py3 import voc_eval

def parse_args():

"""

Parse input arguments

"""

parser = argparse.ArgumentParser(description='Re-evaluate results')

parser.add_argument('output_dir', nargs=1, help='results directory',

type=str)

parser.add_argument('--voc_dir', dest='voc_dir', default='data/VOCdevkit', type=str)

parser.add_argument('--year', dest='year', default='2017', type=str)

parser.add_argument('--image_set', dest='image_set', default='test', type=str)

parser.add_argument('--classes', dest='class_file', default='data/voc.names', type=str)

if len(sys.argv) == 1:

parser.print_help()

sys.exit(1)

args = parser.parse_args()

return args

def get_voc_results_file_template(image_set, out_dir = 'results'):

#filename = 'comp4_det_' + image_set + '_{:s}.txt'

filename = '{:s}.txt'

path = os.path.join(out_dir, filename)

return path

def do_python_eval(devkit_path, year, image_set, classes, output_dir = 'results'):

annopath = os.path.join(

devkit_path,

'VOC' + year+'_test', # voc2007_test

'Annotations',

'{}.xml')

imagesetfile = os.path.join(

devkit_path,

'VOC' + year+'_test',

'ImageSets',

'Main',

image_set + '.txt')

cachedir = os.path.join(devkit_path, 'annotations_cache')

aps = []

# The PASCAL VOC metric changed in 2010

use_07_metric = True if int(year) < 2010 else False

print('VOC07 metric? ' + ('Yes' if use_07_metric else 'No'))

print('devkit_path=',devkit_path,', year = ',year)

if not os.path.isdir(output_dir):

os.mkdir(output_dir)

for i, cls in enumerate(classes):

if cls == '__background__':

continue

filename = get_voc_results_file_template(image_set).format(cls)

rec, prec, ap = voc_eval(

filename, annopath, imagesetfile, cls, cachedir, ovthresh=0.5,

use_07_metric=use_07_metric)

print('rec:', rec.shape)

#np.savetxt('%s.txt',i, rec)

print('prec:', prec.shape)

#print(prec)

aps += [ap]

print('AP for {} = {:.4f}'.format(cls, ap))

cls_prec = cls+'_prec'

np.savetxt(cls,rec)

np.savetxt(cls_prec,prec)

with open(os.path.join(output_dir, cls + '_pr.pkl'), 'wb') as f:

cPickle.dump({'rec': rec, 'prec': prec, 'ap': ap}, f)

print('Mean AP = {:.4f}'.format(np.mean(aps)))

print('~~~~~~~~')

print('Results:')

for ap in aps:

print('{:.3f}'.format(ap))

print('{:.3f}'.format(np.mean(aps)))

print('~~~~~~~~')

print('')

print('--------------------------------------------------------------')

print('Results computed with the **unofficial** Python eval code.')

print('Results should be very close to the official MATLAB eval code.')

print('-- Thanks, The Management')

print('--------------------------------------------------------------')

if __name__ == '__main__':

args = parse_args() # input parameter

output_dir = os.path.abspath(args.output_dir[0]) # output dir

with open(args.class_file, 'r') as f:

lines = f.readlines()

classes = [t.strip('\n') for t in lines] # class names

print('Evaluating detections')

do_python_eval(args.voc_dir, args.year, args.image_set, classes, output_dir)

本人略菜,有问题请指出;