目录

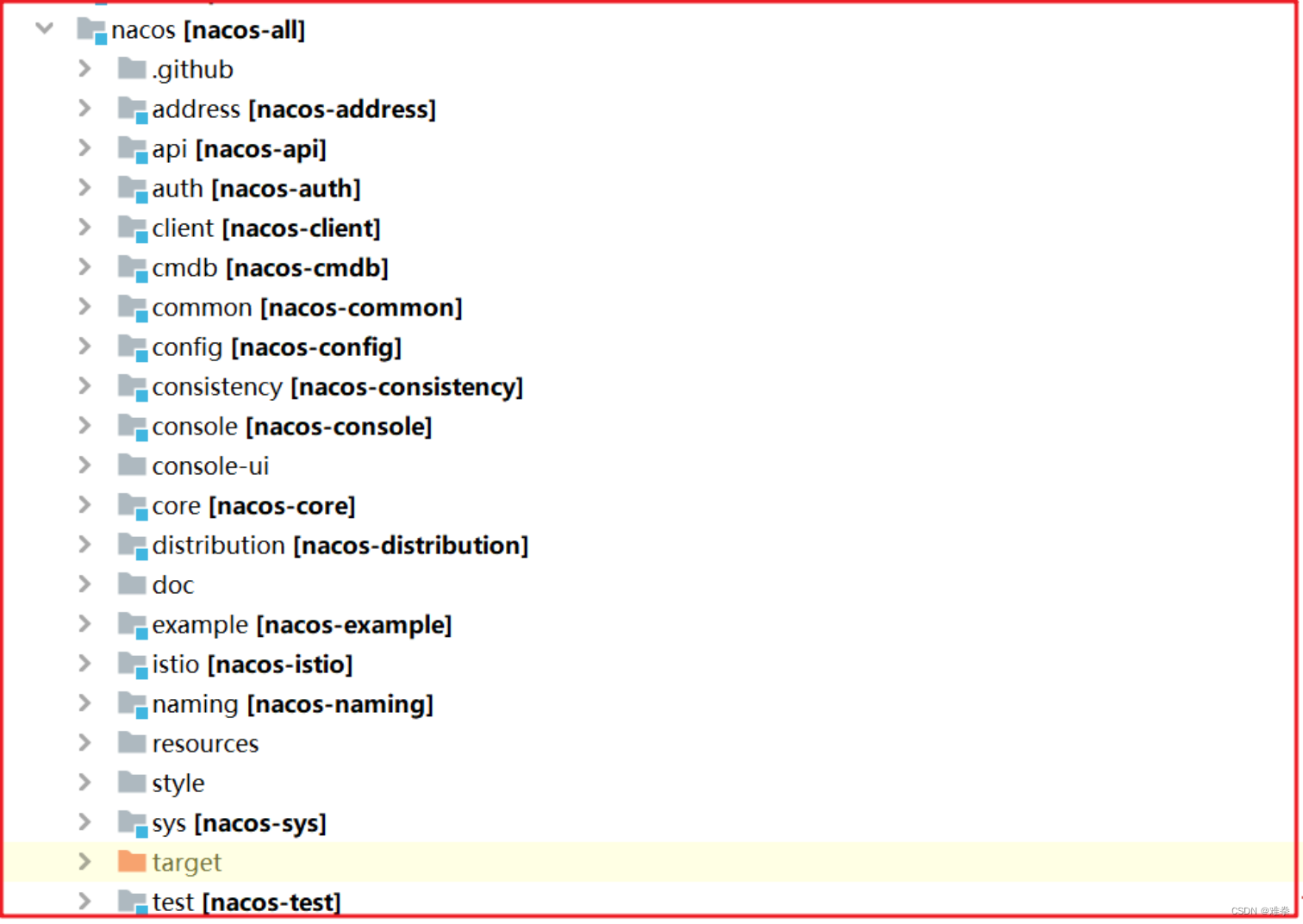

1.0.Nacos源码剖析

1.1.Nacos配置存储mysql数据库

1.2.客户端工作流程

1.2.1服务创建

1.2.2.服务注册

1.2.3.服务发现

1.2.4.服务下线

1.2.4.服务订阅

1.3.服务端工作流程

1.3.1.注册处理

1.3.2.一致性算法Distro协议介绍

1.3.3.Distro服务启动-寻址模式

1.3.3.1.单机寻址模式

1.3.3.2.文件寻址模式

编辑

1.3.3.3.服务器寻址模式

1.3.5.集群数据同步

1.3.5.1.全量同步

◆ 任务启动

◆ 数据执行加载

◆ 数据同步

1.3.5.2.增量同步

◆ 增量数据入口

◆ 增量同步操作

◆ 详细增量数据同步(单机)

2 .0.Sentinel Dashboard数据持久化

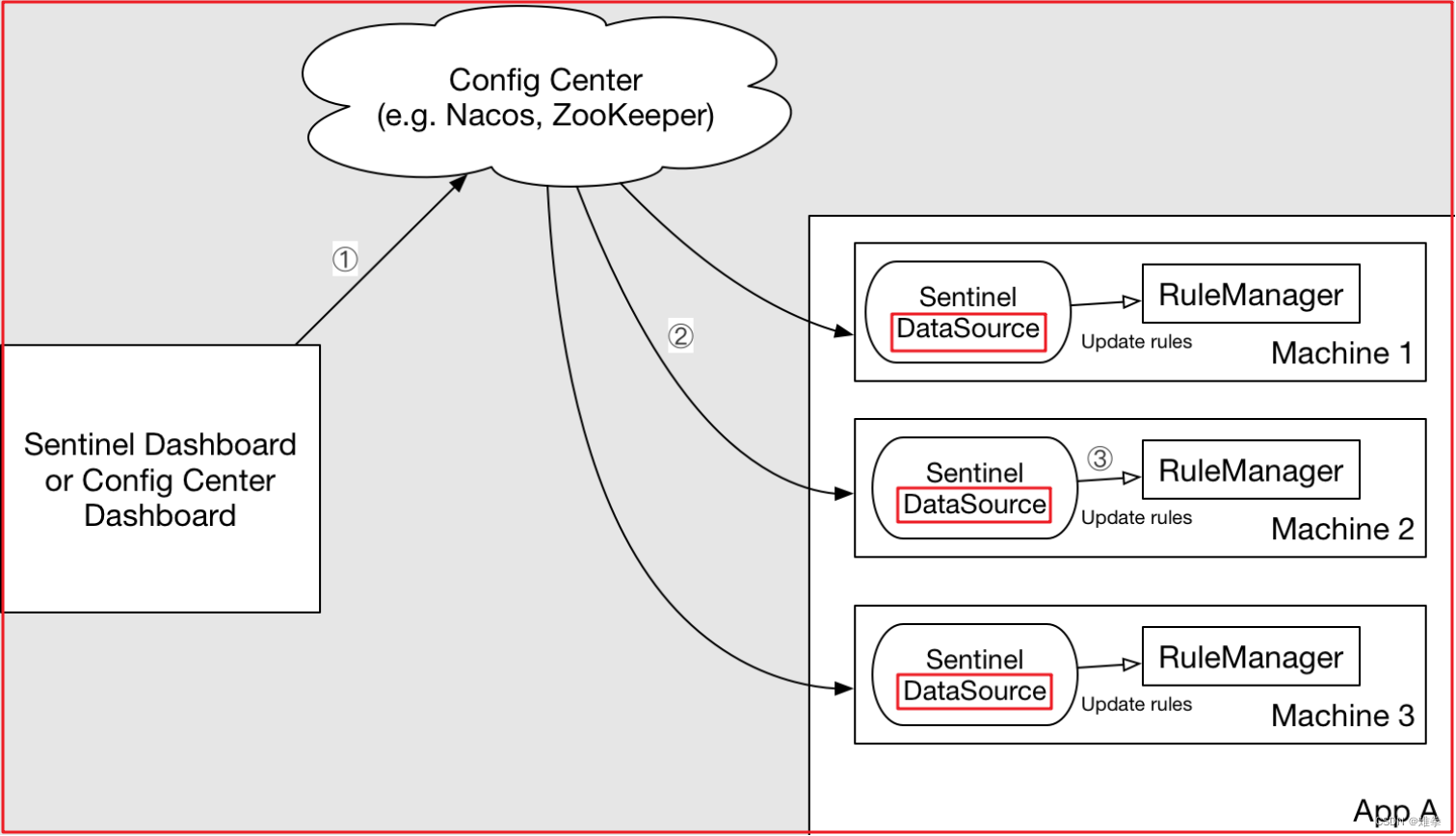

2.1.动态配置原理

2.2.Sentinel+Nacos数据持久化

2.2.1.Dashboard改造分析

2.2.2.页面改造

2.2.3.Nacos配置

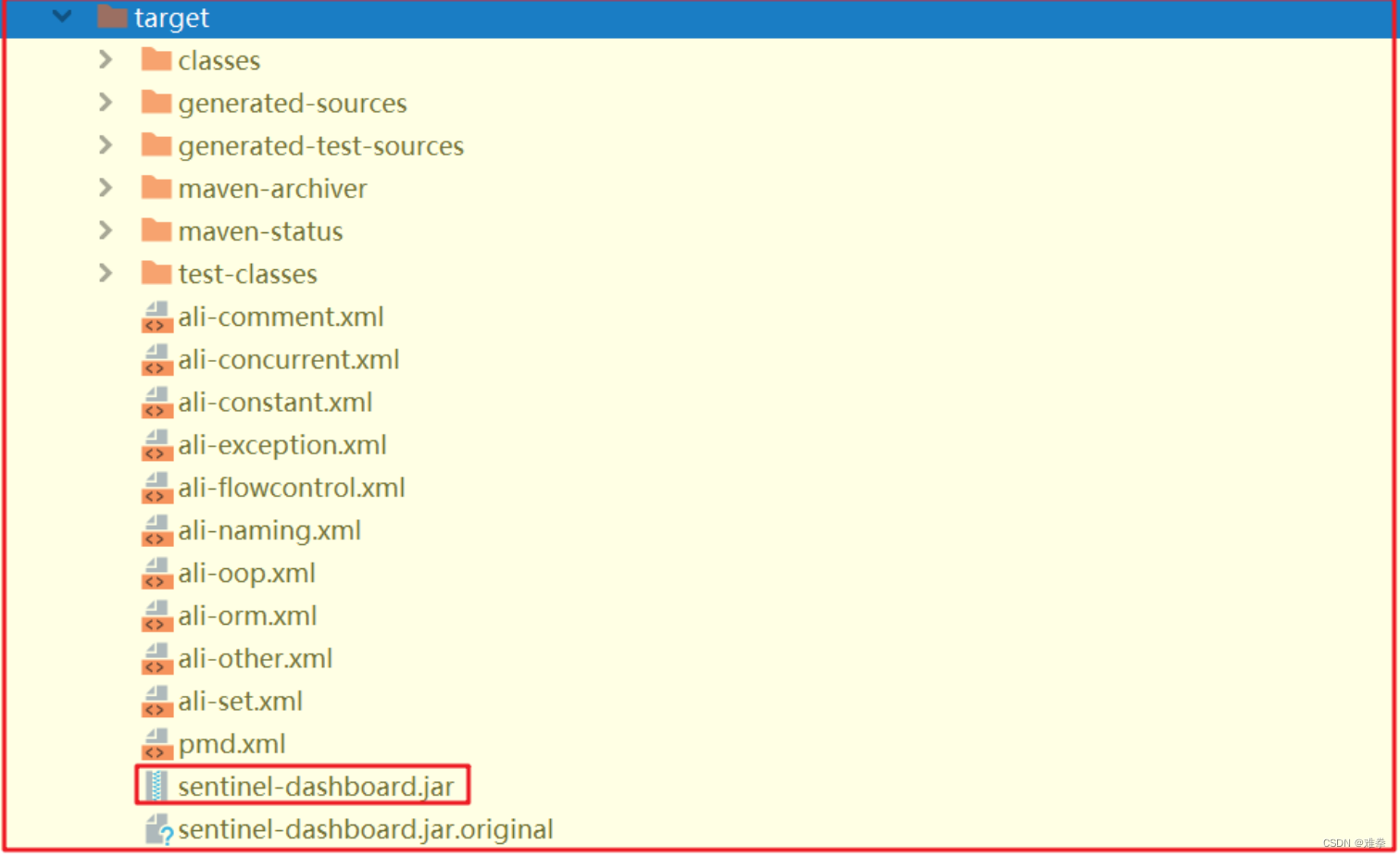

2.3.4.Dashboard持久化改造

2.3.5.Nacos配置创建

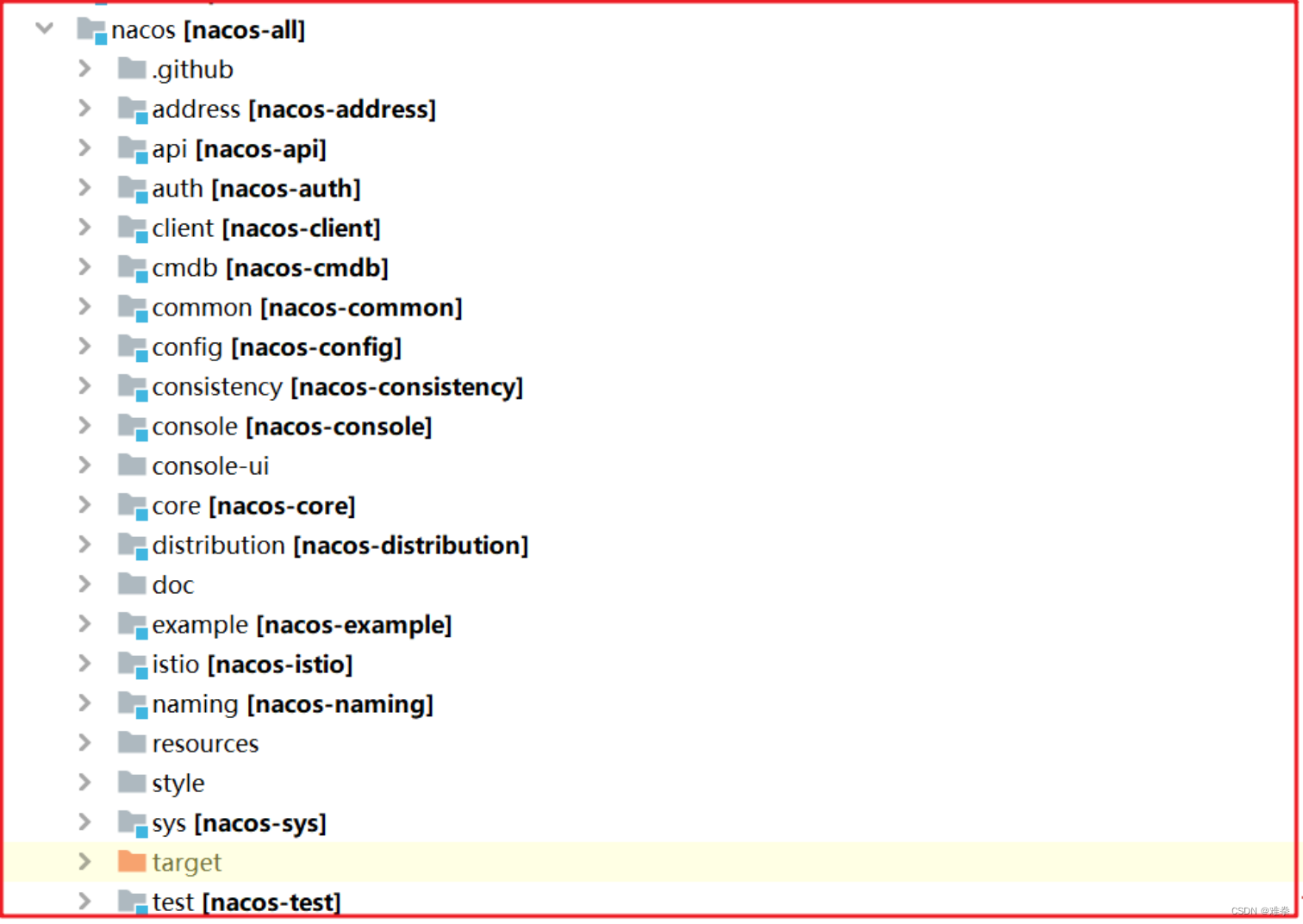

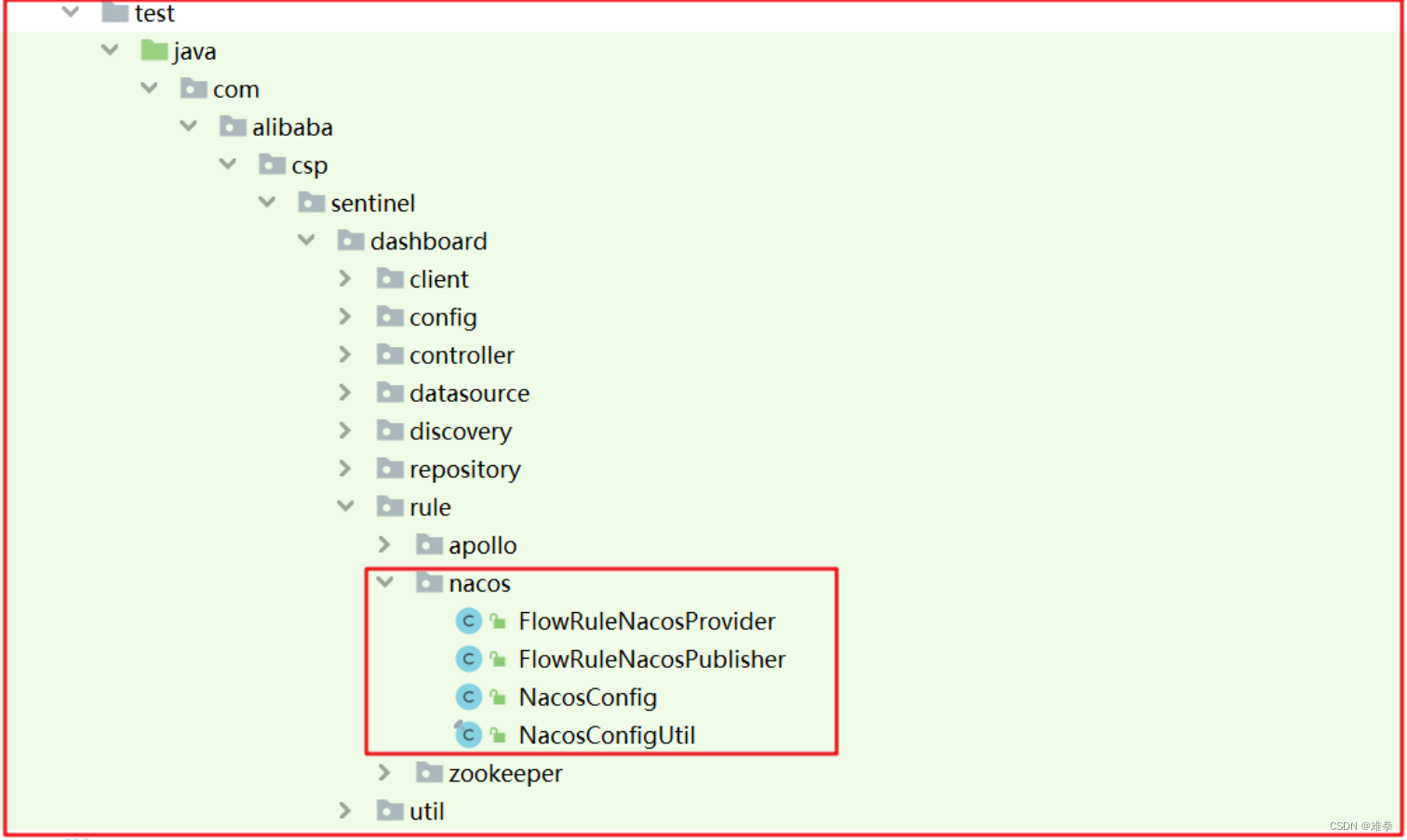

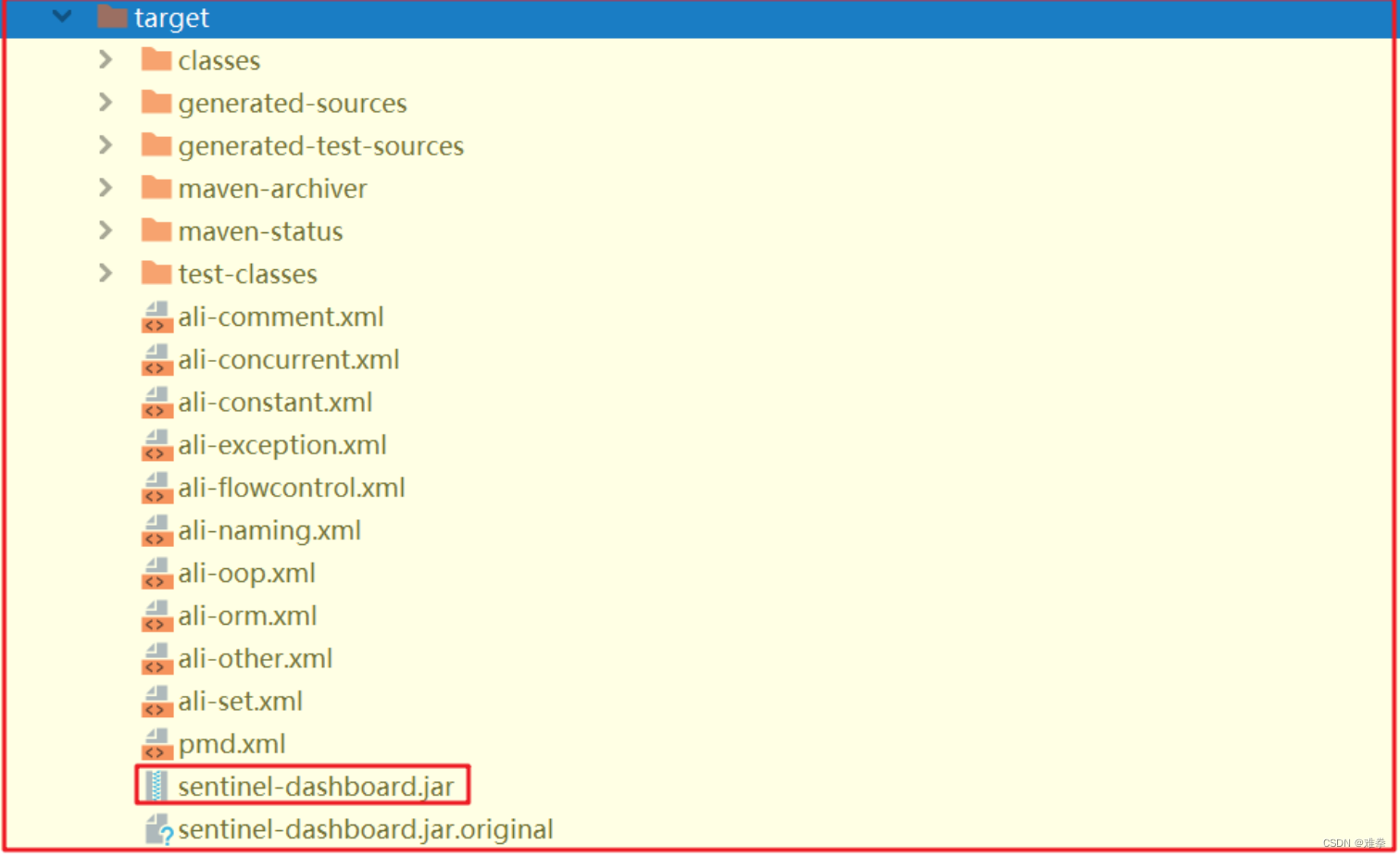

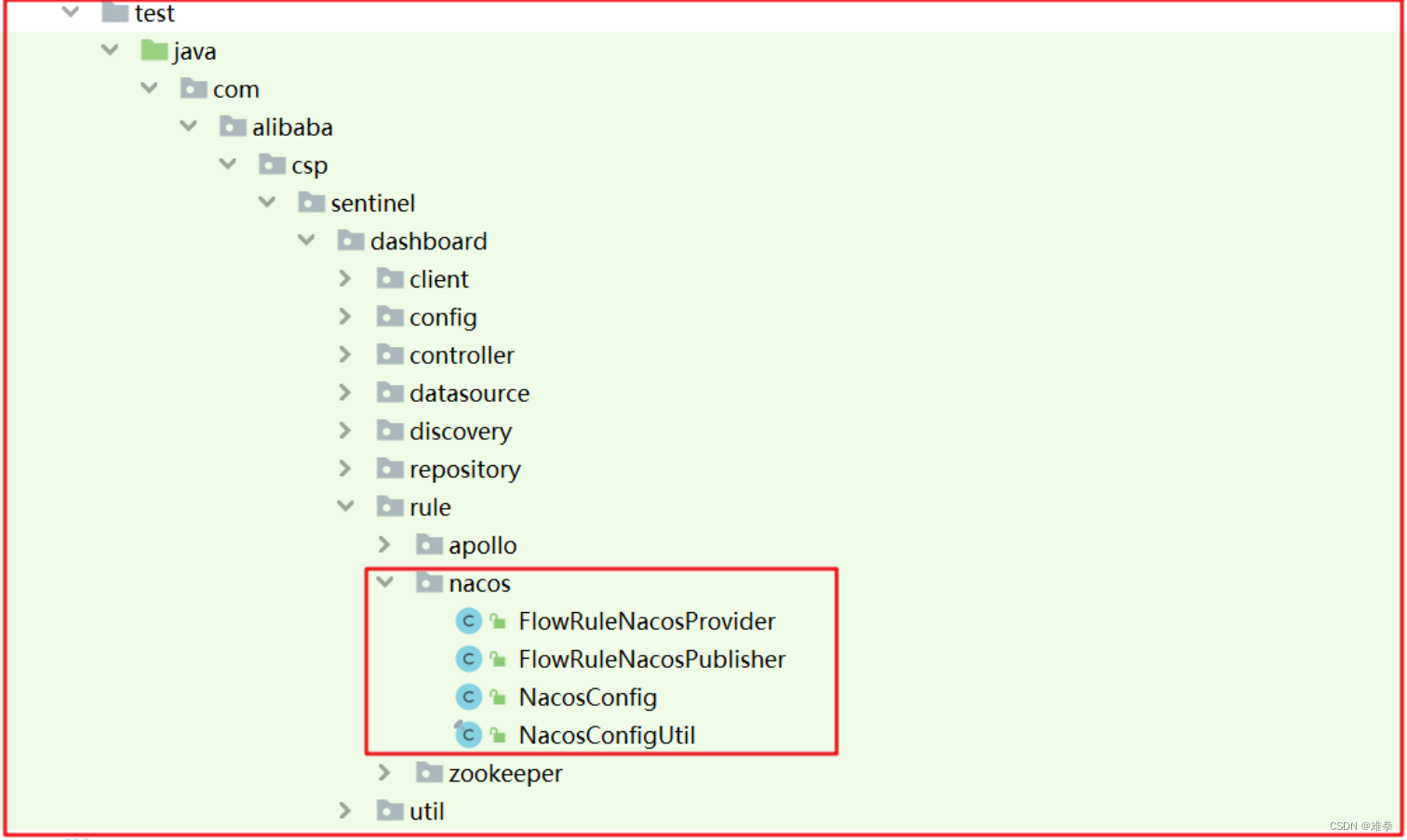

2.3.6.改造源码

2.3.6.1.改造NacosConfifig

2.3.6.2.动态获取流控规则

2.3.6.3.数据持久化测试

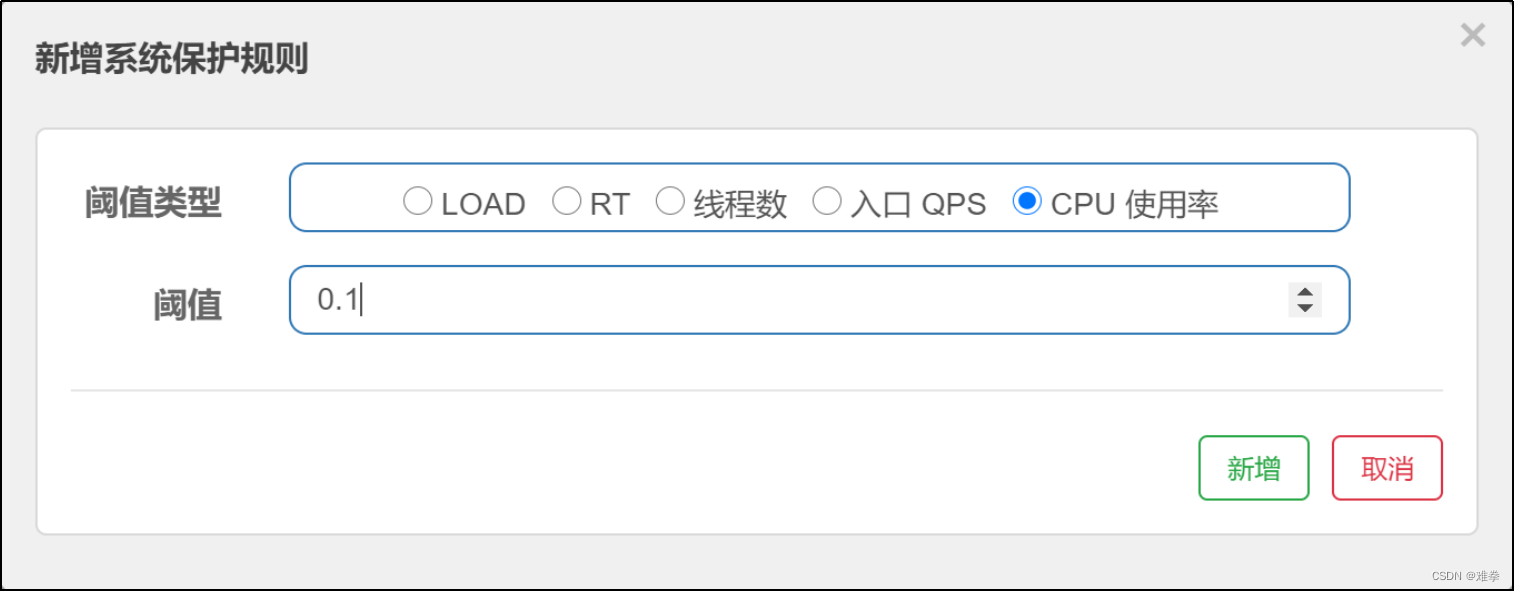

2.3.系统规则定义

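1.0. Nacos Source Code Analysis

The Nacos source code has a lot worth learning from. To understand Nacos in depth, we walk through the source and focus on two topics:

1: How the Nacos client accesses the registry

2: How Nacos processes a service registration

The first step in a deep dive is setting up the Nacos source environment. That setup is straightforward and rarely produces errors, so we do not demonstrate it here.

The interaction between a client and the registry server centers on service registration, service deregistration, service discovery, and service subscription. Registration and discovery are used most often. The sections below analyze all four from the source code.

In the Nacos source, the class com.alibaba.nacos.example.NamingExample in the nacos-example module demonstrates all four operations, so we use it as the entry point: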

    public class NamingExample {

        public static void main(String[] args) throws NacosException {
            Properties properties = new Properties();
            properties.setProperty("serverAddr", System.getProperty("serverAddr"));
            properties.setProperty("namespace", System.getProperty("namespace"));

            // Create the NamingService used for registration
            NamingService naming = NamingFactory.createNamingService(properties);

            // Service registration
            naming.registerInstance("nacos.test.3", "11.11.11.11", 8888, "TEST1");
            naming.registerInstance("nacos.test.3", "2.2.2.2", 9999, "DEFAULT");

            // Service discovery
            System.out.println(naming.getAllInstances("nacos.test.3"));

            // Service deregistration
            naming.deregisterInstance("nacos.test.3", "2.2.2.2", 9999, "DEFAULT");
            System.out.println(naming.getAllInstances("nacos.test.3"));

            // Service subscription
            Executor executor = new ThreadPoolExecutor(1, 1, 0L, TimeUnit.MILLISECONDS,
                    new LinkedBlockingQueue<Runnable>(), new ThreadFactory() {
                        @Override
                        public Thread newThread(Runnable r) {
                            Thread thread = new Thread(r);
                            thread.setName("test-thread");
                            return thread;
                        }
                    });

            naming.subscribe("nacos.test.3", new AbstractEventListener() {

                // EventListener onEvent is sync to handle, If process too low in onEvent, maybe block other onEvent callback.
                // So you can override getExecutor() to async handle event.
                @Override
                public Executor getExecutor() {
                    return executor;
                }

                @Override
                public void onEvent(Event event) {
                    System.out.println(((NamingEvent) event).getServiceName());
                    System.out.println(((NamingEvent) event).getInstances());
                }
            });
        }
    }

1.1. Storing Nacos Configuration in MySQL

To store configuration in MySQL, open application.properties in the nacos-console module and add the MySQL connection settings:

    # Use MySQL as the configuration store
    spring.datasource.platform=mysql

    ### Count of DB:
    db.num=1

    ### Connect URL of DB:
    db.url.0=jdbc:mysql://127.0.0.1:3306/nacos?characterEncoding=utf8&connectTimeout=1000&socketTimeout=3000&autoReconnect=true&useUnicode=true&useSSL=false&serverTimezone=UTC
    db.user.0=nacos
    db.password.0=nacos

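The table schema for the configuration store is in schema.sql under resources/META-INF in the nacos-console module. Run that script in the MySQL database.

With the settings above in place, Nacos saves configuration data to the database.

To start Nacos, run the Nacos bootstrap class in the nacos-console module: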

    @SpringBootApplication(scanBasePackages = "com.alibaba.nacos")
    @ServletComponentScan
    // Enables scheduled tasks
    @EnableScheduling
    public class Nacos {

        public static void main(String[] args) {
            SpringApplication.run(Nacos.class, args);
        }
    }

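1.2. Client Workflow

Let us first look at how the client implements service registration, service discovery, service deregistration, and service subscription.

1.2.1. Service Creation

Service subscription is supported as soon as a NamingService is created. The NamingService object is created as follows: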

    /**
     * Creates the NamingService object.
     */
    public static NamingService createNamingService(Properties properties) throws NacosException {
        try {
            Class<?> driverImplClass = Class.forName("com.alibaba.nacos.client.naming.NacosNamingService");
            Constructor constructor = driverImplClass.getConstructor(Properties.class);
            // NamingService
            NamingService vendorImpl = (NamingService) constructor.newInstance(properties);
            return vendorImpl;
        } catch (Throwable e) {
            throw new NacosException(NacosException.CLIENT_INVALID_PARAM, e);
        }
    }

Next, trace what the created NamingService does. Constructing it initializes the remote proxy object, the heartbeat task, and the subscription task.

    /**
     * Constructor: creates a NacosNamingService.
     */
    public NacosNamingService(String serverList) throws NacosException {
        Properties properties = new Properties();
        properties.setProperty(PropertyKeyConst.SERVER_ADDR, serverList);
        // Initialize from the properties
        init(properties);
    }

    public NacosNamingService(Properties properties) throws NacosException {
        init(properties);
    }

    /**
     * Initialization.
     */
    private void init(Properties properties) throws NacosException {
        ValidatorUtils.checkInitParam(properties);
        this.namespace = InitUtils.initNamespaceForNaming(properties);
        InitUtils.initSerialization();
        initServerAddr(properties);
        InitUtils.initWebRootContext(properties);
        initCacheDir();
        initLogName(properties);

        // Create the proxy object that performs remote operations over HTTP
        this.serverProxy = new NamingProxy(this.namespace, this.endpoint, this.serverList, properties);
        // Scheduled heartbeat task
        this.beatReactor = new BeatReactor(this.serverProxy, initClientBeatThreadCount(properties));
        // Subscription: scheduled task that periodically pulls the service list from the server
        this.hostReactor = new HostReactor(this.serverProxy, beatReactor, this.cacheDir, isLoadCacheAtStart(properties),
                isPushEmptyProtect(properties), initPollingThreadCount(properties));
    }

Continue into HostReactor, which handles subscription:

    public HostReactor(NamingProxy serverProxy, BeatReactor beatReactor, String cacheDir, boolean loadCacheAtStart,
            boolean pushEmptyProtection, int pollingThreadCount) {
        // init executorService
        this.executor = new ScheduledThreadPoolExecutor(pollingThreadCount, new ThreadFactory() {
            @Override
            public Thread newThread(Runnable r) {
                Thread thread = new Thread(r);
                thread.setDaemon(true);
                thread.setName("com.alibaba.nacos.client.naming.updater");
                return thread;
            }
        });

        this.beatReactor = beatReactor;
        this.serverProxy = serverProxy;
        this.cacheDir = cacheDir;
        if (loadCacheAtStart) {
            this.serviceInfoMap = new ConcurrentHashMap<String, ServiceInfo>(DiskCache.read(this.cacheDir));
        } else {
            this.serviceInfoMap = new ConcurrentHashMap<String, ServiceInfo>(16);
        }
        this.pushEmptyProtection = pushEmptyProtection;
        this.updatingMap = new ConcurrentHashMap<String, Object>();
        this.failoverReactor = new FailoverReactor(this, cacheDir);
        // Create the object that receives pushed service lists
        this.pushReceiver = new PushReceiver(this);
        this.notifier = new InstancesChangeNotifier();

        NotifyCenter.registerToPublisher(InstancesChangeEvent.class, 16384);
        NotifyCenter.registerSubscriber(notifier);
    }

Now trace PushReceiver, the object that receives pushed service lists:

    public class PushReceiver implements Runnable, Closeable {

        private static final Charset UTF_8 = Charset.forName("UTF-8");

        private static final int UDP_MSS = 64 * 1024;

        // Executor that runs the receive loop
        private ScheduledExecutorService executorService;

        private DatagramSocket udpSocket;

        private HostReactor hostReactor;

        private volatile boolean closed = false;

        public PushReceiver(HostReactor hostReactor) {
            try {
                this.hostReactor = hostReactor;
                // UDP socket (plain DatagramSocket, not NIO) on which the server pushes service changes
                this.udpSocket = new DatagramSocket();
                // Executor with a single daemon thread
                this.executorService = new ScheduledThreadPoolExecutor(1, new ThreadFactory() {
                    @Override
                    public Thread newThread(Runnable r) {
                        Thread thread = new Thread(r);
                        thread.setDaemon(true);
                        thread.setName("com.alibaba.nacos.naming.push.receiver");
                        return thread;
                    }
                });
                // Start the receive loop; run() keeps blocking on udpSocket.receive() until closed
                this.executorService.execute(this);
            } catch (Exception e) {
                NAMING_LOGGER.error("[NA] init udp socket failed", e);
            }
        }

        @Override
        public void run() {
            while (!closed) {
                try {
                    // byte[] is initialized with 0 full filled by default
                    byte[] buffer = new byte[UDP_MSS];
                    DatagramPacket packet = new DatagramPacket(buffer, buffer.length);
                    // Block until a UDP packet arrives from the server
                    udpSocket.receive(packet);

                    String json = new String(IoUtils.tryDecompress(packet.getData()), UTF_8).trim();
                    NAMING_LOGGER.info("received push data: " + json + " from " + packet.getAddress().toString());

                    // Deserialize
                    PushPacket pushPacket = JacksonUtils.toObj(json, PushPacket.class);
                    String ack;
                    if ("dom".equals(pushPacket.type) || "service".equals(pushPacket.type)) {
                        // Process the pushed service data
                        hostReactor.processServiceJson(pushPacket.data);

                        // send ack to server
                        // The ack tells the server that the push has been received
                        ack = "{\"type\": \"push-ack\"" + ", \"lastRefTime\":\"" + pushPacket.lastRefTime
                                + "\", \"data\":" + "\"\"}";
                    } else if ("dump".equals(pushPacket.type)) {
                        // dump data to server
                        ack = "{\"type\": \"dump-ack\"" + ", \"lastRefTime\": \"" + pushPacket.lastRefTime
                                + "\", \"data\":" + "\""
                                + StringUtils.escapeJavaScript(JacksonUtils.toJson(hostReactor.getServiceInfoMap()))
                                + "\"}";
                    } else {
                        // do nothing send ack only
                        ack = "{\"type\": \"unknown-ack\"" + ", \"lastRefTime\":\"" + pushPacket.lastRefTime
                                + "\", \"data\":" + "\"\"}";
                    }

                    udpSocket.send(new DatagramPacket(ack.getBytes(UTF_8), ack.getBytes(UTF_8).length,
                            packet.getSocketAddress()));
                } catch (Exception e) {
                    if (closed) {
                        return;
                    }
                    NAMING_LOGGER.error("[NA] error while receiving push data", e);
                }
            }
        }

        … …

processServiceJson then handles the service data received from the server: it deserializes the payload and stores the result in serviceInfoMap.

    public ServiceInfo processServiceJson(String json) {
        // Parse the payload
        ServiceInfo serviceInfo = JacksonUtils.toObj(json, ServiceInfo.class);
        ServiceInfo oldService = serviceInfoMap.get(serviceInfo.getKey());

        if (pushEmptyProtection && !serviceInfo.validate()) {
            //empty or error push, just ignore
            return oldService;
        }

        boolean changed = false;

        if (oldService != null) {

            if (oldService.getLastRefTime() > serviceInfo.getLastRefTime()) {
                NAMING_LOGGER.warn("out of date data received, old-t: " + oldService.getLastRefTime() + ", new-t: "
                        + serviceInfo.getLastRefTime());
            }

            // === Store the received service data in the local serviceInfoMap ===
            serviceInfoMap.put(serviceInfo.getKey(), serviceInfo);

            Map<String, Instance> oldHostMap = new HashMap<String, Instance>(oldService.getHosts().size());
            for (Instance host : oldService.getHosts()) {
                oldHostMap.put(host.toInetAddr(), host);
            }
            … …
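The guard at the top of processServiceJson can be looked at in isolation. The following is a minimal sketch and not Nacos code: `ServiceSnapshot`, `PushCacheSketch`, and `isValid` are hypothetical names, and "valid" is assumed here to mean "lists at least one host", which may differ from what `ServiceInfo.validate()` actually checks.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical stand-in for ServiceInfo: a key, a refresh timestamp, and a host list. */
final class ServiceSnapshot {
    final String key;
    final long lastRefTime;
    final List<String> hosts;

    ServiceSnapshot(String key, long lastRefTime, List<String> hosts) {
        this.key = key;
        this.lastRefTime = lastRefTime;
        this.hosts = hosts;
    }

    /** Assumption: a snapshot counts as valid when it lists at least one host. */
    boolean isValid() {
        return hosts != null && !hosts.isEmpty();
    }
}

final class PushCacheSketch {
    private final Map<String, ServiceSnapshot> cache = new ConcurrentHashMap<>();
    private final boolean pushEmptyProtection;

    PushCacheSketch(boolean pushEmptyProtection) {
        this.pushEmptyProtection = pushEmptyProtection;
    }

    /** Stores the incoming snapshot unless protection is on and the snapshot is invalid. */
    ServiceSnapshot process(ServiceSnapshot incoming) {
        ServiceSnapshot old = cache.get(incoming.key);
        if (pushEmptyProtection && !incoming.isValid()) {
            return old; // ignore the empty push and keep whatever was cached
        }
        cache.put(incoming.key, incoming);
        return incoming;
    }

    ServiceSnapshot get(String key) {
        return cache.get(key);
    }

    public static void main(String[] args) {
        PushCacheSketch sketch = new PushCacheSketch(true);
        sketch.process(new ServiceSnapshot("demo", 1L, Arrays.asList("10.0.0.1:8080")));
        // An empty push arrives; with protection on, the cached hosts survive.
        sketch.process(new ServiceSnapshot("demo", 2L, Collections.<String>emptyList()));
        System.out.println(sketch.get("demo").hosts);
    }
}
```

The point of the guard is that a transient empty or malformed push should not wipe out a working local service list.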

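In summary, creating a NamingService does the following:

1. Creates the proxy object that performs remote operations.

2. Creates the scheduled heartbeat task, which periodically sends heartbeats to the server.

3. Creates the subscription tasks, which keep the local service list in sync with the server.

A. Creates the communication object (a UDP DatagramSocket rather than NIO) on which the server pushes changes.

B. Creates the scheduled thread pools. The updater pool pulls service information from the server at intervals, and the push-receiver thread loops on the UDP socket. Service information obtained either way is deserialized and stored in the local cache (Map<String, ServiceInfo> serviceInfoMap).

C. When service information changes, the new data is stored in serviceInfoMap.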

1.2.2. Service Registration

Following the registration call in the example leads to NamingService.registerInstance() in nacos-api, and from there to its implementation in com.alibaba.nacos.client.naming.NacosNamingService:

    /**
     * Registers a service instance.
     *
     * @param serviceName service name
     * @param ip          instance IP
     * @param port        instance port
     * @param clusterName cluster name
     * @throws NacosException
     */
    @Override
    public void registerInstance(String serviceName, String ip, int port, String clusterName) throws NacosException {
        registerInstance(serviceName, Constants.DEFAULT_GROUP, ip, port, clusterName);
    }

    @Override
    public void registerInstance(String serviceName, String groupName, String ip, int port, String clusterName)
            throws NacosException {
        // Set the instance IP and port; the default weight is 1.0
        Instance instance = new Instance();
        instance.setIp(ip);
        instance.setPort(port);
        instance.setWeight(1.0);
        instance.setClusterName(clusterName);
        // Register the instance
        registerInstance(serviceName, groupName, instance);
    }

    @Override
    public void registerInstance(String serviceName, Instance instance) throws NacosException {
        registerInstance(serviceName, Constants.DEFAULT_GROUP, instance);
    }

    /**
     * Registers an instance.
     *
     * @param serviceName name of service
     * @param groupName   group of service
     * @param instance    instance to register
     * @throws NacosException
     */
    @Override
    public void registerInstance(String serviceName, String groupName, Instance instance) throws NacosException {
        NamingUtils.checkInstanceIsLegal(instance);
        String groupedServiceName = NamingUtils.getGroupedName(serviceName, groupName);
        // isEphemeral() tells whether the instance is ephemeral or persistent.
        // An ephemeral instance is not persisted on the Nacos server and is kept alive by heartbeats;
        // if no heartbeat arrives for a while, the Nacos server removes it.
        if (instance.isEphemeral()) {
            // Schedule a heartbeat task for the registered instance; it reports every 5 s by default.
            // The task is keyed by the grouped service name (service name combined with group name).
            BeatInfo beatInfo = beatReactor.buildBeatInfo(groupedServiceName, instance);
            beatReactor.addBeatInfo(groupedServiceName, beatInfo);
        }
        // Register with the server; serverProxy was created together with the NamingService
        // and performs the remote call
        serverProxy.registerService(groupedServiceName, groupName, instance);
    }

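Registration does two main things:

First, it sets up a scheduled task for the registered service that periodically reports a heartbeat to the server.

Second, it registers the service with the server.

1: Start a scheduled task that reports every 5 s by default. If the server answers that the instance is fine, nothing else happens; otherwise the instance is registered again.

2: Send an HTTP request to the registry server's registration endpoint to register the service.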
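The first of the two steps, keeping one heartbeat task per registered instance and cancelling it on deregistration, can be sketched with the JDK scheduler alone. This is an illustration under stated assumptions, not the BeatReactor implementation: `BeatRegistrySketch` and its methods are hypothetical, the `#`-joined key layout and the `DEFAULT_GROUP@@` prefix in the usage are assumptions, and `scheduleAtFixedRate` is used purely for brevity.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

final class BeatRegistrySketch {
    private final ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor(r -> {
        Thread thread = new Thread(r, "beat-sketch");
        thread.setDaemon(true); // do not keep the JVM alive just for heartbeats
        return thread;
    });
    private final Map<String, ScheduledFuture<?>> beats = new ConcurrentHashMap<>();

    /** Assumed key layout: grouped service name, IP, and port joined with '#'. */
    static String key(String groupedServiceName, String ip, int port) {
        return groupedServiceName + "#" + ip + "#" + port;
    }

    /** Schedules sendBeat at a fixed period, replacing any task registered under the same key. */
    void addBeat(String groupedServiceName, String ip, int port, Runnable sendBeat, long periodMillis) {
        ScheduledFuture<?> task =
                scheduler.scheduleAtFixedRate(sendBeat, periodMillis, periodMillis, TimeUnit.MILLISECONDS);
        ScheduledFuture<?> previous = beats.put(key(groupedServiceName, ip, port), task);
        if (previous != null) {
            previous.cancel(false);
        }
    }

    /** Cancels the heartbeat for the instance; returns false when none was registered. */
    boolean removeBeat(String groupedServiceName, String ip, int port) {
        ScheduledFuture<?> task = beats.remove(key(groupedServiceName, ip, port));
        if (task == null) {
            return false;
        }
        task.cancel(false);
        return true;
    }

    int size() {
        return beats.size();
    }

    public static void main(String[] args) {
        BeatRegistrySketch registry = new BeatRegistrySketch();
        // 5000 ms mirrors the 5 s default mentioned in the text.
        registry.addBeat("DEFAULT_GROUP@@nacos.test.3", "11.11.11.11", 8888,
                () -> System.out.println("beat"), 5000L);
        System.out.println(registry.size());
        registry.removeBeat("DEFAULT_GROUP@@nacos.test.3", "11.11.11.11", 8888);
        System.out.println(registry.size());
    }
}
```

Keying the task by service, IP, and port is what lets deregistration find and stop exactly the heartbeat that registration started.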

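The code above shows the scheduled task being added but not the remote request itself. serverProxy.registerService(), shown below, first packs the request parameters and then calls reqApi(), which in turn ends up calling callServer():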

    public void registerService(String serviceName, String groupName, Instance instance) throws NacosException {

        NAMING_LOGGER.info("[REGISTER-SERVICE] {} registering service {} with instance: {}", namespaceId, serviceName,
                instance);

        // Pack the HTTP request parameters
        final Map<String, String> params = new HashMap<String, String>(16);
        params.put(CommonParams.NAMESPACE_ID, namespaceId);
        params.put(CommonParams.SERVICE_NAME, serviceName);
        params.put(CommonParams.GROUP_NAME, groupName);
        params.put(CommonParams.CLUSTER_NAME, instance.getClusterName());
        params.put("ip", instance.getIp());
        params.put("port", String.valueOf(instance.getPort()));
        params.put("weight", String.valueOf(instance.getWeight()));
        params.put("enable", String.valueOf(instance.isEnabled()));
        params.put("healthy", String.valueOf(instance.isHealthy()));
        params.put("ephemeral", String.valueOf(instance.isEphemeral()));
        params.put("metadata", JacksonUtils.toJson(instance.getMetadata()));

        // Send the HTTP request
        reqApi(UtilAndComs.nacosUrlInstance, params, HttpMethod.POST);
    }

    /**
     * Request api.
     *
     * @param api     api
     * @param params  parameters
     * @param body    body
     * @param servers servers
     * @param method  http method
     * @return result
     * @throws NacosException nacos exception
     */
    public String reqApi(String api, Map<String, String> params, Map<String, String> body, List<String> servers,
            String method) throws NacosException {

        params.put(CommonParams.NAMESPACE_ID, getNamespaceId());

        if (CollectionUtils.isEmpty(servers) && StringUtils.isBlank(nacosDomain)) {
            throw new NacosException(NacosException.INVALID_PARAM, "no server available");
        }

        NacosException exception = new NacosException();

        if (StringUtils.isNotBlank(nacosDomain)) {
            for (int i = 0; i < maxRetry; i++) {
                try {
                    // Entry point of the remote call
                    return callServer(api, params, body, nacosDomain, method);
                } catch (NacosException e) {
                    exception = e;
                    if (NAMING_LOGGER.isDebugEnabled()) {
                        NAMING_LOGGER.debug("request {} failed.", nacosDomain, e);
                    }
                }
            }
        } else {
            Random random = new Random(System.currentTimeMillis());
            int index = random.nextInt(servers.size());

            for (int i = 0; i < servers.size(); i++) {
                String server = servers.get(index);
                try {
                    return callServer(api, params, body, server, method);
                } catch (NacosException e) {
                    exception = e;
                    if (NAMING_LOGGER.isDebugEnabled()) {
                        NAMING_LOGGER.debug("request {} failed.", server, e);
                    }
                }
                index = (index + 1) % servers.size();
            }
        }

        NAMING_LOGGER.error("request: {} failed, servers: {}, code: {}, msg: {}", api, servers, exception.getErrCode(),
                exception.getErrMsg());

        throw new NacosException(exception.getErrCode(),
                "failed to req API:" + api + " after all servers(" + servers + ") tried: " + exception.getMessage());
    }
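The server-list branch of reqApi() is a small failover loop: pick a random starting server, try each server once in round-robin order, and give up only after all of them have failed. A minimal standalone sketch of that pattern follows; `ServerFailoverSketch` and `callWithFailover` are hypothetical names, and the `Function` argument stands in for callServer().

```java
import java.util.Arrays;
import java.util.List;
import java.util.Random;
import java.util.function.Function;

final class ServerFailoverSketch {

    /**
     * Tries call on each server once, starting at startIndex and wrapping around.
     * Returns the first successful result; throws IllegalStateException wrapping
     * the last failure when every server fails.
     */
    static <T> T callWithFailover(List<String> servers, int startIndex, Function<String, T> call) {
        if (servers == null || servers.isEmpty()) {
            throw new IllegalArgumentException("no server available");
        }
        RuntimeException last = null;
        int index = Math.floorMod(startIndex, servers.size());
        for (int i = 0; i < servers.size(); i++) {
            String server = servers.get(index);
            try {
                return call.apply(server);
            } catch (RuntimeException e) {
                last = e; // remember the failure and move on to the next server
            }
            index = (index + 1) % servers.size();
        }
        throw new IllegalStateException("all servers failed: " + servers, last);
    }

    public static void main(String[] args) {
        List<String> servers = Arrays.asList("10.0.0.1:8848", "10.0.0.2:8848", "10.0.0.3:8848");
        int start = new Random().nextInt(servers.size());
        String reply = callWithFailover(servers, start, server -> {
            if (server.startsWith("10.0.0.1")) {
                throw new IllegalStateException("down: " + server); // simulate one dead node
            }
            return "ok from " + server;
        });
        System.out.println(reply);
    }
}
```

Starting at a random index spreads load across the cluster, while the wrap-around guarantees that every server gets exactly one attempt per request.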

    /**
     * Performs the remote call.
     */
    public String callServer(String api, Map<String, String> params, Map<String, String> body, String curServer,
            String method) throws NacosException {
        long start = System.currentTimeMillis();
        long end = 0;
        injectSecurityInfo(params);
        // Build the request headers
        Header header = builderHeader();

        // Check whether the server address already carries an http:// or https:// prefix
        String url;
        if (curServer.startsWith(UtilAndComs.HTTPS) || curServer.startsWith(UtilAndComs.HTTP)) {
            url = curServer + api;
        } else {
            if (!IPUtil.containsPort(curServer)) {
                curServer = curServer + IPUtil.IP_PORT_SPLITER + serverPort;
            }
            url = NamingHttpClientManager.getInstance().getPrefix() + curServer + api;
        }

        try {
            // Send the remote request and obtain the result
            HttpRestResult<String> restResult = nacosRestTemplate
                    .exchangeForm(url, header, Query.newInstance().initParams(params), body, method, String.class);
            end = System.currentTimeMillis();

            MetricsMonitor.getNamingRequestMonitor(method, url, String.valueOf(restResult.getCode()))
                    .observe(end - start);

            // Interpret the result
            if (restResult.ok()) {
                return restResult.getData();
            }
            if (HttpStatus.SC_NOT_MODIFIED == restResult.getCode()) {
                return StringUtils.EMPTY;
            }
            throw new NacosException(restResult.getCode(), restResult.getMessage());
        } catch (Exception e) {
            NAMING_LOGGER.error("[NA] failed to request", e);
            throw new NacosException(NacosException.SERVER_ERROR, e);
        }
    }
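The URL assembly in callServer() follows three rules: keep an address that already has a scheme, append the default port when none is given, and prepend the scheme prefix. The sketch below restates those rules in plain Java. `ServerUrlSketch` and `buildUrl` are hypothetical, and the `contains(":")` port check is a simplifying assumption that would misread IPv6 literals.

```java
final class ServerUrlSketch {
    static final String HTTP = "http://";
    static final String HTTPS = "https://";

    /**
     * Builds the request URL for api on server.
     * defaultPort is appended only when the address has neither a scheme nor a port.
     */
    static String buildUrl(String server, String api, int defaultPort, boolean tls) {
        if (server.startsWith(HTTPS) || server.startsWith(HTTP)) {
            return server + api; // the address is already a full base URL
        }
        // Simplification: any ':' is taken to mean that a port is present.
        String hostAndPort = server.contains(":") ? server : server + ":" + defaultPort;
        return (tls ? HTTPS : HTTP) + hostAndPort + api;
    }

    public static void main(String[] args) {
        System.out.println(buildUrl("127.0.0.1", "/nacos/v1/ns/instance", 8848, false));
        System.out.println(buildUrl("127.0.0.1:9000", "/nacos/v1/ns/instance", 8848, false));
        System.out.println(buildUrl("https://nacos.example.com", "/nacos/v1/ns/instance", 8848, false));
    }
}
```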

The object that sends the remote HTTP request is NacosRestTemplate, which is a thin wrapper around plain HTTP requests.

    /**
     * Execute the HTTP method to the given URI template, writing the given request entity to the request, and returns
     * the response as {@link HttpRestResult}.
     *
     * @param url          url
     * @param header       http header param
     * @param query        http query param (wraps the query conditions)
     * @param bodyValues   http body param
     * @param httpMethod   http method
     * @param responseType return type
     * @return {@link HttpRestResult}
     * @throws Exception ex
     */
    public <T> HttpRestResult<T> exchangeForm(String url, Header header, Query query, Map<String, String> bodyValues,
            String httpMethod, Type responseType) throws Exception {
        RequestHttpEntity requestHttpEntity = new RequestHttpEntity(
                header.setContentType(MediaType.APPLICATION_FORM_URLENCODED), query, bodyValues);
        return execute(url, httpMethod, requestHttpEntity, responseType);
    }

The remote call is executed over HTTP:

    /**
     * Executes the remote request.
     *
     * @param url
     * @param httpMethod
     * @param requestEntity
     * @param responseType
     * @param <T>
     * @return
     * @throws Exception
     */
    @SuppressWarnings("unchecked")
    private <T> HttpRestResult<T> execute(String url, String httpMethod, RequestHttpEntity requestEntity,
            Type responseType) throws Exception {
        // url: http://127.0.0.1:8848/nacos/v1/ns/instance
        URI uri = HttpUtils.buildUri(url, requestEntity.getQuery());
        if (logger.isDebugEnabled()) {
            logger.debug("HTTP method: {}, url: {}, body: {}", httpMethod, uri, requestEntity.getBody());
        }

        ResponseHandler<T> responseHandler = super.selectResponseHandler(responseType);
        HttpClientResponse response = null;
        try {
            // HttpClientRequest performs the remote call
            response = this.requestClient().execute(uri, httpMethod, requestEntity);
            return responseHandler.handle(response);
        } finally {
            if (response != null) {
                response.close();
            }
        }
    }

    /**
     * Returns the HttpClientRequest to use.
     */
    private HttpClientRequest requestClient() {
        if (CollectionUtils.isNotEmpty(interceptors)) {
            if (logger.isDebugEnabled()) {
                logger.debug("Execute via interceptors :{}", interceptors);
            }
            return new InterceptingHttpClientRequest(requestClient, interceptors.iterator());
        }
        return requestClient;
    }

Here the remote call is issued by JdkHttpClientRequest:

    @Override
    public HttpClientResponse execute(URI uri, String httpMethod, RequestHttpEntity requestHttpEntity)
            throws Exception {
        final Object body = requestHttpEntity.getBody();
        final Header headers = requestHttpEntity.getHeaders();
        replaceDefaultConfig(requestHttpEntity.getHttpClientConfig());

        HttpURLConnection conn = (HttpURLConnection) uri.toURL().openConnection();
        Map<String, String> headerMap = headers.getHeader();
        if (headerMap != null && headerMap.size() > 0) {
            for (Map.Entry<String, String> entry : headerMap.entrySet()) {
                conn.setRequestProperty(entry.getKey(), entry.getValue());
            }
        }

        conn.setConnectTimeout(this.httpClientConfig.getConTimeOutMillis());
        conn.setReadTimeout(this.httpClientConfig.getReadTimeOutMillis());
        conn.setRequestMethod(httpMethod);
        if (body != null && !"".equals(body)) {
            String contentType = headers.getValue(HttpHeaderConsts.CONTENT_TYPE);
            String bodyStr = JacksonUtils.toJson(body);
            if (MediaType.APPLICATION_FORM_URLENCODED.equals(contentType)) {
                Map<String, String> map = JacksonUtils.toObj(bodyStr, HashMap.class);
                bodyStr = HttpUtils.encodingParams(map, headers.getCharset());
            }
            if (bodyStr != null) {
                conn.setDoOutput(true);
                byte[] b = bodyStr.getBytes();
                conn.setRequestProperty("Content-Length", String.valueOf(b.length));
                // Open the connection's output stream and write the request body
                OutputStream outputStream = conn.getOutputStream();
                outputStream.write(b, 0, b.length);
                outputStream.flush();
                IoUtils.closeQuietly(outputStream);
            }
        }
        conn.connect();
        return new JdkHttpClientResponse(conn);
    }

The remote call targets the Nacos server. The HTTP request goes to the URL http://127.0.0.1:8848/nacos/v1/ns/instance, where /nacos is the server's context path, set in the nacos-console configuration file:

    #*************** Spring Boot Related Configurations ***************#
    ### Default web context path:
    server.servlet.contextPath=/nacos
    ### Default web server port:
    server.port=8848

The POST request targets /v1/ns/instance, a Nacos server endpoint implemented in the nacos-naming module.

HealthController serves the health-check endpoints:

    /**
     * Health status related operation controller.
     *
     * @author nkorange
     * @author nanamikon
     * @since 0.8.0
     */
    @RestController("namingHealthController")
    @RequestMapping(UtilsAndCommons.NACOS_NAMING_CONTEXT + "/health")
    public class HealthController {
        … …
    }

InstanceController serves instance registration:

    /**
     * Instance operation controller.
     *
     * @author nkorange
     */
    @RestController
    // /v1/ns/instance
    @RequestMapping(UtilsAndCommons.NACOS_NAMING_CONTEXT + "/instance")
    public class InstanceController {

        /**
         * Register new instance.
         *
         * @param request http request
         * @return 'ok' if success
         * @throws Exception any error during register
         */
        @CanDistro // Distro protocol (consistency protocol for ephemeral data)
        @PostMapping
        @Secured(parser = NamingResourceParser.class, action = ActionTypes.WRITE)
        public String register(HttpServletRequest request) throws Exception {

            final String namespaceId = WebUtils
                    .optional(request, CommonParams.NAMESPACE_ID, Constants.DEFAULT_NAMESPACE_ID);
            final String serviceName = WebUtils.required(request, CommonParams.SERVICE_NAME);
            NamingUtils.checkServiceNameFormat(serviceName);

            final Instance instance = parseInstance(request);

            // Register the service instance
            serviceManager.registerInstance(namespaceId, serviceName, instance);
            return "ok";
        }
    }

1.2.3. Service Discovery

Following the discovery call in the example leads to NamingService.getAllInstances() in nacos-api and to its implementation, com.alibaba.nacos.client.naming.NacosNamingService.getAllInstances():

    @Override
    public List<Instance> getAllInstances(String serviceName, String groupName, List<String> clusters,
            boolean subscribe) throws NacosException {

        ServiceInfo serviceInfo;
        if (subscribe) {
            // With subscription enabled, read the service list from the local serviceInfoMap;
            // subscription keeps serviceInfoMap updated periodically
            serviceInfo = hostReactor.getServiceInfo(NamingUtils.getGroupedName(serviceName, groupName),
                    StringUtils.join(clusters, ","));
        } else {
            // Without subscription, query the Nacos server directly with a remote call
            serviceInfo = hostReactor
                    .getServiceInfoDirectlyFromServer(NamingUtils.getGroupedName(serviceName, groupName),
                            StringUtils.join(clusters, ","));
        }
        List<Instance> list;
        if (serviceInfo == null || CollectionUtils.isEmpty(list = serviceInfo.getHosts())) {
            return new ArrayList<Instance>();
        }
        return list;
    }

The code above calls hostReactor.getServiceInfo():

    public ServiceInfo getServiceInfo(final String serviceName, final String clusters) {

        NAMING_LOGGER.debug("failover-mode: " + failoverReactor.isFailoverSwitch());
        String key = ServiceInfo.getKey(serviceName, clusters);
        if (failoverReactor.isFailoverSwitch()) {
            return failoverReactor.getService(key);
        }

        /* 1. Look in the local cache first; on the first call after startup the cache has no entry */
        ServiceInfo serviceObj = getServiceInfo0(serviceName, clusters);

        if (null == serviceObj) {
            /* Build the service object */
            serviceObj = new ServiceInfo(serviceName, clusters);
            /* Put it into the cache */
            serviceInfoMap.put(serviceObj.getKey(), serviceObj);
            /* Mark the service as being updated */
            updatingMap.put(serviceName, new Object());
            /* Fetch from the server right away and refresh the local cache, including stale entries */
            updateServiceNow(serviceName, clusters);
            updatingMap.remove(serviceName);

        } else if (updatingMap.containsKey(serviceName)) {

            if (UPDATE_HOLD_INTERVAL > 0) {
                updateServiceNow(serviceName, clusters);
                // hold a moment waiting for update finish
                synchronized (serviceObj) {
                    try {
                        serviceObj.wait(UPDATE_HOLD_INTERVAL);
                    } catch (InterruptedException e) {
                        NAMING_LOGGER
                                .error("[getServiceInfo] serviceName:" + serviceName + ", clusters:" + clusters, e);
                    }
                }
            }
        }

        /* 2. Start the scheduled update task */
        scheduleUpdateIfAbsent(serviceName, clusters);

        return serviceInfoMap.get(serviceObj.getKey());
    }
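Stripped of failover mode and the wait/notify handshake, getServiceInfo() is a cache-first lookup: return the cached entry when there is one, go to the server only on a miss, and let later pushes or periodic refreshes overwrite the entry. The following minimal sketch shows just that shape. `CacheFirstLookupSketch`, `get`, and `refresh` are hypothetical names, and the `Function` passed to the constructor stands in for the remote query.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

final class CacheFirstLookupSketch<V> {
    private final Map<String, V> cache = new ConcurrentHashMap<>();
    private final Function<String, V> remoteFetch;

    CacheFirstLookupSketch(Function<String, V> remoteFetch) {
        this.remoteFetch = remoteFetch;
    }

    /** Returns the cached value, calling the remote source only on a cache miss. */
    V get(String key) {
        V cached = cache.get(key);
        if (cached != null) {
            return cached;
        }
        V fetched = remoteFetch.apply(key);
        if (fetched != null) {
            cache.put(key, fetched);
        }
        return fetched;
    }

    /** Called by a push or a periodic refresh to overwrite the cached value. */
    void refresh(String key, V value) {
        cache.put(key, value);
    }

    public static void main(String[] args) {
        AtomicInteger remoteCalls = new AtomicInteger();
        CacheFirstLookupSketch<String> lookup = new CacheFirstLookupSketch<>(key -> {
            remoteCalls.incrementAndGet();
            return "instances-of-" + key;
        });
        lookup.get("nacos.test.3"); // miss: goes to the remote source
        lookup.get("nacos.test.3"); // hit: served from the local cache
        System.out.println(remoteCalls.get());
    }
}
```

Reading from the cache on the hot path is what keeps discovery cheap for callers, at the cost of the data being only as fresh as the last push or refresh.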

The method first calls getServiceInfo0() to read from the local cache:

    private ServiceInfo getServiceInfo0(String serviceName, String clusters) {
        // Build the cache key; serviceName here is the grouped name (group plus service name)
        String key = ServiceInfo.getKey(serviceName, clusters);
        // Read the cached entry; its value is kept up to date by the tasks the NamingService started
        return serviceInfoMap.get(key);
    }

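If the cache has no entry, the client creates one, puts it in the local cache, and fetches the latest data from Nacos.

updateServiceNow(serviceName, clusters) actively pulls the current data from the remote server: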

    private void updateServiceNow(String serviceName, String clusters) {
        try {
            updateService(serviceName, clusters);
        } catch (NacosException e) {
            NAMING_LOGGER.error("[NA] failed to update serviceName: " + serviceName, e);
        }
    }

That ends up in updateService(), which performs the remote request and processes the data:

    /**
     * Update service now.
     *
     * @param serviceName service name
     * @param clusters    clusters
     */
    public void updateService(String serviceName, String clusters) throws NacosException {
        // Read the current entry from the local cache
        ServiceInfo oldService = getServiceInfo0(serviceName, clusters);
        try {
            // Query the server over HTTP through the proxy; the reply lists the service's providers (IP, port, and so on)
            String result = serverProxy.queryList(serviceName, clusters, pushReceiver.getUdpPort(), false);
            if (StringUtils.isNotEmpty(result)) {
                // Deserialize the service data and store it in serviceInfoMap
                processServiceJson(result);
            }
        } finally {
            if (oldService != null) {
                synchronized (oldService) {
                    oldService.notifyAll();
                }
            }
        }
    }

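getServiceInfo0() was covered above, and processServiceJson() was covered under service creation.

Back at the start of getAllInstances(): when subscription is not enabled, the client fetches from the server, issuing a remote call to Nacos through getServiceInfoDirectlyFromServer():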

    /**
     * Fetches service information directly from the Nacos server.
     */
    public ServiceInfo getServiceInfoDirectlyFromServer(final String serviceName, final String clusters)
            throws NacosException {
        String result = serverProxy.queryList(serviceName, clusters, 0, false);
        if (StringUtils.isNotEmpty(result)) {
            return JacksonUtils.toObj(result, ServiceInfo.class);
        }
        return null;
    }

Again it is the proxy object created by the NamingService that issues the remote call, this time through queryList():

    /**
     * Query instance list.
     */
    public String queryList(String serviceName, String clusters, int udpPort, boolean healthyOnly)
            throws NacosException {

        final Map<String, String> params = new HashMap<String, String>(8);
        params.put(CommonParams.NAMESPACE_ID, namespaceId);
        params.put(CommonParams.SERVICE_NAME, serviceName);
        params.put("clusters", clusters);
        params.put("udpPort", String.valueOf(udpPort));
        params.put("clientIP", NetUtils.localIP());
        params.put("healthyOnly", String.valueOf(healthyOnly));

        return reqApi(UtilAndComs.nacosUrlBase + "/instance/list", params, HttpMethod.GET);
    }

reqApi() was covered in the registration section.

1.2.4. Service Deregistration

Following the deregistration call in the example leads to NamingService.deregisterInstance() in nacos-api and to its implementation, NacosNamingService.deregisterInstance():

    @Override
    public void deregisterInstance(String serviceName, String groupName, String ip, int port, String clusterName)
            throws NacosException {
        // Build the instance
        Instance instance = new Instance();
        instance.setIp(ip);
        instance.setPort(port);
        instance.setClusterName(clusterName);
        // Deregister it
        deregisterInstance(serviceName, groupName, instance);
    }

    @Override
    public void deregisterInstance(String serviceName, String groupName, Instance instance) throws NacosException {
        if (instance.isEphemeral()) {
            // Remove the scheduled heartbeat task
            beatReactor.removeBeatInfo(NamingUtils.getGroupedName(serviceName, groupName), instance.getIp(),
                    instance.getPort());
        }
        // Send the remote request that takes the instance offline
        serverProxy.deregisterService(NamingUtils.getGroupedName(serviceName, groupName), instance);
    }

The proxy then sends a remote DELETE request:

    /**
     * deregister instance from a service.
     *
     * @param serviceName name of service
     * @param instance    instance
     * @throws NacosException nacos exception
     */
    public void deregisterService(String serviceName, Instance instance) throws NacosException {

        NAMING_LOGGER
                .info("[DEREGISTER-SERVICE] {} deregistering service {} with instance: {}", namespaceId, serviceName,
                        instance);

        final Map<String, String> params = new HashMap<String, String>(8);
        params.put(CommonParams.NAMESPACE_ID, namespaceId);
        params.put(CommonParams.SERVICE_NAME, serviceName);
        params.put(CommonParams.CLUSTER_NAME, instance.getClusterName());
        params.put("ip", instance.getIp());
        params.put("port", String.valueOf(instance.getPort()));
        params.put("ephemeral", String.valueOf(instance.isEphemeral()));

        reqApi(UtilAndComs.nacosUrlInstance, params, HttpMethod.DELETE);
    }

On the server, this request reaches the DELETE handler of InstanceController in the nacos-naming module, which removes the instance:

    /**
     * Deregister instances.
     *
     * @param request http request
     * @return 'ok' if success
     * @throws Exception any error during deregister
     */
    @CanDistro
    @DeleteMapping
    @Secured(parser = NamingResourceParser.class, action = ActionTypes.WRITE)
    public String deregister(HttpServletRequest request) throws Exception {
        Instance instance = getIpAddress(request);
        String namespaceId = WebUtils.optional(request, CommonParams.NAMESPACE_ID, Constants.DEFAULT_NAMESPACE_ID);
        String serviceName = WebUtils.required(request, CommonParams.SERVICE_NAME);
        NamingUtils.checkServiceNameFormat(serviceName);

        Service service = serviceManager.getService(namespaceId, serviceName);
        if (service == null) {
            Loggers.SRV_LOG.warn("remove instance from non-exist service: {}", serviceName);
            return "ok";
        }
        // Remove the instance data
        serviceManager.removeInstance(namespaceId, serviceName, instance.isEphemeral(), instance);
        return "ok";
    }

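Deregistration is simple and mirrors registration. It also does two things. First, it stops sending heartbeats. Second, it calls the server's deregistration endpoint.

1.2.5. Service Subscription

The subscription example first creates a thread pool and then hands it to a listener, and the listener receives events for the instances of the specified service: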

    // Service subscription
    Executor executor = new ThreadPoolExecutor(1, 1, 0L, TimeUnit.MILLISECONDS,
            new LinkedBlockingQueue<Runnable>(), new ThreadFactory() {
                @Override
                public Thread newThread(Runnable r) {
                    Thread thread = new Thread(r);
                    thread.setName("test-thread");
                    return thread;
                }
            });

    naming.subscribe("nacos.test.3", new AbstractEventListener() {

        // EventListener onEvent is sync to handle, If process too low in onEvent, maybe block other onEvent callback.
        // So you can override getExecutor() to async handle event.
        @Override
        public Executor getExecutor() {
            return executor;
        }

        // Read the service instances delivered with the event
        @Override
        public void onEvent(Event event) {
            System.out.println(((NamingEvent) event).getServiceName());
            System.out.println(((NamingEvent) event).getInstances());
        }
    });

Following the subscribe call in the example, we reach NamingService.subscribe() in nacos-api and then its implementation, NacosNamingService.subscribe(). The code is as follows:

|

public void subscribe(String serviceName, String clusters, EventListener eventListener) {
    // Register the listener
    notifier.registerListener(serviceName, clusters, eventListener);
    // Fetch and refresh the service instances
    getServiceInfo(serviceName, clusters);
}

|

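At this point the listener is registered. Registering a listener means adding the listener object to the listenerMap collection; the listener's onEvent method can then read the instance information. The code is as follows: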

|

public void registerListener(String serviceName, String clusters, EventListener listener) {
    String key = ServiceInfo.getKey(serviceName, clusters);
    ConcurrentHashSet<EventListener> eventListeners = listenerMap.get(key);
    if (eventListeners == null) {
        synchronized (lock) {
            eventListeners = listenerMap.get(key);
            if (eventListeners == null) {
                eventListeners = new ConcurrentHashSet<EventListener>();
                listenerMap.put(key, eventListeners);
            }
        }
    }
    // Add the listener to the set; its onEvent method can then read the matching instances
    eventListeners.add(listener);
}

|

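getServiceInfo(serviceName, clusters) fetches the service instances. It reads from the local cache first and falls back to the server when the cache has no entry, as already described in the service discovery section.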
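The listener registration described above can be sketched in a few lines. This is a minimal illustration, not the Nacos implementation: the class ListenerRegistrySketch, the "@@" key format, and the use of plain string lists in place of Instance objects are all assumptions made for the example. It uses computeIfAbsent in place of the double-checked lock, which gives the same "create the set once per key" behaviour.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Consumer;

// Hypothetical stand-in for the notifier's listenerMap; not a Nacos class.
final class ListenerRegistrySketch {

    // key = serviceName + "@@" + clusters (assumed key format, for illustration only)
    private final Map<String, Set<Consumer<List<String>>>> listenerMap = new ConcurrentHashMap<>();

    void register(String serviceName, String clusters, Consumer<List<String>> listener) {
        // computeIfAbsent creates the listener set atomically, once per key
        listenerMap.computeIfAbsent(key(serviceName, clusters), k -> ConcurrentHashMap.newKeySet())
                .add(listener);
    }

    // Notify every listener registered for the key; returns how many were notified.
    int publish(String serviceName, String clusters, List<String> instances) {
        Set<Consumer<List<String>>> listeners = listenerMap.get(key(serviceName, clusters));
        if (listeners == null) {
            return 0;
        }
        listeners.forEach(listener -> listener.accept(instances));
        return listeners.size();
    }

    private static String key(String serviceName, String clusters) {
        return serviceName + "@@" + clusters;
    }

    public static void main(String[] args) {
        ListenerRegistrySketch registry = new ListenerRegistrySketch();
        registry.register("nacos.test.3", "DEFAULT", instances -> System.out.println("changed: " + instances));
        registry.publish("nacos.test.3", "DEFAULT", List.of("2.2.2.2:9999"));
    }
}
```

A listener only sees events for the exact service and cluster key it registered under, which is why the key is built the same way on both the register and the publish path.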

1.3. Server-Side Workflow

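The main job of the registry server is to handle service registration, service discovery, and service deregistration requests from clients. Beyond these client interactions, the server has a more important responsibility, tied to the AP and CP trade-offs that come up constantly in distributed systems. As a cluster, Nacos implements both AP and CP: AP is provided by the Distro protocol, which Nacos implements itself, while CP is provided by the Raft protocol, which involves operations such as heartbeats and leader election.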

Let us look at how the registry server receives a service registration from a client.

1.3.1. Registration Handling

We start with the Nacos utility class WebUtils, which lives in the nacos-core module. It converts request parameters and provides two frequently used methods, required() and optional():

|

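required() looks up the parameter named key in the HttpServletRequest and converts it to UTF-8 encoding.

optional() builds on required() by adding a default value: if the parameter cannot be found, the default value is returned.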

|

The code is as follows:

|

/**
 * required() looks up the parameter named key in the HttpServletRequest and converts it to UTF-8 encoding.
 */
public static String required(final HttpServletRequest req, final String key) {
    String value = req.getParameter(key);
    if (StringUtils.isEmpty(value)) {
        throw new IllegalArgumentException("Param '" + key + "' is required.");
    }
    String encoding = req.getParameter("encoding");
    return resolveValue(value, encoding);
}

/**
 * optional() builds on required() by adding a default value: if the parameter cannot be found, the default is returned.
 */
public static String optional(final HttpServletRequest req, final String key, final String defaultValue) {
    if (!req.getParameterMap().containsKey(key) || req.getParameterMap().get(key)[0] == null) {
        return defaultValue;
    }
    String value = req.getParameter(key);
    if (StringUtils.isBlank(value)) {
        return defaultValue;
    }
    String encoding = req.getParameter("encoding");
    return resolveValue(value, encoding);
}

|
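To see the required/optional contract without a servlet container, here is a small sketch that applies the same rules to a plain Map. The class name ParamUtilSketch is hypothetical, the Map stands in for HttpServletRequest, and the encoding step (resolveValue) is left out on purpose.

```java
import java.util.Map;

// Hypothetical helper mirroring the required/optional contract on a plain Map.
final class ParamUtilSketch {

    // Fails fast when the parameter is missing or empty.
    static String required(Map<String, String> params, String key) {
        String value = params.get(key);
        if (value == null || value.isEmpty()) {
            throw new IllegalArgumentException("Param '" + key + "' is required.");
        }
        return value;
    }

    // Falls back to defaultValue when the parameter is missing or blank.
    static String optional(Map<String, String> params, String key, String defaultValue) {
        String value = params.get(key);
        return (value == null || value.trim().isEmpty()) ? defaultValue : value;
    }

    public static void main(String[] args) {
        Map<String, String> params = Map.of("serviceName", "nacos.test.3");
        System.out.println(required(params, "serviceName"));
        System.out.println(optional(params, "namespaceId", "public"));
    }
}
```

The split matters for the controller methods that follow: the service name is mandatory, so a missing value is rejected immediately, while the namespace id silently falls back to a default.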

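The Nacos server and client communicate over HTTP, so the server must expose HTTP endpoints. The core module depends on the Spring Boot starter, so these endpoints are served by the web server integrated with Spring. Put simply, the server is structured like an ordinary business service, with a controller layer and a service layer.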

以

OpenAPI

作为入口来学习,我们找到 /nacos/v1/ns/instance服务注册接口,在

nacos

–

naming

工程中我们可以看到

InstanceController

正是我们要找的对象,如下图:

处理服务注册,我们直接找对应的

POST

方法即可,代码如下:

|

/**

* Register new instance.

* 接收客户端注册信息

*

*

@param

request

http request

*

@return

‘ok’ if success

*

@throws

Exception any error during register

*/

@CanDistro

@PostMapping

@Secured(parser = NamingResourceParser.

class

, action = ActionTypes.WRITE)

public

String register(HttpServletRequest request)

throws

Exception {

//获取namespaceid,该参数是可选参数

final

String namespaceId = WebUtils.optional(request, CommonParams.NAMESPACE_ID, Constants.DEFAULT_NAMESPACE_ID);

//获取服务名字

final

String serviceName = WebUtils.required(request, CommonParams.SERVICE_NAME);

//校验服务的名字,服务的名字格式为groupName@@serviceName

NamingUtils.checkServiceNameFormat(serviceName);

//创建实例

final

Instance instance = parseInstance(request);

//注册服务

serviceManager.registerInstance(namespaceId, serviceName, instance);

return

“ok”

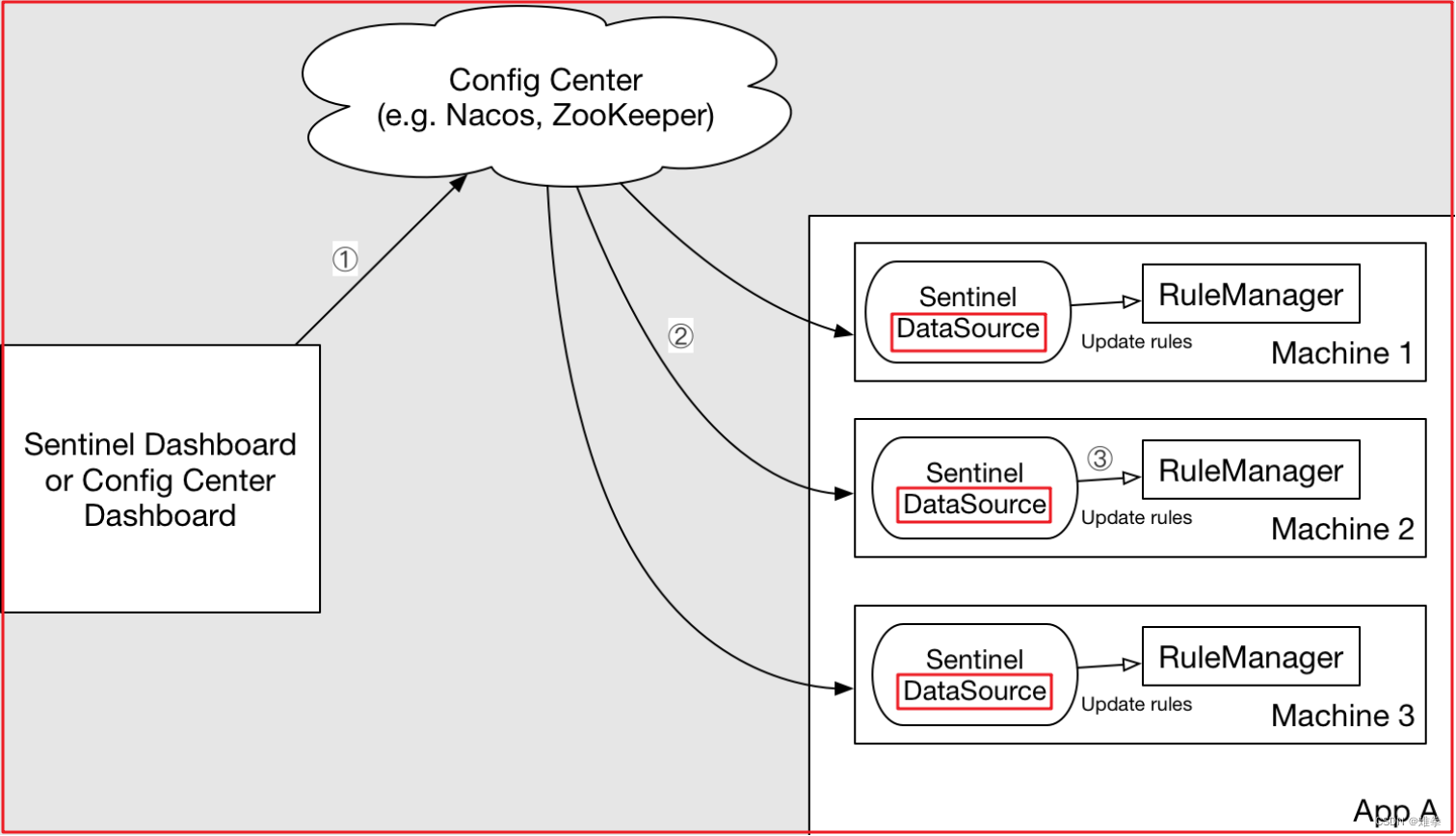

;

}

|

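As shown above, this method receives the client's registration information and validates the parameters. If they are valid, it builds the service instance and registers it in Nacos. The registration method is as follows: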

|

public void

registerInstance(String namespaceId, String serviceName, Instance instance)

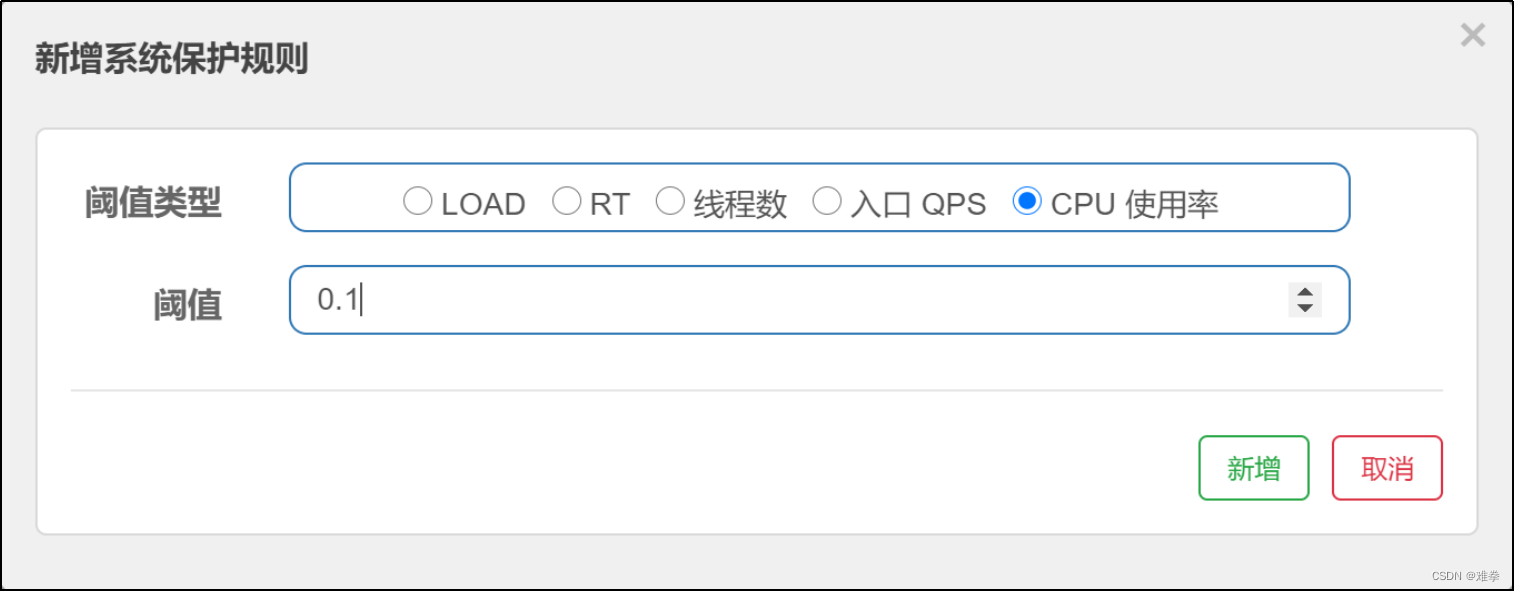

throws

NacosException {

//判断本地缓存中是否存在该命名空间,如果不存在就创建,之后判断该命名空间下是否

//存在该服务,如果不存在就创建空的服务

//如果实例为空,则创建实例,并且会将创建的实例存入到serviceMap集合中

createEmptyService(namespaceId, serviceName, instance.isEphemeral());

//从serviceMap集合中获取创建的实例

Service service = getService(namespaceId, serviceName);

if

(service ==

null

) {

throw new

NacosException(NacosException.INVALID_PARAM,

“service not found, namespace: ”

+ namespaceId +

“, service: ”

+ serviceName);

}

//服务注册,这一步才会把服务的实例信息和服务绑定起来

addInstance(namespaceId, serviceName, instance.isEphemeral(), instance);

}

|

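The registration method first makes sure the service object exists: it checks the local cache and creates the service only if it is not already there. It then registers the instance, binding the instance information to the service, and synchronizes the data to disk. Synchronizing data raises the question of data consistency, so next we look at how Nacos handles data consistency.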
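The two-step flow (create the empty service if needed, then bind the instance) can be sketched with nested maps. This is a simplified illustration under stated assumptions: ServiceRegistrySketch is a hypothetical class, instances are plain "ip:port" strings, and the consistency layer is omitted entirely.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;

// Hypothetical registry: namespaceId -> (serviceName -> instance addresses).
final class ServiceRegistrySketch {

    private final Map<String, Map<String, List<String>>> serviceMap = new ConcurrentHashMap<>();

    void registerInstance(String namespaceId, String serviceName, String address) {
        // Step 1: create the namespace entry and an empty service if they do not exist yet
        List<String> instances = serviceMap
                .computeIfAbsent(namespaceId, ns -> new ConcurrentHashMap<>())
                .computeIfAbsent(serviceName, name -> new CopyOnWriteArrayList<>());
        // Step 2: bind the instance to the service (re-registering the same address is a no-op)
        if (!instances.contains(address)) {
            instances.add(address);
        }
    }

    List<String> getInstances(String namespaceId, String serviceName) {
        return serviceMap.getOrDefault(namespaceId, Map.of()).getOrDefault(serviceName, List.of());
    }

    public static void main(String[] args) {
        ServiceRegistrySketch registry = new ServiceRegistrySketch();
        registry.registerInstance("public", "nacos.test.3", "11.11.11.11:8888");
        registry.registerInstance("public", "nacos.test.3", "2.2.2.2:9999");
        System.out.println(registry.getInstances("public", "nacos.test.3"));
    }
}
```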

1.3.2. Introduction to the Distro Consistency Protocol

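Distro is a proprietary protocol from Alibaba, and the popular Nacos service management framework uses it.

Distro is positioned as a consistency protocol for ephemeral data. A protocol of this kind does not need to store data on disk or in a database, because ephemeral data is normally tied to a session with the server: as long as the session exists, the data is not lost.

Distro guarantees that writes always succeed, even when a network partition occurs. When the network recovers, the data of each shard is merged.

The Distro protocol has the following characteristics: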

|

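1: It is a protocol created specifically for registries;

2: The client and the server have two key interactions: service registration and heartbeats;

3: The client registers with the server per service and then sends a heartbeat at regular intervals. Each heartbeat carries the full information of the registered service. From the client's point of view the server nodes are peers, so the node that receives a request is chosen at random;

4: If a request fails, the client switches to another node and resends it;

5: Every server node stores all the data, but each node is responsible for only a subset of the services. On receiving a client write request (registration, heartbeat, deregistration, and so on), a server node checks whether it is responsible for the requested service. If it is, it handles the request; otherwise it hands the request to the responsible node;

6: Each server node actively sends health checks to the other nodes and treats the nodes that respond as healthy;

7: When the server receives a heartbeat for a service that does not exist, it treats the heartbeat as a registration request;

8: If the server receives no heartbeat from a client for a long time, it takes the service offline;

9: After receiving a write request such as a registration or a heartbeat, the responsible node writes the data and returns immediately, then synchronizes the data to the other nodes asynchronously in the background;

10: On a read request, a node returns the data from its local store directly, whether or not that data is the latest.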

|
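Characteristic 5 (each node owns a subset of services and forwards the rest) is easiest to see with a tiny example. The sketch below assumes ownership is decided by hashing the service name onto the list of healthy servers; that rule, the class DistroOwnershipSketch, and the server addresses are assumptions for illustration and are not taken from the code shown in this article.

```java
import java.util.List;

// Illustrative only: class and method names are hypothetical, not Nacos APIs.
final class DistroOwnershipSketch {

    // Assumed rule: hash the service name onto the list of healthy servers.
    static String owner(String serviceName, List<String> healthyServers) {
        int index = Math.floorMod(serviceName.hashCode(), healthyServers.size());
        return healthyServers.get(index);
    }

    // A node handles a write only if it owns the service; otherwise it forwards the request.
    static String handleWrite(String serviceName, List<String> healthyServers, String self) {
        String owner = owner(serviceName, healthyServers);
        return owner.equals(self) ? "handled locally by " + self : "forwarded to " + owner;
    }

    public static void main(String[] args) {
        List<String> servers = List.of("10.0.0.1:8848", "10.0.0.2:8848", "10.0.0.3:8848");
        for (String self : servers) {
            System.out.println(self + " -> " + handleWrite("nacos.test.3", servers, self));
        }
    }
}
```

Because every node computes the same owner from the same server list, exactly one node handles a given service locally, and the other nodes agree on where to forward.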

1.3.3. Distro Service Startup: Addressing Modes

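Distro server nodes discover each other through an addressing mechanism, which is how the set of server nodes is managed. Nacos has three addressing modes: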

|

Standalone mode (StandaloneMemberLookup)

File mode (FileConfigMemberLookup)

Address server mode (AddressServerMemberLookup)

|

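The three addressing modes are shown in the figure below:

The addressing mode is created in com.alibaba.nacos.core.cluster.lookup.LookupFactory. The server can start in cluster mode or in standalone mode, and the startup mode determines which addressing mode is used.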

|

/**
 * Create the target addressing pattern.
 *
 * @param memberManager {@link ServerMemberManager}
 * @return {@link MemberLookup}
 * @throws NacosException NacosException
 */
public static MemberLookup createLookUp(ServerMemberManager memberManager) throws NacosException {
    // Nacos server started in cluster mode
    if (!EnvUtil.getStandaloneMode()) {
        String lookupType = EnvUtil.getProperty(LOOKUP_MODE_TYPE);
        // Resolve the LookupType from the configured addressing mode
        LookupType type = chooseLookup(lookupType);
        // Select the addressing implementation
        LOOK_UP = find(type);
        // Record the current addressing mode
        currentLookupType = type;
    } else {
        // Nacos server started in standalone mode
        LOOK_UP = new StandaloneMemberLookup();
    }
    LOOK_UP.injectMemberManager(memberManager);
    Loggers.CLUSTER.info("Current addressing mode selection : {}", LOOK_UP.getClass().getSimpleName());
    return LOOK_UP;
}

/**
 * Select the addressing implementation.
 *
 * @param type
 * @return
 */
private static MemberLookup find(LookupType type) {
    // File addressing mode: the nodes are listed in the cluster.conf configuration file,
    // and each node finds the others through that file in order to communicate with them
    if (LookupType.FILE_CONFIG.equals(type)) {
        LOOK_UP = new FileConfigMemberLookup();
        return LOOK_UP;
    }
    // Address server mode
    if (LookupType.ADDRESS_SERVER.equals(type)) {
        LOOK_UP = new AddressServerMemberLookup();
        return LOOK_UP;
    }
    // unpossible to run here
    throw new IllegalArgumentException();
}

|
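The selection logic can be condensed into a single decision function. The sketch below is an approximation under stated assumptions: chooseLookup is not shown in this article, so the fallback order (use file mode when cluster.conf exists, otherwise the address server) and the configuration strings "file" and "address-server" are assumptions, and LookupSelectionSketch is a hypothetical class.

```java
// Hypothetical decision function; the enum mirrors the three modes described in the text.
final class LookupSelectionSketch {

    enum LookupType { STANDALONE, FILE_CONFIG, ADDRESS_SERVER }

    static LookupType choose(boolean standalone, String configuredType, boolean clusterConfExists) {
        if (standalone) {
            return LookupType.STANDALONE;
        }
        // An explicit setting wins (the string values here are assumed for illustration)
        if ("file".equals(configuredType)) {
            return LookupType.FILE_CONFIG;
        }
        if ("address-server".equals(configuredType)) {
            return LookupType.ADDRESS_SERVER;
        }
        // Assumed fallback: prefer cluster.conf when present, otherwise ask the address server
        return clusterConfExists ? LookupType.FILE_CONFIG : LookupType.ADDRESS_SERVER;
    }

    public static void main(String[] args) {
        System.out.println(choose(true, null, false));
        System.out.println(choose(false, null, true));
        System.out.println(choose(false, "address-server", true));
    }
}
```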

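Standalone addressing mode creates a StandaloneMemberLookup object directly, file addressing mode creates a FileConfigMemberLookup object, and address server addressing mode creates an AddressServerMemberLookup object.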

1.3.3.1. Standalone Addressing Mode

Standalone mode simply looks up the node's own IP:PORT address.

|

public class StandaloneMemberLookup extends AbstractMemberLookup {

    @Override
    public void start() {
        if (start.compareAndSet(false, true)) {
            // Use this node's own IP:port
            String url = InetUtils.getSelfIP() + ":" + EnvUtil.getPort();
            afterLookup(MemberUtil.readServerConf(Collections.singletonList(url)));
        }
    }
}

|

1.3.3.2. File Addressing Mode

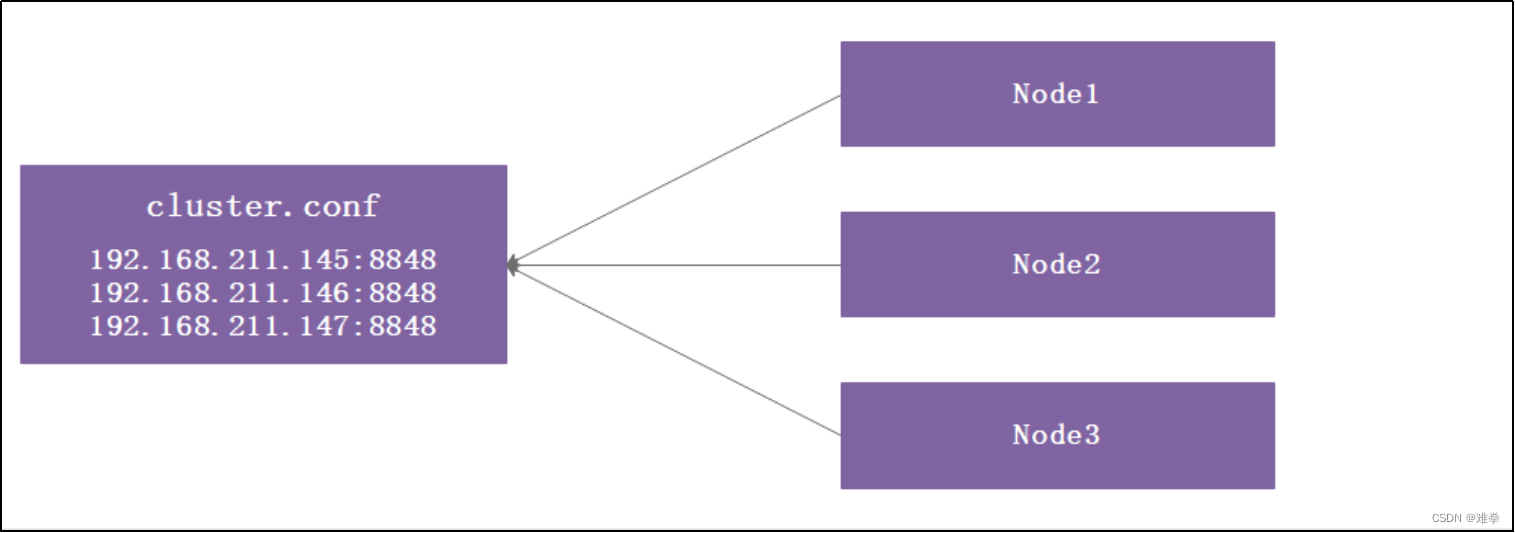

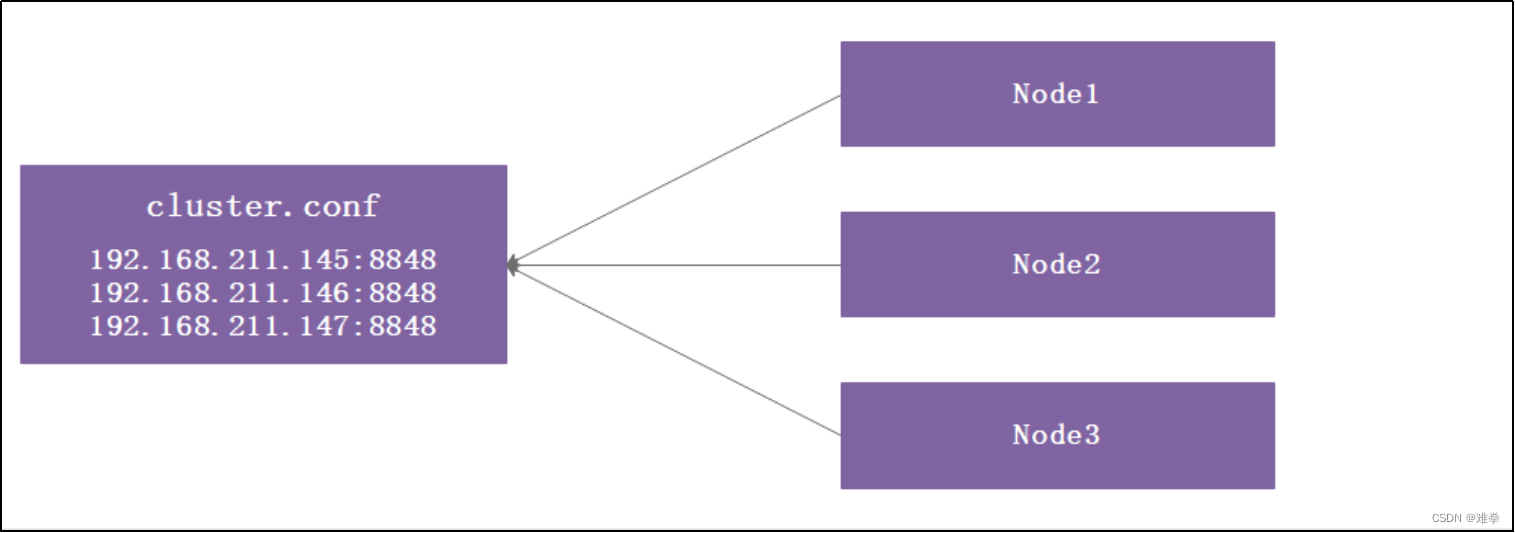

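File addressing mode is used mainly when building a cluster: the cluster is configured through cluster.conf, and the program watches cluster.conf for changes so that nodes can be managed dynamically. The FileConfigMemberLookup source is as follows: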

|

public class FileConfigMemberLookup extends AbstractMemberLookup {

    // Create the file watcher
    private FileWatcher watcher = new FileWatcher() {
        // Invoked when the file changes
        @Override
        public void onChange(FileChangeEvent event) {
            readClusterConfFromDisk();
        }

        // Check whether the context refers to cluster.conf
        @Override
        public boolean interest(String context) {
            return StringUtils.contains(context, "cluster.conf");
        }
    };

    @Override
    public void start() throws NacosException {
        if (start.compareAndSet(false, true)) {
            readClusterConfFromDisk();
            // Use the inotify mechanism to watch for file changes and re-read cluster.conf automatically
            try {
                WatchFileCenter.registerWatcher(EnvUtil.getConfPath(), watcher);
            } catch (Throwable e) {
                Loggers.CLUSTER.error("An exception occurred in the launch file monitor : {}", e.getMessage());
            }
        }
    }

    @Override
    public void destroy() throws NacosException {
        WatchFileCenter.deregisterWatcher(EnvUtil.getConfPath(), watcher);
    }

    private void readClusterConfFromDisk() {
        Collection<Member> tmpMembers = new ArrayList<>();
        try {
            List<String> tmp = EnvUtil.readClusterConf();
            tmpMembers = MemberUtil.readServerConf(tmp);
        } catch (Throwable e) {
            Loggers.CLUSTER.error("nacos-XXXX [serverlist] failed to get serverlist from disk!, error : {}", e.getMessage());
        }
        afterLookup(tmpMembers);
    }
}

|
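What readClusterConfFromDisk ultimately needs is a list of member addresses parsed from the lines of cluster.conf. The following sketch shows one plausible way to do that parsing. It is not the logic of EnvUtil.readClusterConf or MemberUtil.readServerConf, which the article does not show: the comment handling with '#' and the default port 8848 are assumptions, and ClusterConfSketch is a hypothetical class.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical parser for cluster.conf-style content; not a Nacos class.
final class ClusterConfSketch {

    // Assumed default port applied when a line contains only an IP
    static final int DEFAULT_PORT = 8848;

    static List<String> parseMembers(List<String> lines) {
        List<String> members = new ArrayList<>();
        for (String raw : lines) {
            String line = raw.trim();
            // Drop anything after '#', treating it as a comment (assumption)
            int comment = line.indexOf('#');
            if (comment >= 0) {
                line = line.substring(0, comment).trim();
            }
            if (line.isEmpty()) {
                continue;
            }
            members.add(line.contains(":") ? line : line + ":" + DEFAULT_PORT);
        }
        return members;
    }

    public static void main(String[] args) {
        List<String> lines = List.of("# cluster members", "10.0.0.1:8848", "10.0.0.2", "");
        System.out.println(parseMembers(lines));
    }
}
```

Re-running a parser like this every time the watcher fires is what lets members be added or removed by editing the file, without restarting the node.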

1.3.3.3. Address Server Addressing Mode

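In this mode an address server stores the node information. An AddressServerMemberLookup is created, and the server periodically pulls the member list from the address server to manage the nodes.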

|

public class AddressServerMemberLookup extends AbstractMemberLookup {

    private final GenericType<RestResult<String>> genericType = new GenericType<RestResult<String>>() {
    };

    public String domainName;
    public String addressPort;
    public String addressUrl;
    public String envIdUrl;
    public String addressServerUrl;
    private volatile boolean isAddressServerHealth = true;
    private int addressServerFailCount = 0;
    private int maxFailCount = 12;
    private final NacosRestTemplate restTemplate = HttpClientBeanHolder.getNacosRestTemplate(Loggers.CORE);
    private volatile boolean shutdown = false;

    @Override
    public void start() throws NacosException {
        if (start.compareAndSet(false, true)) {
            this.maxFailCount = Integer.parseInt(EnvUtil.getProperty("maxHealthCheckFailCount", "12"));
            initAddressSys();
            run();
        }
    }

    /**
     * Resolve the address server location.
     */
    private void initAddressSys() {
        String envDomainName = System.getenv("address_server_domain");
        if (StringUtils.isBlank(envDomainName)) {
            domainName = EnvUtil.getProperty("address.server.domain", "jmenv.tbsite.net");
        } else {
            domainName = envDomainName;
        }
        String envAddressPort = System.getenv("address_server_port");
        if (StringUtils.isBlank(envAddressPort)) {
            addressPort = EnvUtil.getProperty("address.server.port", "8080");
        } else {
            addressPort = envAddressPort;
        }
        String envAddressUrl = System.getenv("address_server_url");
        if (StringUtils.isBlank(envAddressUrl)) {
            addressUrl = EnvUtil.getProperty("address.server.url", EnvUtil.getContextPath() + "/" + "serverlist");
        } else {
            addressUrl = envAddressUrl;
        }
        addressServerUrl = "http://" + domainName + ":" + addressPort + addressUrl;
        envIdUrl = "http://" + domainName + ":" + addressPort + "/env";

        Loggers.CORE.info("ServerListService address-server port:" + addressPort);
        Loggers.CORE.info("ADDRESS_SERVER_URL:" + addressServerUrl);
    }

    @SuppressWarnings("PMD.UndefineMagicConstantRule")
    private void run() throws NacosException {
        // With the address server, you need to perform a synchronous member node pull at startup
        // Repeat three times, successfully jump out
        boolean success = false;
        Throwable ex = null;
        int maxRetry = EnvUtil.getProperty("nacos.core.address-server.retry", Integer.class, 5);
        for (int i = 0; i < maxRetry; i++) {
            try {
                // Pull the cluster member information
                syncFromAddressUrl();
                success = true;
                break;
            } catch (Throwable e) {
                ex = e;
                Loggers.CLUSTER.error("[serverlist] exception, error : {}", ExceptionUtil.getAllExceptionMsg(ex));
            }
        }
        if (!success) {
            throw new NacosException(NacosException.SERVER_ERROR, ex);
        }
        // Schedule the periodic sync task
        GlobalExecutor.scheduleByCommon(new AddressServerSyncTask(), 5_000L);
    }

    @Override
    public void destroy() throws NacosException {
        shutdown = true;
    }

    @Override
    public Map<String, Object> info() {
        Map<String, Object> info = new HashMap<>(4);
        info.put("addressServerHealth", isAddressServerHealth);
        info.put("addressServerUrl", addressServerUrl);
        info.put("envIdUrl", envIdUrl);
        info.put("addressServerFailCount", addressServerFailCount);
        return info;
    }

    private void syncFromAddressUrl() throws Exception {
        RestResult<String> result = restTemplate.get(addressServerUrl, Header.EMPTY, Query.EMPTY, genericType.getType());
        if (result.ok()) {
            isAddressServerHealth = true;
            Reader reader = new StringReader(result.getData());
            try {
                afterLookup(MemberUtil.readServerConf(EnvUtil.analyzeClusterConf(reader)));
            } catch (Throwable e) {
                Loggers.CLUSTER.error("[serverlist] exception for analyzeClusterConf, error : {}",
                        ExceptionUtil.getAllExceptionMsg(e));
            }
            addressServerFailCount = 0;
        } else {
            addressServerFailCount++;
            if (addressServerFailCount >= maxFailCount) {
                isAddressServerHealth = false;
            }
            Loggers.CLUSTER.error("[serverlist] failed to get serverlist, error code {}", result.getCode());
        }
    }

    // Periodic sync task
    class AddressServerSyncTask implements Runnable {

        @Override
        public void run() {
            if (shutdown) {
                return;
            }
            try {
                // Pull the server list
                syncFromAddressUrl();
            } catch (Throwable ex) {
                addressServerFailCount++;
                if (addressServerFailCount >= maxFailCount) {
                    isAddressServerHealth = false;
                }
                Loggers.CLUSTER.error("[serverlist] exception, error : {}", ExceptionUtil.getAllExceptionMsg(ex));
            } finally {
                GlobalExecutor.scheduleByCommon(this, 5_000L);
            }
        }
    }
}

|
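The health bookkeeping in AddressServerMemberLookup follows a simple pattern: every failed pull increments a counter, the address server is marked unhealthy once the counter reaches maxFailCount, and a single successful pull resets both. A minimal sketch of just that state machine, with the HTTP call and scheduling removed, might look as follows. AddressServerHealthSketch is a hypothetical class, and the threshold of 12 in the example only echoes the default seen in the source above.

```java
// Hypothetical state holder for the fail-count pattern; no networking involved.
final class AddressServerHealthSketch {

    private final int maxFailCount;
    private int failCount = 0;
    private boolean healthy = true;

    AddressServerHealthSketch(int maxFailCount) {
        this.maxFailCount = maxFailCount;
    }

    // Record the outcome of one pull from the address server.
    void onPullResult(boolean ok) {
        if (ok) {
            failCount = 0;
            healthy = true;
            return;
        }
        failCount++;
        if (failCount >= maxFailCount) {
            healthy = false;
        }
    }

    boolean isHealthy() {
        return healthy;
    }

    int failCount() {
        return failCount;
    }

    public static void main(String[] args) {
        AddressServerHealthSketch health = new AddressServerHealthSketch(12);
        for (int i = 0; i < 12; i++) {
            health.onPullResult(false);
        }
        System.out.println("healthy after 12 failures: " + health.isHealthy());
        health.onPullResult(true);
        System.out.println("healthy after one success: " + health.isHealthy());
    }
}
```

Requiring several consecutive failures before flipping the flag avoids reporting the address server as down because of a single transient network error.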

1.3.5. Cluster Data Synchronization

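Nacos data synchronization comes in two forms, full synchronization and incremental synchronization. Full synchronization copies all the data in one pass during initialization, while incremental synchronization copies only the data that has been added since.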

1.3.5.1. Full Synchronization

The full synchronization flow is fairly involved. As the figure above shows, it proceeds as follows:

|

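1: Start a task thread, DistroLoadDataTask, which calls load() to load the data

2: Call loadAllDataSnapshotFromRemote() to synchronize all data from a remote machine

3: Fetch all the data through the namingProxy

4: Build the HTTP request and call httpGet to fetch the data from the specified server

5: Extract the data bytes from the returned result

6: Process the data with processData

7: Deserialize datumMap from the data

8: Store the data in dataStore, that is, the local cache dataMap

9: If the listeners do not contain the key, create an empty service and bind a listener to it

10: After the listener runs successfully, update the data store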

|

◆ Task startup

The constructor of com.alibaba.nacos.core.distributed.distro.DistroProtocol calls startDistroTask(), which in turn runs startVerifyTask() and startLoadTask(). We focus on startLoadTask(). The code is as follows:

|

/**
 * Start the Distro tasks.
 */
private void startDistroTask() {
    if (EnvUtil.getStandaloneMode()) {
        isInitialized = true;
        return;
    }
    // Start the verify task, which checks that synchronized data is consistent
    startVerifyTask();
    // Start DistroLoadDataTask, which loads data in bulk
    startLoadTask();
}

// Start DistroLoadDataTask
private void startLoadTask() {
    // Callback that records the outcome of the load
    DistroCallback loadCallback = new DistroCallback() {
        // Load succeeded
        @Override
        public void onSuccess() {
            isInitialized = true;
        }

        // Load failed
        @Override
        public void onFailed(Throwable throwable) {
            isInitialized = false;
        }
    };
    // Submit DistroLoadDataTask; it runs on a separate thread
    GlobalExecutor.submitLoadDataTask(
            new DistroLoadDataTask(memberManager, distroComponentHolder, distroConfig, loadCallback));
}

/**
 * Start the verify task (data verification).
 */
private void startVerifyTask() {
    GlobalExecutor.schedulePartitionDataTimedSync(
            new DistroVerifyTask(memberManager, distroComponentHolder), distroConfig.getVerifyIntervalMillis());
}

|

Data verification

|

public class DistroVerifyTask implements Runnable {

    private final ServerMemberManager serverMemberManager;

    private final DistroComponentHolder distroComponentHolder;

    public DistroVerifyTask(ServerMemberManager serverMemberManager, DistroComponentHolder distroComponentHolder) {
        this.serverMemberManager = serverMemberManager;
        this.distroComponentHolder = distroComponentHolder;
    }

    @Override
    public void run() {
        try {
            // Get all cluster nodes except this one
            List<Member> targetServer = serverMemberManager.allMembersWithoutSelf();
            if (Loggers.DISTRO.isDebugEnabled()) {
                Loggers.DISTRO.debug("server list is: {}", targetServer);
            }
            for (String each : distroComponentHolder.getDataStorageTypes()) {
                // Verify the synchronized data
                verifyForDataStorage(each, targetServer);
            }
        } catch (Exception e) {
            Loggers.DISTRO.error("[DISTRO-FAILED] verify task failed.", e);
        }
    }

    private void verifyForDataStorage(String type, List<Member> targetServer) {
        DistroData distroData = distroComponentHolder.findDataStorage(type).getVerifyData();
        if (null == distroData) {
            return;
        }
        distroData.setType(DataOperation.VERIFY);
        for (Member member : targetServer) {
            try {
                // Send the verification data to each member
                distroComponentHolder.findTransportAgent(type).syncVerifyData(distroData, member.getAddress());
            } catch (Exception e) {
                Loggers.DISTRO.error(String
                        .format("[DISTRO-FAILED] verify data for type %s to %s failed.", type, member.getAddress()), e);
            }
        }
    }
}

|

Executing the verification

|

@Override
public boolean syncVerifyData(DistroData verifyData, String targetServer) {
    if (!memberManager.hasMember(targetServer)) {
        return true;
    }
    NamingProxy.syncCheckSums(verifyData.getContent(), targetServer);
    return true;
}

/**
 * Synchronize checksums to the target server.
 */
public static void syncCheckSums(byte[] checksums, String server) {
    try {
        Map<String, String> headers = new HashMap<>(128);
        headers.put(HttpHeaderConsts.CLIENT_VERSION_HEADER, VersionUtils.version);
        headers.put(HttpHeaderConsts.USER_AGENT_HEADER, UtilsAndCommons.SERVER_VERSION);
        headers.put(HttpHeaderConsts.CONNECTION, "Keep-Alive");
        HttpClient.asyncHttpPutLarge(
                "http://" + server + EnvUtil.getContextPath() + UtilsAndCommons.NACOS_NAMING_CONTEXT
                        + TIMESTAMP_SYNC_URL + "?source=" + NetUtils.localServer(), headers, checksums,
                new Callback<String>() {
                    @Override
                    public void onReceive(RestResult<String> result) {
                        if (!result.ok()) {
                            Loggers.DISTRO.error("failed to req API: {}, code: {}, msg: {}",
                                    "http://" + server + EnvUtil.getContextPath()
                                            + UtilsAndCommons.NACOS_NAMING_CONTEXT + TIMESTAMP_SYNC_URL,
                                    result.getCode(), result.getMessage());
                        }
                    }

                    @Override
                    public void onError(Throwable throwable) {
                        Loggers.DISTRO.error("failed to req API:" + "http://" + server + EnvUtil.getContextPath()
                                + UtilsAndCommons.NACOS_NAMING_CONTEXT + TIMESTAMP_SYNC_URL, throwable);
                    }

                    @Override
                    public void onCancel() {
                    }
                });
    } catch (Exception e) {
        Loggers.DISTRO.warn("NamingProxy", e);
    }
}

|

◆ Data loading

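The method above submits a DistroLoadDataTask. This object is a Runnable executed on its own thread, so its run method is invoked, and run calls load() to perform the full data load. The code is as follows: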

|

/**
 * Data loading process.
 */
@Override
public void run() {
    try {
        // Load the data
        load();
        if (!checkCompleted()) {
            GlobalExecutor.submitLoadDataTask(this, distroConfig.getLoadDataRetryDelayMillis());
        } else {
            loadCallback.onSuccess();
            Loggers.DISTRO.info("[DISTRO-INIT] load snapshot data success");
        }
    } catch (Exception e) {
        loadCallback.onFailed(e);
        Loggers.DISTRO.error("[DISTRO-INIT] load snapshot data failed. ", e);
    }
}

/**
 * Load the data and synchronize it.
 *
 * @throws Exception
 */
private void load() throws Exception {
    while (memberManager.allMembersWithoutSelf().isEmpty()) {
        Loggers.DISTRO.info("[DISTRO-INIT] waiting server list init...");
        TimeUnit.SECONDS.sleep(1);
    }
    while (distroComponentHolder.getDataStorageTypes().isEmpty()) {
        Loggers.DISTRO.info("[DISTRO-INIT] waiting distro data storage register...");
        TimeUnit.SECONDS.sleep(1);
    }
    // Synchronize the data
    for (String each : distroComponentHolder.getDataStorageTypes()) {
        if (!loadCompletedMap.containsKey(each) || !loadCompletedMap.get(each)) {
            // Synchronize all data from a remote machine
            loadCompletedMap.put(each, loadAllDataSnapshotFromRemote(each));
        }
    }
}

|
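The retry behaviour of the load task (only data types that have not yet loaded are attempted again, and the task is resubmitted until every type is complete) can be isolated in a small sketch. Here the resubmission through GlobalExecutor is replaced by a plain loop with a maximum attempt count, the remote load is an injected predicate, and LoadRetrySketch is a hypothetical class; all three are simplifications for illustration.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

// Hypothetical model of the loadCompletedMap bookkeeping; no scheduling or networking.
final class LoadRetrySketch {

    private final Map<String, Boolean> loadCompleted = new HashMap<>();

    // One pass: only types that have not loaded successfully are attempted again.
    boolean loadOnce(List<String> types, Predicate<String> loader) {
        for (String type : types) {
            if (!loadCompleted.getOrDefault(type, false)) {
                loadCompleted.put(type, loader.test(type));
            }
        }
        return types.stream().allMatch(type -> loadCompleted.getOrDefault(type, false));
    }

    // Repeat passes until everything is loaded; returns the attempt number, or -1 if it gave up.
    int loadUntilCompleted(List<String> types, Predicate<String> loader, int maxAttempts) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (loadOnce(types, loader)) {
                return attempt;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        int[] calls = {0};
        LoadRetrySketch task = new LoadRetrySketch();
        // The loader fails on its first call and succeeds afterwards
        int attempts = task.loadUntilCompleted(List.of("typeA"), type -> ++calls[0] > 1, 5);
        System.out.println("completed on attempt " + attempts);
    }
}
```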

◆ Data synchronization

Data synchronization fetches data from a remote server over HTTP and writes it into the cache of the current node. The flow is as follows:

|

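1: loadAllDataSnapshotFromRemote() loads all data from a remote node and synchronizes it to the local node

2: transportAgent.getDatumSnapshot() loads the remote data by issuing an HTTP request

3: dataProcessor.processSnapshot() processes the data and synchronizes it to the local node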

|

The complete data handling logic is in the loadAllDataSnapshotFromRemote() method:

|

/**
 * Synchronize all data from a remote machine.
 */
private boolean loadAllDataSnapshotFromRemote(String resourceType) {
    DistroTransportAgent transportAgent = distroComponentHolder.findTransportAgent(resourceType);
    DistroDataProcessor dataProcessor = distroComponentHolder.findDataProcessor(resourceType);
    if (null == transportAgent || null == dataProcessor) {
        Loggers.DISTRO.warn("[DISTRO-INIT] Can't find component for type {}, transportAgent: {}, dataProcessor: {}",
                resourceType, transportAgent, dataProcessor);
        return false;
    }
    // Iterate over the cluster members, excluding this node
    for (Member each : memberManager.allMembersWithoutSelf()) {
        try {
            Loggers.DISTRO.info("[DISTRO-INIT] load snapshot {} from {}", resourceType, each.getAddress());
            // Load the data from the remote node through the HTTP endpoint distro/datums
            DistroData distroData = transportAgent.getDatumSnapshot(each.getAddress());
            // Process the data
            boolean result = dataProcessor.processSnapshot(distroData);
            Loggers.DISTRO.info("[DISTRO-INIT] load snapshot {} from {} result: {}", resourceType, each.getAddress(), result);
            if (result) {
                return true;
            }
        } catch (Exception e) {
            Loggers.DISTRO.error("[DISTRO-INIT] load snapshot {} from {} failed.", resourceType, each.getAddress(), e);
        }
    }
    return false;
}

|
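The essential pattern in loadAllDataSnapshotFromRemote is "try each peer in turn and stop at the first one whose snapshot is fetched and processed successfully". The sketch below isolates that pattern. The fetch and process steps are injected as functions so the example runs without a cluster; SnapshotLoadSketch and the peer addresses are hypothetical.

```java
import java.util.List;
import java.util.Optional;
import java.util.function.Function;
import java.util.function.Predicate;

// Hypothetical helper showing the first-successful-peer loop; no real HTTP calls.
final class SnapshotLoadSketch {

    // Returns the peer whose snapshot was loaded, or empty if every peer failed.
    static Optional<String> loadFromFirstAvailable(List<String> peers,
                                                   Function<String, byte[]> fetchSnapshot,
                                                   Predicate<byte[]> processSnapshot) {
        for (String peer : peers) {
            try {
                byte[] snapshot = fetchSnapshot.apply(peer);
                if (processSnapshot.test(snapshot)) {
                    return Optional.of(peer);
                }
            } catch (RuntimeException e) {
                // A failing peer is logged and skipped; the next peer is tried
                System.err.println("load snapshot from " + peer + " failed: " + e.getMessage());
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        List<String> peers = List.of("10.0.0.2:8848", "10.0.0.3:8848");
        Optional<String> source = loadFromFirstAvailable(peers,
                peer -> {
                    if (peer.startsWith("10.0.0.2")) {
                        throw new IllegalStateException("connection refused");
                    }
                    return "{}".getBytes();
                },
                bytes -> bytes.length > 0);
        System.out.println(source.orElse("none"));
    }
}
```

Since every Distro node stores all the data, one healthy peer is enough for a full load, which is why the loop returns on the first success instead of merging snapshots from all peers.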

The remote load is implemented in the transportAgent.getDatumSnapshot() method:

|

/**
 * Fetch all data through the NamingProxy and extract the data bytes from the result.
 *
 * @param targetServer target server.
 * @return
 */
@Override
public DistroData getDatumSnapshot(String targetServer) {
    try {
        // Fetch all data through the NamingProxy and extract the data bytes from the result
        byte[] allDatum = NamingProxy.getAllData(targetServer);
        // Wrap the data in a DistroData object
        return new DistroData(new DistroKey("snapshot", KeyBuilder.INSTANCE_LIST_KEY_PREFIX), allDatum);
    } catch (Exception e) {
        throw new DistroException(String.format("Get snapshot from %s failed.", targetServer), e);
    }
}

/**
 * Get all datum from target server.
 * NamingProxy.getAllData
 * Issues the HTTP GET request and returns the response data.
 *
 * @param server target server address
 * @return all datum byte array
 * @throws Exception exception
 */
public static byte[] getAllData(String server) throws Exception {
    // Build the request parameters
    Map<String, String> params = new HashMap<>(8);
    // Assemble the URL, issue the HTTP GET request, and obtain the result
    RestResult<String> result = HttpClient.httpGet(
            "http://" + server + EnvUtil.getContextPath() + UtilsAndCommons.NACOS_NAMING_CONTEXT + ALL_DATA_GET_URL,
            new ArrayList<>(), params);
    // Return the data
    if (result.ok()) {
        return result.getData().getBytes();
    }
    throw new IOException("failed to req API: " + "http://" + server + EnvUtil.getContextPath()
            + UtilsAndCommons.NACOS_NAMING_CONTEXT + ALL_DATA_GET_URL + ". code:" + result.getCode() + " msg: "
            + result.getMessage());
}

|

Processing the data and synchronizing it to the local node: dataProcessor.processSnapshot()

|

@Override
public boolean processSnapshot(DistroData distroData) {
    try {
        return processData(distroData.getContent());
    } catch (Exception e) {
        return false;
    }
}

|

processData(), which dataProcessor.processSnapshot() delegates to:

|

/**
 * Process the data and update the local cache.
 *
 * @param data
 * @return
 * @throws Exception
 */
private boolean processData(byte[] data) throws Exception {
    if (data.length > 0) {
        // Deserialize datumMap from data
        Map<String, Datum<Instances>> datumMap = serializer.deserializeMap(data, Instances.class);
        // Store the data in dataStore, that is, the local cache dataMap
        for (Map.Entry<String, Datum<Instances>> entry : datumMap.entrySet()) {
            dataStore.put(entry.getKey(), entry.getValue());
            // If the listeners do not contain the key, create an empty service and bind a listener
            if (!listeners.containsKey(entry.getKey())) {
                // pretty sure the service not exist:
                if (switchDomain.isDefaultInstanceEphemeral()) {
                    // create empty service
                    Loggers.DISTRO.info("creating service {}", entry.getKey());
                    Service service = new Service();
                    String serviceName = KeyBuilder.getServiceName(entry.getKey());
                    String namespaceId = KeyBuilder.getNamespace(entry.getKey());
                    service.setName(serviceName);
                    service.setNamespaceId(namespaceId);
                    service.setGroupName(Constants.DEFAULT_GROUP);
                    // now validate the service. if failed, exception will be thrown
                    service.setLastModifiedMillis(System.currentTimeMillis());
                    service.recalculateChecksum();
                    // The Listener corresponding to the key value must not be empty;
                    // the listener type here is ServiceManager
                    RecordListener listener = listeners.get(KeyBuilder.SERVICE_META_KEY_PREFIX).peek();
                    if (Objects.isNull(listener)) {
                        return false;
                    }
                    // Bind the listener to the newly created empty service