Tensor Operations

1. Concatenation and Splitting

1.1 torch.cat()

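Purpose: concatenates tensors along the existing dimension dim

tensors: sequence of tensors

dim: the dimension along which to concatenate

Signature: torch.cat(tensors, dim=0, out=None)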

import torch

t = torch.ones([2,3])

t_0 = torch.cat([t,t],dim=0)

t_1 = torch.cat([t,t],dim=1)

print("t_0:{} shape:{}\nt_1:{} shape:{}".format(t_0,t_0.shape,t_1,t_1.shape))

Output (shapes only): t_0 shape: torch.Size([4, 3])

t_1 shape: torch.Size([2, 6])
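As a small additional sketch (the tensors `a` and `b` and the flag `cat_failed` are illustrative names, not part of the original notes), torch.cat only needs the sizes to match in every dimension other than dim, so tensors of different lengths along dim can still be joined:

```python
import torch

a = torch.ones(2, 3)   # 2 rows
b = torch.zeros(4, 3)  # 4 rows, same number of columns

# Sizes along dim=0 may differ; all other dimensions must match.
ab = torch.cat([a, b], dim=0)
print(ab.shape)  # torch.Size([6, 3])

# Along dim=1 the row counts (2 vs 4) would have to match, so this fails.
try:
    torch.cat([a, b], dim=1)
    cat_failed = False
except RuntimeError:
    cat_failed = True
print(cat_failed)  # True
```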

1.2 torch.stack()

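Purpose: joins tensors along a newly created dimension dim

tensors: sequence of tensors, all of the same shape

dim: the index at which the new dimension is inserted

Signature: torch.stack(tensors, dim=0, out=None)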

t_stack = torch.stack([t,t,t,t,t],dim=1)

print("\nt_stack:{} shape:{}".format(t_stack,t_stack.shape))

Output (shape only): torch.Size([2, 5, 3])
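To make the difference from torch.cat concrete, the following sketch (variable names `stacked` and `via_cat` are illustrative) suggests that stacking behaves like giving each tensor a new axis and then concatenating along it, whereas a plain cat keeps the number of dimensions unchanged:

```python
import torch

t = torch.ones(2, 3)

# stack creates a new dimension of length 3 at position 0
stacked = torch.stack([t, t, t], dim=0)

# the same result built by hand: add the axis first, then concatenate along it
via_cat = torch.cat([t.unsqueeze(0)] * 3, dim=0)

print(stacked.shape)                       # torch.Size([3, 2, 3])
print(torch.equal(stacked, via_cat))       # True
print(torch.cat([t, t, t], dim=0).shape)   # torch.Size([6, 3])
```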

1.3 torch.chunk()

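Purpose: splits a tensor into equal parts along dimension dim

Returns: a tuple of tensors

Note: if the size along dim is not divisible by chunks, the last tensor is smaller than the others

input: the tensor to split

chunks: the number of parts to split into

Signature: torch.chunk(input, chunks, dim=0)

[Note] The length of each part is rounded up, i.e. ceil(size / chunks); as a result, fewer than chunks parts may be returned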

a = torch.ones((2,5))

list_of_tensor = torch.chunk(a,dim=1,chunks=2)

for idx,t in enumerate(list_of_tensor):

    print("Tensor {}: {}, shape is {}".format(idx+1,t,t.shape))

Output (shapes only): shape:torch.Size([2, 3])

shape:torch.Size([2, 2])
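The rounding-up rule can be checked with a short sketch (the `lengths` dictionary is an illustrative helper, not part of the original notes). With 5 columns and chunks=4, the part length is ceil(5 / 4) = 2, so only three parts come back:

```python
import math
import torch

a = torch.ones(2, 5)
lengths = {}

for chunks in (2, 4):
    parts = torch.chunk(a, chunks, dim=1)
    size = math.ceil(a.shape[1] / chunks)        # rounded-up part length
    lengths[chunks] = [p.shape[1] for p in parts]
    print(chunks, size, lengths[chunks])
# 2 3 [3, 2]
# 4 2 [2, 2, 1]
```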

1.4 torch.split()

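Purpose: splits a tensor along dimension dim into parts of the specified length(s)

Returns: a tuple of tensors

tensor: the tensor to split

split_size_or_sections: if an int, the length of each part (the last part may be shorter);

if a list, the length of each part in order, which must sum to the size along dim

Signature: torch.split(tensor, split_size_or_sections, dim=0)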

t = torch.ones((2,5))

list_of_tensors = torch.split(t,[1,1,1,2],dim=1)

for idx,t in enumerate(list_of_tensors):

    print("Tensor {}: {}, shape is {}".format(idx+1,t,t.shape))

Output (shapes only): shape:torch.Size([2, 1])

shape:torch.Size([2, 1])

shape:torch.Size([2, 1])

shape:torch.Size([2, 2])
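The example above uses the list form. As a complementary sketch (names `by_size` and `by_sections` are illustrative), the int form fixes the length of every part instead of the number of parts:

```python
import torch

t = torch.ones(2, 5)

# int: every part has length 2 along dim=1, except a shorter last part
by_size = torch.split(t, 2, dim=1)
print([p.shape[1] for p in by_size])      # [2, 2, 1]

# list: explicit lengths, which must sum to t.shape[1] == 5
by_sections = torch.split(t, [1, 4], dim=1)
print([p.shape[1] for p in by_sections])  # [1, 4]
```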

2. Indexing

2.1 torch.index_select()

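Purpose: selects entries along dimension dim according to index

Returns: a new tensor formed by concatenating the entries selected by index

input: the tensor to index

dim: the dimension along which to index

index: a 1-D tensor holding the indices to select

Signature: torch.index_select(input, dim, index, out=None)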

t = torch.randint(0,9,size=(3,3))

# index must be an integer tensor; torch.long is used here

idx = torch.tensor([0,2],dtype=torch.long)

t_select = torch.index_select(t,dim=0,index=idx)

print("t:\n{}\nt_select:\n{}".format(t,t_select))

Output: t:

tensor([[4, 5, 2],

[4, 3, 6],

[4, 4, 2]])

t_select:

tensor([[4, 5, 2],

[4, 4, 2]])
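Because the example above uses random values, here is a reproducible sketch with fixed data (the names `rows` and `cols` are illustrative). It also suggests that index_select gives the same result as indexing with an index tensor:

```python
import torch

t = torch.arange(9).reshape(3, 3)   # fixed values 0..8 instead of random ones
idx = torch.tensor([0, 2])          # Python ints default to dtype torch.long

rows = torch.index_select(t, dim=0, index=idx)   # rows 0 and 2
cols = torch.index_select(t, dim=1, index=idx)   # columns 0 and 2

print(rows.tolist())                  # [[0, 1, 2], [6, 7, 8]]
print(cols.tolist())                  # [[0, 2], [3, 5], [6, 8]]
print(torch.equal(rows, t[idx]))      # True
print(torch.equal(cols, t[:, idx]))   # True
```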

2.2 torch.masked_select()

Purpose: selects the elements where mask is True

Returns: a 1-D tensor

input: the tensor to index

mask: a boolean tensor with the same shape as input

Signature: torch.masked_select(input, mask, out=None)

t = torch.randint(0,9,size=(3,3))

mask = t.ge(5) # True where the element is >= 5

t_select = torch.masked_select(t,mask)

print("t:\n{}\nmask:\n{}\nt_select:\n{}".format(t,mask,t_select))

Output: t:

tensor([[3, 8, 0],

[4, 7, 0],

[5, 8, 0]])

mask:

tensor([[False, True, False],

[False, True, False],

[ True, True, False]])

t_select:

tensor([8, 7, 5, 8])
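The same values can be reproduced without randomness. In this sketch (the name `selected` is illustrative), the comparison operator builds the mask, and boolean indexing is shown as an equivalent way to get the result:

```python
import torch

t = torch.tensor([[3, 8, 0],
                  [4, 7, 0],
                  [5, 8, 0]])
mask = t >= 5                           # same as t.ge(5)

selected = torch.masked_select(t, mask)
print(selected.tolist())                # [8, 7, 5, 8]
print(selected.dim())                   # 1, regardless of the shape of t
print(torch.equal(selected, t[mask]))   # True
```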

3. Transformations

3.1 torch.reshape()

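Purpose: changes the shape of a tensor

shape: the shape of the new tensor

[Note] When possible, the new tensor is a view that shares memory with input; otherwise (for example, with some non-contiguous inputs) it is a copy

Signature: torch.reshape(input, shape)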

t = torch.randperm(8)

t_reshape = torch.reshape(t,(-1,4)) # -1 means this dimension is inferred: 8 / 4 = 2

print("t:{}\nt_reshape:\n{}".format(t,t_reshape))

Output: t:tensor([7, 3, 0, 2, 4, 5, 1, 6])

t_reshape:

tensor([[7, 3, 0, 2],

[4, 5, 1, 6]])
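The note on shared memory can be illustrated with a short sketch (all variable names are illustrative). For a contiguous input, a write to the original shows up in the reshaped tensor; for the transposed, non-contiguous input used here, reshape has to copy:

```python
import torch

# Contiguous input: the result is a view of the same memory.
t = torch.arange(8)
view = torch.reshape(t, (2, 4))
t[0] = 100
print(view[0, 0].item())   # 100

# Non-contiguous input (a transposed matrix): flattening needs a copy.
nc = torch.arange(6).reshape(2, 3).t()
flat = torch.reshape(nc, (6,))
flat[0] = 99
print(nc[0, 0].item())     # 0, the original is unaffected
```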

3.2 torch.transpose()

Purpose: swaps two dimensions of a tensor

dim0: the first dimension to swap

dim1: the second dimension to swap

Signature: torch.transpose(input, dim0, dim1)

t = torch.rand(2,3,4)

t_transpose = torch.transpose(t,dim0=1,dim1=2)

print("t:{}\nt_transpose:\n{}".format(t.shape,t_transpose.shape))

Output: t:torch.Size([2, 3, 4])

t_transpose:

torch.Size([2, 4, 3])
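One practical consequence, sketched below with illustrative names, is that the transposed tensor is a non-contiguous view of the original data. Writes are visible through both tensors, and a call to contiguous() is typically needed before view():

```python
import torch

t = torch.zeros(2, 3, 4)
tt = torch.transpose(t, 1, 2)          # shape (2, 4, 3)

print(tt.is_contiguous())              # False
tt[0, 0, 0] = 1.0
print(t[0, 0, 0].item())               # 1.0, both tensors share memory

# tt.view(2, 12) would raise a RuntimeError on this non-contiguous view,
# so the data is made contiguous (copied) first.
flat = tt.contiguous().view(2, 12)
print(flat.shape)                      # torch.Size([2, 12])
```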

3.3 torch.t()

Purpose: transposes a 2-D tensor

Equivalent to torch.transpose(input, 0, 1)

Signature: torch.t(input)

t = torch.rand(2,3)

t_T = torch.t(t)

print("t_T shape:",t_T.shape)

Output: t_T shape: torch.Size([3, 2])
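The stated equivalence can be checked directly. This sketch uses a fixed matrix `m` (an illustrative name) and also compares against the `.T` attribute:

```python
import torch

m = torch.arange(6).reshape(2, 3)

print(torch.equal(torch.t(m), torch.transpose(m, 0, 1)))  # True
print(torch.equal(torch.t(m), m.T))                        # True
print(torch.t(m).shape)                                    # torch.Size([3, 2])
```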

3.4 torch.squeeze()

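Purpose: removes dimensions of size 1

dim: if None, all dimensions of size 1 are removed;

if specified, that dimension is removed only if its size is 1

Signature: torch.squeeze(input, dim=None, out=None)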

t = torch.randint(0,9,size=(1,2,3,1))

t_sq = torch.squeeze(t)

t_0 = torch.squeeze(t,dim=0)

t_1 = torch.squeeze(t,dim=1)

print(t.shape)

print(t_sq.shape)

print(t_0.shape)

print(t_1.shape)

Output: torch.Size([1, 2, 3, 1])

torch.Size([2, 3])

torch.Size([2, 3, 1])

torch.Size([1, 2, 3, 1])
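A case where the choice of dim matters is a batch that happens to contain one sample. The sketch below assumes a hypothetical (N, C, H, W) layout with N = 1; squeezing without dim also removes the batch axis, which is often not intended:

```python
import torch

batch = torch.zeros(1, 1, 28, 28)   # hypothetical batch: N=1, C=1, H=W=28

all_removed = torch.squeeze(batch)           # drops both size-1 axes
channel_only = torch.squeeze(batch, dim=1)   # drops only the channel axis

print(all_removed.shape)    # torch.Size([28, 28])
print(channel_only.shape)   # torch.Size([1, 28, 28])
```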

3.5 torch.unsqueeze()

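Purpose: inserts a dimension of size 1 at position dim

dim: the index at which the new dimension is inserted (required)

Signature: torch.unsqueeze(input, dim)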

t = torch.randint(0,9,size=(2,2))

t_2 = torch.unsqueeze(t,dim=2)

print(t_2.shape)

Output: torch.Size([2, 2, 1])
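As a further sketch (names `row` and `col` are illustrative), dim may also be negative, indexing with None gives the same result, and squeeze on the same axis undoes the operation:

```python
import torch

v = torch.arange(3)            # shape (3,)

row = torch.unsqueeze(v, 0)    # shape (1, 3)
col = torch.unsqueeze(v, -1)   # shape (3, 1); -1 means "after the last axis"

print(row.shape, col.shape)                         # torch.Size([1, 3]) torch.Size([3, 1])
print(torch.equal(col, v[:, None]))                 # True
print(torch.equal(torch.squeeze(col, dim=-1), v))   # True
```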

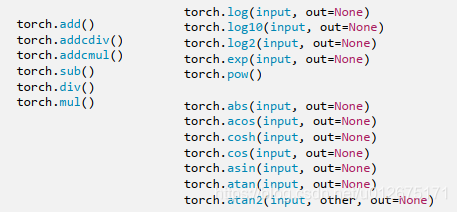

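4. Mathematical Operations

Arithmetic (add, subtract, multiply, divide), logarithms and exponentials, trigonometric functions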

4.1 torch.add()

Purpose: computes input + alpha × other element-wise

input: the first tensor

alpha: the scaling factor applied to other

other: the second tensor

Signature: torch.add(input, other, *, alpha=1, out=None)
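A minimal sketch of the formula, using small illustrative tensors `a` and `b` and passing alpha as a keyword argument:

```python
import torch

a = torch.tensor([1.0, 2.0, 3.0])
b = torch.tensor([10.0, 20.0, 30.0])

out = torch.add(a, b, alpha=2)       # a + 2 * b, element-wise
print(out)                           # tensor([21., 42., 63.])
print(torch.equal(out, a + 2 * b))   # True
```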