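Until now, we have only scraped metrics exposed by services deployed directly on the host.

I won't explain what Kubernetes (K8s) is here. This chapter covers how Prometheus can monitor a K8s cluster, both when it runs on the host and when it runs in a container.

I. Monitoring K8s with Prometheus on the host

Running Prometheus in a container is the more common setup, but I have also run into host deployments in production.

1. Monitoring node resources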

vi /etc/prometheus/prometheus.yml

global:
  scrape_interval: 1m
  evaluation_interval: 30s
scrape_configs:
  - job_name: test
    scrape_interval: 30s
    scheme: https
    tls_config:
      insecure_skip_verify: true
      ca_file: /etc/kubernetes/pki/ca.crt
      cert_file: /etc/kubernetes/pki/apiserver-kubelet-client.crt
      key_file: /etc/kubernetes/pki/apiserver-kubelet-client.key
    kubernetes_sd_configs:
      - role: node
        api_server: https://10.0.16.16:6443
        tls_config:
          ca_file: /etc/kubernetes/pki/ca.crt
          cert_file: /etc/kubernetes/pki/apiserver-kubelet-client.crt
          key_file: /etc/kubernetes/pki/apiserver-kubelet-client.key

Configuration notes

scrape_configs:
  - job_name: test
    scrape_interval: 30s
    scheme: https                  # the /metrics endpoint we scrape is served over HTTPS
    tls_config:                    # credentials presented when scraping https://xxx/metrics
                                   # the metrics are served by K8s components, so the cluster's own certificates work
      insecure_skip_verify: true   # do not verify the server certificate
      ca_file: /etc/kubernetes/pki/ca.crt
      cert_file: /etc/kubernetes/pki/apiserver-kubelet-client.crt
      key_file: /etc/kubernetes/pki/apiserver-kubelet-client.key
    kubernetes_sd_configs:         # Kubernetes service discovery
      - role: node                 # the kind of resource to discover
        api_server: https://10.0.16.16:6443   # address of the K8s master (API server)
                                   # Prometheus queries this address to discover its targets
        tls_config:                # client certificates for authenticating to the API server
          ca_file: /etc/kubernetes/pki/ca.crt
          cert_file: /etc/kubernetes/pki/apiserver-kubelet-client.crt
          key_file: /etc/kubernetes/pki/apiserver-kubelet-client.key

Start the service

systemctl restart prometheus

Note that I am using a single cloud host here, so it is both the master and the node.

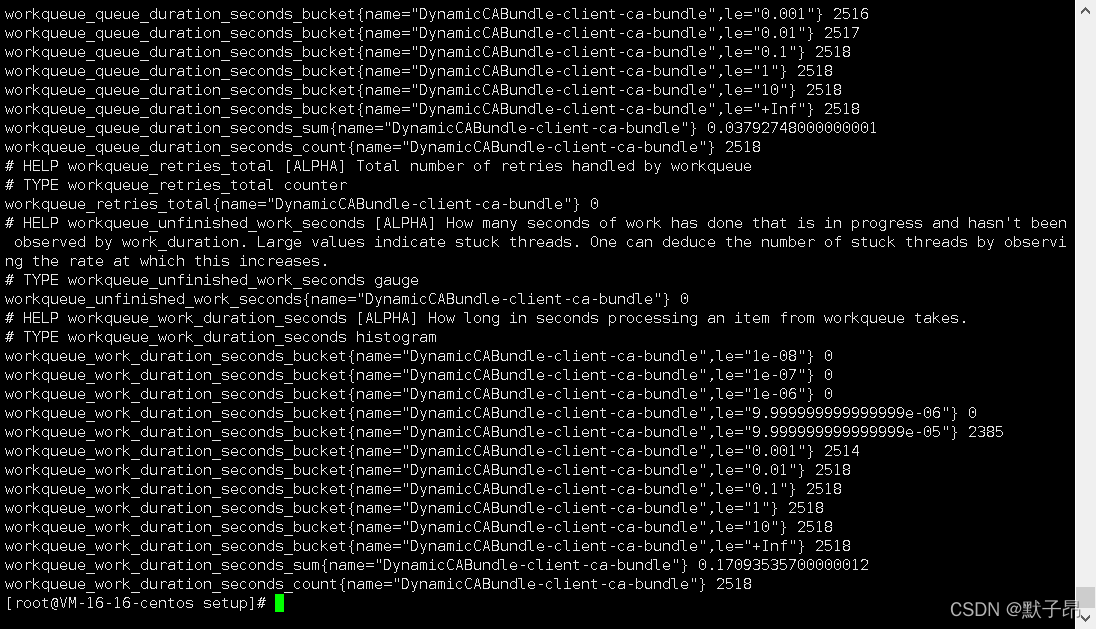

The target found in the screenshot is the kubelet's port 10250 on the node. If that is unclear, you can request it by hand with the command below:

curl https://10.0.16.16:10250/metrics \
  --cacert /etc/kubernetes/pki/ca.crt \
  --cert /etc/kubernetes/pki/apiserver-kubelet-client.crt \
  --key /etc/kubernetes/pki/apiserver-kubelet-client.key \
  -k

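Those are all the metrics scraped through the "node" role. Of course, node is not the only kind of resource in K8s.

There are also endpoints, ingress, service and so on, and all of them are discovered through kubernetes_sd_configs.

For details, see the write-up "kubernetes_sd_config · 笔记 · 看云".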

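2. Monitoring the cluster master

To find the exact master (API server) address of a K8s cluster, we usually run the following command:

[root@VM-16-16-centos setup]# kubectl -n default get endpoints kubernetes
NAME         ENDPOINTS         AGE
kubernetes   10.0.16.16:6443   19d

kubernetes_sd_configs service discovery provides built-in meta labels that correspond to this (all of them are described in the write-up mentioned above):

__meta_kubernetes_namespace            # namespace of the service object
__meta_kubernetes_service_name         # name of the service object
__meta_kubernetes_endpoint_port_name   # name of the endpoint port (https in this case)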
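To make the matching rule concrete before writing the config: Prometheus joins the values of the source labels with ";" (the default separator) and matches the joined string against the regex, anchored at both ends. The sketch below imitates that in plain shell. relabel_keep is a hypothetical helper written for this post, not part of Prometheus, and grep's extended regex only approximates the RE2 syntax Prometheus uses.

```shell
#!/bin/sh
# Hypothetical helper that imitates a Prometheus `keep` relabel rule.
# Usage: relabel_keep REGEX VALUE...
relabel_keep() {
  regex=$1
  shift
  # Join all remaining arguments with ";" like the default relabel separator.
  joined=$(IFS=';'; printf '%s' "$*")
  # grep -x requires the whole line to match, which mirrors the anchored regex.
  if printf '%s\n' "$joined" | grep -Eqx -- "$regex"; then
    echo keep
  else
    echo drop
  fi
}

# The endpoint of the kubernetes Service in the default namespace is kept.
relabel_keep 'default;kubernetes;https' default kubernetes https
# Any other endpoint is dropped.
relabel_keep 'default;kubernetes;https' kube-system kube-dns metrics
```

Because the match is anchored, a port named https-metrics would not slip through either.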

Write the configuration

vi /etc/prometheus/prometheus.yml

  - job_name: apiserver
    scrape_interval: 30s
    scheme: https
    tls_config:
      insecure_skip_verify: true
      ca_file: /etc/kubernetes/pki/ca.crt
      cert_file: /etc/kubernetes/pki/apiserver-kubelet-client.crt
      key_file: /etc/kubernetes/pki/apiserver-kubelet-client.key
    kubernetes_sd_configs:
      - role: endpoints
        api_server: https://10.0.16.16:6443
        tls_config:
          ca_file: /etc/kubernetes/pki/ca.crt
          cert_file: /etc/kubernetes/pki/apiserver-kubelet-client.crt
          key_file: /etc/kubernetes/pki/apiserver-kubelet-client.key
    relabel_configs:
      - source_labels:
          - __meta_kubernetes_namespace
          - __meta_kubernetes_service_name
          - __meta_kubernetes_endpoint_port_name
        regex: default;kubernetes;https
        action: keep

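Configuration notes

    kubernetes_sd_configs:
      - role: endpoints              # the object we queried with kubectl above is an Endpoints object,
                                     # so the targets are discovered from the endpoints role
        api_server: https://10.0.16.16:6443   # same as for the node job
        tls_config:
          ca_file: /etc/kubernetes/pki/ca.crt
          cert_file: /etc/kubernetes/pki/apiserver-kubelet-client.crt
          key_file: /etc/kubernetes/pki/apiserver-kubelet-client.key
    relabel_configs:                 # relabeling, covered in the previous chapter
      - source_labels:               # the labels whose values are matched
          - __meta_kubernetes_namespace            # namespace
          - __meta_kubernetes_service_name         # Service name, usually the same as the Endpoints name
          - __meta_kubernetes_endpoint_port_name   # name of the endpoint port
        regex: default;kubernetes;https            # expected values of the three labels, in order, joined by ";"
        action: keep                 # drop every target whose three meta labels do not match

Reload the configuration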

curl -XPOST http://10.0.16.16:9090/-/reload

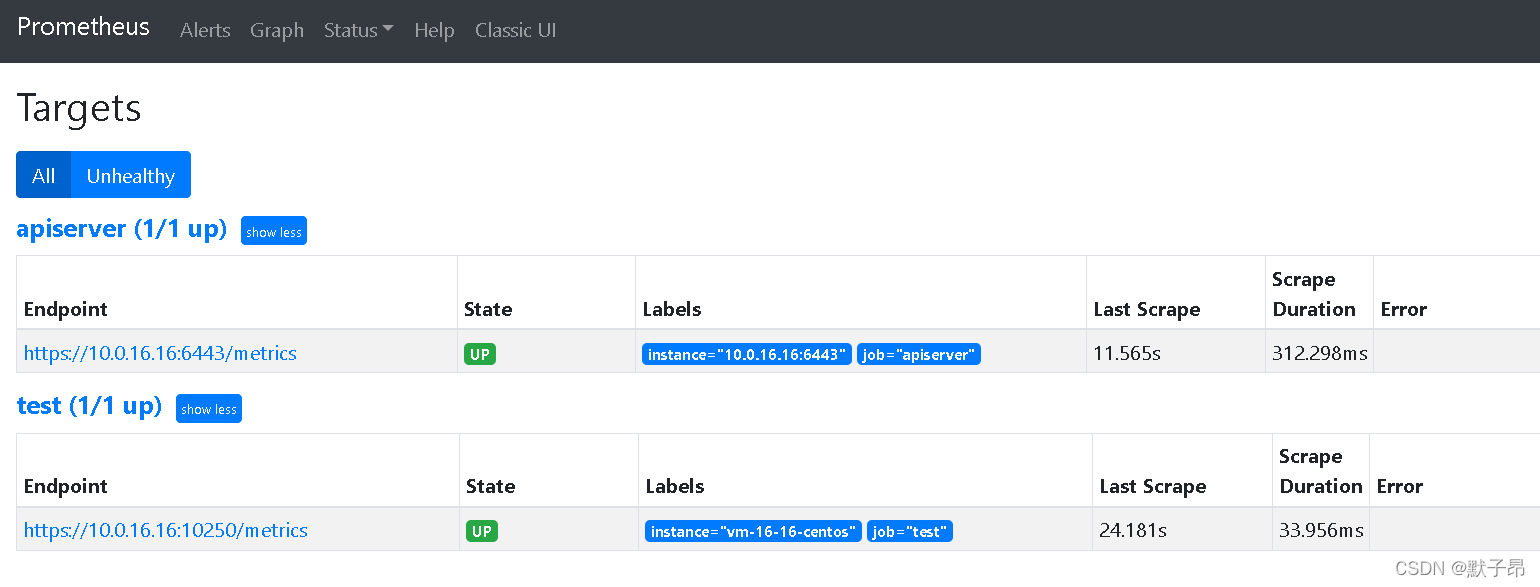

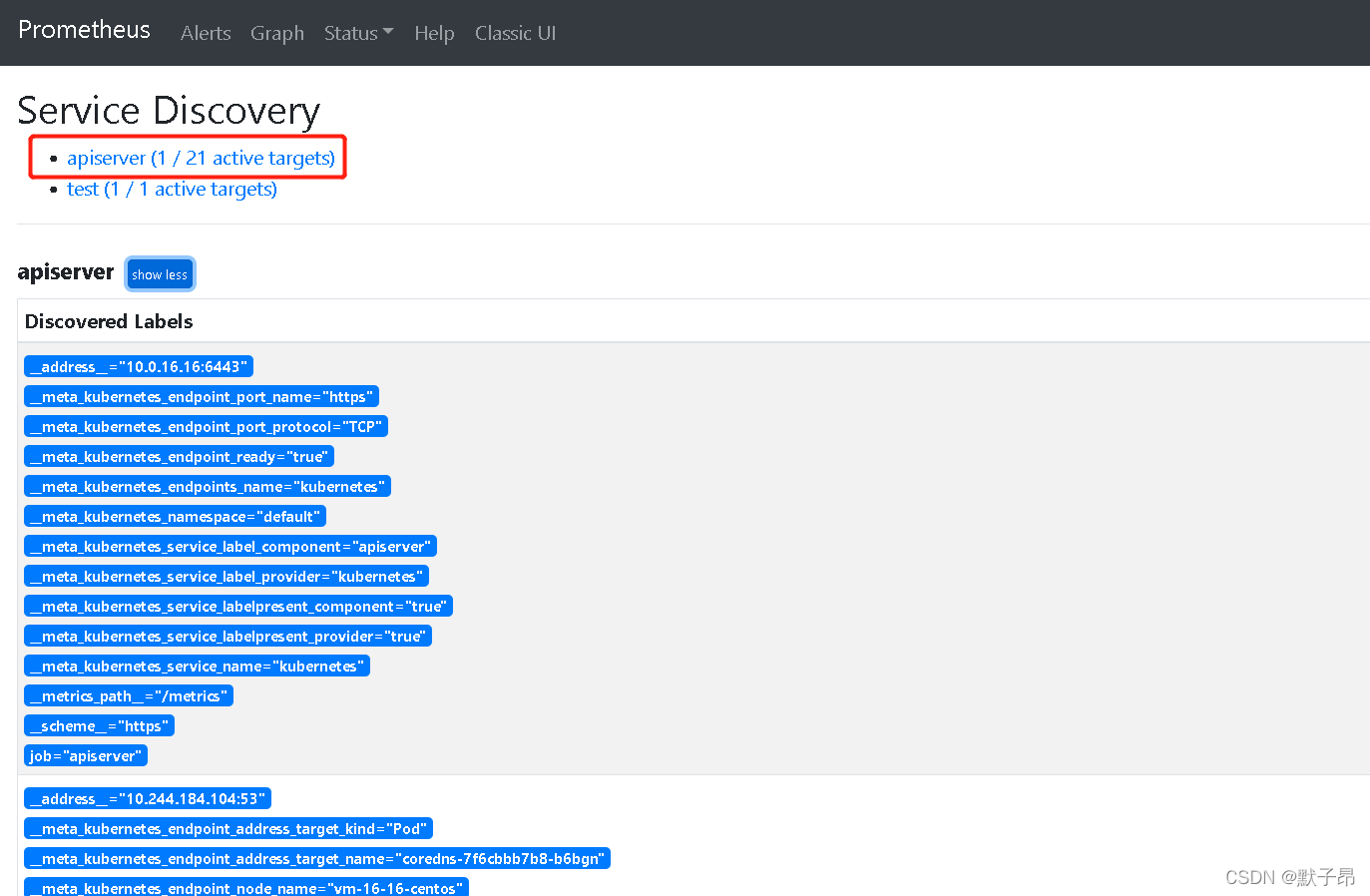

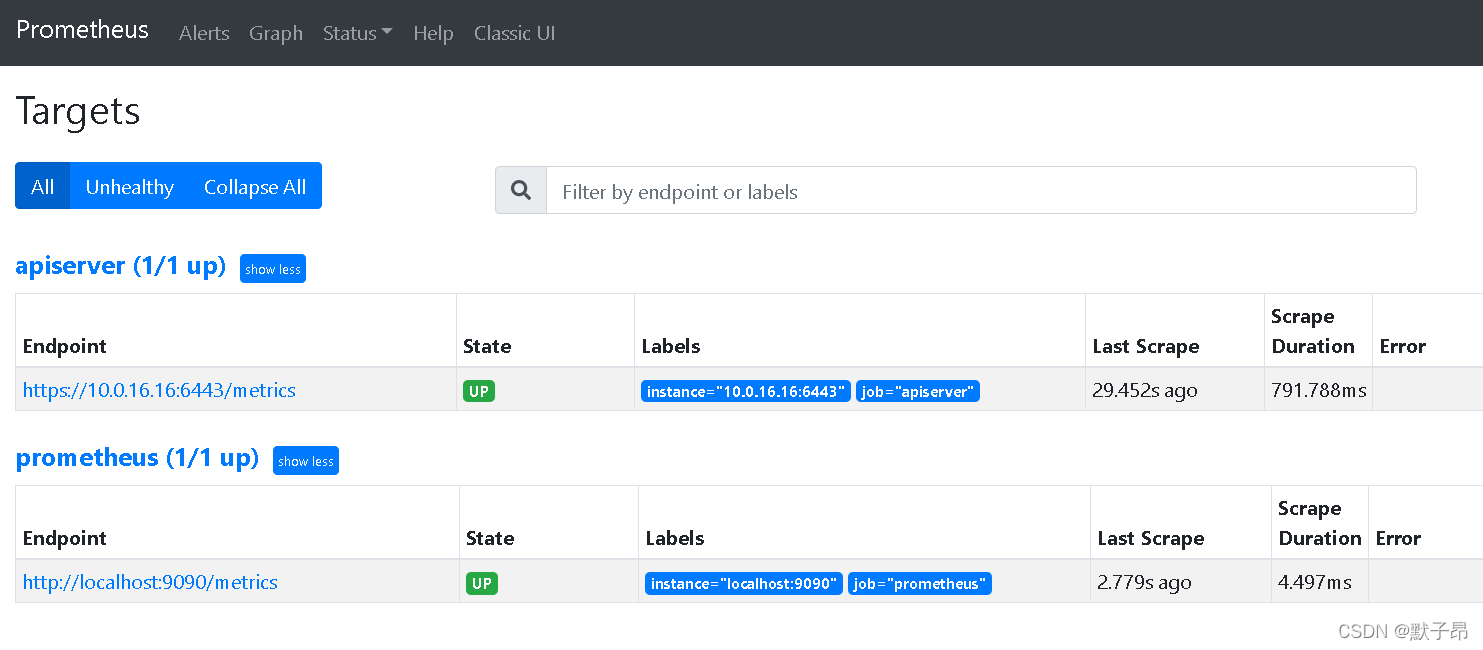

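On the service discovery page we can see that every target that does not match these three labels has been dropped.

The configuration for Prometheus in a container is largely the same as on the host. The main thing to watch is the certificates.

Below we deploy Prometheus as a container and use it to monitor the resources of the whole K8s cluster.

II. Monitoring the cluster with Prometheus in a container

1. Deploying the Prometheus container

To avoid conflicts, first stop the Prometheus service on the host.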

systemctl stop prometheus

Create the Prometheus manifest (you can copy it as-is)

cat > prometheus-dev.yaml <<EOF
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
  - apiGroups: [""]
    resources:
      - nodes
      - nodes/proxy
      - services
      - endpoints
      - pods
    verbs: ["get", "list", "watch"]
  - apiGroups:
      - extensions
      - networking.k8s.io   # Ingress lives in this group on current Kubernetes versions
    resources:
      - ingresses
    verbs: ["get", "list", "watch"]
  - nonResourceURLs: ["/metrics"]
    verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
  - kind: ServiceAccount
    name: prometheus
    namespace: kube-system
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: prometheus
  name: prometheus
  namespace: kube-system
spec:
  ports:
    - port: 9090
      targetPort: 9090
  selector:
    app: prometheus
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: prometheus-deployment
  name: prometheus
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
        - image: prom/prometheus
          name: prometheus
          command:
            - "/bin/prometheus"
          args:
            - "--web.enable-lifecycle"   # allows reloading the config with curl
            - "--config.file=/etc/prometheus/prometheus.yml"
            - "--storage.tsdb.path=/prometheus"
            - "--storage.tsdb.retention.time=24h"   # replaces the deprecated --storage.tsdb.retention
          ports:
            - containerPort: 9090
              protocol: TCP
              hostPort: 9090
          volumeMounts:
            - mountPath: "/prometheus"
              name: data
            - mountPath: "/etc/prometheus"
              name: config-volume
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
            limits:
              cpu: 500m
              memory: 2500Mi
      serviceAccountName: prometheus
      volumes:
        - name: data
          emptyDir: {}
        - name: config-volume
          configMap:
            name: prometheus-config
EOF

Create the ConfigMap

cat > cm.yaml <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-system
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
EOF

Deploy

kubectl apply -f cm.yaml

kubectl apply -f prometheus-dev.yaml

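2. Monitoring Prometheus itself

vi cm.yaml

    scrape_configs:
      - job_name: 'prometheus'
        scrape_interval: 5s
        static_configs:
          - targets: ['localhost:9090']   # nothing special here: Prometheus scrapes itself; optional

Reload

kubectl apply -f cm.yaml

curl -XPOST http://10.0.16.16:9090/-/reload   # the ConfigMap mounted in the Pod can take a while to update, so you may need to run this more than once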

3. Monitoring the apiserver endpoint

We already wrote this job for the host. Copy it over and change the credentials.

vi cm.yaml

      - job_name: apiserver
        scrape_interval: 30s
        scheme: https
        kubernetes_sd_configs:
          - role: endpoints
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          insecure_skip_verify: true
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - source_labels: [__meta_kubernetes_namespace,__meta_kubernetes_service_name,__meta_kubernetes_endpoint_port_name]
            action: keep
            regex: default;kubernetes;https

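Configuration notes

      - job_name: apiserver
        scrape_interval: 30s
        scheme: https           # inside the cluster, no client certificate is needed for scraping
        kubernetes_sd_configs:
          - role: endpoints     # no api_server here: Prometheus uses the in-cluster configuration
        tls_config:             # K8s mounts the ServiceAccount CA and token into the Pod automatically;
                                # the paths below are the standard ones, so just copy them
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          insecure_skip_verify: true
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - source_labels: [__meta_kubernetes_namespace,__meta_kubernetes_service_name,__meta_kubernetes_endpoint_port_name]
            action: keep
            regex: default;kubernetes;https

Reload the configuration

kubectl apply -f cm.yaml

curl -XPOST http://10.0.16.16:9090/-/reload

# If the targets still do not show up, delete the Prometheus Pod; the Deployment recreates it with the new config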

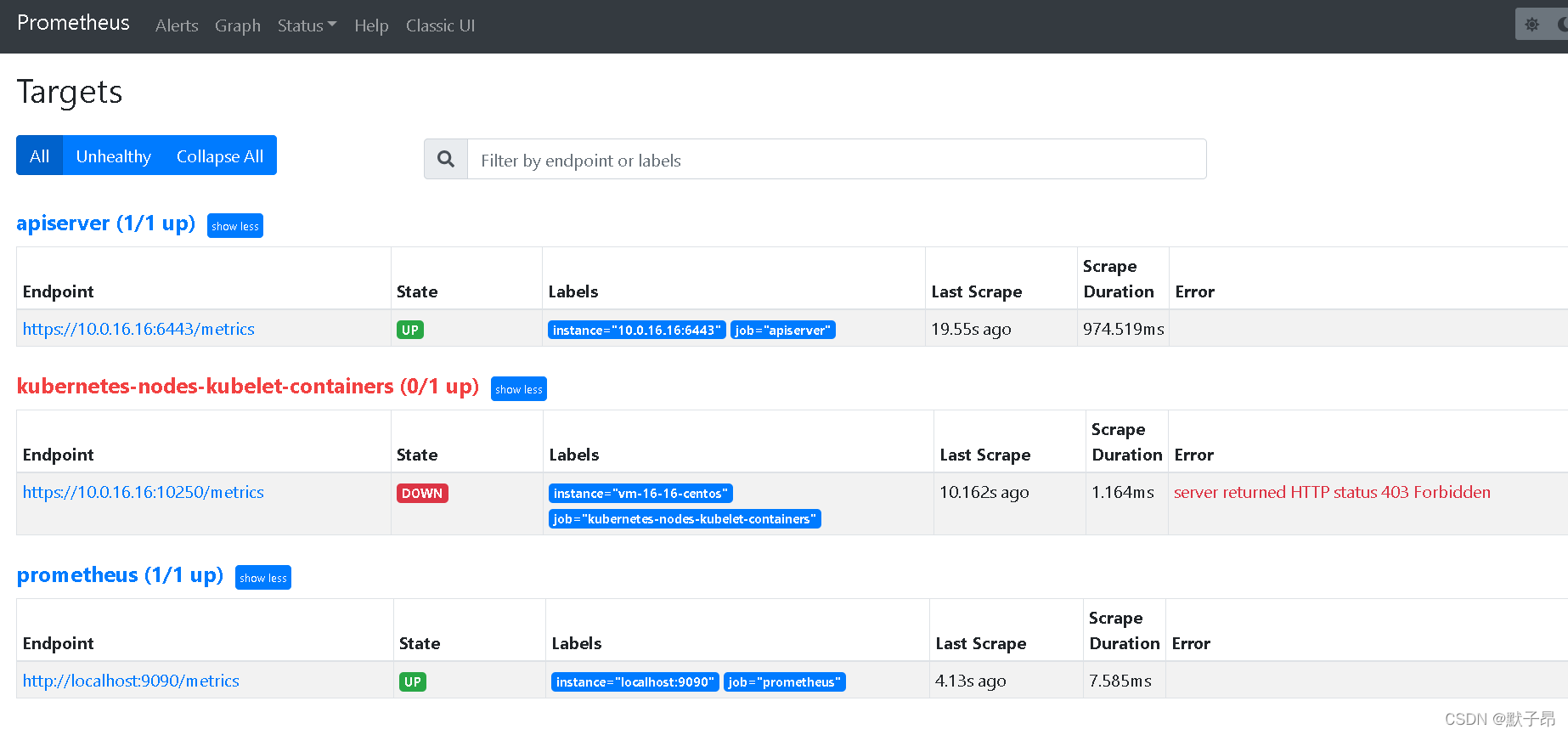

4. Monitoring the kubelet

In short, the kubelet exposes node metrics on ports 10250 and 10255.

Those ports are used less often directly now. The metrics are usually fetched through the API server's internal interface instead, which looks like this:

/api/v1/nodes/<hostname>/proxy/metrics

vi cm.yaml

      - job_name: kubernetes-nodes-kubelet-containers
        kubernetes_sd_configs:
          - role: node   # kubelet metrics live under /api/v1/nodes, so we use the node role
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          insecure_skip_verify: true
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

Deploy

kubectl apply -f cm.yaml

curl -XPOST http://10.0.16.16:9090/-/reload

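Thoughts

Odd. Why does this work on the host but fail for lack of permission inside K8s?

Perhaps it is because Prometheus, running inside the cluster, is reaching out to the kubelet interface on the node itself,

and the kubelet there does not accept the credentials that K8s injects into the Pod. Fetching separate certificates from outside would be a hassle.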

ψ(`∇´)ψ

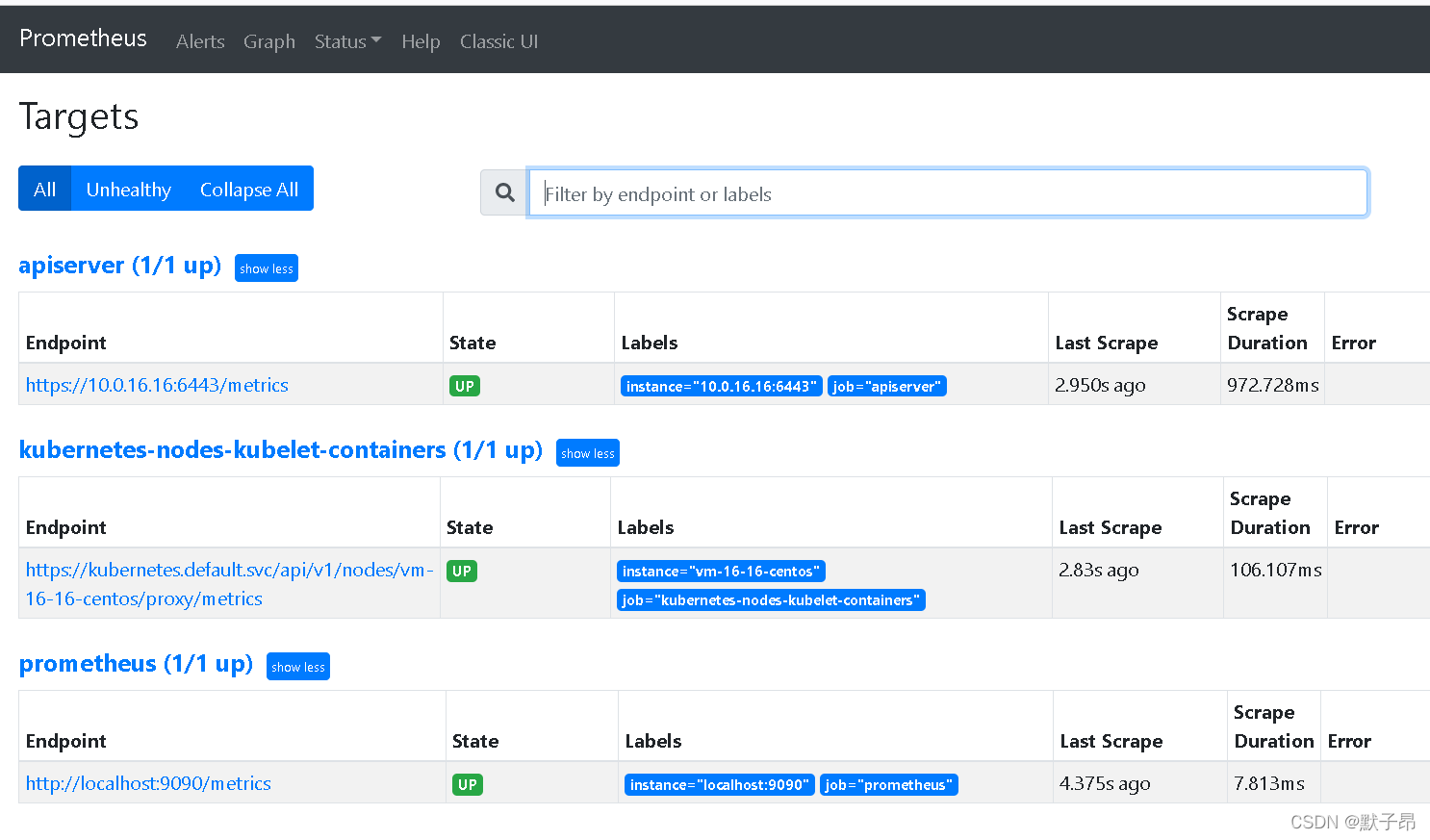
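One way to narrow this down is to ask the API server what the prometheus ServiceAccount is actually allowed to do. The sketch below wraps kubectl auth can-i with impersonation. sa_subject and sa_can_i are hypothetical helper names, and the check assumes your own kubectl user is allowed to impersonate ServiceAccounts (a cluster admin normally is).

```shell
#!/bin/sh
# Build the user name Kubernetes assigns to a ServiceAccount.
# Usage: sa_subject NAMESPACE NAME
sa_subject() {
  printf 'system:serviceaccount:%s:%s' "$1" "$2"
}

# Ask the API server whether the ServiceAccount may run VERB on RESOURCE.
# Usage: sa_can_i NAMESPACE NAME VERB RESOURCE
# Needs kubectl and a reachable cluster, so it is only defined here, not called.
sa_can_i() {
  kubectl auth can-i "$3" "$4" --as="$(sa_subject "$1" "$2")"
}

# Show the impersonated user name for the ServiceAccount created earlier.
sa_subject kube-system prometheus
echo
```

With the ClusterRole from this chapter, sa_can_i kube-system prometheus get nodes/proxy should answer yes. As far as I know, a kubelet that delegates authorization to the API server checks direct /metrics requests against the nodes/metrics subresource, which that role does not list, so sa_can_i kube-system prometheus get nodes/metrics would be expected to answer no.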

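Solution

I happened to notice that kubelet metrics can also be fetched through the API server, as follows:

/api/v1/nodes/<hostname>/proxy/metrics

So we need a way to point the scrape at this interface on the master.

Approach

1. First, change the built-in "__address__" label from its default value 10.0.16.16:10250 to the address of the master.

2. Find out the node's hostname. kubernetes_sd_configs happens to provide a meta label for exactly that:

__meta_kubernetes_node_name holds the name of the node.

3. Change the path Prometheus scrapes from the default /metrics to the interface path /api/v1/nodes/<hostname>/proxy/metrics.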

Add the relabeling rules

        relabel_configs:                                    # relabeling section
          - target_label: __address__
            replacement: kubernetes.default.svc:443         # the master address and port also work
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)                                     # capture the node name; referenced as ${1}
            target_label: __metrics_path__                  # the label that holds the metrics path
            replacement: /api/v1/nodes/${1}/proxy/metrics   # the new metrics path
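To see what these two rules produce, here is a rough imitation in shell, with sed standing in for the relabeling engine. The node name vm-16-16-centos is the one from my cluster, and sed's \1 plays the role of ${1}. This only mimics the rules for one target; it is not how Prometheus evaluates them internally.

```shell
#!/bin/sh
# Rule 1: no source_labels, so the default regex always matches and
# __address__ is simply overwritten with the replacement.
address='kubernetes.default.svc:443'

# Rule 2: (.+) captures the node name and inserts it into the new path.
node_name='vm-16-16-centos'
metrics_path=$(printf '%s\n' "$node_name" |
  sed -E 's#^(.+)$#/api/v1/nodes/\1/proxy/metrics#')

# scheme + __address__ + __metrics_path__ give the URL that is scraped.
echo "https://${address}${metrics_path}"
```

If you want to confirm the path before reloading Prometheus, kubectl get --raw followed by the value of metrics_path fetches the same data through the API server.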

Reload the configuration

kubectl apply -f cm.yaml

curl -XPOST http://10.0.16.16:9090/-/reload

The screenshot shows that we now get the host's kubelet metrics through the cluster's internal API:

https://kubernetes.default.svc/api/v1/nodes/vm-16-16-centos/proxy/metrics

Replacing kubernetes.default.svc:443 with the master address works as well.

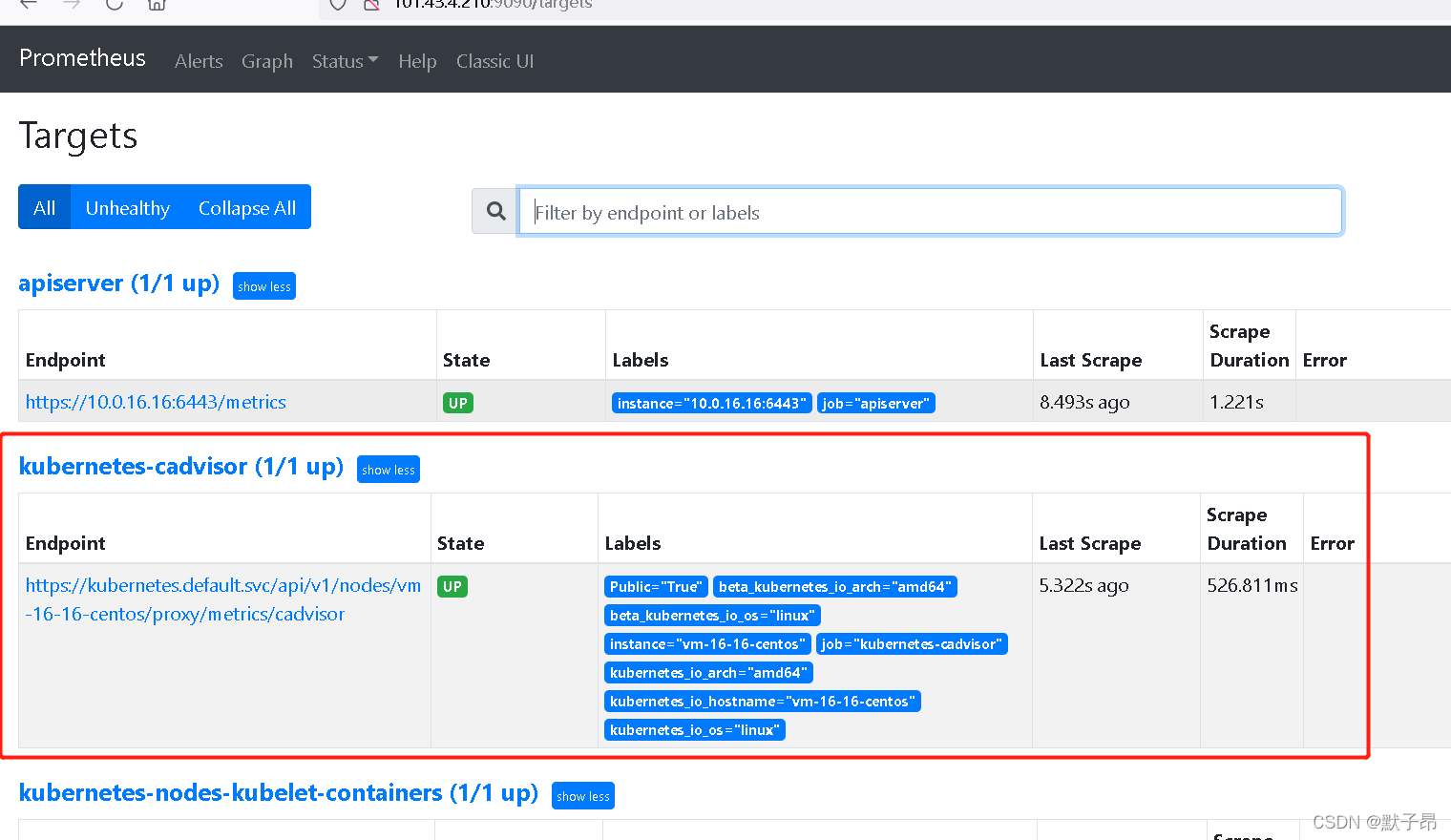

5. Monitoring cAdvisor

cAdvisor is built into the kubelet, so it is scraped in much the same way as the kubelet. Its interface is:

/api/v1/nodes/<hostname>/proxy/metrics/cadvisor

vi cm.yaml

      - job_name: 'kubernetes-cadvisor'
        kubernetes_sd_configs:
          - role: node
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)   # copy all of the node's labels onto the target
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor   # only the path differs

Reload the configuration

kubectl apply -f cm.yaml

curl -XPOST http://10.0.16.16:9090/-/reload
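The labelmap rule in the cAdvisor job is easy to misread, so here is a small imitation of it. It takes name=value lines, keeps only those whose name matches __meta_kubernetes_node_label_(.+), and renames them to the captured part. labelmap_node_labels is a hypothetical helper and the sample labels are illustrative; real node labels such as kubernetes.io/hostname arrive with the dots and slashes already turned into underscores.

```shell
#!/bin/sh
# Imitate `action: labelmap` with regex __meta_kubernetes_node_label_(.+):
# matching labels are copied to a new label named after the capture group.
# Reads name=value lines on stdin and prints only the mapped labels.
labelmap_node_labels() {
  sed -E -n 's/^__meta_kubernetes_node_label_([^=]+)=(.*)$/\1=\2/p'
}

printf '%s\n' \
  '__meta_kubernetes_node_label_kubernetes_io_hostname=vm-16-16-centos' \
  '__meta_kubernetes_node_label_kubernetes_io_os=linux' \
  '__meta_kubernetes_node_name=vm-16-16-centos' |
  labelmap_node_labels
```

The third input line is not a node label, so it produces no mapped label. In Prometheus the original __meta_ labels are discarded once relabeling finishes, which is why the copy is needed to see them on the scraped series.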

6. Monitoring node-exporter

cat > node-exporter.yaml << EOF
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: kube-system
  labels:
    k8s-app: node-exporter
spec:
  selector:
    matchLabels:
      k8s-app: node-exporter
  template:
    metadata:
      labels:
        k8s-app: node-exporter
    spec:
      containers:
        - image: prom/node-exporter:v1.0.1
          name: node-exporter
          ports:
            - containerPort: 9100
              protocol: TCP
              name: http
              hostPort: 9100
          volumeMounts:
            - mountPath: /root-disk
              name: root-disk
              readOnly: true
            - mountPath: /docker-disk
              name: docker-disk
              readOnly: true
      volumes:
        - name: root-disk
          hostPath:
            path: /
            type: ""
        - name: docker-disk
          hostPath:
            path: /var/lib/docker
            type: ""
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: node-exporter
  name: node-exporter
  namespace: kube-system
spec:
  ports:
    - name: http
      port: 9100
      protocol: TCP
  selector:
    k8s-app: node-exporter
EOF

Deploy

kubectl apply -f node-exporter.yaml

Add the scrape configuration

vi cm.yaml

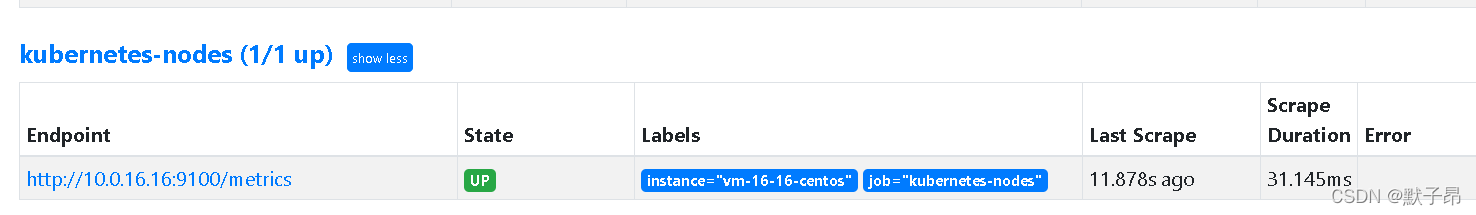

      - job_name: 'kubernetes-nodes'
        scrape_interval: 30s
        scrape_timeout: 10s
        kubernetes_sd_configs:
          - role: node
        scheme: http                     # node-exporter serves plain HTTP, so the tls_config below has no effect
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - source_labels: [__address__]
            regex: '(.*):10250'          # match each node's IP
            replacement: '${1}:9100'     # reuse the IP with port 9100 (node-exporter is exposed on the host)
            target_label: __address__    # replace the scrape address so it points straight at node-exporter
            action: replace

Reload the configuration

kubectl apply -f cm.yaml

curl -XPOST http://10.0.16.16:9090/-/reload
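The address rewrite in this job can be tried out on its own. The sketch below applies the same regex and replacement with sed. rewrite_to_node_exporter is a hypothetical helper, and the second address is made up to show what happens when the regex does not match.

```shell
#!/bin/sh
# Imitate the replace rule: regex '(.*):10250', replacement '${1}:9100'.
# An address that does not end in :10250 is passed through unchanged,
# just as a replace rule leaves the target label alone when the regex fails.
rewrite_to_node_exporter() {
  printf '%s\n' "$1" | sed -E 's/^(.*):10250$/\1:9100/'
}

rewrite_to_node_exporter '10.0.16.16:10250'   # kubelet address from node discovery
rewrite_to_node_exporter '10.0.16.17:8080'    # no match, stays as it is
```

The first call prints 10.0.16.16:9100, which is the address Prometheus ends up scraping for my single node.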

I only have a single node; you will see more targets ( •̀ ω •́ )✧

The complete ConfigMap is as follows

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-system
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
    scrape_configs:
      - job_name: 'prometheus'
        scrape_interval: 5s
        static_configs:
          - targets: ['localhost:9090']   # nothing special here: Prometheus scrapes itself; optional
      - job_name: apiserver
        scrape_interval: 30s
        scheme: https
        kubernetes_sd_configs:
          - role: endpoints
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          insecure_skip_verify: true
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - source_labels: [__meta_kubernetes_namespace,__meta_kubernetes_service_name,__meta_kubernetes_endpoint_port_name]
            action: keep
            regex: default;kubernetes;https
      - job_name: kubernetes-nodes-kubelet-containers
        kubernetes_sd_configs:
          - role: node   # kubelet metrics live under /api/v1/nodes, so we use the node role
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          insecure_skip_verify: true
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:   # relabeling section
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics
      - job_name: 'kubernetes-cadvisor'
        kubernetes_sd_configs:
          - role: node
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)   # copy the node's labels; the rest mirrors the kubelet job
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor   # only the path differs
      - job_name: 'kubernetes-nodes'
        scrape_interval: 30s
        scrape_timeout: 10s
        kubernetes_sd_configs:
          - role: node
        scheme: http
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - source_labels: [__address__]
            regex: '(.*):10250'          # match each node's IP
            replacement: '${1}:9100'     # reuse the IP with port 9100 (node-exporter is exposed on the host)
            target_label: __address__    # replace the scrape address so it points straight at node-exporter
            action: replace