How to Extend the Post-Processing Volume Component in Unity URP

After upgrading to Unity 2019.4, you may have noticed that Unity's original Post Processing plugin no longer seems to work when the project uses URP (the Universal Render Pipeline).

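The reason is that, as of Unity 2019.4, URP has screen-space post-processing built in. It is easy to use: right-click in the Hierarchy view and choose Volume/Global Volume, and a Global Volume GameObject appears in the Hierarchy. Select it, and the Inspector shows a Volume component, which is the core component behind URP's post-processing.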

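In the Inspector, the Volume component has a Profile field that expects a volume profile asset. This asset stores the post-processing effects we add to the scene together with their parameters, and the Volume reads it to produce the result. Clicking the New button makes Unity create a folder named after the current scene, next to the scene file, and generate a profile asset inside it. Alternatively, right-click in the Project view and choose Create/Volume Profile to generate a profile asset in the current folder.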

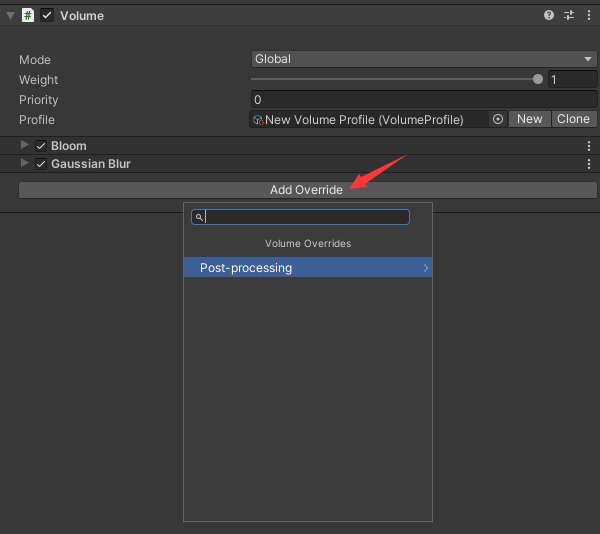

Once a profile is assigned to the Volume, the Inspector changes as shown below:

Volume Overrides

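A Volume Override is the basic unit that stores the configuration of one post-processing effect; a profile asset is really just a collection of Volume Overrides. We can of course create several profile assets, and the Volume Framework manages them in a uniform way. Here is how to add a post-processing effect through a Volume Override.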

Click Add Override/Post-processing/Bloom to add a Bloom override to the Inspector.

After setting the parameters, compare the result before and after:

Besides Bloom, Unity provides another 15 post-processing effects; see Unity's official documentation for the full list.
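The same overrides can also be driven from a script. The following is a minimal sketch, assuming a Global Volume whose profile already contains a Bloom override. The class name `BloomIntensityDriver` and the `targetIntensity` field are made up for illustration and are not part of URP.

```csharp
using UnityEngine;
using UnityEngine.Rendering;
using UnityEngine.Rendering.Universal;

// Hypothetical helper: attach it to the same GameObject as the Global Volume.
[RequireComponent(typeof(Volume))]
public class BloomIntensityDriver : MonoBehaviour
{
    [Min(0f)] public float targetIntensity = 2f; // illustrative value

    Bloom m_Bloom;

    void Start()
    {
        var volume = GetComponent<Volume>();

        // Volume.profile hands back an instance copy, so the asset on disk is not modified.
        // TryGet returns false when the profile has no Bloom override.
        if (!volume.profile.TryGet(out m_Bloom))
            Debug.LogWarning("No Bloom override found on this Volume's profile.");
    }

    void Update()
    {
        if (m_Bloom == null) return;

        // Override() sets the value and marks the parameter as overridden.
        m_Bloom.intensity.Override(targetIntensity);
    }
}
```

Attach the script to the Global Volume GameObject and change `targetIntensity` in the Inspector during Play mode to see the bloom strength change.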

Adding a Custom Override

Unity ships with many built-in post-processing effects, but in real projects they do not always cover what we need, and that is when we want to extend Volume Override. Let's look at how to do that.

Before going further, let's look at the directory layout of the URP source:

    |--Packages
        |--com.unity.render-pipelines.universal
            |--Editor
            |--Runtime
                |--...
                |--Materials
                |--Overrides
                |--Passes
                |--...
            |--...
            |--Shaders
            |--...

The code for URP's built-in post-processing effects lives in the Overrides folder:

Taking Bloom as an example, double-click it to open the source:

using System;

namespace UnityEngine.Rendering.Universal

{

[Serializable, VolumeComponentMenu("Post-processing/Bloom")]

public sealed class Bloom : VolumeComponent, IPostProcessComponent

{

[Tooltip("Filters out pixels under this level of brightness. Value is in gamma-space.")]

public MinFloatParameter threshold = new MinFloatParameter(0.9f, 0f);

[Tooltip("Strength of the bloom filter.")]

public MinFloatParameter intensity = new MinFloatParameter(0f, 0f);

[Tooltip("Changes the extent of veiling effects.")]

public ClampedFloatParameter scatter = new ClampedFloatParameter(0.7f, 0f, 1f);

[Tooltip("Clamps pixels to control the bloom amount.")]

public MinFloatParameter clamp = new MinFloatParameter(65472f, 0f);

[Tooltip("Global tint of the bloom filter.")]

public ColorParameter tint = new ColorParameter(Color.white, false, false, true);

[Tooltip("Use bicubic sampling instead of bilinear sampling for the upsampling passes. This is slightly more expensive but helps getting smoother visuals.")]

public BoolParameter highQualityFiltering = new BoolParameter(false);

[Tooltip("Dirtiness texture to add smudges or dust to the bloom effect.")]

public TextureParameter dirtTexture = new TextureParameter(null);

[Tooltip("Amount of dirtiness.")]

public MinFloatParameter dirtIntensity = new MinFloatParameter(0f, 0f);

public bool IsActive() => intensity.value > 0f;

public bool IsTileCompatible() => false;

}

}

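First, the Bloom class carries an attribute, VolumeComponentMenu, whose job is to add Bloom to the Add Override menu. Second, every post-processing effect must derive from VolumeComponent and implement the IPostProcessComponent interface, so that the new effect is managed by the Volume Framework like the built-in ones. Finally, the fields of Bloom are not ordinary C# or Unity data types: the Volume Framework wraps them in VolumeParameter types, which carry a per-parameter override flag, let values be blended between volumes, and give the Inspector its uniform override UI.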
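To make the role of those wrapper types more concrete, here is a deliberately simplified, standalone model of the idea. `SimpleParameter`, `SimpleFloatParameter` and `BlendTowards` are hypothetical names used only for this sketch; they are not Unity's classes, and the real `VolumeParameter<T>` in the Core RP package does considerably more.

```csharp
using System;

// Simplified stand-in for the idea behind a volume parameter (hypothetical, not Unity's code):
// a value plus a flag saying whether this volume overrides it.
public class SimpleParameter<T>
{
    public T value;
    public bool overrideState;

    public SimpleParameter(T value, bool overrideState = false)
    {
        this.value = value;
        this.overrideState = overrideState;
    }
}

public class SimpleFloatParameter : SimpleParameter<float>
{
    public SimpleFloatParameter(float value, bool overrideState = false)
        : base(value, overrideState) { }

    // Blend the current value towards another volume's value by weight t in [0, 1],
    // but only if that other volume actually overrides the parameter.
    public void BlendTowards(SimpleFloatParameter other, float t)
    {
        if (!other.overrideState) return;
        value = value + (other.value - value) * t;
    }
}

public static class ParameterDemo
{
    public static void Main()
    {
        var stackValue = new SimpleFloatParameter(0f);        // default held by the stack
        var fromVolume = new SimpleFloatParameter(2f, true);  // overridden by a volume
        var untouched  = new SimpleFloatParameter(5f, false); // present but not overridden

        stackValue.BlendTowards(fromVolume, 0.5f); // camera is halfway into the volume
        stackValue.BlendTowards(untouched, 1f);    // ignored: not overridden

        Console.WriteLine(stackValue.value); // prints 1
    }
}
```

The point of the sketch is that a plain `float` could not express "this volume leaves the value alone", which is why each field needs a wrapper with its own override state.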

We can now write our own post-processing effect modeled on this code. Taking Gaussian blur as an example, here is the code:

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

using UnityEngine.Rendering;

using UnityEngine.Rendering.Universal;

using System;

namespace UnityEngine.Experiemntal.Rendering.Universal

{

[Serializable,VolumeComponentMenu("Addition-post-processing/GaussianBlur")]

public class GaussianBlur : VolumeComponent, IPostProcessComponent

{

public GaussianFilerModeParameter filterMode = new GaussianFilerModeParameter(FilterMode.Bilinear);

public ClampedIntParameter blurCount = new ClampedIntParameter(1, 1, 4);

public ClampedIntParameter downSample = new ClampedIntParameter(1, 1, 4);

public ClampedFloatParameter indensity = new ClampedFloatParameter(0f, 0, 20);

//public

public bool IsActive()

{

return active && indensity.value != 0;

}

public bool IsTileCompatible()

{

return false;

}

}

[Serializable]

public sealed class GaussianFilerModeParameter : VolumeParameter<FilterMode> { public GaussianFilerModeParameter(FilterMode value, bool overrideState = false) : base(value, overrideState) { } }

}

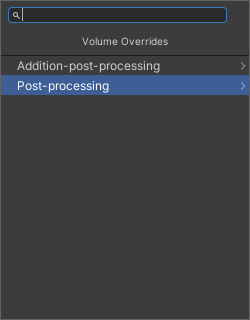

Now let's see how the Add Override menu has changed.

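Gaussian blur now appears in the Add Override menu, but it has no effect yet, because so far we have only declared the parameters it needs; the actual rendering is not implemented. Next, let's trace how URP itself implements post-processing.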

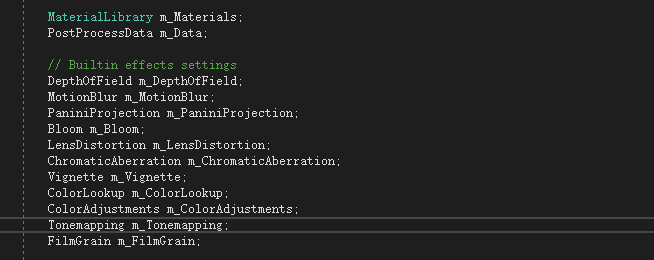

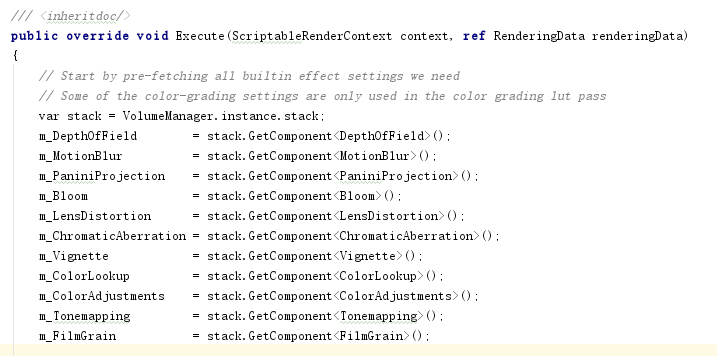

Open PostProcessPass.cs under Runtime/Passes, and you will find that all of URP's built-in post-processing effects are declared here:

Reading further down, these instances are initialized in the Execute(ScriptableRenderContext context, ref RenderingData renderingData) function:

The post-processing itself is finally rendered in the void Render(CommandBuffer cmd, ref RenderingData renderingData) function:

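The SetupBloom method contains the actual implementation. Readers who are interested can explore it on their own; this article will not go into it further.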

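So, to add our own post-processing effect, do we have to modify the URP package? Common sense says that is a bad idea. Ideally our custom effect code is fully decoupled from the official package, and Unity gives us a tool for exactly that: the Renderer Feature. A Renderer Feature lets us extend rendering with extra passes and insert command buffers at various stages of rendering; the insertion point is determined by RenderPassEvent. Let's create one.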

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

using UnityEngine.Rendering;

using UnityEngine.Rendering.Universal;

namespace UnityEngine.Experiemntal.Rendering.Universal

{

public class AdditionPostProcessRendererFeature : ScriptableRendererFeature

{

public RenderPassEvent evt = RenderPassEvent.AfterRenderingTransparents;

public AdditionalPostProcessData postData;

AdditionPostProcessPass postPass;

public override void AddRenderPasses(ScriptableRenderer renderer, ref RenderingData renderingData)

{

var cameraColorTarget = renderer.cameraColorTarget;

var cameraDepth = renderer.cameraDepth;

var dest = RenderTargetHandle.CameraTarget;

if (postData == null)

return;

postPass.Setup(evt, cameraColorTarget, cameraDepth, dest, postData);

renderer.EnqueuePass(postPass);

}

public override void Create()

{

postPass = new AdditionPostProcessPass();

}

}

}

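Every Renderer Feature must derive from the abstract class ScriptableRendererFeature and implement AddRenderPasses and Create. When a ScriptableRendererFeature object is initialized, Create is called first, and that is where we construct a ScriptableRenderPass instance. In AddRenderPasses we then set the pass up and enqueue it for rendering. As you may have gathered, a ScriptableRendererFeature and a ScriptableRenderPass are meant to be used together. With AdditionPostProcessRendererFeature created, we can add the new Renderer Feature to the ForwardRenderer asset.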

Next we need to implement AdditionPostProcessPass.

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

using UnityEngine.Rendering;

using UnityEngine.Rendering.Universal;

namespace UnityEngine.Experiemntal.Rendering.Universal

{

/// <summary>

/// Extends URP's screen-space post-processing

/// </summary>

public class AdditionPostProcessPass : ScriptableRenderPass

{

/// <summary>

/// Gaussian blur

/// </summary>

RenderTargetIdentifier m_ColorAttachment;

RenderTargetIdentifier m_CameraDepthAttachment;

RenderTargetHandle m_Destination;

const string k_RenderPostProcessingTag = "Render AdditionalPostProcessing Effects";

const string k_RenderFinalPostProcessingTag = "Render Final AdditionalPostProcessing Pass";

// additional effect settings

GaussianBlur m_GaussianBlur;

MaterialLibrary m_Materials;

AdditionalPostProcessData m_Data;

RenderTargetHandle m_TemporaryColorTexture01;

RenderTargetHandle m_TemporaryColorTexture02;

RenderTargetHandle m_TemporaryColorTexture03;

public AdditionPostProcessPass()

{

m_TemporaryColorTexture01.Init("_TemporaryColorTexture1");

m_TemporaryColorTexture02.Init("_TemporaryColorTexture2");

m_TemporaryColorTexture03.Init("_TemporaryColorTexture3");

}

public override void Execute(ScriptableRenderContext context, ref RenderingData renderingData)

{

var stack = VolumeManager.instance.stack;

m_GaussianBlur = stack.GetComponent<GaussianBlur>();

var cmd = CommandBufferPool.Get(k_RenderPostProcessingTag);

Render(cmd, ref renderingData);

context.ExecuteCommandBuffer(cmd);

CommandBufferPool.Release(cmd);

}

public void Setup(RenderPassEvent @event, RenderTargetIdentifier source,RenderTargetIdentifier cameraDepth, RenderTargetHandle destination, AdditionalPostProcessData data)

{

m_Data = data;

renderPassEvent = @event;

m_ColorAttachment = source;

m_CameraDepthAttachment = cameraDepth;

m_Destination = destination;

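// Setup runs every frame, so build the materials only once instead of creating a new Material per frame

if (m_Materials == null) m_Materials = new MaterialLibrary(data);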

}

void Render(CommandBuffer cmd,ref RenderingData renderingData)

{

ref var cameraData = ref renderingData.cameraData;

if (m_GaussianBlur.IsActive() && !cameraData.isSceneViewCamera)

{

SetupGaussianBlur(cmd, ref renderingData, m_Materials.gaussianBlur);

}

}

public void SetupGaussianBlur(CommandBuffer cmd, ref RenderingData renderingData, Material blurMaterial)

{

RenderTextureDescriptor opaqueDesc = renderingData.cameraData.cameraTargetDescriptor;

opaqueDesc.width = opaqueDesc.width >> m_GaussianBlur.downSample.value;

opaqueDesc.height = opaqueDesc.height >> m_GaussianBlur.downSample.value;

opaqueDesc.depthBufferBits = 0;

cmd.GetTemporaryRT(m_TemporaryColorTexture01.id, opaqueDesc, m_GaussianBlur.filterMode.value);

cmd.GetTemporaryRT(m_TemporaryColorTexture02.id, opaqueDesc, m_GaussianBlur.filterMode.value);

cmd.GetTemporaryRT(m_TemporaryColorTexture03.id, opaqueDesc, m_GaussianBlur.filterMode.value);

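cmd.BeginSample("GaussianBlur");

// Copy the camera color into the downsampled working texture

cmd.Blit(this.m_ColorAttachment, m_TemporaryColorTexture03.Identifier());

for (int i = 0; i < m_GaussianBlur.blurCount.value; i++)

{

// NOTE: Material.SetVector takes effect immediately, while cmd.Blit is only recorded here and runs later,

// so both blits may end up using the last value set. If the blur looks one-directional, use one material per direction.

blurMaterial.SetVector("_offsets", new Vector4(0, m_GaussianBlur.indensity.value, 0, 0));

cmd.Blit(m_TemporaryColorTexture03.Identifier(), m_TemporaryColorTexture01.Identifier(), blurMaterial);

blurMaterial.SetVector("_offsets", new Vector4(m_GaussianBlur.indensity.value, 0, 0, 0));

cmd.Blit(m_TemporaryColorTexture01.Identifier(), m_TemporaryColorTexture02.Identifier(), blurMaterial);

// Feed the result back into texture 03 so that each iteration blurs the previous result

cmd.Blit(m_TemporaryColorTexture02.Identifier(), m_TemporaryColorTexture03.Identifier());

}

// Write the blurred image back to the camera color target and to the destination

cmd.Blit(m_TemporaryColorTexture03.Identifier(), m_ColorAttachment);

cmd.Blit(m_TemporaryColorTexture03.Identifier(), this.m_Destination.Identifier());

// The sample name must match the one passed to BeginSample

cmd.EndSample("GaussianBlur");

cmd.ReleaseTemporaryRT(m_TemporaryColorTexture01.id);

cmd.ReleaseTemporaryRT(m_TemporaryColorTexture02.id);

cmd.ReleaseTemporaryRT(m_TemporaryColorTexture03.id);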

}

}

}
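As a side note on the `width >> downSample` expression in SetupGaussianBlur: each step of the shift halves the resolution, so the working textures shrink quickly. The small standalone sketch below only reproduces that arithmetic; the class name `BlurSizing` and the 1920x1080 screen size are illustrative assumptions.

```csharp
using System;

public static class BlurSizing
{
    // Mirrors the `size >> downSample` expression used for the temporary textures.
    public static int Downsample(int size, int downSample) => size >> downSample;

    public static void Main()
    {
        int width = 1920, height = 1080; // illustrative screen size

        // downSample is clamped to the range 1..4 by the GaussianBlur component.
        for (int downSample = 1; downSample <= 4; downSample++)
        {
            int w = Downsample(width, downSample);
            int h = Downsample(height, downSample);
            Console.WriteLine($"downSample={downSample}: {w}x{h}");
        }
    }
}
```

Even the minimum setting of 1 blurs at half resolution in each dimension, which is a quarter of the pixels, so higher values trade sharpness for speed rather quickly.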

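When creating a ScriptableRenderPass we must override Execute; URP calls this method automatically to run our post-processing work. To make the custom effects easier to manage, we can follow URP's own approach and create a ScriptableObject that is mainly responsible for holding the shaders used by the custom effects. The code is as follows: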

using UnityEngine;

using System.Collections;

using System;

#if UNITY_EDITOR

using UnityEditor;

using UnityEditor.ProjectWindowCallback;

#endif

using UnityEngine.Rendering.Universal;

using UnityEngine.Rendering;

namespace UnityEngine.Experiemntal.Rendering.Universal

{

[Serializable]

public class AdditionalPostProcessData : ScriptableObject

{

#if UNITY_EDITOR

[System.Diagnostics.CodeAnalysis.SuppressMessage("Microsoft.Performance", "CA1812")]

[MenuItem("Assets/Create/Rendering/Universal Render Pipeline/Additional Post-process Data", priority = CoreUtils.assetCreateMenuPriority3 + 1)]

static void CreateAdditionalPostProcessData()

{

//ProjectWindowUtil.StartNameEditingIfProjectWindowExists(0, CreateInstance<CreatePostProcessDataAsset>(), "CustomPostProcessData.asset", null, null);

var instance = CreateInstance<AdditionalPostProcessData>();

AssetDatabase.CreateAsset(instance, string.Format("Assets/Settings/{0}.asset", typeof(AdditionalPostProcessData).Name));

Selection.activeObject = instance;

}

#endif

[Serializable]

public sealed class Shaders

{

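// Assign the Custom/GaussianBlur shader in the Inspector; Shader.Find should not be called from a field initializer of a serialized object

public Shader gaussianBlur;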

}

public Shaders shaders;

}

}

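Then, in the Project panel, right-click and choose Create/Rendering/Universal Render Pipeline/Additional Post-process Data. This creates an AdditionalPostProcessData.asset file in the Settings folder (the Assets/Settings folder has to exist already, since the script writes to that fixed path). Make sure the asset's Gaussian Blur shader field references Custom/GaussianBlur, then assign the asset to the AdditionPostProcessRendererFeature on the ForwardRenderer.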

AdditionPostProcessPass also references a MaterialLibrary object, whose job is to create, at runtime, the material each custom post-processing effect needs. Here is the source:

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

using UnityEngine.Rendering;

using UnityEngine.Rendering.Universal;

namespace UnityEngine.Experiemntal.Rendering.Universal

{

public class MaterialLibrary

{

public readonly Material gaussianBlur;

public MaterialLibrary(AdditionalPostProcessData data)

{

gaussianBlur = Load(data.shaders.gaussianBlur);

}

Material Load(Shader shader)

{

if (shader == null)

{

// MaterialLibrary is not a nested type, so DeclaringType would be null here; use the type's own name

Debug.LogError($"Missing shader. {GetType().Name} render pass will not execute. Check for missing reference in the renderer resources.");

return null;

}

else if (!shader.isSupported)

{

return null;

}

return CoreUtils.CreateEngineMaterial(shader);

}

internal void Cleanup()

{

CoreUtils.Destroy(gaussianBlur);

}

}

}

The last and most important part is the shader. Without further ado, here is the code:

Shader "Custom/GaussianBlur"

{

Properties

{

_MainTex("Base (RGB)", 2D) = "white" {}

_offsets("Offset",vector) = (0,0,0,0)

}

HLSLINCLUDE

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

//#include "Packages/com.unity.render-pipelines.universal/Shaders/PostProcessing/Common.hlsl"

CBUFFER_START(UnityPerMaterial)

float4 _MainTex_ST;

float4 _MainTex_TexelSize;

float4 _offsets;

CBUFFER_END

struct ver_blur{

float4 positionOS : POSITION;

float2 uv : TEXCOORD0;

UNITY_VERTEX_INPUT_INSTANCE_ID

};

struct v2f_blur{

float4 pos : SV_POSITION;

float2 uv : TEXCOORD0;

float4 uv01 : TEXCOORD1;

float4 uv23 : TEXCOORD2;

float4 uv45 : TEXCOORD3;

UNITY_VERTEX_OUTPUT_STEREO

};

TEXTURE2D(_MainTex);

SAMPLER(sampler_MainTex);

//vertex shader

v2f_blur vert_blur(ver_blur v)

{

v2f_blur o;

UNITY_SETUP_INSTANCE_ID(v);

UNITY_INITIALIZE_VERTEX_OUTPUT_STEREO(o);

o.pos = TransformObjectToHClip(v.positionOS.xyz);

o.uv = v.uv;

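// Scale the offset into texel units in a local variable instead of writing to the _offsets constant

float4 offsets = _offsets * _MainTex_TexelSize.xyxy;

o.uv01 = v.uv.xyxy + offsets.xyxy * float4(1, 1, -1, -1);

o.uv23 = v.uv.xyxy + offsets.xyxy * float4(1, 1, -1, -1) * 2.0;

o.uv45 = v.uv.xyxy + offsets.xyxy * float4(1, 1, -1, -1) * 3.0;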

return o;

}

//fragment shader

float4 frag_blur(v2f_blur i) : SV_Target

{

half4 color = half4(0,0,0,0);

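// Weighted average of the pixel itself and its neighbors to the left and right (or above and below, depending on the UVs passed in from the vertex shader)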

color = 0.4 * SAMPLE_TEXTURE2D(_MainTex,sampler_MainTex, i.uv);

color += 0.15 * SAMPLE_TEXTURE2D(_MainTex,sampler_MainTex, i.uv01.xy);

color += 0.15 * SAMPLE_TEXTURE2D(_MainTex,sampler_MainTex, i.uv01.zw);

color += 0.10 * SAMPLE_TEXTURE2D(_MainTex,sampler_MainTex, i.uv23.xy);

color += 0.10 * SAMPLE_TEXTURE2D(_MainTex,sampler_MainTex, i.uv23.zw);

color += 0.05 * SAMPLE_TEXTURE2D(_MainTex,sampler_MainTex, i.uv45.xy);

color += 0.05 * SAMPLE_TEXTURE2D(_MainTex,sampler_MainTex, i.uv45.zw);

return color;

}

ENDHLSL

// SubShader begins

SubShader

{

Tags {"RenderType"="Opaque" "RenderPipeline"="UniversalPipeline"}

LOD 100

ZTest Always Cull Off ZWrite Off

Pass

{

Name "Gaussian Blur"

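// Post-processing passes generally use the render states set above (ZTest Always, Cull Off, ZWrite Off)

// Use the vertex and fragment shaders defined above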

HLSLPROGRAM

#pragma vertex vert_blur

#pragma fragment frag_blur

ENDHLSL

}

}

// Post-processing shaders usually have no Fallback; if the shader is unsupported, the effect is simply not shown

}
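To see why frag_blur keeps the overall brightness unchanged, it helps to check its weights outside the shader. The standalone sketch below copies the seven weights from the fragment shader and lists the sample positions along one axis; the class name `BlurKernel` and the offset value of 2 are illustrative assumptions, and the weights are the article's hand-picked approximation rather than an exact Gaussian.

```csharp
using System;

public static class BlurKernel
{
    // Weights used by frag_blur: the center tap, then the taps at +/-1, +/-2 and +/-3 steps.
    const float CenterWeight = 0.4f;
    static readonly float[] PairWeights = { 0.15f, 0.10f, 0.05f };

    public static float TotalWeight()
    {
        float sum = CenterWeight;
        foreach (var w in PairWeights)
            sum += 2f * w; // each weight is applied on both sides of the center
        return sum;
    }

    // Sample positions (in texels) along one axis for a given _offsets component.
    public static float[] TapOffsets(float offset)
        => new[] { -3f * offset, -2f * offset, -offset, 0f, offset, 2f * offset, 3f * offset };

    public static void Main()
    {
        // The weights add up to 1 (within float rounding), so the blur neither brightens nor darkens.
        Console.WriteLine(Math.Abs(TotalWeight() - 1f) < 1e-5f);

        // A larger offset spreads the same seven taps further apart.
        Console.WriteLine(string.Join(", ", TapOffsets(2f)));
    }
}
```

Because the taps are spread by the offset rather than increased in number, large `indensity` values widen the blur cheaply but can start to show gaps between samples.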