1. In the Activity code:

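```kotlin
import android.graphics.Bitmap
import android.graphics.BitmapFactory
import android.graphics.ImageFormat
import android.graphics.Rect
import android.graphics.SurfaceTexture
import android.graphics.YuvImage
import android.hardware.Camera
import android.opengl.GLES11Ext
import java.io.ByteArrayOutputStream
import java.io.IOException

// Activity members
private var mCamera: Camera? = null
private val mWidth = GwApplication.DEFAULT_REMOTE_WIDTH_EXT
private val mHeight = GwApplication.DEFAULT_REMOTE_HEIGHT_EXT
// NV21 buffers sized for mWidth x mHeight
private var imgData: ImageData = ImageData(mWidth, mHeight)
// Bitmap currently shown in ivCamera (used by showPic() and releaseBitmap())
private var mBitmap: Bitmap? = null
```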

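```kotlin
/**
 * Initialize the camera.
 */
private fun initCamera() {
    //log("====initCamera()")
    try {
        val camera = Camera.open(GwApplication.DEFAULT_CR_CAMERA)
        mCamera = camera
        val params = camera.parameters
        // NV21 is the default preview format and is always supported
        params.previewFormat = ImageFormat.NV21
        // Must be one of params.supportedPreviewSizes
        params.setPreviewSize(mWidth, mHeight)
        //params.pictureFormat = ImageFormat.NV21
        params.setPictureSize(mWidth, mHeight)
        //params.zoom = 0
        //params.setRotation(0)
        // FPS values are scaled by 1000 (10 to 15 fps), and the pair must be one of
        // params.supportedPreviewFpsRange, otherwise setParameters() throws
        params.setPreviewFpsRange(10000, 15000)
        camera.parameters = params
    } catch (ex: RuntimeException) {
        // Camera.open() and setParameters() report failures as RuntimeException
        ex.printStackTrace()
    }
}
```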
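Because `setPreviewFpsRange()` only accepts a pair that appears in `getSupportedPreviewFpsRange()` (a `List<int[]>` of `{min, max}` values scaled by 1000), hard-coding a range can make `setParameters()` fail on some devices. The sketch below shows one way to pick the supported range closest to a desired one, assuming "closest" means the smallest sum of differences of the two ends; `FpsRangePicker` and `pick()` are hypothetical names, not Android APIs.

```java
import java.util.List;

/** Hypothetical helper: picks a supported preview FPS range (values scaled by 1000). */
final class FpsRangePicker {

    private FpsRangePicker() {}

    /**
     * Returns the supported {min, max} pair closest to the desired one, measured as
     * |min - desiredMin| + |max - desiredMax|. Returns null if the list is empty.
     * On ties the earlier entry wins.
     */
    static int[] pick(List<int[]> supported, int desiredMin, int desiredMax) {
        int[] best = null;
        long bestDistance = Long.MAX_VALUE;
        for (int[] range : supported) {
            long distance = Math.abs((long) range[0] - desiredMin)
                    + Math.abs((long) range[1] - desiredMax);
            if (distance < bestDistance) {
                bestDistance = distance;
                best = range;
            }
        }
        return best;
    }
}
```

Usage would look like `int[] r = FpsRangePicker.pick(params.getSupportedPreviewFpsRange(), 10000, 15000);` followed by `params.setPreviewFpsRange(r[0], r[1])` when `r` is not null. A different distance rule (for example, preferring ranges whose maximum does not exceed the target) may suit other use cases better.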

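```kotlin
// Keep a reference so the SurfaceTexture is not garbage-collected while the preview runs
private var mSurfaceTexture: SurfaceTexture? = null

/**
 * Start listening for frames and start the preview.
 */
private fun setCallback() {
    //log("====setCallback()")
    val camera = mCamera ?: return
    try {
        // The SurfaceTexture only receives the preview frames; nothing is displayed.
        // The constructor expects a GL texture name: GL_TEXTURE_EXTERNAL_OES is just a
        // placeholder value here, since updateTexImage() is never called.
        val surfaceTexture = SurfaceTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES)
        mSurfaceTexture = surfaceTexture
        camera.setPreviewTexture(surfaceTexture)
    } catch (e: IOException) {
        e.printStackTrace()
    }
    // With setPreviewCallbackWithBuffer(), a frame is delivered only while a buffer queued
    // by addCallbackBuffer() is available (otherwise the frame is dropped). Re-queueing the
    // buffer after each frame therefore gives one callback per processed frame.
    camera.setPreviewCallbackWithBuffer(mPreviewCallback)
    camera.addCallbackBuffer(imgData.data)
    camera.startPreview()
}
```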
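The buffer rule is easier to see in isolation. Below is a plain-Java simulation (not the Android implementation) of how `setPreviewCallbackWithBuffer()` and `addCallbackBuffer()` interact as documented: a frame is delivered only if a queued buffer is available, the buffer leaves the queue while the callback owns it, and frames arriving with an empty queue are discarded. `CallbackBufferSimulator` and `onFrameFromSensor()` are hypothetical names.

```java
import java.util.ArrayDeque;
import java.util.function.Consumer;

/** Hypothetical simulation of the camera's callback-buffer queue; not Android code. */
final class CallbackBufferSimulator {
    private final ArrayDeque<byte[]> queue = new ArrayDeque<>();
    private final Consumer<byte[]> callback;
    private int dropped;

    CallbackBufferSimulator(Consumer<byte[]> callback) {
        this.callback = callback;
    }

    /** Plays the role of Camera.addCallbackBuffer(): hands a buffer back to the "camera". */
    void addCallbackBuffer(byte[] buffer) {
        queue.addLast(buffer);
    }

    /** Called for every new sensor frame; the frame is dropped if no buffer is queued. */
    void onFrameFromSensor(byte[] frame) {
        byte[] buffer = queue.pollFirst();
        if (buffer == null) {
            dropped++;
            return;
        }
        System.arraycopy(frame, 0, buffer, 0, Math.min(frame.length, buffer.length));
        // The buffer now belongs to the callback until it is queued again
        callback.accept(buffer);
    }

    int droppedFrames() {
        return dropped;
    }
}
```

In the Activity, `mPreviewCallback` plays the role of `callback`; if it stopped calling `addCallbackBuffer()`, every later frame would be counted as dropped here, just as the real preview callback would stop firing.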

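```kotlin
/**
 * Frame callback: handle the current frame here, then queue the buffer again so the
 * next frame can be delivered.
 */
private val mPreviewCallback = android.hardware.Camera.PreviewCallback { data, camera ->
    //log("====PreviewCallback()")
    // Copy the frame for the consumer; ImageData skips the copy until the consumer
    // has called setNew(false) for the previous frame
    imgData.setTempData(data)
    // `data` is the buffer added with addCallbackBuffer(); hand it back for the next frame
    camera.addCallbackBuffer(data)
    // if (isShow) {
    //     showPic(imgData.tempData)
    // }
}
```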

```kotlin
/**
 * Close the camera. Safe to call even if the camera was never opened.
 */
private fun closeCamera() {
    val camera = mCamera ?: return
    camera.stopPreview()
    camera.setPreviewCallbackWithBuffer(null)
    camera.release()
    mCamera = null
}
```

```kotlin
/**
 * Display a frame: NV21 -> JPEG -> Bitmap, shown in ivCamera.
 */
private fun showPic(data: ByteArray) {
    try {
        val image = YuvImage(data, ImageFormat.NV21, mWidth, mHeight, null)
        val stream = ByteArrayOutputStream()
        // Encode on the calling thread (JPEG quality 80); only decoding and display
        // run on the UI thread
        image.compressToJpeg(Rect(0, 0, mWidth, mHeight), 80, stream)
        val jpeg = stream.toByteArray()
        runOnUiThread {
            ivCamera.setImageBitmap(null)
            releaseBitmap()
            mBitmap = BitmapFactory.decodeByteArray(jpeg, 0, jpeg.size)
            // Alternative: mBitmap = fastYUVtoRGB!!.convertYUVtoRGB(data, mWidth, mHeight)
            ivCamera.setImageBitmap(mBitmap)
        }
    } catch (e: Exception) {
        e.printStackTrace()
    }
}
```
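`showPic()` encodes every frame to JPEG and decodes it again. The commented-out alternative relies on RenderScript, which is deprecated starting in Android 12. A third option is to convert the NV21 bytes to ARGB pixels directly in Java. The sketch below assumes the common BT.601 limited-range integer approximation (not necessarily the exact matrix `YuvImage` or RenderScript uses) and even frame dimensions; `Nv21Converter` is a hypothetical name. On Android the result could be passed to `Bitmap.createBitmap(pixels, width, height, Bitmap.Config.ARGB_8888)`.

```java
/** Hypothetical helper: converts one NV21 frame to ARGB_8888 pixels (BT.601, limited range). */
final class Nv21Converter {

    private Nv21Converter() {}

    static int[] toArgb(byte[] nv21, int width, int height) {
        if (width % 2 != 0 || height % 2 != 0 || nv21.length < width * height * 3 / 2) {
            throw new IllegalArgumentException("expected an even-sized NV21 frame");
        }
        int frameSize = width * height;
        int[] argb = new int[frameSize];
        for (int row = 0; row < height; row++) {
            // One row of the interleaved VU plane serves two rows of Y
            int uvRowStart = frameSize + (row >> 1) * width;
            for (int col = 0; col < width; col++) {
                int y = nv21[row * width + col] & 0xFF;
                int uvIndex = uvRowStart + (col & ~1);
                int v = (nv21[uvIndex] & 0xFF) - 128;      // NV21 stores V first
                int u = (nv21[uvIndex + 1] & 0xFF) - 128;  // then U
                int c = y - 16;
                int r = clamp((298 * c + 409 * v + 128) >> 8);
                int g = clamp((298 * c - 100 * u - 208 * v + 128) >> 8);
                int b = clamp((298 * c + 516 * u + 128) >> 8);
                argb[row * width + col] = 0xFF000000 | (r << 16) | (g << 8) | b;
            }
        }
        return argb;
    }

    private static int clamp(int value) {
        return value < 0 ? 0 : (value > 255 ? 255 : value);
    }
}
```

A per-pixel Java loop is simple but may be slower than the native paths on large frames; it is mainly useful where neither the JPEG round trip nor RenderScript is acceptable.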

```kotlin
/**
 * Recycle the previously displayed Bitmap.
 */
private fun releaseBitmap() {
    mBitmap?.let {
        if (!it.isRecycled) {
            it.recycle()
        }
    }
    mBitmap = null
}
```

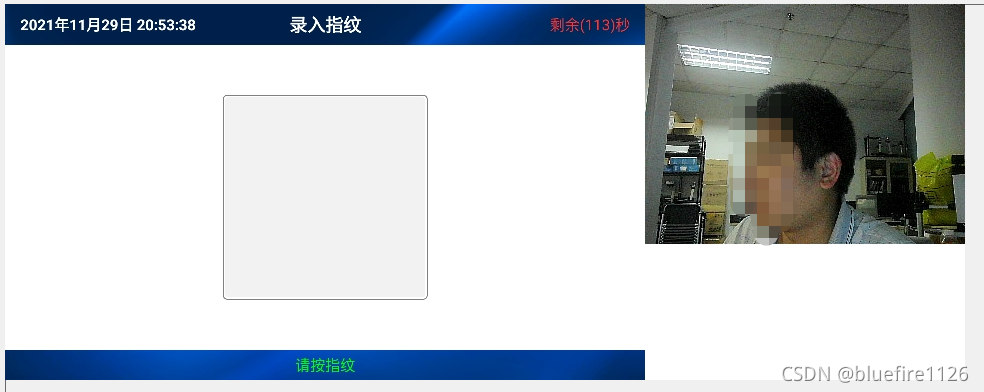

2、屏幕和视频图像的效果图:

Supporting code:

ImageData.java:

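```java
/**
 * author zoufeng
 * date: 2021/10/15
 *
 * Holds the NV21 buffer handed to the camera (data) and a copy of the latest frame
 * for a consumer (temp). isNew == true means temp holds a frame that has not been
 * consumed yet; setTempData() only overwrites temp after the consumer calls setNew(false).
 */
public class ImageData {

    private final int width;
    private final int height;
    private final int size;
    // Buffer passed to Camera.addCallbackBuffer()
    private final byte[] data;
    // Copy of the latest frame for the consumer
    private final byte[] temp;
    // Dedicated lock: synchronizing on a reassigned Boolean field is unsafe, because the
    // lock object changes and Boolean.TRUE/FALSE are shared JVM-wide instances
    private final Object lock = new Object();
    private boolean isNew;

    public ImageData(int width, int height) {
        this.width = width;
        this.height = height;
        // NV21 uses 12 bits per pixel: a width*height Y plane plus an interleaved VU plane
        size = width * height * 3 / 2;
        data = new byte[size];
        temp = new byte[size];
        isNew = true;
    }

    public int getWidth() {
        return width;
    }

    public int getHeight() {
        return height;
    }

    public byte[] getData() {
        return data;
    }

    /** Copies a frame into temp, unless the previous copy has not been consumed yet. */
    public void setTempData(byte[] preData) {
        synchronized (lock) {
            if (!isNew) {
                System.arraycopy(preData, 0, temp, 0, Math.min(preData.length, temp.length));
                isNew = true;
            }
        }
    }

    public byte[] getTempData() {
        return temp;
    }

    public boolean isNew() {
        synchronized (lock) {
            return isNew;
        }
    }

    public void setNew(boolean isNew) {
        synchronized (lock) {
            this.isNew = isNew;
        }
    }
}
```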
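`ImageData` allocates `width * height * 3 / 2` bytes because NV21 stores a full-resolution Y plane followed by one interleaved V/U pair for each 2x2 block of pixels. This matches the size `addCallbackBuffer()` documents for preview buffers, `width * height * ImageFormat.getBitsPerPixel(format) / 8`, with 12 bits per pixel for NV21. The sketch below spells out the size and offset arithmetic, assuming even width and height; `Nv21Layout` and its methods are hypothetical helpers.

```java
/** Hypothetical helper: byte offsets inside an NV21 frame (even width and height assumed). */
final class Nv21Layout {

    private Nv21Layout() {}

    /** Y plane (width * height) plus the interleaved VU plane (width * height / 2). */
    static int frameSize(int width, int height) {
        return width * height * 3 / 2;
    }

    /** Offset of the luma (Y) sample for pixel (x, y). */
    static int yIndex(int width, int x, int y) {
        return y * width + x;
    }

    /** Offset of the V sample shared by the 2x2 block that contains pixel (x, y). */
    static int vIndex(int width, int height, int x, int y) {
        return width * height + (y / 2) * width + (x / 2) * 2;
    }

    /** Offset of the U sample, stored right after V in NV21. */
    static int uIndex(int width, int height, int x, int y) {
        return vIndex(width, height, x, y) + 1;
    }
}
```

For a 640 x 480 preview this gives 460800 bytes per frame, and the U sample of the bottom-right pixel is the last byte of the buffer.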

FastYUVtoRGB.java (converts YUV data directly into a Bitmap; it is fast, with each conversion taking under 4 ms):

```java
/**
 * author zoufeng
 * date: 2021/11/17
 */

import android.content.Context;
import android.graphics.Bitmap;
import android.graphics.Canvas;
import android.graphics.Matrix;
import android.renderscript.Allocation;
import android.renderscript.Element;
import android.renderscript.RenderScript;
import android.renderscript.ScriptIntrinsicYuvToRGB;
import android.renderscript.Type;

/**
 * Converts a YUV (NV21) video stream into Bitmaps using RenderScript.
 * Note: this class assumes the camera preview size does not change, because the
 * input and output Allocations are created once, on the first call.
 */
public class FastYUVtoRGB {

    private final RenderScript rs;
    private final ScriptIntrinsicYuvToRGB yuvToRgbIntrinsic;
    private Type.Builder yuvType, rgbaType;
    private Allocation in, out;

    public FastYUVtoRGB(Context context) {
        rs = RenderScript.create(context);
        yuvToRgbIntrinsic = ScriptIntrinsicYuvToRGB.create(rs, Element.U8_4(rs));
    }

    public Bitmap convertYUVtoRGB(byte[] yuvData, int width, int height) {
        if (yuvType == null) {
            // Created once: every later frame must have the same size
            yuvType = new Type.Builder(rs, Element.U8(rs)).setX(yuvData.length);
            in = Allocation.createTyped(rs, yuvType.create(), Allocation.USAGE_SCRIPT);
            rgbaType = new Type.Builder(rs, Element.RGBA_8888(rs)).setX(width).setY(height);
            out = Allocation.createTyped(rs, rgbaType.create(), Allocation.USAGE_SCRIPT);
        }
        in.copyFrom(yuvData);
        yuvToRgbIntrinsic.setInput(in);
        yuvToRgbIntrinsic.forEach(out);
        Bitmap bmpout = Bitmap.createBitmap(width, height, Bitmap.Config.ARGB_8888);
        out.copyTo(bmpout);
        return bmpout;
    }

    /**
     * Returns a transformation matrix from one reference frame into another.
     * Handles cropping (if maintaining aspect ratio is desired), rotation and flipping.
     *
     * @param srcWidth            Width of source frame.
     * @param srcHeight           Height of source frame.
     * @param dstWidth            Width of destination frame.
     * @param dstHeight           Height of destination frame.
     * @param applyRotation       Amount of rotation to apply from one frame to another.
     *                            Must be a multiple of 90.
     * @param flipHorizontal      Whether to flip horizontally (mirror left/right).
     * @param flipVertical        Whether to flip vertically (mirror top/bottom).
     * @param maintainAspectRatio If true, will ensure that scaling in x and y remains constant,
     *                            cropping the image if necessary.
     * @return The transformation fulfilling the desired requirements.
     */
    public static Matrix getTransformationMatrix(
            final int srcWidth,
            final int srcHeight,
            final int dstWidth,
            final int dstHeight,
            final int applyRotation,
            final boolean flipHorizontal,
            final boolean flipVertical,
            final boolean maintainAspectRatio) {
        final Matrix matrix = new Matrix();

        if (applyRotation != 0) {
            if (applyRotation % 90 != 0) {
                throw new IllegalArgumentException(
                        String.format("Rotation of %d %% 90 != 0", applyRotation));
            }
            // Translate so center of image is at origin.
            matrix.postTranslate(-srcWidth / 2.0f, -srcHeight / 2.0f);
            // Rotate around origin.
            matrix.postRotate(applyRotation);
        }

        // Account for the already applied rotation, if any, and then determine how
        // much scaling is needed for each axis.
        final boolean transpose = (Math.abs(applyRotation) + 90) % 180 == 0;
        final int inWidth = transpose ? srcHeight : srcWidth;
        final int inHeight = transpose ? srcWidth : srcHeight;

        // Apply scaling if necessary.
        if (inWidth != dstWidth || inHeight != dstHeight) {
            final float scaleFactorX = dstWidth / (float) inWidth;
            final float scaleFactorY = dstHeight / (float) inHeight;
            if (maintainAspectRatio) {
                // Scale by the larger factor so that dst is filled completely while
                // maintaining the aspect ratio. Some of the image may fall off the edge.
                final float scaleFactor = Math.max(scaleFactorX, scaleFactorY);
                matrix.postScale(scaleFactor, scaleFactor);
            } else {
                // Scale exactly to fill dst from src.
                matrix.postScale(scaleFactorX, scaleFactorY);
            }
        }

        if (applyRotation != 0) {
            // Translate back from origin centered reference to destination frame.
            matrix.postTranslate(dstWidth / 2.0f, dstHeight / 2.0f);
        }

        // Flip last, around the center of the destination frame, for any rotation.
        // With a wrong pivot the flipped image lands outside the canvas and nothing
        // appears to be drawn.
        if (flipHorizontal || flipVertical) {
            matrix.postScale(flipHorizontal ? -1f : 1f, flipVertical ? -1f : 1f,
                    dstWidth / 2.0f, dstHeight / 2.0f);
        }
        return matrix;
    }

    /*
     * Example (frame is a Bitmap returned by convertYUVtoRGB()):
     *
     * // The blank target bitmap must already have the size expected after the transform,
     * // e.g. height x width of the frame when rotation is 90 or 270.
     * Bitmap target = Bitmap.createBitmap(targetWidth, targetHeight, Bitmap.Config.ARGB_8888);
     * final Canvas canvas = new Canvas(target);
     * Matrix transformation = getTransformationMatrix(frame.getWidth(), frame.getHeight(),
     *         targetWidth, targetHeight, rotation, true, false, true);
     * canvas.drawBitmap(frame, transformation, null);
     */
}
```
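To check what `getTransformationMatrix()` does to a frame, the same steps can be replayed on a single point in plain Java: move the source center to the origin, rotate, scale, move to the destination center, then flip around the destination center. This is a sketch that mirrors the post-concatenation order of `android.graphics.Matrix` (whose `postRotate()` maps `(x, y)` to `(x*cos - y*sin, x*sin + y*cos)`, i.e. clockwise on screen because y points down), restricted to multiples of 90 degrees; `PointMapper` is a hypothetical name.

```java
/** Hypothetical helper: applies the getTransformationMatrix() steps to one point. */
final class PointMapper {

    private PointMapper() {}

    static double[] map(double px, double py, int srcWidth, int srcHeight,
                        int dstWidth, int dstHeight, int rotation,
                        boolean flipH, boolean flipV, boolean maintainAspectRatio) {
        double x = px;
        double y = py;
        if (rotation != 0) {
            // Move the source center to the origin
            x -= srcWidth / 2.0;
            y -= srcHeight / 2.0;
            // Rounding makes sin/cos exact for multiples of 90 degrees
            double rad = Math.toRadians(rotation);
            double cos = Math.round(Math.cos(rad));
            double sin = Math.round(Math.sin(rad));
            double rx = x * cos - y * sin;
            double ry = x * sin + y * cos;
            x = rx;
            y = ry;
        }
        boolean transpose = (Math.abs(rotation) + 90) % 180 == 0;
        int inWidth = transpose ? srcHeight : srcWidth;
        int inHeight = transpose ? srcWidth : srcHeight;
        if (inWidth != dstWidth || inHeight != dstHeight) {
            double sx = dstWidth / (double) inWidth;
            double sy = dstHeight / (double) inHeight;
            if (maintainAspectRatio) {
                sx = Math.max(sx, sy);
                sy = sx;
            }
            x *= sx;
            y *= sy;
        }
        if (rotation != 0) {
            // Move to the destination center
            x += dstWidth / 2.0;
            y += dstHeight / 2.0;
        }
        // Flip around the destination center: x -> dstWidth - x, y -> dstHeight - y
        if (flipH) {
            x = dstWidth - x;
        }
        if (flipV) {
            y = dstHeight - y;
        }
        return new double[] {x, y};
    }
}
```

For a 640 x 480 frame rotated by 90 degrees into a 480 x 640 target, the source's top-left corner lands at the target's top-right corner (480, 0); adding `flipH` mirrors it back to (0, 0), which is the mirrored-selfie effect the example above asks for.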