视觉SLAM十四讲代码学习记录

问题:

已知一组3D点的位置,以及它们在某个相机中的投影位置,求该相机的位姿。(即书中的PnP问题)

求解方法:

对于连续运动的相机,可以通过最小化重投影误差(Bundle Adjustment)构建非线性最小二乘问题,采用最优化算法直接求解相机位姿。

优化模型:

T

∗

=

arg min

T

1

2

∑

i

=

1

n

∥

u

i

−

1

s

i

K

T

P

i

∥

2

2

T^* = \argmin_{T} \frac{1}{2}\sum_{i=1}^n \left\| u_i – \frac{1}{s_i} KTP_i \right\| _2^2

T

∗

=

T

a

r

g

m

i

n

2

1

i

=

1

∑

n

∥

∥

∥

∥

u

i

−

s

i

1

K

T

P

i

∥

∥

∥

∥

2

2

代码解读:

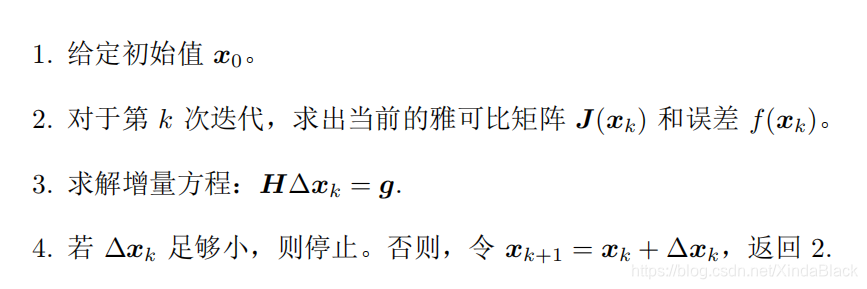

书中给出了高斯牛顿法和g2o实现非线性最小二乘问题的求解,阅读代码主要按照以下思路。

高斯牛顿法求解

//传入的参数分别是匹配好的一组3D点世界坐标‘points_3d’和它们在相机中的投影位置‘points_2d’,内参矩阵K,以及位姿的初始值‘pose’。(步骤1)

void bundleAdjustmentGaussNewton(

const VecVector3d &points_3d,

const VecVector2d &points_2d,

const Mat &K,

Sophus::SE3d &pose) {

typedef Eigen::Matrix<double, 6, 1> Vector6d;

const int iterations = 10;

double cost = 0, lastCost = 0;

double fx = K.at<double>(0, 0);

double fy = K.at<double>(1, 1);

double cx = K.at<double>(0, 2);

double cy = K.at<double>(1, 2);

//进行迭代(步骤2)

for (int iter = 0; iter < iterations; iter++) {

Eigen::Matrix<double, 6, 6> H = Eigen::Matrix<double, 6, 6>::Zero();

Vector6d b = Vector6d::Zero();

cost = 0;

// compute cost

for (int i = 0; i < points_3d.size(); i++) {

//将3D点重投影到像素平面

Eigen::Vector3d pc = pose * points_3d[i];

double inv_z = 1.0 / pc[2];

double inv_z2 = inv_z * inv_z;

Eigen::Vector2d proj(fx * pc[0] / pc[2] + cx, fy * pc[1] / pc[2] + cy);

//计算重投影误差

Eigen::Vector2d e = points_2d[i] - proj;

//计算损失函数

cost += e.squaredNorm();

Eigen::Matrix<double, 2, 6> J;

// 计算Jacabian

J << -fx * inv_z,

0,

fx * pc[0] * inv_z2,

fx * pc[0] * pc[1] * inv_z2,

-fx - fx * pc[0] * pc[0] * inv_z2,

fx * pc[1] * inv_z,

0,

-fy * inv_z,

fy * pc[1] * inv_z,

fy + fy * pc[1] * pc[1] * inv_z2,

-fy * pc[0] * pc[1] * inv_z2,

-fy * pc[0] * inv_z;

//计算增量方程的系数H和g(即代码中的b)

H += J.transpose() * J;

b += -J.transpose() * e;

}

//求解增量方程(步骤3),得到的dx为一个向量,即位姿变化量的李代数

Vector6d dx;

dx = H.ldlt().solve(b);

if (isnan(dx[0])) {

cout << "result is nan!" << endl;

break;

}

if (iter > 0 && cost >= lastCost) {

// cost increase, update is not good

cout << "cost: " << cost << ", last cost: " << lastCost << endl;

break;

}

// update your estimation

//更新位姿(步骤4),Sophus::SE3d::exp(dx)主要将位姿增量的李代数转换到李群。(注意:位姿的更新是左乘位姿变化量)

pose = Sophus::SE3d::exp(dx) * pose;

lastCost = cost;

cout << "iteration " << iter << " cost=" << cout.precision(12) << cost << endl;

if (dx.norm() < 1e-6) {

// converge

break;

}

}

cout << "pose by g-n: \n" << pose.matrix() << endl;

}

g2o求解

// vertex and edges used in g2o ba

// template <int D, typename T>

// D -> 顶点的最小维度,此处是相机的位姿,故维度为6;

// T -> 估计量的类型,此处是SE(3)

class VertexPose : public g2o::BaseVertex<6, Sophus::SE3d> {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

// 顶点重置

virtual void setToOriginImpl() override {

_estimate = Sophus::SE3d();

}

/// left multiplication on SE3,顶点(位姿)的更新

virtual void oplusImpl(const double *update) override {

Eigen::Matrix<double, 6, 1> update_eigen;

update_eigen << update[0], update[1], update[2], update[3], update[4], update[5];

_estimate = Sophus::SE3d::exp(update_eigen) * _estimate;

}

virtual bool read(istream &in) override {}

virtual bool write(ostream &out) const override {}

};

// 顶点只有相机位姿,边是观测误差,一元边

class EdgeProjection : public g2o::BaseUnaryEdge<2, Eigen::Vector2d, VertexPose> {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

EdgeProjection(const Eigen::Vector3d &pos, const Eigen::Matrix3d &K) : _pos3d(pos), _K(K) {}

virtual void computeError() override {

const VertexPose *v = static_cast<VertexPose *> (_vertices[0]);

Sophus::SE3d T = v->estimate();

Eigen::Vector3d pos_pixel = _K * (T * _pos3d);

pos_pixel /= pos_pixel[2];

_error = _measurement - pos_pixel.head<2>();

}

//计算Jacobian matrix,此处是重投影误差关于相机位姿李代数的导数,书中式(7.46)。

virtual void linearizeOplus() override {

const VertexPose *v = static_cast<VertexPose *> (_vertices[0]);

Sophus::SE3d T = v->estimate();

Eigen::Vector3d pos_cam = T * _pos3d;

double fx = _K(0, 0);

double fy = _K(1, 1);

double cx = _K(0, 2);

double cy = _K(1, 2);

double X = pos_cam[0];

double Y = pos_cam[1];

double Z = pos_cam[2];

double Z2 = Z * Z;

_jacobianOplusXi

<< -fx / Z, 0, fx * X / Z2, fx * X * Y / Z2, -fx - fx * X * X / Z2, fx * Y / Z,

0, -fy / Z, fy * Y / (Z * Z), fy + fy * Y * Y / Z2, -fy * X * Y / Z2, -fy * X / Z;

}

virtual bool read(istream &in) override {}

virtual bool write(ostream &out) const override {}

private:

Eigen::Vector3d _pos3d;

Eigen::Matrix3d _K;

};

void bundleAdjustmentG2O(

const VecVector3d &points_3d,

const VecVector2d &points_2d,

const Mat &K,

Sophus::SE3d &pose) {

// 构建图优化,先设定g2o

typedef g2o::BlockSolver<g2o::BlockSolverTraits<6, 3>> BlockSolverType; // pose is 6, landmark is 3

typedef g2o::LinearSolverDense<BlockSolverType::PoseMatrixType> LinearSolverType; // 线性求解器类型

// 梯度下降方法,可以从GN, LM, DogLeg 中选

auto solver = new g2o::OptimizationAlgorithmGaussNewton(

g2o::make_unique<BlockSolverType>(g2o::make_unique<LinearSolverType>()));

g2o::SparseOptimizer optimizer; // 图模型

optimizer.setAlgorithm(solver); // 设置求解器

optimizer.setVerbose(true); // 打开调试输出

// vertex

VertexPose *vertex_pose = new VertexPose(); // camera vertex_pose

vertex_pose->setId(0);

vertex_pose->setEstimate(Sophus::SE3d());

optimizer.addVertex(vertex_pose);

// K

Eigen::Matrix3d K_eigen;

K_eigen <<

K.at<double>(0, 0), K.at<double>(0, 1), K.at<double>(0, 2),

K.at<double>(1, 0), K.at<double>(1, 1), K.at<double>(1, 2),

K.at<double>(2, 0), K.at<double>(2, 1), K.at<double>(2, 2);

// edges

int index = 1;

for (size_t i = 0; i < points_2d.size(); ++i) {

auto p2d = points_2d[i];

auto p3d = points_3d[i];

EdgeProjection *edge = new EdgeProjection(p3d, K_eigen);

edge->setId(index);

edge->setVertex(0, vertex_pose);

edge->setMeasurement(p2d);

edge->setInformation(Eigen::Matrix2d::Identity());

optimizer.addEdge(edge);

index++;

}

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

optimizer.setVerbose(true);

optimizer.initializeOptimization();

optimizer.optimize(10);

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "optimization costs time: " << time_used.count() << " seconds." << endl;

cout << "pose estimated by g2o =\n" << vertex_pose->estimate().matrix() << endl;

pose = vertex_pose->estimate();

}

课后习题6的代码

第一个相机的观测也考虑,即第一个相机的位姿不固定,空间点还是固定的(本人是这么认为的)。现在图模型有两个顶点,即第一个相机的位姿和第二个相机的位姿;每个顶点都有许多一元边,即每个世界坐标中的3D在相机坐标中的投影,以观测方程描述。因此,原先代码中顶点和边的类都不需要改动。

// 估计两个相机的位姿,空间点固定,因此有两个顶点(相机位姿),每个顶点连接着许多一元边(观测模型)。

void bundleAdjustmentG2O(

const VecVector3d &points_3d, // 空间点坐标(这里借用空间点在第一个相机坐标系下的坐标,所以预期估计位姿T1为单位矩阵)

const VecVector2d &points_2d_1, // 第一个相机的观测值(像素坐标)

const VecVector2d &points_2d_2, // 第二个相机的观测值

const Mat &K,

Sophus::SE3d &pose) {

// 构建图优化,先设定g2o

typedef g2o::BlockSolver<g2o::BlockSolverTraits<6, 3>> BlockSolverType; // pose is 6, landmark is 3

typedef g2o::LinearSolverDense<BlockSolverType::PoseMatrixType> LinearSolverType; // 线性求解器类型

// 梯度下降方法,可以从GN, LM, DogLeg 中选

// auto linearSolver = g2o::make_unique<LinearSolverType>();

// auto block_slover( g2o::make_unique<BlockSolverType>(move(linearSolver)) );

// auto solver = new g2o::OptimizationAlgorithmGaussNewton( move(block_slover));

auto solver = new g2o::OptimizationAlgorithmGaussNewton(

g2o::make_unique<BlockSolverType>(g2o::make_unique<LinearSolverType>()));

g2o::SparseOptimizer optimizer; // 图模型

optimizer.setAlgorithm(solver); // 设置求解器

optimizer.setVerbose(true); // 打开调试输出

// 设置第一个相机的顶点

VertexPose *vertex_pose1 = new VertexPose(); // camera vertex_pose1

vertex_pose1->setId(0); //从0开始

// vertex_pose1->setFixed(1); //固定顶点参数,不进行优化调整

vertex_pose1->setEstimate(Sophus::SE3d()); //设置相机位姿初始值为I

optimizer.addVertex(vertex_pose1);

// 设置第二个相机的顶点

VertexPose *vertex_pose2 = new VertexPose(); // camera vertex_pose2

vertex_pose2->setId(1);

vertex_pose2->setEstimate(Sophus::SE3d());

optimizer.addVertex(vertex_pose2);

// K

Eigen::Matrix3d K_eigen;

K_eigen <<

K.at<double>(0, 0), K.at<double>(0, 1), K.at<double>(0, 2),

K.at<double>(1, 0), K.at<double>(1, 1), K.at<double>(1, 2),

K.at<double>(2, 0), K.at<double>(2, 1), K.at<double>(2, 2);

// 第一个相机的观测模型

int index = 1;

for (size_t i = 0; i < points_2d_1.size(); ++i) {

auto p2d = points_2d_1[i];

auto p3d = points_3d[i];

EdgeProjection *edge = new EdgeProjection(p3d, K_eigen);

edge->setId(index); //从1开始?

// 设置边连接的顶点,序号从0开始,一元边连接一个顶点,故只设置序号为0的顶点

edge->setVertex(0, vertex_pose1);

// 设置观测值

edge->setMeasurement(p2d);

// 信息矩阵:协方差矩阵之逆(注:观测误差e_k'*omega_k*e_k,这里omega取I)

edge->setInformation(Eigen::Matrix2d::Identity());

optimizer.addEdge(edge);

index++;

}

// 第二个相机的观测模型

for (size_t i = 0; i < points_2d_2.size(); ++i) {

auto p2d = points_2d_2[i];

auto p3d = points_3d[i];

EdgeProjection *edge = new EdgeProjection(p3d, K_eigen);

edge->setId(index);

edge->setVertex(0, vertex_pose2);

edge->setMeasurement(p2d);

edge->setInformation(Eigen::Matrix2d::Identity());

optimizer.addEdge(edge);

index++;

}

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

optimizer.setVerbose(true);

optimizer.initializeOptimization();

optimizer.optimize(10);

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "optimization costs time: " << time_used.count() << " seconds." << endl;

cout << "T1 by g2o =\n" << vertex_pose1->estimate().matrix() << endl;

cout << "T2 by g2o =\n" << vertex_pose2->estimate().matrix() << endl;

pose = vertex_pose2->estimate();

}

从运行的结果来看,第一个相机的位姿近似为单位矩阵,第二个相机的位姿与之前估计的一样,所以计是符合预期的。

calling bundle adjustment by g2o

iteration= 0 chi2= 413.221882 time= 3.2732e-05 cumTime= 3.2732e-05 edges= 152 schur= 0

iteration= 1 chi2= 301.367057 time= 2.1651e-05 cumTime= 5.4383e-05 edges= 152 schur= 0

iteration= 2 chi2= 301.365779 time= 2.0719e-05 cumTime= 7.5102e-05 edges= 152 schur= 0

iteration= 3 chi2= 301.365779 time= 2.0448e-05 cumTime= 9.555e-05 edges= 152 schur= 0

iteration= 4 chi2= 301.365779 time= 2.0007e-05 cumTime= 0.000115557 edges= 152 schur= 0

iteration= 5 chi2= 301.365779 time= 1.9868e-05 cumTime= 0.000135425 edges= 152 schur= 0

iteration= 6 chi2= 301.365779 time= 1.9566e-05 cumTime= 0.000154991 edges= 152 schur= 0

iteration= 7 chi2= 301.365779 time= 1.9637e-05 cumTime= 0.000174628 edges= 152 schur= 0

iteration= 8 chi2= 301.365779 time= 1.9577e-05 cumTime= 0.000194205 edges= 152 schur= 0

iteration= 9 chi2= 301.365779 time= 1.9597e-05 cumTime= 0.000213802 edges= 152 schur= 0

optimization costs time: 0.000445064 seconds.

T1 by g2o =

1 -4.8829624272e-10 5.12989017975e-10 -1.17821245254e-09

4.88296241894e-10 1 1.60949755627e-09 -2.926308116e-09

-5.12989018761e-10 -1.60949755602e-09 1 -1.4388309307e-09

0 0 0 1

T2 by g2o =

0.997866202583 -0.0516724161336 0.0399124436024 -0.127225965696

0.050595891596 0.998339762772 0.0275276919261 -0.00750729765631

-0.04126860183 -0.0254495477384 0.998823919929 0.0613858417711

0 0 0 1

课后习题7的代码

将空间点也作为优化变量考虑进来,需要增加路标顶点

VertexLandmark

和路标与位姿顶点之间的二元边

EdgeProjectXYZRGBD

。

class VertexLandmark : public g2o::BaseVertex<3, Eigen::Vector3d> {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

//顶点的重置函数

virtual void setToOriginImpl() override {

_estimate = Eigen::Vector3d(0,0,0);

}

/// left multiplication on SE3 , 顶点的更新函数

virtual void oplusImpl(const double *update) override {

_estimate += Eigen::Vector3d(update[0],update[1],update[2]);

}

virtual bool read(istream &in) override {}

virtual bool write(ostream &out) const override {}

};

class EdgeProjectXYZRGBD : public g2o::BaseBinaryEdge<3, Eigen::Vector3d, VertexPose, VertexLandmark> {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

//边的误差计算函数

virtual void computeError() override {

const VertexPose *pose = static_cast<const VertexPose *> ( _vertices[0] );

const VertexLandmark *point= static_cast<const VertexLandmark *> ( _vertices[1] );

// 注意估计的是第二个相机的位姿 pose 以及空间点在第二个相机坐标系下的坐标 point,

// _measurement是空间点的世界坐标(即第一个相机坐标系下的坐标)

_error = _measurement - pose->estimate() * point->estimate();

}

/**

* 求Jacobian

* _jacobianOplusXi -> 误差对相机位姿的导数

* _jacobianOplusXj -> 误差对空间特征点的导数

*/

virtual void linearizeOplus() override {

VertexPose *pose = static_cast<VertexPose *>(_vertices[0]);

const VertexLandmark *point= static_cast<const VertexLandmark *> ( _vertices[1] );

Sophus::SE3d T = pose->estimate();

Eigen::Vector3d xyz_trans = T * point->estimate();

//误差对相机位姿李代数的导数 -1*[I,-P'^],其中-1为误差对第二个相机坐标系下特征点坐标的求导结果(de/dP')

_jacobianOplusXi.block<3, 3>(0, 0) = -Eigen::Matrix3d::Identity(); //(0,0)为左上角的3*3的

_jacobianOplusXi.block<3, 3>(0, 3) = Sophus::SO3d::hat(xyz_trans);

//误差对世界坐标系下空间点的导数, -1*R

_jacobianOplusXj = -T.rotationMatrix();

}

bool read(istream &in) {}

bool write(ostream &out) const {}

};

// pts1 -> 第一个相机坐标系下的3D点坐标。优化中认为第一个相机坐标系为世界坐标系,则pts1作为观测值

// pts2 -> 第二个相机坐标系下的3D点坐标

// pts2Esti -> 待调整的第二个相机坐标系下的3D点坐标

void bundleAdjustment_7(

const vector<Point3f> &pts1, // [in]

const vector<Point3f> &pts2, // [in]

vector<Point3f> &pts2Esti, //[out]

Mat &R, Mat &t) { //[out]

// 构建图优化,先设定g2o

typedef g2o::BlockSolverX BlockSolverType;

typedef g2o::LinearSolverDense<BlockSolverType::PoseMatrixType> LinearSolverType; // 线性求解器类型

// 梯度下降方法,可以从GN, LM, DogLeg 中选

auto solver = new g2o::OptimizationAlgorithmLevenberg(

g2o::make_unique<BlockSolverType>(g2o::make_unique<LinearSolverType>()));

g2o::SparseOptimizer optimizer; // 图模型

optimizer.setAlgorithm(solver); // 设置求解器

optimizer.setVerbose(true); // 打开调试输出

// 第二个相机的位姿

VertexPose *pose = new VertexPose(); // camera pose

pose->setId(0);

// Sophus::SE3d() -> Default constructor initializes rigid body motion to the identity. (默认初始化为单位矩阵)

// pose->setEstimate(Sophus::SE3d());

// 位姿的传入值作为估计的初始值

// Eigen::Matrix3d R_eigen;

// Eigen::Vector3d t_eigen;

// cv::cv2eigen(R,R_eigen);

// cv::cv2eigen(t,t_eigen);

// pose->setEstimate(Sophus::SE3d(R_eigen,t_eigen));

pose->setEstimate(Sophus::SE3d());

optimizer.addVertex(pose);

// 第二个相机坐标系下的3D点坐标

vector<VertexLandmark *> landmarks;

for(size_t i = 0; i < pts2.size(); i++ ){

VertexLandmark *point = new VertexLandmark(); // camera pose

point->setId(i+1);

// 设置空间点初始坐标为(1,2,3),不能用pts1作为初始值,因为位姿初始值取了I,会造成第一次迭代就终止,优化失败

point->setEstimate( Eigen::Vector3d(1, 2, 3 ) );

optimizer.addVertex(point);

landmarks.push_back(point);

}

// edges

vector<EdgeProjectXYZRGBD *> edges;

for (size_t i = 0; i < pts1.size(); i++) {

EdgeProjectXYZRGBD *edge = new EdgeProjectXYZRGBD();

edge->setVertex(0, pose);

edge->setVertex(1, landmarks[i]);

//此处设定第一个相机为初始帧,相机坐标系即世界坐标系,可以作为观测值

edge->setMeasurement(Eigen::Vector3d(

pts1[i].x, pts1[i].y, pts1[i].z));

edge->setInformation(Eigen::Matrix3d::Identity()); //信息矩阵设置为单位矩阵

optimizer.addEdge(edge);

edges.push_back(edge);

}

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

optimizer.initializeOptimization();

optimizer.optimize(10);

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "optimization costs time: " << time_used.count() << " seconds." << endl;

cout << endl << "after optimization:" << endl;

cout << "T=\n" << pose->estimate().matrix() << endl;

// convert to cv::Mat

Eigen::Matrix3d R_ = pose->estimate().rotationMatrix();

Eigen::Vector3d t_ = pose->estimate().translation();

R = (Mat_<double>(3, 3) <<

R_(0, 0), R_(0, 1), R_(0, 2),

R_(1, 0), R_(1, 1), R_(1, 2),

R_(2, 0), R_(2, 1), R_(2, 2)

);

t = (Mat_<double>(3, 1) << t_(0, 0), t_(1, 0), t_(2, 0));

for(VertexLandmark * lm : landmarks){

Eigen::Vector3d tmp = lm -> estimate();

pts2Esti.push_back(cv::Point3f(tmp(0),tmp(1),tmp(2)));

}

double chi2 = 0;

cout << "The chi2 of every edge: " << endl;

for(EdgeProjectXYZRGBD* edge: edges){

cout << edge->chi2() << endl;

chi2 += edge->chi2();

}

cout << "The sum of chi2 of all edges : " << chi2 << endl;

//个别边的chi2相比较于其它边很大,是outlier,说明此特征点对匹配有误

}

从运行结果来看,将空间点也作为优化变量考虑,最终的chi2(cost function)可以接近为0,同时运行时间也变长很多。(个人在尝试取不同估计初始值的时候,发现估计的位姿T和空间点在第二个相机的坐标p2Esti结果有很大的不同,但是最终空间点的世界坐标R*p2Esti+t很准确(等于p1),感觉是对观测值过拟合了。分析具体原因:加入空间点作为优化变量后,对于此处局部的两个相机位姿估计,可以列举的观测方程数74少于变量的个数74+1,因此存在多种解的可能。)

iteration= 0 chi2= 11.241404 time= 0.00061704 cumTime= 0.00061704 edges= 74 schur= 0 lambda= 0.003207 levenbergIter= 1

iteration= 1 chi2= 0.137620 time= 0.000470106 cumTime= 0.00108715 edges= 74 schur= 0 lambda= 0.001069 levenbergIter= 1

iteration= 2 chi2= 0.000004 time= 0.000539606 cumTime= 0.00162675 edges= 74 schur= 0 lambda= 0.000356 levenbergIter= 1

iteration= 3 chi2= 0.000000 time= 0.000468944 cumTime= 0.0020957 edges= 74 schur= 0 lambda= 0.000238 levenbergIter= 1

iteration= 4 chi2= 0.000000 time= 0.000493249 cumTime= 0.00258894 edges= 74 schur= 0 lambda= 0.000158 levenbergIter= 1

iteration= 5 chi2= 0.000000 time= 0.000491616 cumTime= 0.00308056 edges= 74 schur= 0 lambda= 0.000106 levenbergIter= 1

iteration= 6 chi2= 0.000000 time= 0.000479153 cumTime= 0.00355971 edges= 74 schur= 0 lambda= 0.000070 levenbergIter= 1

iteration= 7 chi2= 0.000000 time= 0.0004667 cumTime= 0.00402641 edges= 74 schur= 0 lambda= 0.000047 levenbergIter= 1

iteration= 8 chi2= 0.000000 time= 0.00319919 cumTime= 0.00722561 edges= 74 schur= 0 lambda= 65.598463 levenbergIter= 7

iteration= 9 chi2= 0.000000 time= 0.000465908 cumTime= 0.00769152 edges= 74 schur= 0 lambda= 43.732309 levenbergIter= 1

optimization costs time: 0.00795365 seconds.

after optimization:

T=

0.999539 -0.017654 -0.0247169 -0.898792

0.0162139 0.998225 -0.0572988 -1.92858

0.0256846 0.0568716 0.998051 -1.44376

0 0 0 1

Compare p2 with p2Esti

p2 = [-0.0111479, -0.746763, 2.7652]

p2Esti = [0.986363, 1.31882, 4.09621]

verify p1 = R * p2Esti + t

p1 = [-0.0374123, -0.830816, 2.7448]

(R*p2Esti+t) = [-0.03741231993637884;

-0.8308156663209174;

2.744800061331437]

Compare p2 with p2Esti

p2 = [-0.299118, -0.0975683, 1.6558]

p2Esti = [0.76194, 1.96832, 2.90271]

verify p1 = R * p2Esti + t

p1 = [-0.243698, -0.117719, 1.5848]

(R*p2Esti+t) = [-0.2436983790622381;

-0.1177192871870092;

1.584800038768302]

Compare p2 with p2Esti

p2 = [-0.709645, 0.159033, 1.4212]

p2Esti = [0.376271, 2.23857, 2.65156]

verify p1 = R * p2Esti + t

p1 = [-0.627753, 0.160186, 1.3396]

(R*p2Esti+t) = [-0.6277526721105515;

0.1601862850961036;

1.339599942812919]

Compare p2 with p2Esti

p2 = [-0.399079, 0.12047, 1.4838]

p2Esti = [0.681778, 2.18293, 2.73404]

verify p1 = R * p2Esti + t

p1 = [-0.323443, 0.104873, 1.4266]

(R*p2Esti+t) = [-0.3234430027320211;

0.1048728320349044;

1.426599983250943]

Compare p2 with p2Esti

p2 = [-0.709709, 0.100216, 1.3998]

p2Esti = [0.375131, 2.17834, 2.62696]

verify p1 = R * p2Esti + t

p1 = [-0.627221, 0.101454, 1.3116]

(R*p2Esti+t) = [-0.6272212118287084;

0.1014538330490162;

1.311599858805601]