<1> Overview

MindSpore can save two kinds of data: training parameters and network models (a model also contains the parameter information).

- Training parameters are saved as Checkpoint files.
- Network models are saved in one of three file formats: MindIR, AIR, and ONNX.

The basic concepts and typical use cases of these formats are as follows.

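- Checkpoint
  - Uses the Protocol Buffers format and stores all the parameter values of the network.
  - Typically used to resume training after an interruption, or for fine-tuning after training.
- MindIR
  - Short for MindSpore IR, a graph-based functional IR of MindSpore that defines an extensible graph structure and the IR representation of operators.
  - It removes model differences between backends and is typically used for inference across hardware platforms.
- ONNX
  - Short for Open Neural Network Exchange, a general-purpose representation of machine learning models.
  - Typically used to migrate models between frameworks or to run them on an inference engine such as TensorRT.
- AIR
  - Short for Ascend Intermediate Representation, an open file format defined by Huawei for machine learning.
  - It is better suited to Huawei AI processors and is typically used for inference on Ascend 310.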

<2> Inference

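Overall steps:

- Prepare the development environment: Python 3.7.5, Ubuntu 18.04.5 x86_64, and the supporting development packages (CANN, mindx_sdk, and so on).
- Export the AIR model file.
- Use the ATC tool to convert the AIR model file into an OM model.
- Compile the inference code to produce the executable main file, then load the saved OM model, run inference, and check the results.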

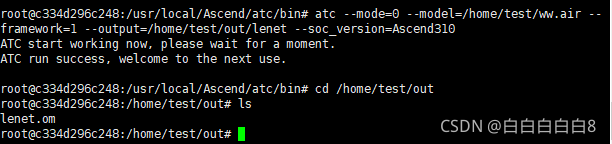

(1) Offline model conversion

Overall flow: Checkpoint -> lenet.air -> om

Use the ATC tool to convert the air file into an om file:

model_path=$1

output_model_name=$2

/usr/local/Ascend/atc/bin/atc \

--model=$model_path \

--mode=0 \

--framework=1 \

--output=$output_model_name \

--input_format=NCHW \

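--soc_version=Ascend310

Parameter description:

--mode=0: 0 means convert to an offline om model.

--model: path and file name of the file to convert.

--framework=1: original framework type; 1 is MindSpore.

--output: path and file name where the converted offline model is stored.

--soc_version: chip version to target during conversion (this must be specified; I left it out at first and kept getting errors).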

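Run the script with: bash air2om.sh ./ww.air ../mxbase/models/lenet

Result: an om model file is produced.

For the conversion procedure and configuration parameters of other frameworks such as Caffe and TensorFlow, see the Ascend CANN Community Edition documentation.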
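The atc invocation in air2om.sh can also be assembled from Python, which makes it easier to catch a missing --soc_version before the tool runs. The following is a minimal sketch, not part of the original project: the function names `build_atc_command` and `format_command` are hypothetical, and the flags and default path are simply the ones used in the script above.

```python
import shlex


def build_atc_command(model_path, output_name,
                      atc_bin="/usr/local/Ascend/atc/bin/atc",
                      soc_version="Ascend310"):
    """Build the argument list for the atc call shown in air2om.sh."""
    if not soc_version:
        # The post notes that leaving out --soc_version makes the conversion fail.
        raise ValueError("soc_version must be specified")
    return [
        atc_bin,
        "--model={}".format(model_path),
        "--mode=0",        # 0: convert to an offline om model
        "--framework=1",   # 1: MindSpore
        "--output={}".format(output_name),
        "--input_format=NCHW",
        "--soc_version={}".format(soc_version),
    ]


def format_command(args):
    """Render the argument list as a shell-safe string for logging."""
    return " ".join(shlex.quote(arg) for arg in args)


if __name__ == "__main__":
    cmd = build_atc_command("./ww.air", "../mxbase/models/lenet")
    print(format_command(cmd))
    # To run the conversion on a machine with the ATC tool installed:
    # import subprocess; subprocess.run(cmd, check=True)
```

Building a list instead of a single string avoids shell quoting problems when the model path contains spaces.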

(2) MxBase inference process

Approximate directory layout (it differs from model to model):

├── data

├── config # model configuration

├── coco.names # dataset class names

├── infer.txt # paths of the images to run inference on

├── images # inference images

├── 1.jpg

├── 2.jpg

├── 3.jpg

......

├── model # model files

├── model_tf_aipp.om

├── mxbase

├── main.cpp # inference entry point

├── modelDetection.h # model inference header

├── modelDetection.cpp # model inference implementation

├── CMakeLists.txt # CMake build file

├── build.sh # build script

2.1 build.sh build script:

It sets up the build environment; adjust it to match your local machine.

#!/bin/bash

# Copyright 2020 Huawei Technologies Co., Ltd

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

path_cur=$(dirname $0)

echo "now_path:${path_cur}"

echo "ASCEND_VERSION:${ASCEND_VERSION}"

function check_env()

{

# set ASCEND_VERSION to nnrt/latest when it was not specified by user

if [ ! "${ASCEND_VERSION}" ]; then

export ASCEND_VERSION=nnrt/latest

echo "Set ASCEND_VERSION to the default value: ${ASCEND_VERSION}"

else

echo "ASCEND_VERSION is set to ${ASCEND_VERSION} by user"

fi

if [ ! "${ARCH_PATTERN}" ]; then

# set ARCH_PATTERN to ./ when it was not specified by user

export ARCH_PATTERN=./

echo "ARCH_PATTERN is set to the default value: ${ARCH_PATTERN}"

else

echo "ARCH_PATTERN is set to ${ARCH_PATTERN} by user"

fi

}

function build_lenet()

{

cd $path_cur

rm -rf build

mkdir -p build

cd build

cmake ..

make

ret=$?

if [ ${ret} -ne 0 ]; then

echo "Failed to build lenet."

exit ${ret}

fi

make install

}

check_env

build_lenet

2.2 CMakeLists.txt build file:

Adjust it to match your environment.

cmake_minimum_required(VERSION 3.14.1)

project(lenet)

set(TARGET lenet)

add_definitions(-DENABLE_DVPP_INTERFACE)

add_compile_options(-std=c++11 -fPIE -fstack-protector-all -fPIC -Wall)

add_link_options(-Wl,-z,relro,-z,now,-z,noexecstack -pie)

# Check environment variables

if(NOT DEFINED ENV{ASCEND_HOME})

message(FATAL_ERROR "please define environment variable:ASCEND_HOME")

endif()

if(NOT DEFINED ENV{ASCEND_VERSION})

message(WARNING "please define environment variable:ASCEND_VERSION")

endif()

if(NOT DEFINED ENV{ARCH_PATTERN})

message(WARNING "please define environment variable:ARCH_PATTERN")

endif()

set(ACL_INC_DIR $ENV{ASCEND_HOME}/$ENV{ASCEND_VERSION}/acllib/include)

set(ACL_LIB_DIR $ENV{ASCEND_HOME}/$ENV{ASCEND_VERSION}/acllib/lib64)

set(MXBASE_ROOT_DIR $ENV{MX_SDK_HOME})

set(MXBASE_INC ${MXBASE_ROOT_DIR}/include)

set(MXBASE_LIB_DIR ${MXBASE_ROOT_DIR}/lib)

set(MXBASE_POST_LIB_DIR ${MXBASE_ROOT_DIR}/lib/modelpostprocessors)

set(MXBASE_POST_PROCESS_DIR ${MXBASE_INC}/MxBase/postprocess/include)

if(DEFINED ENV{MXSDK_OPENSOURCE_DIR})

set(OPENSOURCE_DIR $ENV{MXSDK_OPENSOURCE_DIR})

else()

set(OPENSOURCE_DIR ${MXBASE_ROOT_DIR}/opensource)

endif()

# Include and library directories needed for the build

include_directories(${ACL_INC_DIR})

include_directories(${OPENSOURCE_DIR}/include)

include_directories(${OPENSOURCE_DIR}/include/opencv4)

include_directories(${MXBASE_INC})

include_directories(${MXBASE_POST_PROCESS_DIR})

link_directories(${ACL_LIB_DIR})

link_directories(${OPENSOURCE_DIR}/lib)

link_directories(${MXBASE_LIB_DIR})

link_directories(${MXBASE_POST_LIB_DIR})

# Define the main executable (source files as named in section 2.3)

add_executable(${TARGET} main_opencv.cpp LenetOpencv.cpp)

target_link_libraries(${TARGET} glog cpprest mxbase opencv_world)

install(TARGETS ${TARGET} RUNTIME DESTINATION ${PROJECT_SOURCE_DIR}/)
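Because the CMake file above depends on several environment variables, it can help to check them before running build.sh. The snippet below is a small sketch under stated assumptions: the function name `check_build_env` is hypothetical, and treating MX_SDK_HOME as required is a choice made here (the CMake file reads it without checking it).

```python
import os

# Variables read by the CMakeLists.txt above. ASCEND_HOME is a fatal error there;
# MX_SDK_HOME is treated as required here only as an assumption of this sketch.
REQUIRED = ("ASCEND_HOME", "MX_SDK_HOME")
# CMake only warns about these two; build.sh gives them default values.
RECOMMENDED = ("ASCEND_VERSION", "ARCH_PATTERN")


def check_build_env(env):
    """Return (missing_required, missing_recommended) for a mapping of variables."""
    missing_required = [name for name in REQUIRED if not env.get(name)]
    missing_recommended = [name for name in RECOMMENDED if not env.get(name)]
    return missing_required, missing_recommended


if __name__ == "__main__":
    errors, warnings = check_build_env(os.environ)
    for name in warnings:
        print("warning: {} is not set".format(name))
    for name in errors:
        print("error: {} is not set".format(name))
```

Passing the mapping in as an argument keeps the check easy to try with a plain dict instead of the real environment.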

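2.3 Inference code

2.3.1 Entry point: main_opencv.cpp

Purpose: initialize the parameters the model configuration needs and load the images for the inference that follows.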

#include "MxBase/Log/Log.h"

#include <dirent.h>

#include <chrono>

#include <memory>

#include <string>

#include <vector>

#include "LenetOpencv.h"

namespace {

const uint32_t CLASS_NUM = 10;

} // namespace

APP_ERROR ScanImages(const std::string &path, std::vector<std::string> &imgFiles)

{

DIR *dirPtr = opendir(path.c_str());

if (dirPtr == nullptr) {

LogError << "opendir failed. dir:" << path;

return APP_ERR_INTERNAL_ERROR;

}

dirent *direntPtr = nullptr;

while ((direntPtr = readdir(dirPtr)) != nullptr) {

std::string fileName = direntPtr->d_name;

if (fileName == "." || fileName == "..") {

continue;

}

imgFiles.emplace_back(path + "/" + fileName);

}

closedir(dirPtr);

return APP_ERR_OK;

}

int main(int argc, char* argv[]){

if (argc <= 1) {

LogError << "Please input image path, such as './lenet image_dir'.";

return APP_ERR_OK;

}

InitParam initParam = {};

initParam.deviceId = 0;

initParam.classNum = CLASS_NUM;

initParam.checkTensor = true;

initParam.modelPath = "../models/lenet.om";

auto lenet = std::make_shared<LenetOpencv>();

APP_ERROR ret = lenet->Init(initParam);

if (ret != APP_ERR_OK) {

LogError << "Lenet init failed, ret=" << ret << ".";

return ret;

}else{

LogInfo << "Lenet init success";

}

std::string imgPath = argv[1];

std::vector<std::string> imgFilePaths;

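ret = ScanImages(imgPath, imgFilePaths);

if (ret != APP_ERR_OK) {

LogError << "ScanImages failed, ret=" << ret << ".";

lenet->DeInit();

return ret;

}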

auto startTime = std::chrono::high_resolution_clock::now();

for (auto &imgFile : imgFilePaths) {

ret = lenet->Process(imgFile);

if (ret != APP_ERR_OK) {

LogError << "lenet process failed, ret=" << ret << ".";

lenet->DeInit();

return ret;

}

}

auto endTime = std::chrono::high_resolution_clock::now();

lenet->DeInit();

double costMilliSecs = std::chrono::duration<double, std::milli>(endTime - startTime).count();

double fps = 1000.0 * imgFilePaths.size() / lenet->GetInferCostMilliSec();

LogInfo << "[Process Delay] cost: " << costMilliSecs << " ms\tfps: " << fps << " imgs/sec";

return APP_ERR_OK;

}
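The throughput printed at the end of main() is the number of images divided by the accumulated inference time, scaled from milliseconds to seconds. As a quick illustration of that arithmetic (a sketch only; the function name `frames_per_second` is hypothetical and the zero-time guard is an addition that main() above does not have):

```python
def frames_per_second(num_images, infer_cost_ms):
    """fps = 1000 * number of images / total inference time in milliseconds."""
    if infer_cost_ms <= 0:
        # Guard added in this sketch: avoids dividing by zero when nothing was processed.
        return 0.0
    return 1000.0 * num_images / infer_cost_ms


if __name__ == "__main__":
    # 100 images in 2000 ms of accumulated inference time.
    print(frames_per_second(100, 2000.0))
```

The result only reflects model throughput if the accumulated time counts each inference once.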

2.3.2 Model inference source and .h file

Purpose: the concrete implementation of model inference.

// LenetOpencv.cpp

#include "LenetOpencv.h"

#include <chrono>

#include "MxBase/DeviceManager/DeviceManager.h"

#include "MxBase/Log/Log.h"

#include "MxBase/ConfigUtil/ConfigUtil.h"

using namespace MxBase;

namespace {

const uint32_t YUV_BYTE_NU = 3;

const uint32_t YUV_BYTE_DE = 2;

const uint32_t VPC_H_ALIGN = 2;

}

APP_ERROR LenetOpencv::Init(const InitParam &initParam)

{

deviceId_ = initParam.deviceId;

APP_ERROR ret = MxBase::DeviceManager::GetInstance()->InitDevices();

if (ret != APP_ERR_OK) {

LogError << "Init devices failed, ret=" << ret << ".";

return ret;

}

ret = MxBase::TensorContext::GetInstance()->SetContext(initParam.deviceId);

if (ret != APP_ERR_OK) {

LogError << "Set context failed, ret=" << ret << ".";

return ret;

}

dvppWrapper_ = std::make_shared<MxBase::DvppWrapper>();

ret = dvppWrapper_->Init();

if (ret != APP_ERR_OK) {

LogError << "DvppWrapper init failed, ret=" << ret << ".";

return ret;

}

model_ = std::make_shared<MxBase::ModelInferenceProcessor>();

ret = model_->Init(initParam.modelPath, modelDesc_);

if (ret != APP_ERR_OK) {

LogError << "ModelInferenceProcessor init failed, ret=" << ret << ".";

return ret;

}

MxBase::ConfigData configData;

const std::string checkTensor = initParam.checkTensor ? "true" : "false";

configData.SetJsonValue("CLASS_NUM", std::to_string(initParam.classNum));

configData.SetJsonValue("CHECK_MODEL", checkTensor);

auto jsonStr = configData.GetCfgJson().serialize();

std::map<std::string, std::shared_ptr<void>> config;

return APP_ERR_OK;

}

APP_ERROR LenetOpencv::DeInit()

{

dvppWrapper_->DeInit();

model_->DeInit();

MxBase::DeviceManager::GetInstance()->DestroyDevices();

return APP_ERR_OK;

}

APP_ERROR LenetOpencv::ReadImage(const std::string &imgPath, cv::Mat &imageMat)

{

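imageMat = cv::imread(imgPath, cv::IMREAD_COLOR);

if (imageMat.empty()) {

LogError << "Failed to read image: " << imgPath;

return APP_ERR_INTERNAL_ERROR;

}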

return APP_ERR_OK;

}

APP_ERROR LenetOpencv::CVMatToTensorBase(const cv::Mat &imageMat, MxBase::TensorBase &tensorBase)

{

const uint32_t dataSize = imageMat.cols * imageMat.rows * YUV444_RGB_WIDTH_NU;

LogInfo << "image size " << imageMat.cols << " " << imageMat.rows;

MemoryData memoryDataDst(dataSize, MemoryData::MEMORY_DEVICE, deviceId_);

MemoryData memoryDataSrc(imageMat.data, dataSize, MemoryData::MEMORY_HOST_MALLOC);

APP_ERROR ret = MemoryHelper::MxbsMallocAndCopy(memoryDataDst, memoryDataSrc);

if (ret != APP_ERR_OK) {

LogError << GetError(ret) << "Memory malloc failed.";

return ret;

}

std::vector<uint32_t> shape = {imageMat.rows * YUV444_RGB_WIDTH_NU, static_cast<uint32_t>(imageMat.cols)};

tensorBase = TensorBase(memoryDataDst, false, shape, TENSOR_DTYPE_UINT8);

return APP_ERR_OK;

}

APP_ERROR LenetOpencv::Inference(const std::vector<MxBase::TensorBase> &inputs,

std::vector<MxBase::TensorBase> &outputs)

{

//model_ is <MxBase::ModelInferenceProcessor> object, modelDesc_ is an object which save the model_info

auto dtypes = model_->GetOutputDataType();

for (size_t i = 0; i < modelDesc_.outputTensors.size(); ++i) {

std::vector<uint32_t> shape = {};

for (size_t j = 0; j < modelDesc_.outputTensors[i].tensorDims.size(); ++j) {

shape.push_back((uint32_t)modelDesc_.outputTensors[i].tensorDims[j]);

}

TensorBase tensor(shape, dtypes[i], MemoryData::MemoryType::MEMORY_DEVICE, deviceId_);

APP_ERROR ret = TensorBase::TensorBaseMalloc(tensor);

if (ret != APP_ERR_OK) {

LogError << "TensorBaseMalloc failed, ret=" << ret << ".";

return ret;

}

outputs.push_back(tensor);

}

DynamicInfo dynamicInfo = {};

dynamicInfo.dynamicType = DynamicType::STATIC_BATCH;

auto startTime = std::chrono::high_resolution_clock::now();

APP_ERROR ret = model_->ModelInference(inputs, outputs, dynamicInfo);

auto endTime = std::chrono::high_resolution_clock::now();

double costMs = std::chrono::duration<double, std::milli>(endTime - startTime).count();// save time

inferCostTimeMilliSec += costMs;

if (ret != APP_ERR_OK) {

LogError << "ModelInference failed, ret=" << ret << ".";

return ret;

}

return APP_ERR_OK;

}

//image process

APP_ERROR LenetOpencv::Process(const std::string &imgPath)

{

cv::Mat imageMat;

APP_ERROR ret = ReadImage(imgPath, imageMat);

if (ret != APP_ERR_OK) {

LogError << "ReadImage failed, ret=" << ret << ".";

return ret;

}

std::vector<MxBase::TensorBase> inputs = {};

std::vector<MxBase::TensorBase> outputs = {};

TensorBase tensorBase;

//image ==>tensorBase

ret = CVMatToTensorBase(imageMat, tensorBase);

if (ret != APP_ERR_OK) {

LogError << "CVMatToTensorBase failed, ret=" << ret << ".";

return ret;

}

//put tensorBase to inputs

inputs.push_back(tensorBase);

// run inference for this image; Inference() already adds the model time to inferCostTimeMilliSec

ret = Inference(inputs, outputs);

if (ret != APP_ERR_OK) {

LogError << "Inference failed, ret=" << ret << ".";

return ret;

}

/*

//post process

std::vector<std::vector<MxBase::ClassInfo>> batchClsInfos = {};

ret = PostProcess(outputs, batchClsInfos);

if (ret != APP_ERR_OK) {

LogError << "PostProcess failed, ret=" << ret << ".";

return ret;

}

ret = SaveResult(imgPath, batchClsInfos);

if (ret != APP_ERR_OK) {

LogError << "Save infer results into file failed. ret = " << ret << ".";

return ret;

}

*/

return APP_ERR_OK;

}

// LenetOpencv.h header

#ifndef MXBASE_LENETOPENCV_H

#define MXBASE_LENETOPENCV_H

#include <opencv2/opencv.hpp>

#include "MxBase/DvppWrapper/DvppWrapper.h"

#include "MxBase/ModelInfer/ModelInferenceProcessor.h"

#include "MxBase/Tensor/TensorContext/TensorContext.h"

struct InitParam {

uint32_t deviceId;

uint32_t classNum;

bool checkTensor;

std::string modelPath;

};

class LenetOpencv{

public:

APP_ERROR Init(const InitParam &initParam);

APP_ERROR DeInit();

APP_ERROR ReadImage(const std::string &imgPath, cv::Mat &imageMat);

APP_ERROR CVMatToTensorBase(const cv::Mat &imageMat, MxBase::TensorBase &tensorBase);

APP_ERROR Inference(const std::vector<MxBase::TensorBase> &inputs, std::vector<MxBase::TensorBase> &outputs);

APP_ERROR Process(const std::string &imgPath);

// get infer time

double GetInferCostMilliSec() const {return inferCostTimeMilliSec;}

private:

std::shared_ptr<MxBase::DvppWrapper> dvppWrapper_;

std::shared_ptr<MxBase::ModelInferenceProcessor> model_;

MxBase::ModelDesc modelDesc_;

uint32_t deviceId_ = 0;

// infer time

double inferCostTimeMilliSec = 0.0;

};

#endif

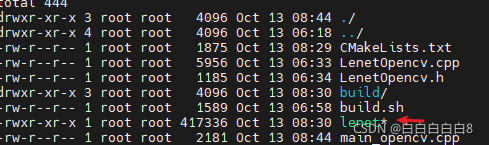

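2.4 Build

Once all the steps above are done, build by running bash build.sh.

This produces the executable, which the terminal listing shows in green.

2.5 Preprocess the MNIST dataset into jpg images

See my other blog post on converting the MNIST dataset to jpg images with Python:

python 将MNIST数据集转为jpg图片格式_白白白-CSDN博客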

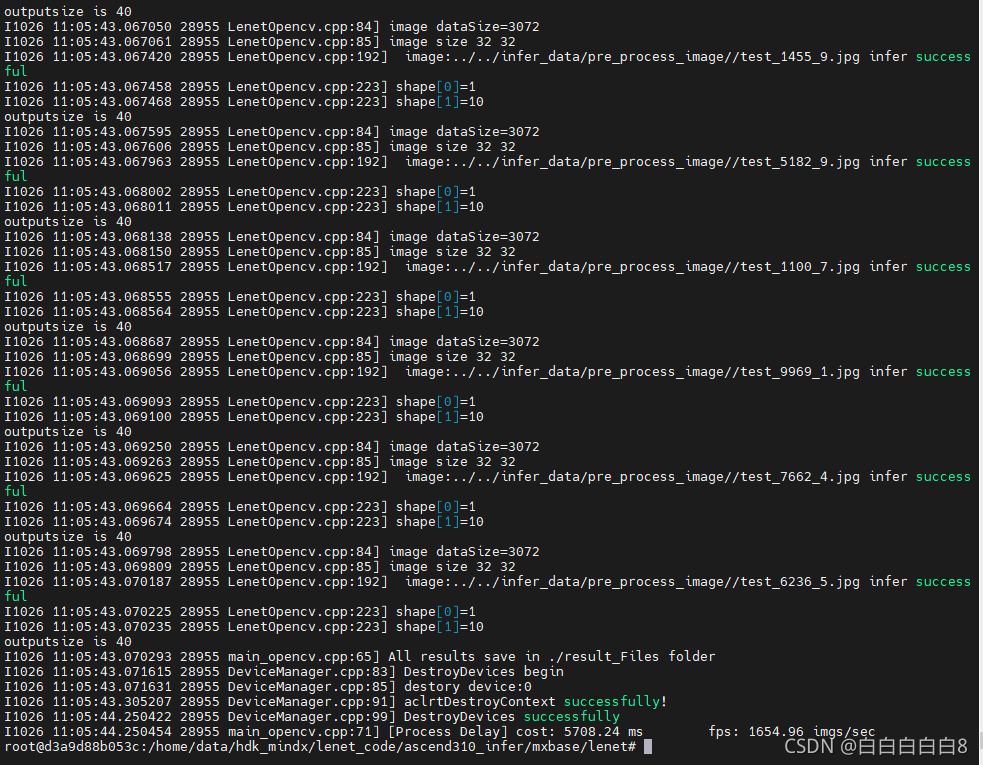

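2.6 Run the inference service

./lenet image_path (image_path is the path to the images to run inference on).

Run the inference command in the "mxbase/lenet" directory, for example:

./lenet ../../infer_data/pre_process_image/

Result:

If the output matches the figure, inference ran successfully, and all inference results are saved in the result_Files folder in the same directory.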

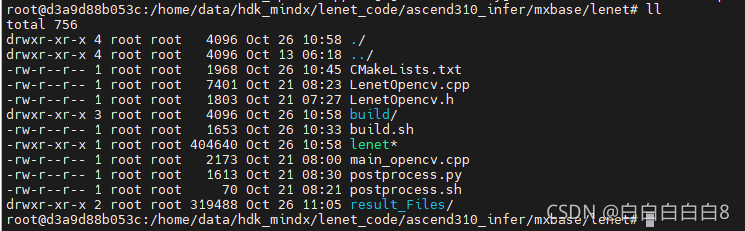

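2.7 Post-processing and checking the results

Next, post-process the inference results to compute the accuracy.

python postprocess.py result_path infer_data (result_path is where the inference results are stored; infer_data is the image path used for inference in the previous step).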

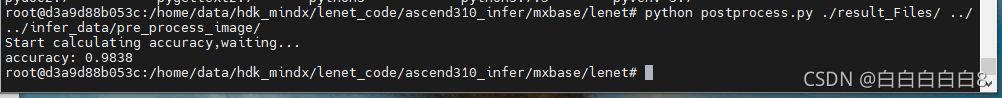

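Run the post-processing command in the "mxbase/lenet" directory:

python postprocess.py ./result_Files/ ../../infer_data/pre_process_image/

Result: as the figure above shows, the computed accuracy is 0.9838. The accuracy is also written to the acc.log file.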
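The accuracy reported here is the fraction of images whose predicted digit matches the true label. postprocess.py itself is not shown in this post, so the following is only a sketch of that calculation: the function name `accuracy` is hypothetical, and how predictions and labels are read from result_Files and the image folder is left out.

```python
def accuracy(predictions, labels):
    """Return the fraction of predictions that equal the corresponding label."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        return 0.0
    correct = sum(1 for pred, true in zip(predictions, labels) if pred == true)
    return correct / len(labels)


if __name__ == "__main__":
    # Toy data, not taken from the MNIST run in this post.
    print(accuracy([7, 2, 1, 0], [7, 2, 1, 4]))
```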

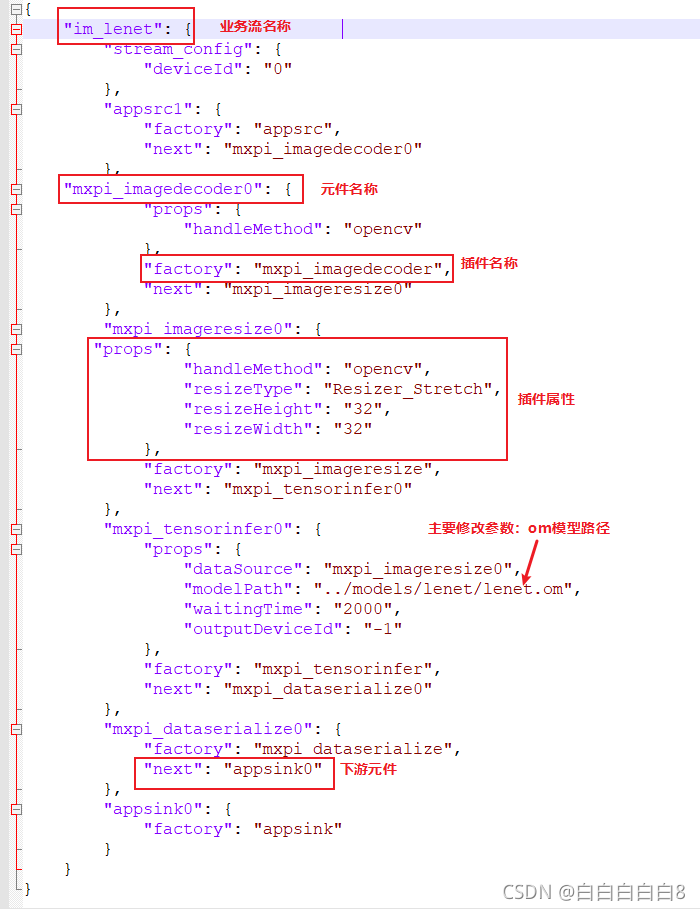

MindX SDK inference

- Modify the configuration files.
  - Modify the pipeline file (default location: ascend310_infer -> sdk -> pipeline -> lenet.pipeline). Enter the pipeline directory:

cd /home/data/hdk_mindx/lenet_code/ascend310_infer/sdk/pipeline/

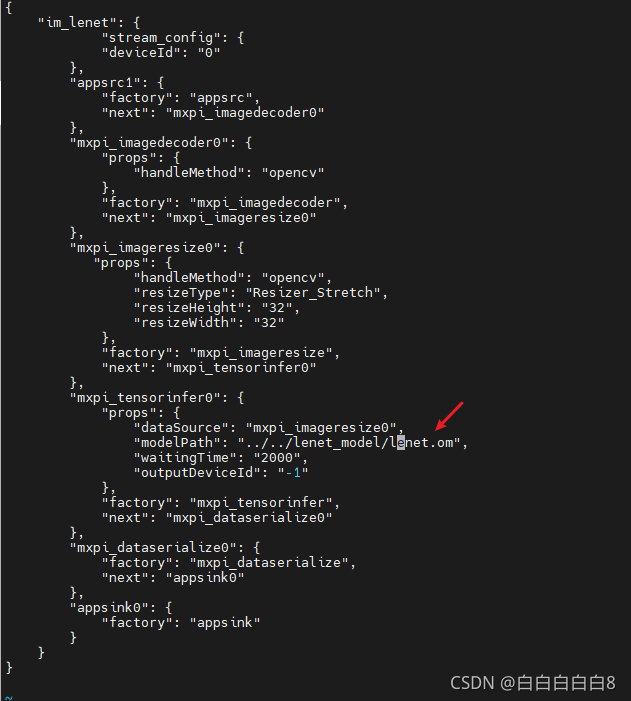

In the earlier steps the om model was placed at ascend310_infer -> lenet_model -> lenet.om, so change the model path to match your actual location; nothing else needs to change.

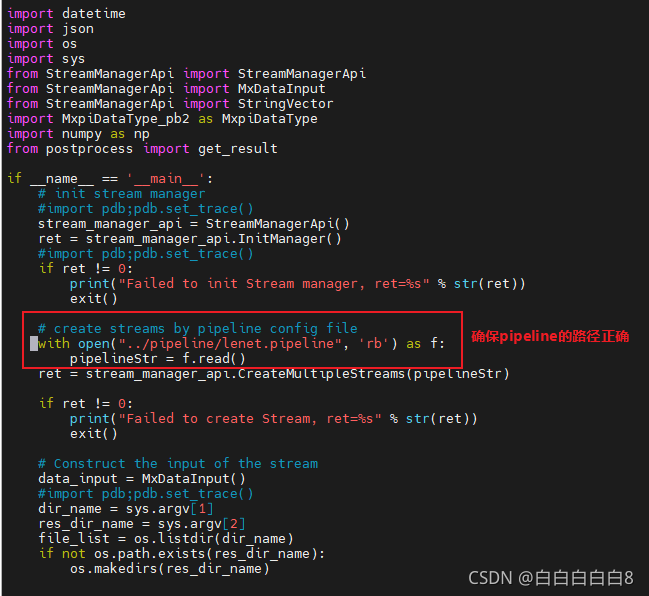

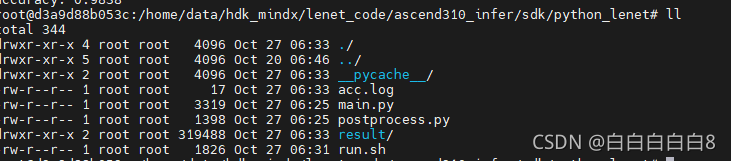

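  - sdk -> python_lenet -> main.py: check the parameters in the inference script.
  - sdk -> python_lenet -> run.sh: check the environment variable settings in the inference launch script.
  - Make sure system environment variables such as ${MX_SDK_HOME} mentioned above are set correctly.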
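The pipeline edit described above (pointing the model path at lenet.om) can also be scripted. This is a sketch under two assumptions that the post does not confirm: that lenet.pipeline is a JSON document, and that the model location is stored under a key named "modelPath". The function name `set_model_path` and the sample structure are hypothetical.

```python
import json


def set_model_path(node, new_path):
    """Recursively set every "modelPath" value to new_path; return how many changed."""
    changed = 0
    if isinstance(node, dict):
        for key, value in node.items():
            if key == "modelPath":
                node[key] = new_path  # overwriting an existing key is safe while iterating
                changed += 1
            else:
                changed += set_model_path(value, new_path)
    elif isinstance(node, list):
        for item in node:
            changed += set_model_path(item, new_path)
    return changed


if __name__ == "__main__":
    # Hypothetical pipeline content, for illustration only.
    pipeline = {"lenet": {"infer0": {"props": {"modelPath": "old.om"}}}}
    count = set_model_path(pipeline, "../../lenet_model/lenet.om")
    print(count)
    print(json.dumps(pipeline, indent=2))
```

For a real file, load it with `json.load`, call the function, and write it back with `json.dump`; check the key name against your own pipeline file first.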

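- Run the inference service.
  - Run inference:

bash run.sh infer_image_path output_result_path

Parameters: infer_image_path is the path to the images to run inference on; output_result_path is where the results are saved. For example:

bash run.sh ../../infer_data/pre_process_image/ ./result

In the earlier steps the inference image path was ascend310_infer -> infer_data -> pre_process_image.

The results are saved in the result folder in the same directory.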

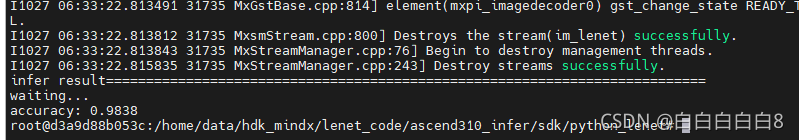

Output like the figure above means inference has finished.

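  - Check the inference results.

(The main.py inference script already includes the post-processing and accuracy calculation steps, so you only need to run run.sh; the accuracy is shown when it finishes.)

The accuracy here matches the accuracy of the mxbase inference.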