Wang Junkai is seriously impressive

Lip-syncing

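Original video (bilibili):

Can you tell real singing from lip-syncing at a glance? Analyzing the singers at the New Year's Eve concerts with software!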

1. Trim the audio clip with ffmpeg

If you don't have ffmpeg, editing software such as Premiere Pro works too.

```shell
ffmpeg -ss 0:30 -i input.wav -t 15 out.wav
```

This takes `input.wav`, cuts a 15 s clip starting at 00:30, and writes it to `out.wav`.

Since the clip is only used for frequency analysis, parameters such as codec and sample rate hardly matter; the defaults are fine.
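If neither ffmpeg nor an editor is at hand, the same cut can be made in Python for uncompressed WAV files. Below is a minimal sketch using only the standard-library `wave` module. The function name `trim_wav` is hypothetical, and it assumes a PCM WAV input (it cannot read MP3 or compressed WAV).

```python
import wave

def trim_wav(src, dst, start_s, duration_s):
    """Copy `duration_s` seconds of audio starting at `start_s` from src to dst.

    Hypothetical helper: similar in effect to
    `ffmpeg -ss <start> -i src -t <duration> dst`, but PCM WAV only.
    """
    with wave.open(src, "rb") as fin:
        params = fin.getparams()
        rate = fin.getframerate()
        # Clamp the start to the end of the file so an out-of-range start is harmless
        start_frame = min(int(start_s * rate), fin.getnframes())
        n_frames = int(duration_s * rate)
        fin.setpos(start_frame)
        frames = fin.readframes(n_frames)  # returns fewer frames near the end
    with wave.open(dst, "wb") as fout:
        fout.setparams(params)  # same channels, sample width and sample rate
        fout.writeframes(frames)  # the frame count in the header is fixed on close

# Same cut as the ffmpeg example: 15 s starting at 0:30
# trim_wav("input.wav", "out.wav", 30, 15)
```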

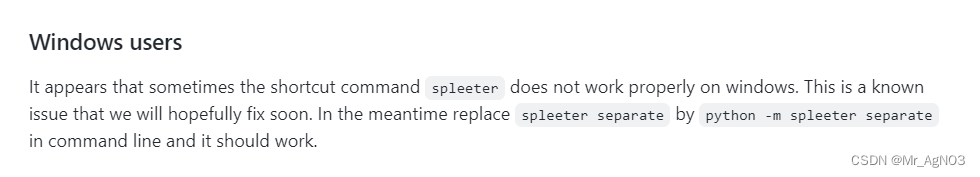

2. Remove the backing track with spleeter

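Look up the installation details yourself. If you can't get it installed or running, other online services can also remove the background music.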

GitHub repository:

https://github.com/deezer/spleeter

Install it directly with pip (at the time of writing, Python 3.11 was not supported; use 3.10 or earlier):

```shell
pip install spleeter
```

Usage:

```shell
python -m spleeter separate "pm.mp3" -p spleeter:2stems -o "output" --verbose
```

Absolute paths are recommended.

The output is written to `output\<audio name>`, where `vocals.wav` is the vocal track and `accompaniment.wav` is the accompaniment.
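To script this step, you can run the same command-line invocation from Python and then pick up the two stems. A minimal sketch, assuming spleeter is installed in the current Python environment and writes `vocals.wav` and `accompaniment.wav` into `<output>/<audio name>/` as described above. The helper names `spleeter_command`, `stem_paths` and `separate` are hypothetical. Paths are resolved to absolute ones, following the recommendation above.

```python
import subprocess
import sys
from pathlib import Path

def spleeter_command(audio, out_dir, model="spleeter:2stems"):
    """Build the argument list for `python -m spleeter separate ...`."""
    return [sys.executable, "-m", "spleeter", "separate",
            str(audio), "-p", model, "-o", str(out_dir)]

def stem_paths(audio, out_dir):
    """Expected 2-stem results: <out_dir>/<audio name without extension>/."""
    stem_dir = Path(out_dir) / Path(audio).stem
    return stem_dir / "vocals.wav", stem_dir / "accompaniment.wav"

def separate(audio, out_dir="output"):
    # Absolute paths avoid surprises with the current working directory
    audio, out_dir = Path(audio).resolve(), Path(out_dir).resolve()
    subprocess.run(spleeter_command(audio, out_dir), check=True)
    vocals, accompaniment = stem_paths(audio, out_dir)
    if not vocals.exists():
        raise FileNotFoundError(f"spleeter did not produce {vocals}")
    return vocals, accompaniment

# vocals, accompaniment = separate("pm.mp3")
```

Passing an argument list to `subprocess.run` (rather than one command string) sidesteps the quoting differences between cmd, PowerShell and Unix shells.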

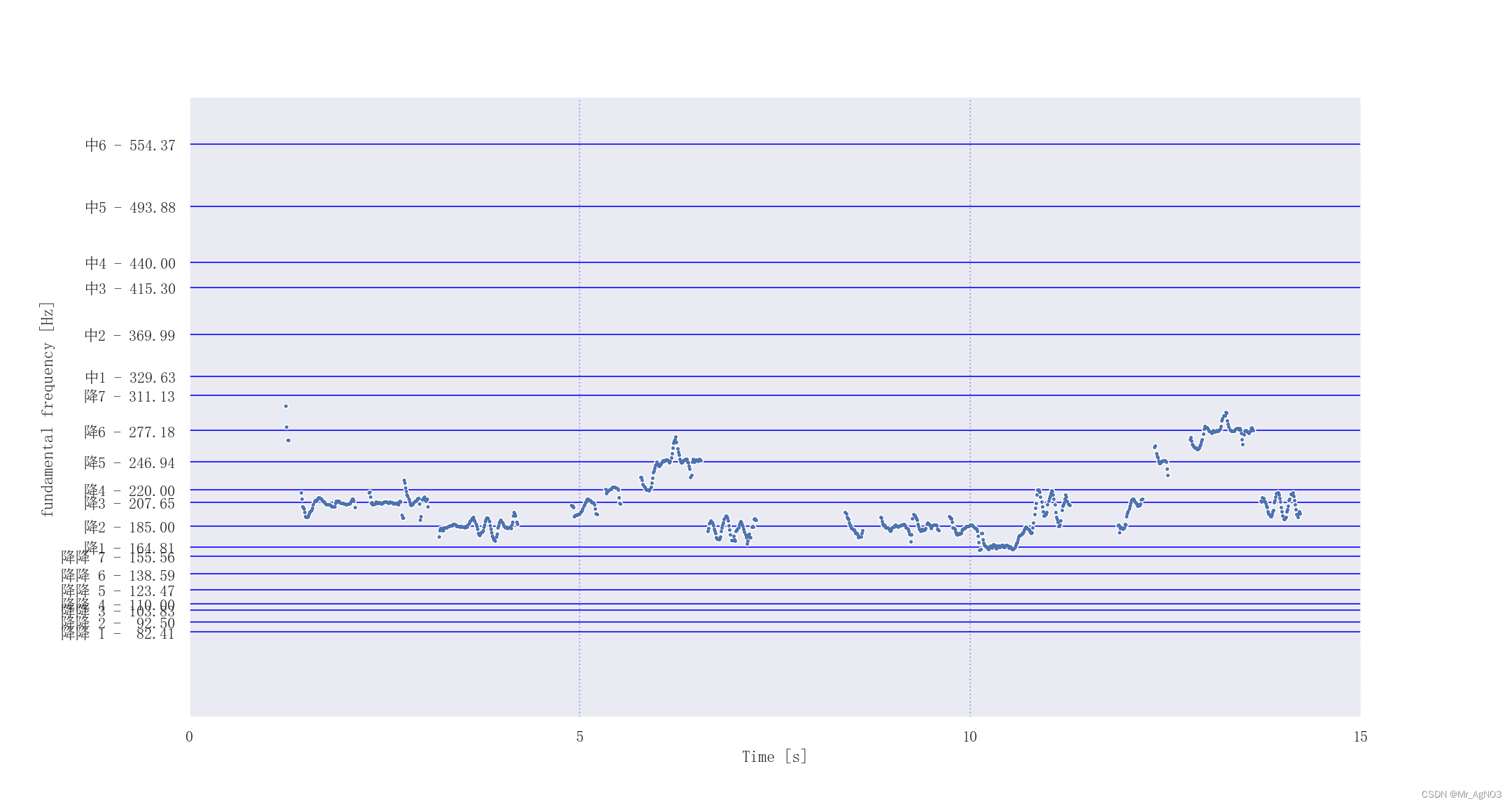

3. Analyze the pitch with parselmouth

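The full script is at the end. It is based on code posted on GitHub and the code released in Tiange's (天哥) video, with my own modifications.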

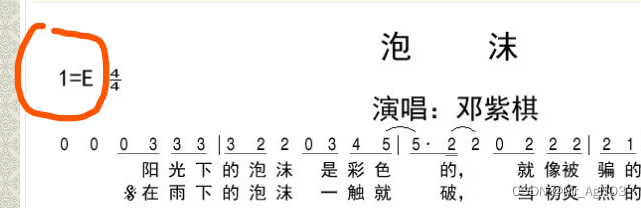

`tone = 'E'` sets the key of the current song; this one is in E.
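The script turns the key into reference lines using twelve-tone equal temperament: each semitone multiplies the frequency by 2^(1/12), counted from A = 440 Hz, and a major scale uses the semitone offsets 0, 2, 4, 5, 7, 9, 11. Here is a standalone sketch of that arithmetic. The function name `major_scale` is hypothetical; it mirrors what the `Tonality` class in the full script does.

```python
NOTE_NAMES = ['A', 'A#', 'B', 'C', 'C#', 'D', 'D#', 'E', 'F', 'F#', 'G', 'G#']
MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets of degrees 1..7

def major_scale(key, octave=0, a_freq=440.0):
    """Frequencies (Hz) of degrees 1..7 of `key` major.

    As in the full script, the tonic is placed 0-11 semitones above
    A = 440 Hz, then shifted by whole octaves (`octave` may be negative).
    """
    semitones = NOTE_NAMES.index(key.upper())
    tonic = a_freq * 2 ** (semitones / 12) * 2 ** octave
    return [tonic * 2 ** (step / 12) for step in MAJOR_STEPS]

for degree, f in enumerate(major_scale('E', octave=-1), start=1):
    print(degree, f"{f:7.2f}")
```

With `octave=-1` the E scale starts at E4 ≈ 329.63 Hz, and its fourth degree lands exactly on A4 = 440 Hz.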

`snd = parselmouth.Sound(r"output\pm\vocals.wav")` sets the input audio file. Pass in the file produced by the two previous steps.

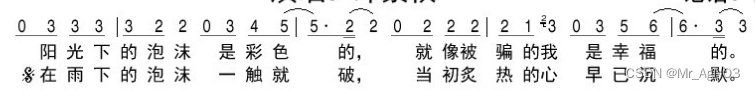

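This is the pitch plot of the first two lines of 《泡沫》 ("Bubble"), compared with the numbered-notation (jianpu) score (jianpu is the only notation I can read).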

The track was downloaded from QQ Music, so it has naturally been edited in post, and the intonation is excellent.
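To put a number on intonation instead of eyeballing the plot, you can measure how far a detected frequency is from the nearest reference line in cents, 1200·log2(f / f_ref), where 100 cents is one semitone. A minimal sketch: `cents` and `nearest_degree` are hypothetical helpers, and the reference list is assumed to come from something like `Tonality(tone).get_freq()` in the full script. Because that list contains only the seven scale degrees, an intentional accidental (a note outside the key) will show up as a large offset.

```python
import math

def cents(f, f_ref):
    """Signed distance from f_ref to f in cents (positive = sharp)."""
    return 1200 * math.log2(f / f_ref)

def nearest_degree(f, ref_freqs):
    """Return (index, cents offset) of the reference frequency closest to f.

    Closeness is measured in cents (a log scale), not in Hz.
    """
    best = min(range(len(ref_freqs)), key=lambda i: abs(cents(f, ref_freqs[i])))
    return best, cents(f, ref_freqs[best])

# E major around A4: E4, F#4, G#4, A4, B4, C#5, D#5
refs = [329.63, 369.99, 415.30, 440.0, 493.88, 554.37, 622.25]
print(nearest_degree(445.0, refs))
```

For 445 Hz the nearest line is A4 (index 3) at about +19.6 cents, i.e. roughly a fifth of a semitone sharp.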

4. Full code

```python
import parselmouth
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns
from itertools import product
from matplotlib import rcParams
from matplotlib.ticker import MultipleLocator

# Plot style; SimSun is only needed if the labels are switched to Chinese
sns.set_theme()
rcParams.update({
    "font.family": "serif",
    "font.size": 8,
    "mathtext.fontset": "stix",
    "font.serif": ["SimSun"],
})

tone = 'E'  # key of the song

class Tonality:
    base_diff = [0, 2, 4, 5, 7, 9, 11]  # major-scale semitone offsets
    tones = ['A', 'A#', 'B', 'C', 'C#', 'D', 'D#', 'E', 'F', 'F#', 'G', 'G#']

    def __init__(self, tone):
        tone = tone.upper()
        if tone not in self.tones:
            tone = tone[::-1]  # also accept a reversed spelling such as '#C'
        assert tone in self.tones, f"unknown key: {tone}"
        self.base_freq = self.tone_to_freq(tone)

    def tone_to_freq(self, tone):
        # Equal temperament, counting semitones up from A = 440 Hz
        A_freq = 440
        return A_freq * (2 ** (self.tones.index(tone) / 12))

    def get_freq(self):
        # Degrees 1-7 over five octaves: three below the base octave, the base octave, one above
        ret = []
        for r in range(-3, 2):
            base = self.base_freq * (2 ** r)
            ret.extend([base * (2 ** (diff / 12)) for diff in self.base_diff])
        return ret

# Input: the vocal track separated by spleeter in step 2
snd = parselmouth.Sound(r"output\pm\vocals.wav")
pitch = snd.to_pitch()

plt.figure(figsize=(15, 8), dpi=144)

# Y ticks: one line per scale degree, labelled with octave band, degree and Hz
y_ticks_v = Tonality(tone).get_freq()
stage = ['low-low ', 'low ', 'mid ', 'high ', 'high-high ']
# Chinese labels from the original plot (need SimSun): ['降降 ', '降', '中', '升', '升升']
basePitch = list('1234567')  # numbered-notation (jianpu) degrees
y_ticks = [''.join(i) for i in product(stage, basePitch)]
y_ticks = [i + " - %6.2f" % j for i, j in zip(y_ticks, y_ticks_v)]
plt.yticks(y_ticks_v, y_ticks)

# Unvoiced frames come back as 0 Hz; hide them
pitch_values = pitch.selected_array['frequency']
pitch_values[pitch_values == 0] = np.nan

# A white halo under small coloured dots keeps them readable over the grid
plt.plot(pitch.xs(), pitch_values, 'o', markersize=3, color='w')
plt.plot(pitch.xs(), pitch_values, 'o', markersize=1.5)
plt.ylim(0, pitch.ceiling)
plt.ylabel("fundamental frequency [Hz]")
plt.xlabel('Time [s]')
plt.xlim([snd.xmin, snd.xmax])

ax = plt.gca()
ax.xaxis.set_major_locator(MultipleLocator(5))  # vertical grid line every 5 s
ax.xaxis.grid(color='blue', ls=':', lw=1, alpha=0.3)
ax.yaxis.grid(color='blue', ls='-', lw=1, alpha=0.6)

plt.savefig("pitch.png")
```
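The plot is judged by eye. If you want a single number for a whole clip, one option (my own addition, not part of the script above) is to take `pitch_values` as produced by the script, where unvoiced frames are `NaN`, and compute each voiced frame's distance in cents to the nearest frequency in `y_ticks_v`. A sketch with hypothetical function names; the 50-cent tolerance is an arbitrary choice for illustration.

```python
import numpy as np

def cents_to_nearest(pitch_values, ref_freqs):
    """Per-frame cents offset from the nearest reference frequency.

    pitch_values: 1-D array in Hz; NaN or 0 marks an unvoiced frame.
    Returns an array of the same length, NaN for unvoiced frames.
    """
    f = np.asarray(pitch_values, dtype=float)
    refs = np.asarray(ref_freqs, dtype=float)
    out = np.full(f.shape, np.nan)
    voiced = np.isfinite(f) & (f > 0)
    # Offsets of every voiced frame to every reference line, shape (frames, refs)
    offsets = 1200 * np.log2(f[voiced][:, None] / refs[None, :])
    nearest = np.argmin(np.abs(offsets), axis=1)
    out[voiced] = offsets[np.arange(len(nearest)), nearest]
    return out

def in_tune_fraction(pitch_values, ref_freqs, tolerance=50.0):
    """Share of voiced frames within `tolerance` cents of a reference line."""
    off = cents_to_nearest(pitch_values, ref_freqs)
    voiced = ~np.isnan(off)
    if not voiced.any():
        return float("nan")
    return float(np.mean(np.abs(off[voiced]) <= tolerance))

# print(in_tune_fraction(pitch_values, y_ticks_v))
```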