1. Runtime environment: Windows 10, Flink 1.10, Kafka 0.10, Scala 2.11, IntelliJ IDEA 2021

2. Core code

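```scala
import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011

object KafkaSource {

  def main(args: Array[String]): Unit = {
    // Create the streaming execution environment
    val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

    // Configure the Kafka consumer
    val properties = new Properties()
    properties.setProperty("bootstrap.servers", "hadoop102:9092")
    properties.setProperty("group.id", "consumer-group")
    // The Flink connector ignores these two settings and uses its own byte-array
    // deserializers; SimpleStringSchema below does the actual decoding
    properties.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer")
    properties.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer")
    // Start from the latest offset when the group has no committed offset
    properties.setProperty("auto.offset.reset", "latest")

    // Add the Kafka source: topic "sensor", each record decoded as a UTF-8 string
    val stream: DataStream[String] = env.addSource(
      new FlinkKafkaConsumer011[String]("sensor", new SimpleStringSchema(), properties))

    // Print the stream with the prefix "stream:" using a single sink instance
    stream.print("stream:").setParallelism(1)

    // Launch the job
    env.execute("stream")
  }
}
```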
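If the same consumer settings are needed in more than one job, they can be factored into a small helper. The sketch below is one way to do that, assuming only `java.util.Properties`; the object `KafkaConfig` and the function `kafkaConsumerProps` are hypothetical names, not part of Flink or Kafka. It leaves out the two deserializer settings, since the Flink Kafka consumer substitutes its own byte-array deserializers regardless, and it checks `auto.offset.reset` against the three values the Kafka consumer accepts.

```scala
import java.util.Properties

object KafkaConfig {

  // Hypothetical helper: builds the consumer Properties used by KafkaSource.
  // key.deserializer / value.deserializer are deliberately omitted because the
  // Flink Kafka consumer replaces them with its own byte-array deserializers.
  def kafkaConsumerProps(
      bootstrapServers: String,
      groupId: String,
      offsetReset: String = "latest"
  ): Properties = {
    // Fail fast on values the Kafka consumer would reject for auto.offset.reset
    require(Set("latest", "earliest", "none").contains(offsetReset),
      s"unsupported auto.offset.reset: $offsetReset")

    val props = new Properties()
    props.setProperty("bootstrap.servers", bootstrapServers)
    props.setProperty("group.id", groupId)
    // With Flink's default start mode (committed group offsets), this policy
    // applies only to partitions for which no committed offset is found
    props.setProperty("auto.offset.reset", offsetReset)
    props
  }
}
```

In `KafkaSource` the helper would replace the inline configuration, for example `new FlinkKafkaConsumer011[String]("sensor", new SimpleStringSchema(), KafkaConfig.kafkaConsumerProps("hadoop102:9092", "consumer-group"))`.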

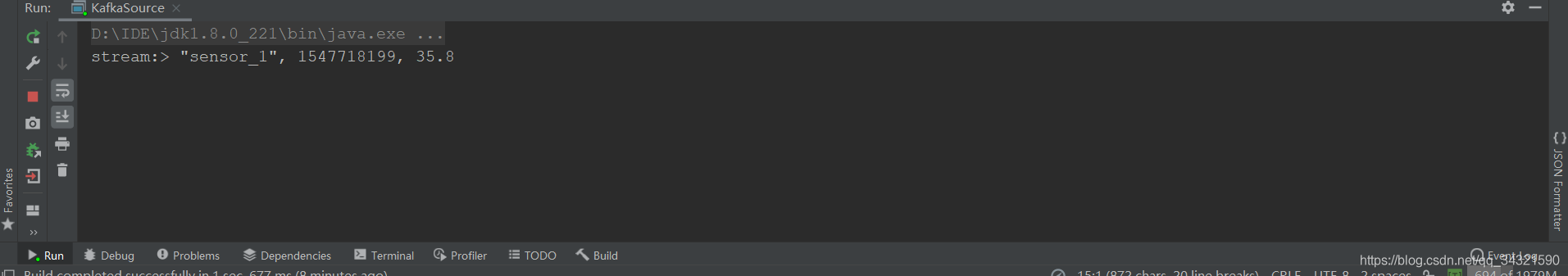

3. Execution result

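Copyright notice: This is an original article by qq_34321590, licensed under CC 4.0 BY-SA. When reposting, please include a link to the original source and this notice.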