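I wrote this post because, while training BiSeNet on my own dataset, preparing the data in Cityscapes format cost me two days, so I am recording the process here. I will not cover the theory behind BiSeNet, since I do not understand it myself. Nor will I explain in detail why each step is done the way it is: I am just a code porter who needs things to run and does not much care why.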

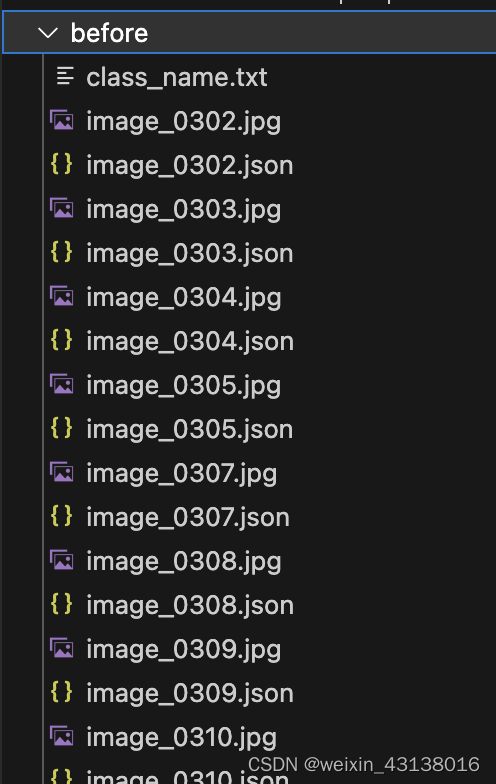

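How to annotate data with labelme is not covered here; there are plenty of tutorials online. Assume we already have data annotated with labelme. Put the annotated json files and the original images in the same folder; here I put them in a folder named before. Then create a class_name.txt file in the before folder and list the classes you annotated, one per line. I am only detecting train tracks, so there are just two classes: _background_ and lane.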
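As a minimal sketch of the file format described above (one class name per line, background first), the snippet below writes the two class names to a text file and reads them back with `read().splitlines()`, the same way the conversion script does. The helper name `write_class_names` and the temporary demo folder are illustrative assumptions, not part of the original workflow.

```python
import os
import tempfile


def write_class_names(folder, class_names):
    """Write one class name per line and return the file path (hypothetical helper)."""
    path = os.path.join(folder, "class_name.txt")
    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(class_names) + "\n")
    return path


# Demo in a temporary folder; in the real workflow the folder would be ./before
demo_dir = tempfile.mkdtemp()
txt_path = write_class_names(demo_dir, ["_background_", "lane"])

# Read the file back the way the conversion script reads it
with open(txt_path, "r", encoding="utf-8") as f:
    loaded = f.read().splitlines()
print(loaded)  # ['_background_', 'lane']
```

The position of each name in the file is what the conversion script treats as the global class index, so the order of the lines matters.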

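Next, the images and json files under before need a conversion from local classes to global classes; simply run get_jpg_and_png.py. As written, the script reads label.png and label_names.txt from the per-image _json folders under output, so those folders must already exist (for example, as produced by labelme's labelme_json_to_dataset command).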

############################
## get_jpg_and_png.py ##
############################
import os

import numpy as np
from PIL import Image


def main():
    # Make sure the folders the script writes to exist
    os.makedirs("./jpg", exist_ok=True)
    os.makedirs("./png", exist_ok=True)

    # Global classes, e.g. ["bk", "cat", "dog"]
    with open("./before/class_name.txt", "r") as f:
        class_name = f.read().splitlines()

    # Read the source folder
    count = os.listdir("./before/")
    for i in range(0, len(count)):
        # For every file ending in jpg, look for its matching png
        if count[i].endswith("jpg"):
            path = os.path.join("./before", count[i])
            img = Image.open(path)
            img.save(os.path.join("./jpg", count[i]))

            # Find the matching label png
            stem = count[i].split(".")[0]
            path = "./output/" + stem + "_json/label.png"
            img = Image.open(path)

            # Classes present in this json file (the local classes), e.g. ["bk", "dog"]
            with open("./output/" + stem + "_json/label_names.txt", "r") as f:
                names = f.read().splitlines()

            # 3-channel array with the same height and width as the label image
            new = np.zeros([np.shape(img)[0], np.shape(img)[1], 3])
            for name in names:
                # index_json is the index among the classes in the json file (local)
                index_json = names.index(name)
                # index_all is the index among all classes (global)
                index_all = class_name.index(name)
                # Convert the local class index to the global one
                new = new + np.expand_dims(index_all * (np.array(img) == index_json), -1)

            new = Image.fromarray(np.uint8(new))
            new.save(os.path.join("./png", count[i].replace("jpg", "png")))
            print(np.max(new), np.min(new))


if __name__ == '__main__':
    main()
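To make the local-to-global step in the script above easier to follow, here is a toy version of the same remapping on a 2x2 array. The class lists reuse the "bk / cat / dog" style of the script's comments; the array values are made up for illustration.

```python
import numpy as np

# Global class list (what class_name.txt would contain)
class_name = ["_background_", "cat", "dog"]
# Classes present in one image, in that image's local order (label_names.txt)
names = ["_background_", "dog"]

# A tiny stand-in for label.png: 0 = background, 1 = dog (local indices)
local_mask = np.array([[0, 1],
                       [1, 0]])

global_mask = np.zeros_like(local_mask)
for index_json, name in enumerate(names):
    index_all = class_name.index(name)
    # Pixels holding the local index receive the global index instead
    global_mask += index_all * (local_mask == index_json)

print(global_mask.tolist())  # [[0, 2], [2, 0]]
```

In the toy image "dog" is local class 1 but global class 2, so every 1 becomes a 2 while the background stays 0.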

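At this point we have an output folder, as shown in the figure below. Each _json subfolder contains img.png (the original image), info.yaml, label_names.txt (the classes you annotated), label_viz.png (the original image with the segmented regions drawn on it) and label.png (the segmentation mask).

Next, take all the img.png and label.png files out of those subfolders and put them in a JPEGImages folder and a SegmentationClass folder respectively. moveSrcMasksImage.py does this.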

#########################
## moveSrcMasksImage.py ##
#########################
import os
import os.path as osp
import shutil


def moveSrcMasksImage(json_dir):
    # Get the parent directory of the _json folders
    pre_dir = os.path.abspath(os.path.dirname(os.path.dirname(json_dir)))
    img_dir = osp.join(pre_dir, "JPEGImages")
    mask_dir = osp.join(pre_dir, "SegmentationClass")

    # Create the target directories if they do not exist
    if not osp.exists(img_dir):
        os.makedirs(img_dir)
    if not osp.exists(mask_dir):
        os.makedirs(mask_dir)

    # Copy every source image and mask to the target directories
    count = 0  # number of images processed
    for dirs in os.listdir(json_dir):
        dir_name = osp.join(json_dir, dirs)
        if not osp.isdir(dir_name):  # not a directory
            continue
        if dir_name.rsplit('_', 1)[-1] != 'json':  # not a _json folder
            continue
        if not os.listdir(dir_name):  # empty directory
            continue
        count += 1

        # Each _json folder holds img.png, label.png, label_names.txt and
        # label_viz.png; img.png and label.png are renamed after the folder
        img_path = osp.join(dir_name, 'img.png')
        label_path = osp.join(dir_name, 'label.png')
        new_name = dirs.rsplit('_', 1)[0] + '.png'
        # print('new_name: ', new_name)

        # Copy the source image to JPEGImages
        shutil.copy(img_path, osp.join(img_dir, new_name))
        print('{} ====> {}'.format(new_name, "JPEGImages"))

        # Copy the mask to SegmentationClass
        shutil.copy(label_path, osp.join(mask_dir, new_name))
        print('{} ====> {}'.format(new_name, "SegmentationClass"))

    print('Processed {} images in total'.format(count))
    return img_dir, mask_dir


if __name__ == "__main__":
    path = 'output'
    moveSrcMasksImage(path)

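Then run get_gray.py to convert the images under SegmentationClass to grayscale.

import os

import cv2

input_dir = 'SegmentationClass'  # folder of .png images saved in the previous step
out_dir = 'SegmentationClass_8'
os.makedirs(out_dir, exist_ok=True)  # create the output folder if it is missing

a = os.listdir(input_dir)
for i in a:
    img = cv2.imread(input_dir + '/' + i)
    gray = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)
    cv2.imencode('.png', gray)[1].tofile(out_dir + '/' + i)

Run train_val_test.py to split the images from the previous step into training, validation and test sets.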

# train_val_test.py
'''
Split the data into train, val and test
'''
import os
import random
import shutil

total_list = []
train_list = []
val_list = []
test_list = []

image_path = 'JPEGImages'
label_path = 'SegmentationClass_8'

# Remove any previous split and recreate the empty folders
for subset in ['train', 'val', 'test']:
    image_dir = os.path.join(image_path, subset)
    label_dir = os.path.join(label_path, subset)
    if os.path.exists(image_dir):
        shutil.rmtree(image_dir)
    os.makedirs(image_dir)
    if os.path.exists(label_dir):
        shutil.rmtree(label_dir)
    os.makedirs(label_dir)

# Collect every png image
for root, dirs, files in os.walk(image_path):
    for file in files:
        if file.endswith('png'):
            total_list.append(file)

# 70% train, 20% val, the remainder test
total_size = len(total_list)
train_size = int(total_size * 0.7)
val_size = int(total_size * 0.2)

train_list = random.sample(total_list, train_size)
remain_list = list(set(total_list) - set(train_list))
val_list = random.sample(remain_list, val_size)
test_list = list(set(remain_list) - set(val_list))

print(len(total_list))
print(len(train_list))
print(len(val_list))
print(len(test_list))

# Move each image and its label into the folder of its subset
for file in total_list:
    image_path_0 = os.path.join(image_path, file)
    label_file = file.split('.')[0] + '.png'
    label_path_0 = os.path.join(label_path, label_file)

    if file in train_list:
        subset = 'train'
    elif file in val_list:
        subset = 'val'
    else:
        subset = 'test'

    shutil.move(image_path_0, os.path.join(image_path, subset, file))
    shutil.move(label_path_0, os.path.join(label_path, subset, label_file))

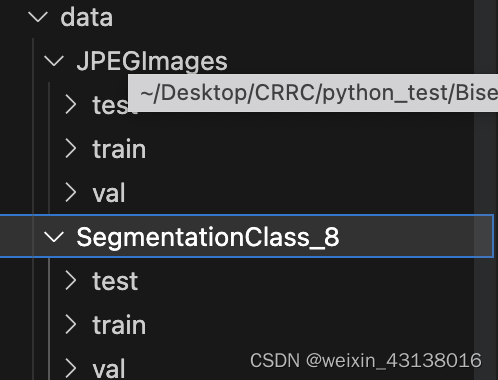
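The split script draws its samples with `random.sample` and no fixed seed, so every run produces a different split. If a repeatable split is wanted, one possible variant is sketched below; the function name `split_names` and its `seed` parameter are hypothetical and only illustrate the idea with the same 70/20/10 ratios.

```python
import random


def split_names(names, train_ratio=0.7, val_ratio=0.2, seed=0):
    """Shuffle a sorted copy of `names` with a fixed seed and cut it into three lists."""
    shuffled = sorted(names)  # sort first so the result does not depend on listing order
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_ratio)
    n_val = int(len(shuffled) * val_ratio)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


files = ["img_{:03d}.png".format(i) for i in range(10)]
train, val, test = split_names(files)
print(len(train), len(val), len(test))  # 7 2 1
```

Calling `split_names` again with the same file names and seed returns the same three lists, which makes it easier to compare training runs.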

Put the resulting JPEGImages and SegmentationClass_8 folders into a newly created data folder.

Then run train_val_test_txt.py to generate the txt files for train, val and test.

####################
## train_val_test_txt.py ##
####################
import os

# For each subset, write one "image_path,label_path" line per image
for subset in ['train', 'test', 'val']:
    input_dir = os.listdir('data/JPEGImages/' + subset)
    with open('data/' + subset + '.txt', "w", encoding='utf-8') as f:
        for jpg_name in input_dir:
            name = jpg_name.split('.')[0]
            jpg_name = 'JPEGImages/' + subset + '/' + jpg_name
            png_name = 'SegmentationClass_8/' + subset + '/' + name + '.png'
            txt = jpg_name + ',' + png_name
            f.write(txt)
            f.write('\n')
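Since each line of the generated txt files is an "image path,label path" pair relative to the data folder, a quick check that every listed file really exists can save a failed training start. The sketch below is one way to do that; `find_missing` is a hypothetical helper, and the demo builds a throwaway folder instead of touching real data.

```python
import os
import tempfile


def find_missing(im_root, ann_path):
    """Return every relative path in the annotation file that is not a file under im_root."""
    missing = []
    with open(ann_path, "r", encoding="utf-8") as f:
        for line in f.read().splitlines():
            if not line:
                continue
            for rel_path in line.split(","):
                if not os.path.isfile(os.path.join(im_root, rel_path)):
                    missing.append(rel_path)
    return missing


# Demo with a throwaway directory that mimics the data/ layout
root = tempfile.mkdtemp()
os.makedirs(os.path.join(root, "JPEGImages", "train"))
os.makedirs(os.path.join(root, "SegmentationClass_8", "train"))
open(os.path.join(root, "JPEGImages", "train", "a.png"), "w").close()
# The mask for a.png is deliberately left out

ann = os.path.join(root, "train.txt")
with open(ann, "w", encoding="utf-8") as f:
    f.write("JPEGImages/train/a.png,SegmentationClass_8/train/a.png\n")

missing = find_missing(root, ann)
print(missing)  # ['SegmentationClass_8/train/a.png']
```

On the real dataset the call would be something like `find_missing('data', 'data/train.txt')`, and an empty list means every pair is present.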

At this point our data in Cityscapes format is ready, and we can download the code and start training.

Code download:

https://github.com/CoinCheung/BiSeNet

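After downloading, put the data we prepared (the data folder above) in the root directory of the project. A few pieces of code then need to be changed.

The first is bisenetv2_city.py under the configs folder (I train with bisenetv2, so that is the file I edit). Here is my modified version, for reference only.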

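## bisenetv2
cfg = dict(
    model_type='bisenetv2',
    n_cats=2,  # number of classes; I only have background and lane, so 2. Set it to match your own data
    num_aux_heads=4,
    lr_start=5e-3,
    weight_decay=5e-4,
    warmup_iters=1000,  # handed to the LR scheduler as warmup_iter in the training script; I did not look into it further
    max_iter=300,  # number of training epochs (the outer loop of the modified training script)
    dataset='CityScapes',
    im_root='data',  # root folder of the data
    train_im_anns='data/train.txt',  # training list
    val_im_anns='data/val.txt',  # validation list
    scales=[0.25, 2.],
    cropsize=[512, 1024],
    eval_crop=[1024, 1024],
    eval_scales=[0.5, 0.75, 1.0, 1.25, 1.5, 1.75],
    ims_per_gpu=24,  # appears to be the batch size; set it according to your GPU memory, smaller if memory is limited
    eval_ims_per_gpu=2,
    use_fp16=True,
    use_sync_bn=False,
    respth='./res',  # where the trained weights and logs are saved
)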
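The warmup_iters and max_iter values end up in a scheduler that the training script creates with power=0.9, warmup_ratio=0.1 and warmup='exp'. The sketch below shows the general shape such a schedule usually has: exponential warm-up followed by polynomial decay. It is written from those parameter names only and is an assumption about the schedule's shape, not a copy of the repository's implementation; the function name `lr_ratio` is hypothetical.

```python
def lr_ratio(step, max_iter, warmup_iter, warmup_ratio=0.1, power=0.9):
    """Multiplier applied to the base learning rate at a given scheduler step."""
    if step < warmup_iter:
        # Exponential warm-up: rises from warmup_ratio towards 1
        alpha = step / warmup_iter
        return warmup_ratio ** (1.0 - alpha)
    # Polynomial decay over the remaining steps: falls from 1 to 0
    alpha = (step - warmup_iter) / (max_iter - warmup_iter)
    return (1.0 - alpha) ** power


base_lr = 5e-3
for step in (0, 500, 1000, 5000, 10000):
    print(step, base_lr * lr_ratio(step, max_iter=10000, warmup_iter=1000))

# If the scheduler is stepped only 300 times while warmup_iter is 1000,
# the multiplier stays below 1 for the whole run:
print(lr_ratio(299, max_iter=300, warmup_iter=1000))
```

Under this assumed shape, a run that steps the scheduler fewer times than warmup_iters never leaves the warm-up phase, so it may be worth lowering warmup_iters when max_iter is used as an epoch count.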

Now we can train. The train_amp.py script provided by the original author kept raising errors for me, so I modified it myself; for reference only.

#!/usr/bin/python
# -*- encoding: utf-8 -*-

import sys
sys.path.insert(0, '.')
import os
import os.path as osp
import random
import logging
import time
import json
import argparse
import numpy as np
from tabulate import tabulate

import torch
import torch.nn as nn
import torch.distributed as dist
from torch.utils.data import DataLoader
import torch.cuda.amp as amp

from lib.models import model_factory
from configs import set_cfg_from_file
from lib.data import get_data_loader
from evaluate import eval_model
from lib.ohem_ce_loss import OhemCELoss
from lib.lr_scheduler import WarmupPolyLrScheduler
from lib.meters import TimeMeter, AvgMeter
from lib.logger import setup_logger, print_log_msg

## fix all random seeds
# torch.manual_seed(123)
# torch.cuda.manual_seed(123)
# np.random.seed(123)
# random.seed(123)
# torch.backends.cudnn.deterministic = True
# torch.backends.cudnn.benchmark = True
# torch.multiprocessing.set_sharing_strategy('file_system')

def parse_args():
    parse = argparse.ArgumentParser()
    parse.add_argument('--config', dest='config', type=str,
            default='configs/bisenetv2_city.py',)
    parse.add_argument('--finetune-from', type=str, default=None,)
    return parse.parse_args()


args = parse_args()
cfg = set_cfg_from_file(args.config)


def set_model(lb_ignore=255):
    logger = logging.getLogger()
    net = model_factory[cfg.model_type](cfg.n_cats)
    if args.finetune_from is not None:
        logger.info(f'load pretrained weights from {args.finetune_from}')
        msg = net.load_state_dict(torch.load(args.finetune_from,
            map_location='cpu'), strict=False)
        logger.info('\tmissing keys: ' + json.dumps(msg.missing_keys))
        logger.info('\tunexpected keys: ' + json.dumps(msg.unexpected_keys))
    if cfg.use_sync_bn: net = nn.SyncBatchNorm.convert_sync_batchnorm(net)
    # net.cuda()
    net.train()
    criteria_pre = OhemCELoss(0.7, lb_ignore)
    criteria_aux = [OhemCELoss(0.7, lb_ignore)
            for _ in range(cfg.num_aux_heads)]
    return net, criteria_pre, criteria_aux

def set_optimizer(model):
    if hasattr(model, 'get_params'):
        wd_params, nowd_params, lr_mul_wd_params, lr_mul_nowd_params = model.get_params()
        # wd_val = cfg.weight_decay
        wd_val = 0
        params_list = [
            {'params': wd_params, },
            {'params': nowd_params, 'weight_decay': wd_val},
            {'params': lr_mul_wd_params, 'lr': cfg.lr_start * 10},
            {'params': lr_mul_nowd_params, 'weight_decay': wd_val, 'lr': cfg.lr_start * 10},
        ]
    else:
        wd_params, non_wd_params = [], []
        for name, param in model.named_parameters():
            if param.dim() == 1:
                non_wd_params.append(param)
            elif param.dim() == 2 or param.dim() == 4:
                wd_params.append(param)
        params_list = [
            {'params': wd_params, },
            {'params': non_wd_params, 'weight_decay': 0},
        ]
    optim = torch.optim.SGD(
        params_list,
        lr=cfg.lr_start,
        momentum=0.9,
        weight_decay=cfg.weight_decay,
    )
    return optim

def set_model_dist(net):
    # local_rank = int(os.environ['LOCAL_RANK'])
    local_rank = 0
    # net = nn.parallel.DistributedDataParallel(
    #     net,
    #     device_ids=[local_rank, ],
    #     # find_unused_parameters=True,
    #     output_device=local_rank
    # )
    net = torch.nn.DataParallel(net)
    return net


def set_meters():
    time_meter = TimeMeter(cfg.max_iter)
    loss_meter = AvgMeter('loss')
    loss_pre_meter = AvgMeter('loss_prem')
    loss_aux_meters = [AvgMeter('loss_aux{}'.format(i))
            for i in range(cfg.num_aux_heads)]
    return time_meter, loss_meter, loss_pre_meter, loss_aux_meters

def train():
    logger = logging.getLogger()

    ## dataset
    dl = get_data_loader(cfg, mode='train')

    ## model
    net, criteria_pre, criteria_aux = set_model(dl.dataset.lb_ignore)

    ## optimizer
    optim = set_optimizer(net)

    ## mixed precision training
    scaler = amp.GradScaler()

    ## ddp training
    net = set_model_dist(net)

    ## meters
    time_meter, loss_meter, loss_pre_meter, loss_aux_meters = set_meters()

    ## lr scheduler
    lr_schdr = WarmupPolyLrScheduler(optim, power=0.9,
        max_iter=cfg.max_iter, warmup_iter=cfg.warmup_iters,
        warmup_ratio=0.1, warmup='exp', last_epoch=-1,)

    ## train loop: cfg.max_iter is used as the number of epochs
    for epoch in range(cfg.max_iter):
        for it, (im, lb) in enumerate(dl):
            im = im.cuda()
            lb = lb.cuda()

            lb = torch.squeeze(lb, 1)

            optim.zero_grad()
            with amp.autocast(enabled=cfg.use_fp16):
                logits, *logits_aux = net(im)
                loss_pre = criteria_pre(logits, lb)
                loss_aux = [crit(lgt, lb) for crit, lgt in zip(criteria_aux, logits_aux)]
                loss = loss_pre + sum(loss_aux)
            scaler.scale(loss).backward()
            scaler.step(optim)
            scaler.update()
            torch.cuda.synchronize()

            time_meter.update()
            loss_meter.update(loss.item())
            loss_pre_meter.update(loss_pre.item())
            _ = [mter.update(lss.item()) for mter, lss in zip(loss_aux_meters, loss_aux)]
            print(it, '/', len(dl), '======================', 'epoch', epoch + 1, '/', cfg.max_iter)

        ## print training log message every 10 epochs
        if (epoch + 1) % 10 == 0:
            lr = lr_schdr.get_lr()
            lr = sum(lr) / len(lr)
            print_log_msg(
                epoch, cfg.max_iter, lr, time_meter, loss_meter,
                loss_pre_meter, loss_aux_meters)

        ## step the scheduler and overwrite the checkpoint once per epoch
        save_pth = osp.join(cfg.respth, 'model_final.pth')
        logger.info('\nsave models to {}'.format(save_pth))
        lr_schdr.step()
        state = net.module.state_dict()
        # if dist.get_rank() == 0: torch.save(state, save_pth)
        torch.save(state, save_pth)

    ## dump the final model and evaluate the result
    logger.info('\nevaluating the final model')
    torch.cuda.empty_cache()
    iou_heads, iou_content, f1_heads, f1_content = eval_model(cfg, net.module)
    logger.info('\neval results of f1 score metric:')
    logger.info('\n' + tabulate(f1_content, headers=f1_heads, tablefmt='orgtbl'))
    logger.info('\neval results of miou metric:')
    logger.info('\n' + tabulate(iou_content, headers=iou_heads, tablefmt='orgtbl'))

    return

def main():
    local_rank = 0
    # local_rank = int(os.environ['LOCAL_RANK'])
    torch.cuda.set_device(local_rank)
    # dist.init_process_group(backend='nccl')
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    if not osp.exists(cfg.respth): os.makedirs(cfg.respth)
    setup_logger(f'{cfg.model_type}-{cfg.dataset.lower()}-train', cfg.respth)
    train()


if __name__ == "__main__":
    main()

When training finishes, the res folder holds our weight file and logs.

We can then run inference with the trained weights.

import sys
sys.path.insert(0, '.')
import argparse
import math
import torch
import torch.nn as nn
import torch.nn.functional as F
from PIL import Image
import numpy as np
import cv2
import copy
import os
import tqdm
import lib.data.transform_cv2 as T
from lib.models import model_factory
from configs import set_cfg_from_file
import time

# uncomment the following line if you want to reduce cpu usage, see issue #231
# torch.set_num_threads(4)

torch.set_grad_enabled(False)
np.random.seed(123)

def get_FPS(test_interval):
    im = cv2.imread(args.img_path)[:, :, ::-1]
    old_img = Image.open(args.img_path)
    im_tensor = to_tensor(dict(im=im, lb=None))['im'].unsqueeze(0).cuda()

    # shape divisor
    org_size = im_tensor.size()[2:]
    new_size = [math.ceil(el / 32) * 32 for el in im_tensor.size()[2:]]

    # inference
    im_inter = F.interpolate(im_tensor, size=new_size, align_corners=False, mode='bilinear')
    torch.cuda.synchronize()  # wait for pending GPU work before starting the timer
    t1 = time.time()
    for _ in range(test_interval):
        out = net(im_inter)[0]
    torch.cuda.synchronize()  # wait for the last forward pass before stopping the timer
    t2 = time.time()
    out = F.interpolate(out, size=org_size, align_corners=False, mode='bilinear')
    out = out.argmax(dim=1)

    # visualize
    out = out.squeeze().detach().cpu().numpy()
    pred = palette[out]
    cv2.imwrite('./res.jpg', pred)
    pred = Image.open('./res.jpg')
    image = Image.blend(old_img, pred, 0.3)
    image.save('img.jpg')

    tact_time = (t2 - t1) / test_interval
    return tact_time

# args
parse = argparse.ArgumentParser()
parse.add_argument('--config', dest='config', type=str, default='configs/bisenetv2_city.py',)
parse.add_argument('--weight-path', type=str, default='./res/model_final.pth')  # weights produced by training
parse.add_argument('--img-path', dest='img_path', type=str, default='data/jpg/train/image_0326.jpg')  # path of the image to run inference on
parse.add_argument('--img-dir', dest='img_dir', type=str, default='data/jpg/test')  # folder of images to run inference on
args = parse.parse_args()
cfg = set_cfg_from_file(args.config)

palette = np.random.randint(0, 256, (256, 3), dtype=np.uint8)

# define model
net = model_factory[cfg.model_type](cfg.n_cats, aux_mode='eval')
net.load_state_dict(torch.load(args.weight_path, map_location='cpu'), strict=False)
net.eval()
net.cuda()

# prepare data
to_tensor = T.ToTensor(
    mean=(0.3257, 0.3690, 0.3223),  # city, rgb
    std=(0.2112, 0.2148, 0.2115),
)

# img_path: run inference on a single image
# img_dir: run inference on every image in a folder
# fps: measure the FPS
select = 'img_path'

if select == 'img_path':
    im = cv2.imread(args.img_path)[:, :, ::-1]
    # img = Image.open(args.img_path)
    old_img = Image.open(args.img_path)
    im = to_tensor(dict(im=im, lb=None))['im'].unsqueeze(0).cuda()

    # shape divisor
    org_size = im.size()[2:]
    new_size = [math.ceil(el / 32) * 32 for el in im.size()[2:]]

    # inference
    im = F.interpolate(im, size=new_size, align_corners=False, mode='bilinear')
    out = net(im)[0]
    out = F.interpolate(out, size=org_size, align_corners=False, mode='bilinear')
    out = out.argmax(dim=1)

    # visualize
    out = out.squeeze().detach().cpu().numpy()
    pred = palette[out]
    cv2.imwrite('./res.jpg', pred)
    pred = Image.open('./res.jpg')
    image = Image.blend(old_img, pred, 0.3)
    image.save('img.jpg')

elif select == 'img_dir':
    os.makedirs('res/img_dir', exist_ok=True)  # make sure the output folder exists
    img_names = os.listdir(args.img_dir)
    for img_name in img_names:
        if img_name.lower().endswith(('.bmp', '.dib', '.png', '.jpg', '.jpeg', '.pbm', '.pgm', '.ppm', '.tif', '.tiff')):
            image_path = os.path.join(args.img_dir, img_name)
            old_img = Image.open(image_path)
            im = cv2.imread(image_path)[:, :, ::-1]
            im = to_tensor(dict(im=im, lb=None))['im'].unsqueeze(0).cuda()

            # shape divisor
            org_size = im.size()[2:]
            new_size = [math.ceil(el / 32) * 32 for el in im.size()[2:]]

            im = F.interpolate(im, size=new_size, align_corners=False, mode='bilinear')
            out = net(im)[0]
            out = F.interpolate(out, size=org_size, align_corners=False, mode='bilinear')
            out = out.argmax(dim=1)

            # visualize
            out = out.squeeze().detach().cpu().numpy()
            pred = palette[out]
            cv2.imwrite('./res.jpg', pred)
            pred = Image.open('./res.jpg')
            image = Image.blend(old_img, pred, 0.4)
            image.save(os.path.join('res/img_dir', img_name))

elif select == 'fps':
    test_interval = 100
    tact_time = get_FPS(test_interval=test_interval)
    print(str(tact_time) + ' seconds, ' + str(1 / tact_time) + 'FPS, @batch_size 1')
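Two small steps in the inference script are easy to miss: the input size is rounded up to a multiple of 32 (the "shape divisor" comment), and the predicted class map is turned into colours by indexing a palette array. The sketch below reproduces both on toy values. The fixed two-colour palette is an illustrative assumption that replaces the script's random palette, and the image size is made up.

```python
import math

import numpy as np

# Round a (height, width) pair up to the next multiple of 32
org_size = (540, 960)
new_size = [math.ceil(el / 32) * 32 for el in org_size]
print(new_size)  # [544, 960]

# Fixed palette instead of a random one: row i is the colour of class i
palette = np.array([[0, 0, 0],      # class 0: background
                    [0, 255, 0]],   # class 1: lane
                   dtype=np.uint8)

# A tiny predicted class map, like the result of argmax over the class dimension
class_map = np.array([[0, 1, 1],
                      [0, 0, 1]])

color = palette[class_map]  # one colour per pixel
print(color.shape)           # (2, 3, 3)
print(color[0, 1].tolist())  # [0, 255, 0]
```

With a fixed palette the overlay colours stay the same from run to run, which makes results from different checkpoints easier to compare by eye.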

This post is only a record for myself; if you spot any mistakes, please point them out.
