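Introduction: this post uses a Kubernetes PodSecurityPolicy (PSP) to restrict what the nvidia-plugin device plugin is allowed to do. I have only just started working with PSP, so these are working notes; I will refine them after testing in production.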

Enable PSP on the apiserver (the static pod is updated automatically once its manifest is saved)

# PSP (Pod Security Policy) is not enabled by default. Adding the PodSecurityPolicy keyword to the --enable-admission-plugins list turns on PSP admission control.

# In /etc/kubernetes/manifests/kube-apiserver.yaml, add PodSecurityPolicy after NodeRestriction

- --enable-admission-plugins=NodeRestriction,PodSecurityPolicy
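For orientation, here is a trimmed sketch of where that flag sits in the static pod manifest. It assumes a kubeadm-style layout, which is not confirmed by these notes; all other flags are omitted and should be left unchanged.

```yaml
# /etc/kubernetes/manifests/kube-apiserver.yaml (trimmed; kubeadm-style layout is an assumption)
apiVersion: v1
kind: Pod
metadata:
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - name: kube-apiserver
    command:
    - kube-apiserver
    # ... other flags stay as they are ...
    - --enable-admission-plugins=NodeRestriction,PodSecurityPolicy
```

The kubelet watches this directory, so saving the file is enough to restart the apiserver with the new plugin list.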

Test by creating a container directly

lung.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: lung
  labels:
    k8s-app: lung
    k8s-med-type: biz-internel
spec:
  strategy:
    type: Recreate
  replicas: 1
  selector:
    matchLabels:
      k8s-app: lung
  template:
    metadata:
      labels:
        k8s-app: lung
    spec:
      # runtimeClassName: nvidia
      # hostPID: true
      containers:
      - name: lung
        image: nvidia/cuda:11.3.0-base-ubi8
        command: ["sh","-c","tail -f /dev/null "]
        #command: ["sh","-c","for i in `ls /srv/conf-drwise220531`;do rm -rf /root/lung/$i/conf && ln -s /srv/conf-drwise220531/$i/conf /root/lung/$i/ ;done && rm -rf /root/lung/Release/path.conf /root/lung/path.conf && ln -s /srv/conf-drwise220531/Release/path.conf /root/lung/Release/ && ln -s /root/lung/Release/path.conf /root/lung/ && sh /root/aiclassifier/startup.sh && sh /root/lung/startup.sh "]
        # securityContext:
        #   privileged: true
        env:
        - name: NVIDIA_DRIVER_CAPABILITIES
          value: compute,utility,video,graphics,display
        - name: NVIDIA_VISIBLE_DEVICES
          value: all
        volumeMounts:
        - mountPath: /dev/shm
          name: dshm
      volumes:
      - name: dshm
        emptyDir:
          medium: Memory
          sizeLimit: 1Gi
      #deepwise-operator
      # serviceAccountName: deepwise-operator

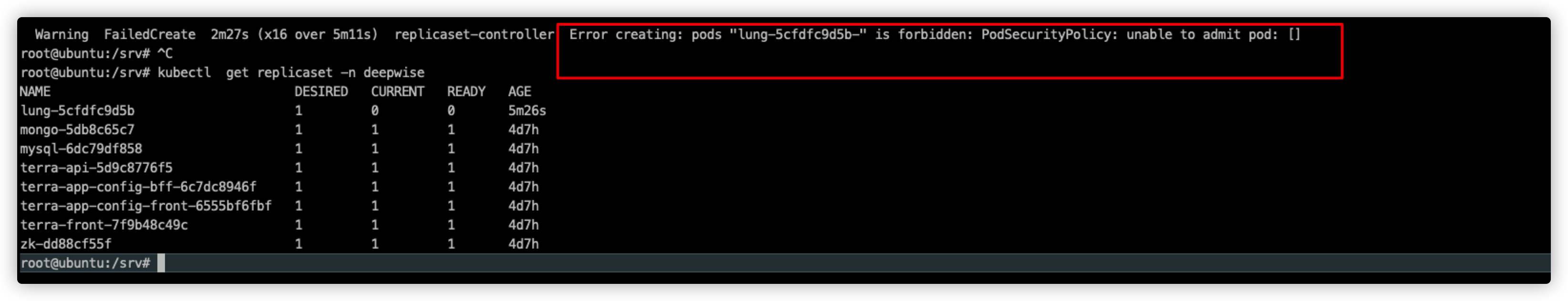

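# Create the Deployment as a test; you will find that it runs into a PSP problem

# Note: once the PodSecurityPolicy admission plugin is enabled, creating pods fails (including pods that controllers recreate during rescheduling) as long as no security policy is in place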

kubectl apply -f lung.yaml -n deepwise

Create the corresponding restriction policies

4.nvidia-plugin.yaml

# Restrictions for the GPU driver (device plugin)

apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp-nvidia
spec:
  privileged: false
  fsGroup:
    rule: RunAsAny
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  volumes:
  - "*"
  hostPID: false
  hostIPC: false
  hostNetwork: false
---
apiVersion: v1
kind: ServiceAccount
metadata:
  namespace: kube-system
  name: nvidiaoperator
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: psp-permissive-nvidia
  namespace: kube-system
rules:
- apiGroups:
  - extensions
  resources:
  - podsecuritypolicies
  resourceNames:
  - psp-nvidia
  verbs:
  - use
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: psp-permissive-nvidia
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: psp-permissive-nvidia
subjects:
- kind: ServiceAccount
  name: nvidiaoperator
  namespace: kube-system

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nvidia-device-plugin-daemonset
  namespace: kube-system
spec:
  selector:
    matchLabels:
      name: nvidia-device-plugin-ds
  updateStrategy:
    type: RollingUpdate
  template:
    metadata:
      # This annotation is deprecated. Kept here for backward compatibility
      # See https://kubernetes.io/docs/tasks/administer-cluster/guaranteed-scheduling-critical-addon-pods/
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ""
      labels:
        name: nvidia-device-plugin-ds
    spec:
      runtimeClassName: nvidia
      tolerations:
      # This toleration is deprecated. Kept here for backward compatibility
      # See https://kubernetes.io/docs/tasks/administer-cluster/guaranteed-scheduling-critical-addon-pods/
      - key: CriticalAddonsOnly
        operator: Exists
      - key: nvidia.com/gpu
        operator: Exists
        effect: NoSchedule
      # Mark this pod as a critical add-on; when enabled, the critical add-on
      # scheduler reserves resources for critical add-on pods so that they can
      # be rescheduled after a failure.
      # See https://kubernetes.io/docs/tasks/administer-cluster/guaranteed-scheduling-critical-addon-pods/
      priorityClassName: "system-node-critical"
      containers:
      - image: harbor.deepwise.com/terra-k8s/k8s-device-plugin:v0.10.0
        name: nvidia-device-plugin-ctr
        args: ["--fail-on-init-error=false"]
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            drop: ["ALL"]
        volumeMounts:
        - name: device-plugin
          mountPath: /var/lib/kubelet/device-plugins
      volumes:
      - name: device-plugin
        hostPath:
          path: /var/lib/kubelet/device-plugins
      serviceAccountName: nvidiaoperator
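The psp-nvidia policy allows every volume type ("*"), while the DaemonSet only mounts one hostPath. As an untested sketch of how the policy could be narrowed, the fragment below limits volumes to what the plugin pod appears to need and pins the host path. The name psp-nvidia-tight is hypothetical, and the assumption that secret and projected volumes are enough for the service account token has not been checked on this cluster.

```yaml
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp-nvidia-tight            # hypothetical name, not part of the original setup
spec:
  privileged: false
  allowPrivilegeEscalation: false   # matches the container securityContext in the DaemonSet
  requiredDropCapabilities:
  - ALL                             # the DaemonSet already drops ALL
  fsGroup:
    rule: RunAsAny
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  volumes:
  - hostPath
  - secret       # assumption: needed for the service account token on older clusters
  - projected    # assumption: needed for the bound service account token on newer clusters
  allowedHostPaths:
  - pathPrefix: /var/lib/kubelet/device-plugins
  hostPID: false
  hostIPC: false
  hostNetwork: false
```

To try it, the Role's resourceNames would also have to list psp-nvidia-tight; otherwise the nvidiaoperator account cannot use the policy.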

5.nvida-psp.yaml

apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp-nvidia
spec:
  privileged: false
  fsGroup:
    rule: RunAsAny
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  volumes:
  - "*"
  hostPID: false
  hostIPC: false
  hostNetwork: false
---
apiVersion: v1
kind: ServiceAccount
metadata:
  namespace: kube-system
  name: nvidiaoperator
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: psp-permissive-nvidia
  namespace: kube-system
rules:
- apiGroups:
  - extensions
  resources:
  - podsecuritypolicies
  resourceNames:
  - psp-nvidia
  verbs:
  - use
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: psp-permissive-nvidia
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: psp-permissive-nvidia
subjects:
- kind: ServiceAccount
  name: nvidiaoperator
  namespace: kube-system
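The Role above grants the use verb through the extensions API group. Because the policy itself is created as policy/v1beta1, the upstream PSP documentation writes the same rule against the policy group. A sketch of that variant follows; I have not verified it on this cluster, and listing both groups is my own cautious choice rather than something the original setup requires.

```yaml
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: psp-permissive-nvidia
  namespace: kube-system
rules:
- apiGroups:
  - policy        # group used in the upstream PSP examples
  - extensions    # kept from the original Role
  resources:
  - podsecuritypolicies
  resourceNames:
  - psp-nvidia
  verbs:
  - use
```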

6.deepwise-psp.yaml

# Restrictions for user workloads

apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp-deepwise
spec:
  privileged: false
  fsGroup:
    rule: RunAsAny
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  volumes:
  - "*"
  hostPID: false
  hostIPC: false
  hostNetwork: false
---
apiVersion: v1
kind: ServiceAccount
metadata:
  namespace: deepwise
  name: deepwise-operator
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: psp-permissive-deepwise
  namespace: deepwise
rules:
- apiGroups:
  - extensions
  resources:
  - podsecuritypolicies
  resourceNames:
  - psp-deepwise
  verbs:
  - use
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: psp-permissive-deepwise
  namespace: deepwise
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: psp-permissive-deepwise
subjects:
- kind: ServiceAccount
  name: deepwise-operator
  namespace: deepwise
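This binding grants the policy only to the deepwise-operator service account, so every workload in the namespace has to set serviceAccountName explicitly. If that turns out to be inconvenient, one possible alternative is to bind the same Role to all service accounts in the namespace through their built-in group. This is a sketch only; the binding name psp-permissive-deepwise-all is hypothetical.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: psp-permissive-deepwise-all   # hypothetical name
  namespace: deepwise
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: psp-permissive-deepwise
subjects:
# Built-in group that contains every service account in the deepwise namespace
- kind: Group
  apiGroup: rbac.authorization.k8s.io
  name: system:serviceaccounts:deepwise
```

The trade-off is that the policy then also applies to pods running under the namespace's default service account, which may or may not be what you want.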

7.runtimeclass.yaml

apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: "nvidia"
handler: "nvidia"

If the container runtime is Docker, change handler to docker. With containerd no change is needed. See https://opni.io/setup/gpu/
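Following that note, the Docker variant would presumably differ only in the handler field. A sketch, not tested here:

```yaml
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: "nvidia"       # workloads still reference runtimeClassName: nvidia
handler: "docker"      # changed from "nvidia" for a Docker runtime, per the note above
```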

Recreate the lung Deployment

# Add runtimeClassName: nvidia

# Add serviceAccountName: deepwise-operator

apiVersion: apps/v1
kind: Deployment
metadata:
  name: lung
  labels:
    k8s-app: lung
    k8s-med-type: biz-internel
spec:
  strategy:
    type: Recreate
  replicas: 1
  selector:
    matchLabels:
      k8s-app: lung
  template:
    metadata:
      labels:
        k8s-app: lung
    spec:
      runtimeClassName: nvidia
      # hostPID: true
      containers:
      - name: lung
        image: nvidia/cuda:11.3.0-base-ubi8
        command: ["sh","-c","tail -f /dev/null "]
        #command: ["sh","-c","for i in `ls /srv/conf-drwise220531`;do rm -rf /root/lung/$i/conf && ln -s /srv/conf-drwise220531/$i/conf /root/lung/$i/ ;done && rm -rf /root/lung/Release/path.conf /root/lung/path.conf && ln -s /srv/conf-drwise220531/Release/path.conf /root/lung/Release/ && ln -s /root/lung/Release/path.conf /root/lung/ && sh /root/aiclassifier/startup.sh && sh /root/lung/startup.sh "]
        # securityContext:
        #   privileged: true
        env:
        - name: NVIDIA_DRIVER_CAPABILITIES
          value: compute,utility,video,graphics,display
        - name: NVIDIA_VISIBLE_DEVICES
          value: all
        volumeMounts:
        - mountPath: /dev/shm
          name: dshm
      volumes:
      - name: dshm
        emptyDir:
          medium: Memory
          sizeLimit: 1Gi
      #deepwise-operator
      serviceAccountName: deepwise-operator

References

https://kubernetes.io/zh-cn/docs/reference/access-authn-authz/admission-controllers/

https://blog.csdn.net/tushanpeipei/article/details/121940757

https://blog.csdn.net/weixin_45081220/article/details/125407608