References:

https://www.cnblogs.com/surpassal/archive/2012/12/19/zed_webcam_lab1.html

https://www.cnblogs.com/liusiluandzhangkun/p/8690604.html

https://blog.csdn.net/wr132/article/details/54428144

This assumes the basic environment is already installed: V4L2, Qt, OpenCV and the other required dependencies.

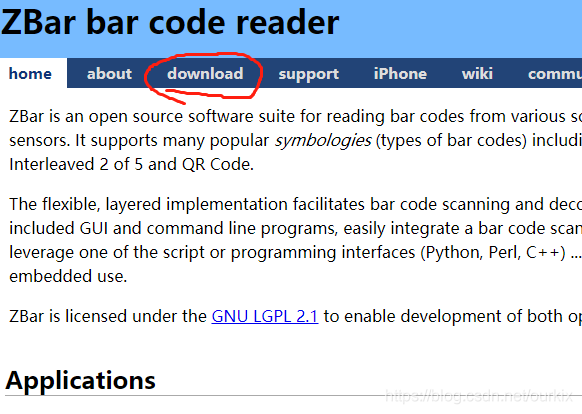

1. Building and installing zbar

Open the zbar website:

http://zbar.sourceforge.net/

Click Download,

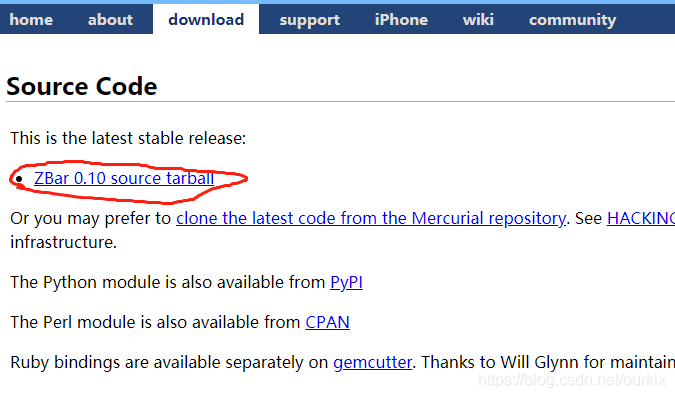

and download the source code.

The downloaded file is zbar-0.10.tar.bz2.

Extract it anywhere you like (the -j option is for bzip2-compressed archives):

tar -xjvf zbar-0.10.tar.bz2

Then change into the zbar-0.10 directory:

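cd zbar-0.10

Running ./configure directly fails with an error saying it cannot determine the build platform, so specify the platform explicitly. The other options disable components that are not needed, which speeds up the build: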

./configure --build=arm --without-gtk --without-qt --without-imagemagick --without-python --disable-video

You can also choose the installation directory with --prefix. Here the default (/usr/local) is used.

Once configuration finishes, build:

make -j4

sudo make install

That completes the installation.

2. Project setup

First, set up the Qt project file zed_YUV_camera_prj.pro:

######################################################################

# Automatically generated by qmake (3.1) Mon Jun 22 15:37:04 2020

######################################################################

QT += core gui

greaterThan(QT_MAJOR_VERSION, 4): QT += widgets

TEMPLATE = app

TARGET = zed_YUV_camera_prj

INCLUDEPATH += .

# The following define makes your compiler warn you if you use any

# feature of Qt which has been marked as deprecated (the exact warnings

# depend on your compiler). Please consult the documentation of the

# deprecated API in order to know how to port your code away from it.

DEFINES += QT_DEPRECATED_WARNINGS

# You can also make your code fail to compile if you use deprecated APIs.

# In order to do so, uncomment the following line.

# You can also select to disable deprecated APIs only up to a certain version of Qt.

#DEFINES += QT_DISABLE_DEPRECATED_BEFORE=0x060000 # disables all the APIs deprecated before Qt 6.0.0

# Input

HEADERS += common.h videodevice.h widget.h ui_widget.h

SOURCES += main.cpp videodevice.cpp widget.cpp

INCLUDEPATH += ./ /usr/local/include /usr/local/include/opencv /usr/local/include/opencv2

LIBS += /usr/local/lib/libopencv_highgui.so

LIBS += /usr/local/lib/libopencv_core.so

LIBS += /usr/local/lib/libopencv_imgproc.so

LIBS += /usr/local/lib/libopencv_imgcodecs.so

LIBS += /usr/local/lib/libopencv_shape.so

LIBS += /usr/lib/aarch64-linux-gnu/libzbar.so

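This lists the header directories and the shared-library paths. Adjust them to match your own system. For example, building zbar from source with the default prefix as above puts libzbar.so under /usr/local/lib, while /usr/lib/aarch64-linux-gnu/libzbar.so is typically where an aarch64 distribution package installs it.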

Next comes the header common.h, which holds some macro definitions:

#ifndef COMMON_H

#define COMMON_H

// definitions

#define BYTE unsigned char

#define SBYTE signed char

#define SWORD signed short int

#define WORD unsigned short int

#define DWORD unsigned long int

#define SDWORD signed long int

#define IMG_WIDTH 640

#define IMG_HEIGTH 480

#endif // COMMON_H
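A note on these macros: on the 64-bit Jetson (aarch64 Linux uses the LP64 data model), `unsigned long int` is 8 bytes. As a result, `DWORD` and `SDWORD` here are 64-bit, not the 32-bit types their Windows-style names suggest. If you need exact sizes, for example when writing file headers, the sketch below uses `<cstdint>` fixed-width types. It is a hypothetical replacement for the macro block, not what the project uses. It also cannot coexist with the original `#define`s, because the macros would rewrite the alias names.

```cpp
#include <cstdint>

// Hypothetical replacement for the BYTE/WORD/DWORD macros in common.h,
// using fixed-width types whose sizes do not depend on the data model.
// Do not mix with the original #defines: the macros would rewrite these names.
using BYTE   = std::uint8_t;   // 8-bit unsigned
using SBYTE  = std::int8_t;    // 8-bit signed
using WORD   = std::uint16_t;  // 16-bit unsigned
using SWORD  = std::int16_t;   // 16-bit signed
using DWORD  = std::uint32_t;  // 32-bit unsigned, even where unsigned long is 64-bit
using SDWORD = std::int32_t;   // 32-bit signed

// Fail the build instead of silently producing wrong file layouts.
static_assert(sizeof(WORD) == 2, "WORD must be 2 bytes");
static_assert(sizeof(DWORD) == 4, "DWORD must be 4 bytes");
```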

main.cpp:

#include <QApplication>

#include "widget.h"

int main(int argc, char *argv[])

{

QApplication a(argc, argv);

Widget w;

w.show();

return a.exec();

}

The V4L2 camera class, header videodevice.h:

#ifndef VIDEODEVICE_H

#define VIDEODEVICE_H

//#include <errno.h>

#include <QString>

#include <QObject>

#include <QTextCodec>

#define CLEAR(x) memset(&(x), 0, sizeof(x))

class VideoDevice : public QObject

{

Q_OBJECT

public:

VideoDevice(QString dev_name);

~VideoDevice();

int get_frame(unsigned char ** yuv_buffer_pointer, unsigned int * len);

int unget_frame();

void setExposureAbs(int exposureAbs);// in units of 100 us

void getExposureAbs();

void setExposureLevel(int exposurelevel);

void getExposureLevel();

void setExposureMode(int exposureMode);// 1: manual exposure mode, 0: auto exposure mode

void getExposureMode();

int stop_capturing();

int start_capturing();

private:

int open_device();

int init_device();

int init_mmap();

int uninit_device();

int close_device();

struct buffer

{

void * start;

unsigned int length;

};

QString dev_name;

int fd;//video0 file

buffer* buffers;

unsigned int n_buffers;

int index;

signals:

//void display_error(QString);

};

#endif // VIDEODEVICE_H

The V4L2 camera class implementation, videodevice.cpp:

#include "videodevice.h"

#include "common.h"

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <fcntl.h>

#include <sys/ioctl.h>

#include <sys/mman.h>

#include <asm/types.h>

#include <linux/videodev2.h>

#include <unistd.h>

#define FILE_VIDEO "/dev/video0"

#define TRUE 1

#define FALSE 0

VideoDevice::VideoDevice(QString dev_name)

{

this->dev_name = dev_name;

this->fd = -1;

this->buffers = NULL;

this->n_buffers = 0;

this->index = -1;

if(open_device() == FALSE)

{

return; // nothing was opened, so there is nothing to clean up

}

if(init_device() == FALSE)

{

close_device();

return; // do not try to stream from a device that failed to initialize

}

if(start_capturing() == FALSE)

{

printf("start capturing failed!\n");

close_device();

}

}

VideoDevice::~VideoDevice()

{

if(stop_capturing() == FALSE)

{

}

if(uninit_device() == FALSE)

{

}

if(close_device() == FALSE)

{

}

}

int VideoDevice::open_device()

{

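fd = open(dev_name.toLocal8Bit().constData(), O_RDWR); // open the device passed to the constructor, not the hard-coded FILE_VIDEO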

if(fd == -1)

{

printf("Error opening V4L interface\n");

return FALSE;

}

return TRUE;

}

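int VideoDevice::close_device()

{

if(fd == -1)

{

return TRUE; // never opened or already closed

}

// close() returns 0 on success and -1 on error

int ret = close(fd);

fd = -1;

if(ret == -1)

{

printf("Error closing V4L interface\n");

return FALSE;

}

return TRUE;

}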

int VideoDevice::init_device()

{

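v4l2_capability cap;

v4l2_format fmt;

v4l2_streamparm setfps;

CLEAR(cap); // V4L2 structs must be zeroed: unused and reserved fields must be 0

CLEAR(fmt);

CLEAR(setfps);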

if(ioctl(fd, VIDIOC_QUERYCAP, &cap) == -1)

{

printf("Error opening device %s: unable to query device.\n",FILE_VIDEO);

return FALSE;

}

else

{

printf("driver:\t\t%s\n",cap.driver);

printf("card:\t\t%s\n",cap.card);

printf("bus_info:\t%s\n",cap.bus_info);

printf("version:\t%d\n",cap.version);

printf("capabilities:\t%x\n",cap.capabilities);

if ((cap.capabilities & V4L2_CAP_VIDEO_CAPTURE) == V4L2_CAP_VIDEO_CAPTURE)

{

printf("Device %s: supports capture.\n",FILE_VIDEO);

}

if ((cap.capabilities & V4L2_CAP_STREAMING) == V4L2_CAP_STREAMING)

{

printf("Device %s: supports streaming.\n",FILE_VIDEO);

}

}

//set fmt

fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

//fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV;

fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_MJPEG;

fmt.fmt.pix.height = 240;

fmt.fmt.pix.width = 320;

fmt.fmt.pix.field = V4L2_FIELD_INTERLACED;

if(ioctl(fd, VIDIOC_S_FMT, &fmt) == -1)

{

printf("Unable to set format\n");

return FALSE;

}

if(ioctl(fd, VIDIOC_G_FMT, &fmt) == -1)

{

printf("Unable to get format\n");

return FALSE;

}

printf("fmt.type:\t\t%d\n",fmt.type);

printf("pix.pixelformat:\t%c%c%c%c\n",fmt.fmt.pix.pixelformat & 0xFF, (fmt.fmt.pix.pixelformat >> 8) & 0xFF,(fmt.fmt.pix.pixelformat >> 16) & 0xFF, (fmt.fmt.pix.pixelformat >> 24) & 0xFF);

printf("pix.height:\t\t%d\n",fmt.fmt.pix.height);

printf("pix.width:\t\t%d\n",fmt.fmt.pix.width);

printf("pix.field:\t\t%d\n",fmt.fmt.pix.field);

//set fps

setfps.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

setfps.parm.capture.timeperframe.numerator = 1;

setfps.parm.capture.timeperframe.denominator = 60;

if(ioctl(fd, VIDIOC_S_PARM, &setfps) == -1)

{

printf("Unable to set frame rate\n");

return FALSE;

}

else

{

printf("set fps OK!\n");

}

if(ioctl(fd, VIDIOC_G_PARM, &setfps) == -1)

{

printf("Unable to get frame rate\n");

return FALSE;

}

else

{

printf("get fps OK!\n");

printf("timeperframe.numerator:\t%d\n",setfps.parm.capture.timeperframe.numerator);

printf("timeperframe.denominator:\t%d\n",setfps.parm.capture.timeperframe.denominator);

}

//setExposureMode(1);

//setExposureAbs(85);

//setExposureMode(1);

//setExposureMode(0);

//setExposureAbs(1);

//mmap

if(init_mmap() == FALSE )

{

printf("cannot mmap!\n");

return FALSE;

}

return TRUE;

}

int VideoDevice::init_mmap()

{

v4l2_requestbuffers req;

CLEAR(req); // reserved fields must be zero

req.count = 4; // request 4 buffers; the driver may adjust this number

req.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

req.memory = V4L2_MEMORY_MMAP;

//printf("1\n");

if(ioctl(fd, VIDIOC_REQBUFS, &req) == -1)

{

printf("request for buffers error\n");

return FALSE;

}

//printf("2\n");

if(req.count < 2)

{

return FALSE;

}

//printf("3\n");

buffers = (buffer*)calloc(req.count, sizeof(*buffers));

if(!buffers)

{

return FALSE;

}

//printf("4\n");

for(n_buffers = 0; n_buffers < req.count; n_buffers++)

{

v4l2_buffer buf;

CLEAR(buf);

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

buf.index = n_buffers;

if(ioctl(fd, VIDIOC_QUERYBUF, &buf) == -1)

{

return FALSE;

}

//printf("5\n");

buffers[n_buffers].length = buf.length;

buffers[n_buffers].start =

mmap(NULL, // let the kernel choose the mapping address

buf.length,

PROT_READ | PROT_WRITE,

MAP_SHARED,

fd, buf.m.offset);

if(MAP_FAILED == buffers[n_buffers].start)

{

printf("buffer map error\n");

return FALSE;

}

}

//printf("6\n");

return TRUE;

}

int VideoDevice::start_capturing()

{

unsigned int i;

for(i = 0; i < n_buffers; i++) // queue every buffer mapped by init_mmap()

{

v4l2_buffer buf;

CLEAR(buf);

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory =V4L2_MEMORY_MMAP;

buf.index = i;

if(-1 == ioctl(fd, VIDIOC_QBUF, &buf))

{

return FALSE;

}

}

v4l2_buf_type type;

type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if(-1 == ioctl(fd, VIDIOC_STREAMON, &type))

{

return FALSE;

}

return TRUE;

}

int VideoDevice::stop_capturing()

{

v4l2_buf_type type;

type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if(ioctl(fd, VIDIOC_STREAMOFF, &type) == -1)

{

return FALSE;

}

/*

if(fd != -1)

{

close(fd);

return (TRUE);

}

*/

return TRUE;

}

int VideoDevice::uninit_device()

{

unsigned int i;

for(i = 0; i < n_buffers; ++i)

{

if(-1 == munmap(buffers[i].start, buffers[i].length))

{

printf("munmap error\n");

return FALSE;

}

}

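free(buffers); // buffers was allocated with calloc(), so it must be released with free()

buffers = NULL;

n_buffers = 0;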

return TRUE;

}

int VideoDevice::get_frame(unsigned char ** yuv_buffer_pointer, unsigned int * len)

{

v4l2_buffer queue_buf;

CLEAR(queue_buf);

//printf("get frame 1\n");

queue_buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

queue_buf.memory = V4L2_MEMORY_MMAP;

if(ioctl(fd, VIDIOC_DQBUF, &queue_buf) == -1)

{

return FALSE;

}

*yuv_buffer_pointer = (unsigned char *)buffers[queue_buf.index].start;

*len = buffers[queue_buf.index].length;

index = queue_buf.index;

//printf("get frame 2\n");

return TRUE;

}

int VideoDevice::unget_frame()

{

if(index != -1)

{

v4l2_buffer queue_buf;

CLEAR(queue_buf);

queue_buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

queue_buf.memory = V4L2_MEMORY_MMAP;

queue_buf.index = index;

if(ioctl(fd, VIDIOC_QBUF, &queue_buf) == -1)

{

return FALSE;

}

return TRUE;

}

return FALSE;

}

void VideoDevice::getExposureMode()

{

int ret;

struct v4l2_control ctrl;

// get the exposure mode

ctrl.id = V4L2_CID_EXPOSURE_AUTO;

ret = ioctl(fd, VIDIOC_G_CTRL, &ctrl);

if (ret < 0)

{

printf("Get exposure Mode failed\n");

//return V4L2_UTILS_GET_EXPSURE_AUTO_TYPE_ERR;

}

else

{

printf("\nGet Exposure Mode:[%d]\n", ctrl.value);

}

}

// 1: manual exposure mode, 0: auto exposure mode

void VideoDevice::setExposureMode(int exposureMode)

{

int ret;

struct v4l2_control ctrl;

ctrl.id = V4L2_CID_EXPOSURE_AUTO;

if(exposureMode == 0)

{

ctrl.value = V4L2_EXPOSURE_APERTURE_PRIORITY; //V4L2_EXPOSURE_AUTO; // auto exposure mode (aperture priority, which UVC webcams commonly use as their auto mode)

}

else

{

ctrl.value = V4L2_EXPOSURE_MANUAL;// manual exposure mode

}

ret = ioctl(fd, VIDIOC_S_CTRL, &ctrl);

if (ret < 0)

{

printf("Set exposure Mode failed\n");

//return V4L2_UTILS_SET_EXPSURE_AUTO_TYPE_ERR;

}

else

{

printf("\nSet Exposure Mode Success :[%d]\n", ctrl.value);

}

}

void VideoDevice::getExposureLevel()

{

int ret;

struct v4l2_control ctrl;

ctrl.id = V4L2_CID_EXPOSURE;// get the exposure level; the A20 accepts 9 levels, from -4 to 4

ret = ioctl(fd, VIDIOC_G_CTRL, &ctrl);

if (ret < 0)

{

printf("Get exposure Level failed (%d)\n", ret);

//return V4L2_UTILS_GET_EXPSURE_ERR;

}

else

{

printf("\nGet Exposure Level :[%d]\n", ctrl.value);

}

}

void VideoDevice::setExposureLevel(int exposurelevel)

{

int ret;

struct v4l2_control ctrl;

// set the exposure level

ctrl.id = V4L2_CID_EXPOSURE;

ctrl.value = exposurelevel;

ret = ioctl(fd, VIDIOC_S_CTRL, &ctrl);

if (ret < 0)

{

printf("Set exposure level failed (%d)\n", ret);

//return V4L2_UTILS_SET_EXPSURE_ERR;

}

else

{

printf("\nSet exposure level Success:[%d]\n", ctrl.value);

}

}

void VideoDevice::getExposureAbs()

{

int ret;

struct v4l2_control ctrl;

ctrl.id = V4L2_CID_EXPOSURE_ABSOLUTE;

ret = ioctl(fd, VIDIOC_G_CTRL, &ctrl);

if (ret < 0)

{

printf("Get exposure Abs failed (%d)\n", ret);

//return V4L2_UTILS_SET_EXPSURE_ERR;

}

else

{

printf("\nGet exposure ABS Success:[%d]\n", ctrl.value);

}

}

void VideoDevice::setExposureAbs(int exposureAbs)

{

int ret;

struct v4l2_control ctrl;

// set the absolute exposure time

ctrl.id = V4L2_CID_EXPOSURE_ABSOLUTE;

ctrl.value = exposureAbs; // in units of 100 us

ret = ioctl(fd, VIDIOC_S_CTRL, &ctrl);

if (ret < 0)

{

printf("Set exposure Abs failed (%d)\n", ret);

//return V4L2_UTILS_SET_EXPSURE_ERR;

}

else

{

printf("\nSet exposure Abs Success:[%d]\n", ctrl.value);

}

}
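setExposureAbs() passes its argument straight to V4L2_CID_EXPOSURE_ABSOLUTE. The V4L2 specification defines that control in units of 100 µs and notes that the exposure time is limited by the frame interval. At 120 fps the interval is about 8.3 ms, or roughly 83 units. The two helpers below (hypothetical names, not part of the project) convert microseconds to that unit and show the frame-rate ceiling a given exposure implies. Whether a particular camera actually drops its frame rate or clamps the exposure is up to the driver.

```cpp
#include <cstdint>

// V4L2_CID_EXPOSURE_ABSOLUTE counts in 100 microsecond steps.
constexpr std::int32_t kExposureUnitUs = 100;

// Hypothetical helper: microseconds -> control value, rounded to the nearest
// step and never below 1.
std::int32_t exposure_us_to_ctrl(std::int32_t us) {
    std::int32_t value = (us + kExposureUnitUs / 2) / kExposureUnitUs;
    return value < 1 ? 1 : value;
}

// Hypothetical helper: highest frame rate (frames per second) compatible with
// an exposure control value (ctrl_value must be >= 1), since one exposure has
// to fit within one frame interval.
double max_fps_for_exposure(std::int32_t ctrl_value) {
    return 1e6 / (static_cast<double>(ctrl_value) * kExposureUnitUs);
}
```

For example, `vd->setExposureAbs(exposure_us_to_ctrl(1500));` would send the value 15.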

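Set the resolution to one your camera supports. With my camera, 320×240 reaches 120 fps using the parameters shown next. You can check which formats and resolutions your camera actually supports with the v4l2-ctl command-line tool. Note the -d2 option: 2 refers to the device /dev/video2, so substitute your own camera's device number.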

v4l2-ctl -d2 --list-formats-ext

For high frame rates you have to use MJPEG mode.
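One way to see why MJPEG is needed for high frame rates is to compute the raw data rate of uncompressed YUYV, which uses 2 bytes per pixel. The sketch below assumes a USB 2.0 UVC camera. It compares the raw rate with the commonly quoted ceiling of a single USB 2.0 high-speed isochronous endpoint: 3 × 1024 bytes per 125 µs microframe, about 24.6 MB/s. For example, 640×480 YUYV at 120 fps would need about 73.7 MB/s, roughly three times that ceiling. Which modes a camera actually offers is decided by its firmware, so the v4l2-ctl listing remains the authority.

```cpp
#include <cstdint>

// YUYV (YUV 4:2:2) stores 2 bytes per pixel: every 4 bytes Y0 U Y1 V hold two pixels.
constexpr std::uint64_t yuyv_frame_bytes(std::uint32_t width, std::uint32_t height) {
    return static_cast<std::uint64_t>(width) * height * 2;
}

// Raw data rate in bytes per second of an uncompressed YUYV stream.
constexpr std::uint64_t yuyv_bytes_per_second(std::uint32_t width, std::uint32_t height,
                                              std::uint32_t fps) {
    return yuyv_frame_bytes(width, height) * fps;
}

// Assumed ceiling for one USB 2.0 high-speed isochronous endpoint:
// 3 transactions x 1024 bytes per microframe x 8000 microframes per second.
constexpr std::uint64_t kUsb2IsocMaxBytesPerSecond = 3ull * 1024 * 8000;
```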

//fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV;

fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_MJPEG;

fmt.fmt.pix.height = 240;

fmt.fmt.pix.width = 320;

The Qt window class, header widget.h:

#ifndef WIDGET_H

#define WIDGET_H

#include <QWidget>

#include <QLabel>

#include <QTime>

#include <QPainter>

#include <QLineEdit>

#include "videodevice.h"

#include <string>

#include <exception>

#include <zbar.h>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

using namespace cv;

using namespace zbar;

using namespace std;

// a struct that stores one decoded code

typedef struct

{

string type;

string data;

vector <Point> location;

}decodedObject;

namespace Ui {

class Widget;

}

class Widget : public QWidget

{

Q_OBJECT

public:

explicit Widget(QWidget *parent = 0);

~Widget();

private:

Ui::Widget *ui;

QImage *frame;

QLabel *tp;

QByteArray *aa;

QPixmap *pix;

int rs;

unsigned int len;

int convert_yuv_to_rgb_buffer();

void print_quartet(unsigned int i);

VideoDevice *vd;

FILE * yuvfile;

unsigned char rgb_buffer[640*480*3];

unsigned char * yuv_buffer_pointer;

char Y_frame[640*480*2+600];

int writejpg();

cv::Mat scancoder(cv::Mat &image);

QPixmap cvMatToQPixmap(const cv::Mat &inMat);

cv::Mat QImage_to_cvMat( const QImage &image, bool inCloneImageData = true );

QImage cvMat_to_QImage(const cv::Mat &mat );

// draw the outlines of the decoded codes

void display(Mat &im, vector<decodedObject>&decodedObjects);

// detect and decode codes in the image

void decode(Mat &im, vector<decodedObject>&decodedObjects);

private slots:

void on_pushButton_start_clicked();

void paintEvent(QPaintEvent *);

void on_changeex_clicked();

void on_changesize_clicked();

//void on_pushButton_changeExposure();

};

#endif // WIDGET_H

The Qt window class implementation, widget.cpp:

#include "ui_widget.h"

#include "widget.h"

#include "common.h"

#include "videodevice.h"

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <sys/stat.h>

#include <fcntl.h>

#include <stdio.h>

#include <unistd.h>

#include <sys/types.h>

#include <sys/stat.h>

#include <fcntl.h>

#include <stdio.h>

#include <sys/ioctl.h>

#include <stdlib.h>

#include <sys/mman.h>

#include <linux/types.h>

#include <linux/videodev2.h>

#define TOTALFRAMES 151

#define CAPTURE_IDLE 0

#define CAPTURE_START 1

#define CAPTURE_STOP 2

#define CAPTURE_COMPRESS 3

char last_state = 2;

char state = 0;

long framecnt=0;

char yuvfilename[11] = {'r','c','q','0','0','0','.','j','p','g','\0'};

static QTime timec;

static int framesc = 0;

static bool started = false;

static bool multi = false;

int imagesizes = 500;

QString m_current_fps;

// results of QR code detection and decoding

vector<decodedObject> decodedObjects;

typedef struct tagBITMAPFILEHEADER{

unsigned short bfType; // the flag of bmp, value is "BM"

unsigned long bfSize; // size BMP file ,unit is bytes

unsigned long bfReserved; // 0

unsigned long bfOffBits; // must be 54

}BITMAPFILEHEADER;

typedef struct tagBITMAPINFOHEADER{

unsigned long biSize; // must be 0x28

unsigned long biWidth; //

unsigned long biHeight; //

unsigned short biPlanes; // must be 1

unsigned short biBitCount; //

unsigned long biCompression; //

unsigned long biSizeImage; //

unsigned long biXPelsPerMeter; //

unsigned long biYPelsPerMeter; //

unsigned long biClrUsed; //

unsigned long biClrImportant; //

}BITMAPINFOHEADER;

BITMAPFILEHEADER bf;

BITMAPINFOHEADER bi;

QMatrix matrix;

Widget::Widget(QWidget *parent) :

QWidget(parent),

ui(new Ui::Widget)

{

matrix.rotate(180);

//Set BITMAPINFOHEADER

bi.biSize = 40;

bi.biWidth = IMG_WIDTH;

bi.biHeight = IMG_HEIGTH;

bi.biPlanes = 1;

bi.biBitCount = 24;

bi.biCompression = 0;

bi.biSizeImage = IMG_WIDTH*IMG_HEIGTH*3;

bi.biXPelsPerMeter = 3780;

bi.biYPelsPerMeter = 3780;

bi.biClrUsed = 0;

bi.biClrImportant = 0;

//Set BITMAPFILEHEADER

bf.bfType = 0x4d42;

bf.bfSize = 54 + bi.biSizeImage;

bf.bfReserved = 0;

bf.bfOffBits = 54;

ui->setupUi(this);

vd = new VideoDevice(tr("/dev/video1"));

rs = vd->unget_frame();

frame = new QImage(rgb_buffer,640,480,QImage::Format_RGB888);

aa = new QByteArray();

pix = new QPixmap();

}

Widget::~Widget()

{

delete ui;

//delete frame;

//delete [] Y_frame;

//delete [] Cr_frame;

//delete [] Cb_frame;

}

void Widget::paintEvent(QPaintEvent *)

{

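rs = vd->get_frame((unsigned char **)(&yuv_buffer_pointer),&len);

if(rs == 0)

{

return; // no frame was dequeued (e.g. the device failed to open), so yuv_buffer_pointer is not valid

}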

if(last_state==2 && state == 0)

{

yuvfile = fopen(yuvfilename,"wb+");

yuvfilename[5]++;

}

if(state == 1)

{

rs = writejpg();

printf("writejpg\n");

}

if(last_state==1 && state==2)

{

fwrite(Y_frame, len, 1, yuvfile);

fclose(yuvfile);

}

last_state=state;

//MJPEG

//qpixmap

//pix->loadFromData(yuv_buffer_pointer,len);

//ui->label->setPixmap(*pix);

//qimage

//frame->loadFromData(yuv_buffer_pointer,len);

//ui->label->setPixmap(QPixmap::fromImage(*frame));

frame->loadFromData(yuv_buffer_pointer,len);

QImage swapped = *frame;

Mat images = QImage_to_cvMat(swapped);

int new_width,new_height,width,height,channel;

width=images.cols;

height=images.rows;

channel=images.channels();

// resize the image to the width set in the UI, keeping the aspect ratio

new_width=imagesizes;

new_height=int(new_width*1.0/width*height);

cv::resize(images, images, cv::Size(new_width, new_height));

scancoder(images);

QImage xii = cvMat_to_QImage(images);

ui->label->setPixmap(QPixmap::fromImage(xii));//cvMatToQPixmap(image)

rs = vd->unget_frame();

QPainter painter(this);

if (!started || timec.elapsed() > 1000) {

qreal fps = framesc * 1000. / timec.elapsed();

if (fps == 0)

m_current_fps = "counting fps...";

else

m_current_fps = QString::fromLatin1("%3 FPS").arg((int) qRound(fps));

timec.start();

started = true;

framesc = 0;

//printf("fps %f\n",fps);

} else {

++framesc;

ui->fpslabel->setText(m_current_fps);

}

}

int Widget::writejpg()

{

int x,y;

long int index1 =0;

if (yuv_buffer_pointer[0] == '\0')

{

return -1;

}

for(x=0;x<len;x++)

{

Y_frame[x]=yuv_buffer_pointer[x];

}

printf("writed frame %ld\n",framecnt);

return 0;

}

/*yuv格式转换为rgb格式*/

int Widget::convert_yuv_to_rgb_buffer()

{

unsigned long in, out = 640*480*3 - 1;

int y0, u, y1, v;

int r, g, b;

for(in = 0; in < IMG_WIDTH * IMG_HEIGTH * 2; in += 4)

{

y0 = yuv_buffer_pointer[in + 0];

u = yuv_buffer_pointer[in + 1];

y1 = yuv_buffer_pointer[in + 2];

v = yuv_buffer_pointer[in + 3];

r = y0 + (1.370705 * (v-128));

g = y0 - (0.698001 * (v-128)) - (0.337633 * (u-128));

b = y0 + (1.732446 * (u-128));

if(r > 255) r = 255;

if(g > 255) g = 255;

if(b > 255) b = 255;

if(r < 0) r = 0;

if(g < 0) g = 0;

if(b < 0) b = 0;

rgb_buffer[out--] = r;

rgb_buffer[out--] = g;

rgb_buffer[out--] = b;

r = y1 + (1.370705 * (v-128));

g = y1 - (0.698001 * (v-128)) - (0.337633 * (u-128));

b = y1 + (1.732446 * (u-128));

if(r > 255) r = 255;

if(g > 255) g = 255;

if(b > 255) b = 255;

if(r < 0) r = 0;

if(g < 0) g = 0;

if(b < 0) b = 0;

rgb_buffer[out--] = r;

rgb_buffer[out--] = g;

rgb_buffer[out--] = b;

}

return 0;

}

void Widget::on_pushButton_start_clicked()

{

switch(state)

{

case 0:

{

ui->pushButton_start->setText("stop");

state = 1;

break;

}

case 1:

{

ui->pushButton_start->setText("compress");

state = 2;

break;

}

case 2:

{

ui->pushButton_start->setText("start");

framecnt=0;

state = 0;

break;

}

default :break;

}

}

void Widget::on_changeex_clicked()

{

QString strtext = ui->edit->text();

int a = strtext.toInt();

vd->stop_capturing();

vd->setExposureMode(1);

vd->setExposureAbs(a);

vd->start_capturing();

}

void Widget::on_changesize_clicked()

{

QString strtext = ui->sizeedit->text();

int a = strtext.toInt();

imagesizes = a;

}

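// detect and decode codes in the image

void Widget::decode(Mat &im, vector<decodedObject>&decodedObjects)

{

// create the zbar scanner

ImageScanner scanner;

// configure the scanner

scanner.set_config(ZBAR_NONE, ZBAR_CFG_ENABLE, 1);// ZBAR_NONE applies the setting to every symbology; to scan QR codes only, first disable all with (ZBAR_NONE, ZBAR_CFG_ENABLE, 0), then enable ZBAR_QRCODE

// convert the image to grayscale

Mat imGray;

cvtColor(im, imGray,COLOR_BGR2GRAY);

// wrap the grayscale data as a zbar image in Y800 (8-bit grayscale) format

Image image(im.cols, im.rows, "Y800", (uchar *)imGray.data, im.cols * im.rows);

// scan

int n = scanner.scan(image);

// collect the results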

for(Image::SymbolIterator symbol = image.symbol_begin(); symbol != image.symbol_end(); ++symbol)

{

decodedObject obj;

obj.type = symbol->get_type_name();// symbol type

obj.data = symbol->get_data();// decoded data string

// print the result to the console

//printf("type: %s", obj.type.c_str());

//printf(" data: %s\n", obj.data.c_str());

// Draw text

putText(im, obj.data, Point(symbol->get_location_x(2),symbol->get_location_y(2)), FONT_HERSHEY_PLAIN, 1, Scalar( 110, 220, 0 ));

// get the corner coordinates of the code

for(int i = 0; i< symbol->get_location_size(); i++)

{

obj.location.push_back(Point(symbol->get_location_x(i),symbol->get_location_y(i)));

}

// store each result in decodedObjects

decodedObjects.push_back(obj);

}

}

// draw the detected codes

void Widget::display(Mat &im, vector<decodedObject>&decodedObjects)

{

// loop over every decoded code

for(int i = 0; i < decodedObjects.size(); i++)

{

vector<Point> points = decodedObjects[i].location;

vector<Point> hull;// outline polygon

// with more than 4 points, draw their convex hull as the outline

if(points.size() > 4)

convexHull(points, hull);

else

hull = points;

// number of polygon vertices

int n = hull.size();

for(int j = 0; j < n; j++)

{

line(im, hull[j], hull[ (j+1) % n], Scalar(255,0,0), 3);

}

}

// show the result

//imshow("Results", im);

//waitKey(0);

}

cv::Mat Widget::scancoder(cv::Mat &image)

{

decode(image, decodedObjects);

display(image, decodedObjects);

decodedObjects.clear();

return image.clone();

}

QImage Widget::cvMat_to_QImage(const cv::Mat &mat ) {

/* switch ( mat.type() )

{

// 8-bit, 4 channel

case CV_8UC4:

{

QImage image( mat.data, mat.cols, mat.rows, mat.step, QImage::Format_RGB32 );

return image;

}

// 8-bit, 3 channel

case CV_8UC3:

{

QImage image( mat.data, mat.cols, mat.rows, mat.step, QImage::Format_RGB888 );

return image.rgbSwapped();

}

// 8-bit, 1 channel

case CV_8UC1:

{

static QVector<QRgb> sColorTable;

// only create our color table once

if ( sColorTable.isEmpty() )

{

for ( int i = 0; i < 256; ++i )

sColorTable.push_back( qRgb( i, i, i ) );

}

QImage image( mat.data, mat.cols, mat.rows, mat.step, QImage::Format_Indexed8 );

image.setColorTable( sColorTable );

return image;

}

default:

printf("Image format is not supported: depth=%d and %d channels\n", mat.depth(), mat.channels());

//qDebug("Image format is not supported: depth=%d and %d channels\n", mat.depth(), mat.channels());

break;

}

return QImage();

*/

// 8-bits unsigned, NO. OF CHANNELS = 1

if(mat.type() == CV_8UC1)

{

QImage image(mat.cols, mat.rows, QImage::Format_Indexed8);

// Set the color table (used to translate colour indexes to qRgb values)

static QVector<QRgb> sColorTable;

// only create our color table once

if ( sColorTable.isEmpty() )

{

for ( int i = 0; i < 256; ++i )

sColorTable.push_back( qRgb( i, i, i ) );

}

image.setColorTable( sColorTable );

// Copy input Mat

uchar *pSrc = mat.data;

for(int row = 0; row < mat.rows; row ++)

{

uchar *pDest = image.scanLine(row);

memcpy(pDest, pSrc, mat.cols);

pSrc += mat.step;

}

return image;

}

// 8-bits unsigned, NO. OF CHANNELS = 3

else if(mat.type() == CV_8UC3)

{

// Copy input Mat

const uchar *pSrc = (const uchar*)mat.data;

// Create QImage with same dimensions as input Mat

QImage image(pSrc, mat.cols, mat.rows, mat.step, QImage::Format_RGB888);

return image.rgbSwapped();

}

else if(mat.type() == CV_8UC4)

{

// Copy input Mat

const uchar *pSrc = (const uchar*)mat.data;

// Create QImage with same dimensions as input Mat

QImage image(pSrc, mat.cols, mat.rows, mat.step, QImage::Format_ARGB32);

return image.copy();

}

else

{

return QImage();

}

}

cv::Mat Widget::QImage_to_cvMat( const QImage &image, bool inCloneImageData) {

switch ( image.format() )

{

case QImage::Format_ARGB32:

case QImage::Format_ARGB32_Premultiplied:

// 8-bit, 4 channel

case QImage::Format_RGB32:

{

cv::Mat mat( image.height(), image.width(), CV_8UC4, const_cast<uchar*>(image.bits()), image.bytesPerLine() );

return (inCloneImageData ? mat.clone() : mat);

}

// 8-bit, 3 channel

case QImage::Format_RGB888:

{

if ( !inCloneImageData ) {

printf(" Conversion requires cloning since we use a temporary QImage\n");

//qWarning() << "ASM::QImageToCvMat() - Conversion requires cloning since we use a temporary QImage";

}

QImage swapped = image.rgbSwapped();

return cv::Mat( swapped.height(), swapped.width(), CV_8UC3, const_cast<uchar*>(swapped.bits()), swapped.bytesPerLine() ).clone();

}

// 8-bit, 1 channel

case QImage::Format_Indexed8:

{

cv::Mat mat( image.height(), image.width(), CV_8UC1, const_cast<uchar*>(image.bits()), image.bytesPerLine() );

return (inCloneImageData ? mat.clone() : mat);

}

default:

printf("Image format is not supported: depth=%d and %d format\n", image.depth(), image.format());

//qDebug("Image format is not supported: depth=%d and %d format\n", image.depth(), image.format();

break;

}

return cv::Mat();

}

QPixmap Widget::cvMatToQPixmap(const cv::Mat &inMat)

{

return QPixmap::fromImage(cvMat_to_QImage(inMat));

}
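In paintEvent(), the name of each saved file is advanced with `yuvfilename[5]++`, which increments only the last digit of "rcq000.jpg". That gives rcq000.jpg through rcq009.jpg. After that the character becomes ':' (the ASCII character after '9'). A possible alternative formats a counter with snprintf. The helper and its counter are hypothetical, not part of the project.

```cpp
#include <cstdio>
#include <string>

// Hypothetical replacement for the yuvfilename[5]++ scheme: format the capture
// index as three digits, giving rcq000.jpg ... rcq999.jpg, then wrapping to 000.
std::string capture_file_name(unsigned int index) {
    char name[32];
    std::snprintf(name, sizeof(name), "rcq%03u.jpg", index % 1000);
    return std::string(name);
}
```

It could be used as `yuvfile = fopen(capture_file_name(file_index++).c_str(), "wb+");`, where `file_index` is an assumed unsigned counter.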

The Qt UI header, ui_widget.h:

/********************************************************************************

** Form generated from reading UI file 'widget.ui'

**

** Created by: Qt User Interface Compiler version 5.9.5

**

** WARNING! All changes made in this file will be lost when recompiling UI file!

********************************************************************************/

#ifndef UI_WIDGET_H

#define UI_WIDGET_H

#include <QtCore/QVariant>

#include <QtWidgets/QAction>

#include <QtWidgets/QApplication>

#include <QtWidgets/QButtonGroup>

#include <QtWidgets/QHeaderView>

#include <QtWidgets/QLabel>

#include <QtWidgets/QPushButton>

#include <QtWidgets/QWidget>

#include <QtWidgets/QLineEdit>

QT_BEGIN_NAMESPACE

class Ui_Widget

{

public:

QLabel *label,*fpslabel;

QLineEdit *edit,*sizeedit;

QPushButton *pushButton_start,*changeex,*changesize;

void setupUi(QWidget *Widget)

{

if (Widget->objectName().isEmpty())

Widget->setObjectName(QStringLiteral("Widget"));

Widget->resize(650, 720); // match the minimum size set below

Widget->setMinimumSize(QSize(650, 720));

Widget->setMaximumSize(QSize(1280, 720));

label = new QLabel(Widget);

label->setObjectName(QStringLiteral("label"));

label->setGeometry(QRect(5, 5, 640, 480));

label->setMinimumSize(QSize(640, 480));

label->setMaximumSize(QSize(1280, 720));

fpslabel = new QLabel(Widget);

fpslabel->setObjectName(QStringLiteral("fpslabel"));

fpslabel->setGeometry(QRect(140, 500, 101, 41));

fpslabel->setMinimumSize(QSize(101, 41));

fpslabel->setMaximumSize(QSize(101, 41));

edit = new QLineEdit(Widget);

edit->setObjectName(QStringLiteral("edit"));

edit->setPlaceholderText("Normal");

edit->setEchoMode(QLineEdit::Normal);

edit->setGeometry(QRect(20, 570, 101, 41));

changeex = new QPushButton(Widget);

changeex->setObjectName(QStringLiteral("changeex"));

changeex->setGeometry(QRect(140, 570, 101, 41));

sizeedit = new QLineEdit(Widget);

sizeedit->setObjectName(QStringLiteral("sizeedit"));

sizeedit->setPlaceholderText("Normal");

sizeedit->setEchoMode(QLineEdit::Normal);

sizeedit->setGeometry(QRect(20, 640, 101, 41));

changesize = new QPushButton(Widget);

changesize->setObjectName(QStringLiteral("changesize"));

changesize->setGeometry(QRect(140, 640, 101, 41));

pushButton_start = new QPushButton(Widget);

pushButton_start->setObjectName(QStringLiteral("pushButton_start"));

pushButton_start->setGeometry(QRect(20, 500, 101, 41));

retranslateUi(Widget);

QMetaObject::connectSlotsByName(Widget);

} // setupUi

void retranslateUi(QWidget *Widget)

{

Widget->setWindowTitle(QApplication::translate("Widget", "zed_camera_AVI", Q_NULLPTR));

label->setText(QString());

fpslabel->setText(QString());

pushButton_start->setText(QApplication::translate("Widget", "Start", Q_NULLPTR));

changeex->setText(QApplication::translate("Widget", "change", Q_NULLPTR));

changesize->setText(QApplication::translate("Widget", "cgsize", Q_NULLPTR));

} // retranslateUi

};

namespace Ui {

class Widget: public Ui_Widget {};

} // namespace Ui

QT_END_NAMESPACE

#endif // UI_WIDGET_H

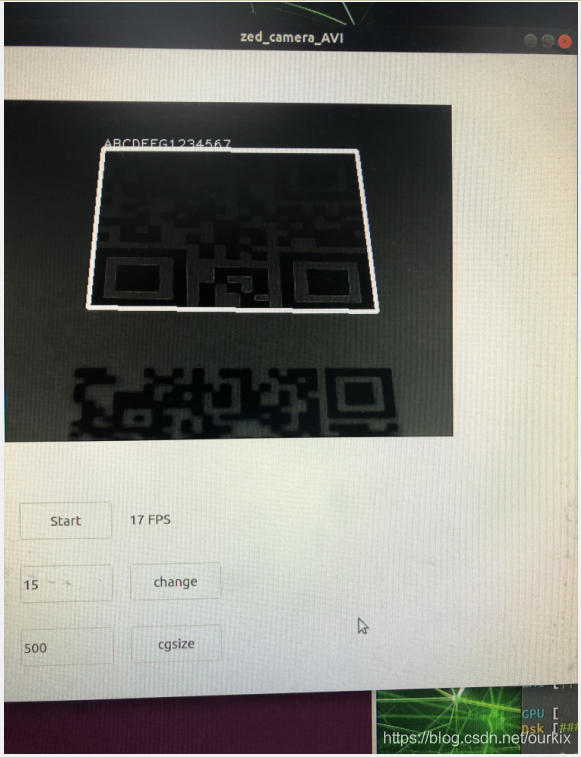

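All of these files are packaged together. Download and extract the archive, enter its directory, and build with the commands below.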

Download link:

Click to download

qmake zed_YUV_camera_prj.pro

make -j4

Finally, run:

./zed_YUV_camera_prj

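The first button, start, captures and saves images. Its label cycles through start, stop and compress. While it reads stop, each new frame is copied into a buffer, and the next click writes the last copied frame to the current file (rcq000.jpg, rcq001.jpg, and so on).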

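The second edit box sets the exposure time in units of 100 µs. Clicking change switches the camera to manual exposure and applies the value, so the value in the screenshot above corresponds to 1500 µs.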

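The third edit box, applied with cgsize, sets the width of the image passed to zbar for decoding. The height is scaled to keep the aspect ratio. The smaller the image, the faster decoding runs.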
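The size computation in paintEvent() can be isolated as below. This is a sketch with hypothetical names, and it rounds where the project truncates with `int(...)`. Note that the default `imagesizes = 500` is wider than the 320-pixel camera frame. By default the frame is therefore upscaled to 500×375 (187,500 pixels instead of 76,800). Since zbar's work grows roughly with the pixel count, widths below 320 are where the speed-up comes from.

```cpp
#include <cmath>

// Hypothetical result type for the scaled frame size.
struct ScaledSize {
    int width;
    int height;
};

// Scale a width x height frame to target_width columns, keeping the aspect
// ratio (width must be > 0). The project computes
// int(new_width * 1.0 / width * height), which truncates; this rounds instead.
ScaledSize scale_to_width(int width, int height, int target_width) {
    const double h = static_cast<double>(target_width) * height / width;
    const int rows = static_cast<int>(std::lround(h));
    return ScaledSize{target_width, rows < 1 ? 1 : rows};
}
```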

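Here the captured frames are JPEG (MJPEG), so writing the raw frame data straight to a file already produces a JPEG image. If you instead capture YUV and convert it to BMP, then on the Jetson you must write the BMP header fields one at a time, because writing each whole struct at once produces a broken file. The most likely cause is that `unsigned long` is 8 bytes on the 64-bit (aarch64) Jetson but 4 bytes on a 32-bit Raspberry Pi OS. Together with alignment padding, this means the structs no longer match the fixed 2- and 4-byte fields of the BMP headers.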
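Before any header is written, the YUV route needs the frame converted to RGB, which is what convert_yuv_to_rgb_buffer() does. That function writes its output back to front (`out--`), which effectively reverses the whole byte buffer. The image ends up rotated by 180° with each pixel stored as B, G, R. The sketch below (hypothetical function names) uses the same coefficients but writes pixels in normal top-left-first R, G, B order. It assumes a packed YUYV frame with an even width.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Clamp an int to the 0..255 range of an 8-bit channel.
static std::uint8_t clamp_u8(int v) {
    return static_cast<std::uint8_t>(std::min(255, std::max(0, v)));
}

// Convert one packed YUYV (Y0 U Y1 V) frame to RGB888 in top-left-first order,
// using the same coefficients as convert_yuv_to_rgb_buffer(). Width must be even.
std::vector<std::uint8_t> yuyv_to_rgb888(const std::uint8_t* yuyv, int width, int height) {
    std::vector<std::uint8_t> rgb(static_cast<std::size_t>(width) * height * 3);
    const std::size_t in_bytes = static_cast<std::size_t>(width) * height * 2;
    std::size_t out = 0;
    for (std::size_t in = 0; in + 3 < in_bytes; in += 4) {
        const int y[2] = {yuyv[in], yuyv[in + 2]};  // two luma samples
        const int u = yuyv[in + 1] - 128;           // shared chroma, centred on 0
        const int v = yuyv[in + 3] - 128;
        for (int k = 0; k < 2; ++k) {
            rgb[out++] = clamp_u8(static_cast<int>(y[k] + 1.370705 * v));                  // R
            rgb[out++] = clamp_u8(static_cast<int>(y[k] - 0.698001 * v - 0.337633 * u));   // G
            rgb[out++] = clamp_u8(static_cast<int>(y[k] + 1.732446 * u));                  // B
        }
    }
    return rgb;
}
```

Note that a BMP stores pixels as B, G, R with the bottom row first. When writing a BMP, each row therefore still has to be reordered from this output.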

//Set BITMAPINFOHEADER

bi.biSize = 40;

bi.biWidth = IMG_WIDTH;

bi.biHeight = IMG_HEIGTH;

bi.biPlanes = 1;

bi.biBitCount = 24;

bi.biCompression = 0;

bi.biSizeImage = IMG_WIDTH*IMG_HEIGTH*3;

bi.biXPelsPerMeter = 3780;

bi.biYPelsPerMeter = 3780;

bi.biClrUsed = 0;

bi.biClrImportant = 0;

//Set BITMAPFILEHEADER

bf.bfType = 0x4d42;

bf.bfSize = 54 + bi.biSizeImage;

bf.bfReserved = 0;

bf.bfOffBits = 54;

// When writing, write the members above one by one. Writing the whole struct works on the Raspberry Pi, but not on the Jetson Nano.

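For example, with POSIX write() on a little-endian system, write only the low 4 bytes of each unsigned long field. Here fd1 is the output file descriptor; adapt this to whatever write function you use. The file header comes first:

write(fd1, &bf.bfType, 2);

write(fd1, &bf.bfSize, 4);

write(fd1, &bf.bfReserved, 4);

write(fd1, &bf.bfOffBits, 4);

write(fd1, &bi.biSize, 4);

write(fd1, &bi.biWidth, 4);

Continue in the same way for the remaining BITMAPINFOHEADER fields: biPlanes and biBitCount are 2 bytes each, and all the others are 4 bytes.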
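A more portable variant avoids depending on `sizeof(unsigned long)`, struct padding or host byte order. It serializes each header field explicitly as little-endian bytes. The sketch below (hypothetical function names) builds the 54-byte header of a 24-bit uncompressed BMP: a 14-byte file header followed by a 40-byte info header. It uses the same values as the struct code above. It also pads rows to a multiple of 4 bytes, which makes no difference for a 640-pixel width.

```cpp
#include <cstdint>
#include <vector>

// Append `value` as `nbytes` little-endian bytes (BMP headers are little-endian).
static void put_le(std::vector<std::uint8_t>& out, std::uint32_t value, int nbytes) {
    for (int i = 0; i < nbytes; ++i)
        out.push_back(static_cast<std::uint8_t>((value >> (8 * i)) & 0xFF));
}

// Build the 54-byte header of a 24-bit uncompressed BMP field by field.
std::vector<std::uint8_t> bmp24_header(std::int32_t width, std::int32_t height) {
    const std::uint32_t row_bytes = (static_cast<std::uint32_t>(width) * 3 + 3) & ~3u; // rows padded to 4 bytes
    const std::uint32_t image_bytes = row_bytes * static_cast<std::uint32_t>(height);
    std::vector<std::uint8_t> h;
    h.reserve(54);
    // BITMAPFILEHEADER (14 bytes)
    put_le(h, 0x4D42, 2);                              // bfType: "BM"
    put_le(h, 54 + image_bytes, 4);                    // bfSize
    put_le(h, 0, 4);                                   // bfReserved1 + bfReserved2
    put_le(h, 54, 4);                                  // bfOffBits
    // BITMAPINFOHEADER (40 bytes)
    put_le(h, 40, 4);                                  // biSize
    put_le(h, static_cast<std::uint32_t>(width), 4);   // biWidth
    put_le(h, static_cast<std::uint32_t>(height), 4);  // biHeight (positive: bottom row first)
    put_le(h, 1, 2);                                   // biPlanes
    put_le(h, 24, 2);                                  // biBitCount
    put_le(h, 0, 4);                                   // biCompression = BI_RGB
    put_le(h, image_bytes, 4);                         // biSizeImage
    put_le(h, 3780, 4);                                // biXPelsPerMeter (about 96 DPI)
    put_le(h, 3780, 4);                                // biYPelsPerMeter
    put_le(h, 0, 4);                                   // biClrUsed
    put_le(h, 0, 4);                                   // biClrImportant
    return h;
}
```

The header would then be written with something like `fwrite(h.data(), 1, h.size(), fp);`. The pixel rows follow, bottom row first, each pixel stored as B, G, R.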