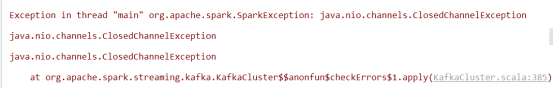

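Running Spark Streaming with Kafka fails with a java.nio exception. If the port in the broker list is wrong, or the Kafka server has not been started, you will see the following error: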

Exception in thread "main" org.apache.spark.SparkException: java.nio.channels.ClosedChannelException

at org.apache.spark.streaming.kafka.KafkaCluster$$anonfun$checkErrors$1.apply(KafkaCluster.scala:366)

at org.apache.spark.streaming.kafka.KafkaCluster$$anonfun$checkErrors$1.apply(KafkaCluster.scala:366)

at scala.util.Either.fold(Either.scala:97)

at org.apache.spark.streaming.kafka.KafkaCluster$.checkErrors(KafkaCluster.scala:365)

at org.apache.spark.streaming.kafka.KafkaUtils$.getFromOffsets(KafkaUtils.scala:222)

at org.apache.spark.streaming.kafka.KafkaUtils$.createDirectStream(KafkaUtils.scala:484)

at com.xxx.spark.main.xxx.createStream(xxx.scala:223)

at com.xxx.spark.main.xxx.createStreamingContext(xxx.scala:72)

at com.xxx.spark.main.xxx$$anonfun$getOrCreateStreamingContext$1.apply(xxx.scala:47)

at com.xxx.spark.main.xxx$$anonfun$getOrCreateStreamingContext$1.apply(xxx.scala:47)

at scala.Option.getOrElse(Option.scala:120)

at org.apache.spark.streaming.StreamingContext$.getOrCreate(StreamingContext.scala:864)

at com.xxx.spark.main.xxx.getOrCreateStreamingContext(xxx.scala:47)

at com.xxx.spark.main.xxx$.main(xxx.scala:34)

at com.xxx.spark.main.xxx.main(xxx.scala)

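Solution: check that the Kafka server is listening on the expected port. If Kafka is already running, kill the process (kill followed by its process ID) and start it again.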
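One way to catch this before the job reaches createDirectStream is a small pre-flight check that tries to open a TCP connection to every broker in the list. The sketch below is only an illustration: the class BrokerPreflight and its methods are hypothetical helpers, not part of Spark or Kafka, and the default localhost:9092 and the 2-second timeout are assumptions. A successful connection only shows that something is listening on that port, not that the broker is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for illustration; not part of Spark or Kafka.
class BrokerPreflight {

    // Splits a "host1:port1,host2:port2" broker list into host/port pairs.
    static List<InetSocketAddress> parseBrokerList(String brokerList) {
        List<InetSocketAddress> brokers = new ArrayList<>();
        for (String entry : brokerList.split(",")) {
            String trimmed = entry.trim();
            if (trimmed.isEmpty()) {
                continue; // tolerate trailing commas
            }
            int colon = trimmed.lastIndexOf(':');
            if (colon <= 0 || colon == trimmed.length() - 1) {
                throw new IllegalArgumentException("Expected host:port but got: " + trimmed);
            }
            String host = trimmed.substring(0, colon);
            int port = Integer.parseInt(trimmed.substring(colon + 1));
            // createUnresolved avoids a DNS lookup while parsing.
            brokers.add(InetSocketAddress.createUnresolved(host, port));
        }
        return brokers;
    }

    // Returns true if a TCP connection to host:port succeeds within the timeout.
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timeout, or unknown host: treat as unreachable.
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed default; pass your own broker list as the first argument.
        String brokerList = args.length > 0 ? args[0] : "localhost:9092";
        for (InetSocketAddress broker : parseBrokerList(brokerList)) {
            boolean ok = isReachable(broker.getHostString(), broker.getPort(), 2000);
            System.out.println(broker.getHostString() + ":" + broker.getPort()
                    + (ok ? " is reachable" : " is NOT reachable"));
        }
    }
}
```

If a broker is reported as not reachable, the broker list most likely points at the wrong port or the Kafka server is down, which matches the ClosedChannelException above.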

Setting up one-click start and stop for Kafka:

https://blog.csdn.net/qq_43412289/article/details/100633902

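Copyright notice: this is an original article by qq_43412289, released under the CC 4.0 BY-SA license. When reposting, please include a link to the original source and this notice.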