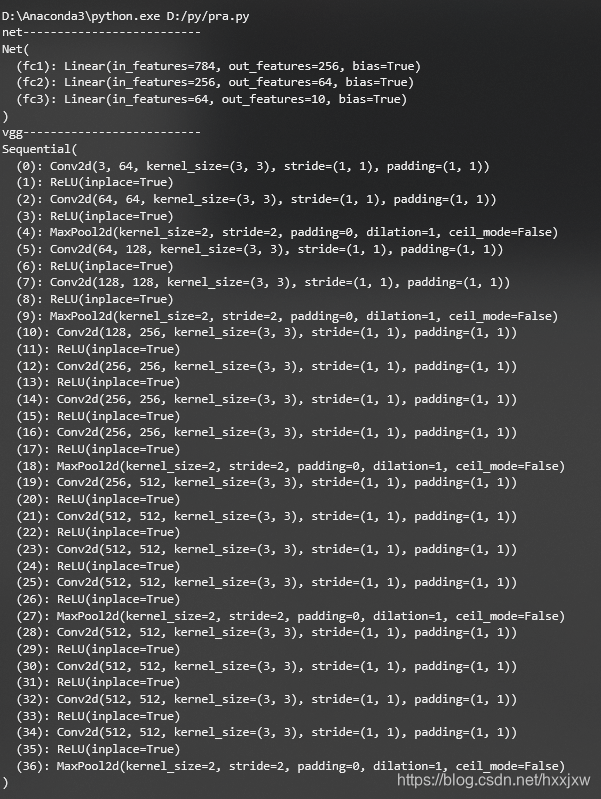

Once a model object has been created, printing it directly outputs a fairly complete listing of its layers.

    net = Net()
    print(net)

Note that the `Net` model here does not use a `Sequential` container.

    from torch import nn
    from torch.nn import functional as F
    from torchvision import models


    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.fc1 = nn.Linear(28 * 28, 256)
            self.fc2 = nn.Linear(256, 64)
            self.fc3 = nn.Linear(64, 10)

        def forward(self, x):
            x = F.relu(self.fc1(x))
            x = F.relu(self.fc2(x))
            x = self.fc3(x)
            return x


    print('net--------------------------')
    net = Net()
    print(net)

    print('vgg--------------------------')
    vgg = models.vgg19(pretrained=True).features
    print(vgg)

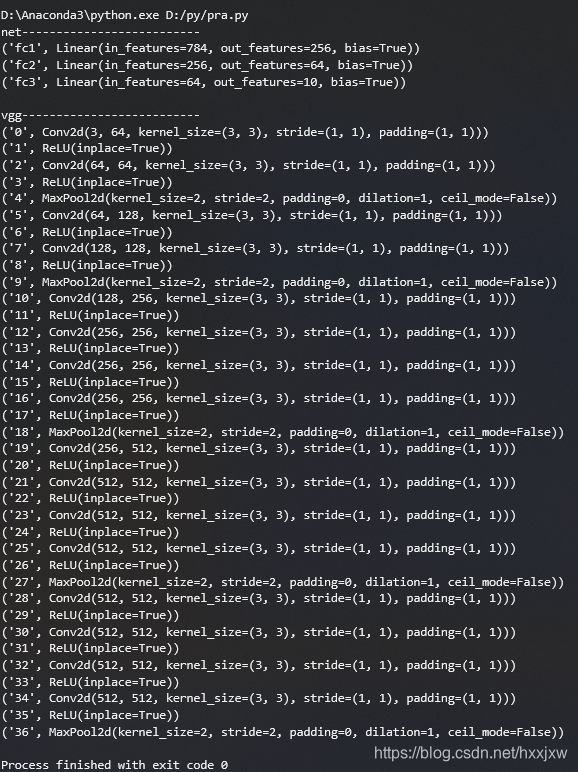

model._modules.items()

Iterating over it prints each layer in turn, as a (name, layer) pair.

    from torch import nn
    from torch.nn import functional as F
    from torchvision import models


    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.fc1 = nn.Linear(28 * 28, 256)
            self.fc2 = nn.Linear(256, 64)
            self.fc3 = nn.Linear(64, 10)

        def forward(self, x):
            x = F.relu(self.fc1(x))
            x = F.relu(self.fc2(x))
            x = self.fc3(x)
            return x


    net = Net()
    vgg = models.vgg19(pretrained=True).features

    print('net--------------------------')
    for i in net._modules.items():
        print(i)
    print()

    print('vgg--------------------------')
    for i in vgg._modules.items():
        print(i)
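`_modules` is an internal attribute (the leading underscore marks it as private). As a minimal sketch, assuming a hypothetical `TinyNet` class that mirrors the `Net` above, the public `named_children()` method yields the same (name, layer) pairs for a model with no duplicated or `None` submodules:

```python
from torch import nn
from torch.nn import functional as F


class TinyNet(nn.Module):
    """Hypothetical stand-in for the Net class above (same three layers)."""

    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(28 * 28, 256)
        self.fc2 = nn.Linear(256, 64)
        self.fc3 = nn.Linear(64, 10)

    def forward(self, x):
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return self.fc3(x)


tiny = TinyNet()

# Pairs read from the private dictionary.
private_pairs = list(tiny._modules.items())
# Pairs read through the public API.
public_pairs = list(tiny.named_children())

for name, layer in public_pairs:
    print(name, '->', layer)
```

Preferring `named_children()` avoids depending on an implementation detail of `nn.Module`.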

model.modules() & model.children()

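Both modules() and children() return the components that make up a network model. children() returns only the outermost (direct) components, whereas modules() returns all components, starting with the model itself and including sub-components at every level of nesting.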

Using a nested list as an analogy:
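The list analogy can be sketched in plain Python. The helper functions `children` and `modules` below are hypothetical illustrations written for this example, not PyTorch APIs; they only mimic the traversal order on a nested list.

```python
def children(item):
    """Return only the outermost elements of a nested list."""
    return list(item)


def modules(item):
    """Return the item itself, then every nested element, depth-first."""
    result = [item]
    if isinstance(item, list):
        for element in item:
            result.extend(modules(element))
    return result


nested = [1, 2, [3, 4]]

print(children(nested))  # [1, 2, [3, 4]]
print(modules(nested))   # [[1, 2, [3, 4]], 1, 2, [3, 4], 3, 4]
```

In this picture the inner list `[3, 4]` plays the role of a nested container such as `Sequential`: `children` stops at it, while `modules` also descends into it.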

model.modules()

    from torch import nn
    from torch.nn import functional as F
    from torchvision import models


    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.fc1 = nn.Linear(28 * 28, 256)
            self.fc2 = nn.Linear(256, 64)
            self.fc3 = nn.Linear(64, 10)

        def forward(self, x):
            x = F.relu(self.fc1(x))
            x = F.relu(self.fc2(x))
            x = self.fc3(x)
            return x


    net = Net()
    vgg = models.vgg19(pretrained=True).features

    print('net--------------------------')
    for i in net.modules():
        print(i)
    print()

    print('vgg--------------------------')
    for i in vgg.modules():
        print(i)

model.children()

    from torch import nn
    from torch.nn import functional as F
    from torchvision import models


    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.fc1 = nn.Linear(28 * 28, 256)
            self.fc2 = nn.Linear(256, 64)
            self.fc3 = nn.Linear(64, 10)

        def forward(self, x):
            x = F.relu(self.fc1(x))
            x = F.relu(self.fc2(x))
            x = self.fc3(x)
            return x


    net = Net()
    vgg = models.vgg19(pretrained=True).features

    print('net--------------------------')
    for i in net.children():
        print(i)
    print()

    print('vgg--------------------------')
    for i in vgg.children():
        print(i)

For these two models the result is the same as with model.modules(), except that modules() also yields the model itself as its first element.
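On a flat (non-nested) model, the two traversals can be compared directly. The following is a minimal sketch; the small `flat` model is an assumption made up for illustration, chosen so that no pretrained weights need to be downloaded.

```python
from torch import nn

# Hypothetical flat model: a container holding only single layers.
flat = nn.Sequential(
    nn.Linear(28 * 28, 256),
    nn.ReLU(),
    nn.Linear(256, 10),
)

all_modules = list(flat.modules())
direct_children = list(flat.children())

# modules() yields one extra element: the model itself, in first position.
print(len(all_modules), len(direct_children))  # 4 3
print(all_modules[0] is flat)                  # True

# After that first element, both traversals visit the same layer objects.
same_layers = all(a is b for a, b in zip(all_modules[1:], direct_children))
print(same_layers)                             # True
```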

The difference between them shows up when the network structure is nested, for example:

    from collections import OrderedDict

    import torch


    class Net4(torch.nn.Module):
        def __init__(self):
            super(Net4, self).__init__()
            self.conv = torch.nn.Sequential(
                OrderedDict([
                    ("conv1", torch.nn.Conv2d(3, 32, 3, 1, 1)),
                    ("relu1", torch.nn.ReLU()),
                    ("pool1", torch.nn.MaxPool2d(2)),
                ])
            )
            self.dense = torch.nn.Sequential(
                OrderedDict([
                    ("dense1", torch.nn.Linear(32 * 3 * 3, 128)),
                    ("relu2", torch.nn.ReLU()),
                    ("dense2", torch.nn.Linear(128, 10)),
                ])
            )

        def forward(self, x):
            conv_out = self.conv(x)
            res = conv_out.view(conv_out.size(0), -1)
            out = self.dense(res)
            return out


    print("Method 4:")
    model4 = Net4()

    # modules() walks through every component of the model (Sequential
    # containers, individual layers, custom layers, and so on) from the
    # outermost level inward, down to the innermost single layers.
    for i in model4.modules():
        print(i)
        print('==============================')

    print('=============divider=================')

    # named_modules() does the same, but each element is a tuple: the first
    # item is the name and the second is the layer object itself.
    for i in model4.named_modules():
        print(i)
        print('==============================')

    '''
    Closest to the surface, i.e. the shallowest level, is the model itself
    Net4(
      (conv): Sequential(
        (conv1): Conv2d(3, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (relu1): ReLU()
        (pool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      )
      (dense): Sequential(
        (dense1): Linear(in_features=288, out_features=128, bias=True)
        (relu2): ReLU()
        (dense2): Linear(in_features=128, out_features=10, bias=True)
      )
    )
    ==============================
    One level deeper: an attribute of the model
    Sequential(
      (conv1): Conv2d(3, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (relu1): ReLU()
      (pool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    )
    ==============================
    Deeper still: inside that attribute
    Conv2d(3, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    ==============================
    Continue inside that attribute until all of its layers have been visited
    ReLU()
    ==============================
    MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    ==============================
    Once that attribute is exhausted, move on to the next attribute
    Sequential(
      (dense1): Linear(in_features=288, out_features=128, bias=True)
      (relu2): ReLU()
      (dense2): Linear(in_features=128, out_features=10, bias=True)
    )
    ==============================
    Linear(in_features=288, out_features=128, bias=True)
    ==============================
    ReLU()
    ==============================
    Linear(in_features=128, out_features=10, bias=True)
    ==============================
    =============divider=================
    ('', Net4(
      (conv): Sequential(
        (conv1): Conv2d(3, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (relu1): ReLU()
        (pool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      )
      (dense): Sequential(
        (dense1): Linear(in_features=288, out_features=128, bias=True)
        (relu2): ReLU()
        (dense2): Linear(in_features=128, out_features=10, bias=True)
      )
    ))
    ==============================
    ('conv', Sequential(
      (conv1): Conv2d(3, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (relu1): ReLU()
      (pool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    ))
    ==============================
    ('conv.conv1', Conv2d(3, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)))
    ==============================
    ('conv.relu1', ReLU())
    ==============================
    ('conv.pool1', MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False))
    ==============================
    ('dense', Sequential(
      (dense1): Linear(in_features=288, out_features=128, bias=True)
      (relu2): ReLU()
      (dense2): Linear(in_features=128, out_features=10, bias=True)
    ))
    ==============================
    ('dense.dense1', Linear(in_features=288, out_features=128, bias=True))
    ==============================
    ('dense.relu2', ReLU())
    ==============================
    ('dense.dense2', Linear(in_features=128, out_features=10, bias=True))
    ==============================
    '''
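For comparison, here is a minimal sketch of what `children()` and `named_children()` return on the same kind of nested model. `NestedNet` is a hypothetical copy of `Net4` introduced only for this example; the names collected from `named_modules()` should match the output listed above.

```python
from collections import OrderedDict

from torch import nn


class NestedNet(nn.Module):
    """Hypothetical copy of Net4: two Sequential containers of three layers."""

    def __init__(self):
        super().__init__()
        self.conv = nn.Sequential(OrderedDict([
            ("conv1", nn.Conv2d(3, 32, 3, 1, 1)),
            ("relu1", nn.ReLU()),
            ("pool1", nn.MaxPool2d(2)),
        ]))
        self.dense = nn.Sequential(OrderedDict([
            ("dense1", nn.Linear(32 * 3 * 3, 128)),
            ("relu2", nn.ReLU()),
            ("dense2", nn.Linear(128, 10)),
        ]))

    def forward(self, x):
        conv_out = self.conv(x)
        return self.dense(conv_out.view(conv_out.size(0), -1))


model = NestedNet()

# children() stops at the two outer containers.
child_names = [name for name, _ in model.named_children()]
print(child_names)  # ['conv', 'dense']

# modules() also descends into each container and includes the model itself.
module_names = [name for name, _ in model.named_modules()]
print(module_names)

print(len(list(model.children())), len(list(model.modules())))  # 2 9
```

To go one level deeper with `children()`, call it again on a child, for example `model.conv.children()`.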

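Copyright notice: This is an original article by hxxjxw, licensed under CC 4.0 BY-SA. Reproductions must include a link to the original source and this notice.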