Contents

-

Week1

-

1 Setting up your Machine Learning Application

-

2 Regularizing your Neural Network

-

3 Setting Up your Optimization Problem

-

Week2

-

1 Optimization Algorithms

-

-

1.1 Mini-batch Gradient Descent

-

1.2 Understanding Mini-batch Gradient Descent

-

1.3 Exponentially Weighted Moving Averages

-

1.4 Understanding Exponentially Weighted Averages

-

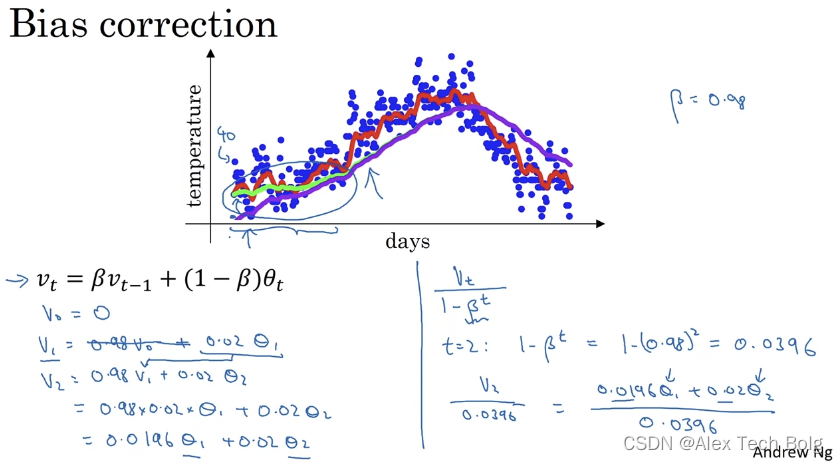

1.5 Bias Correction in Exponentially Weighted Averages

-

1.6 Gradient Descent with Momentum

-

1.7 RMSprop

-

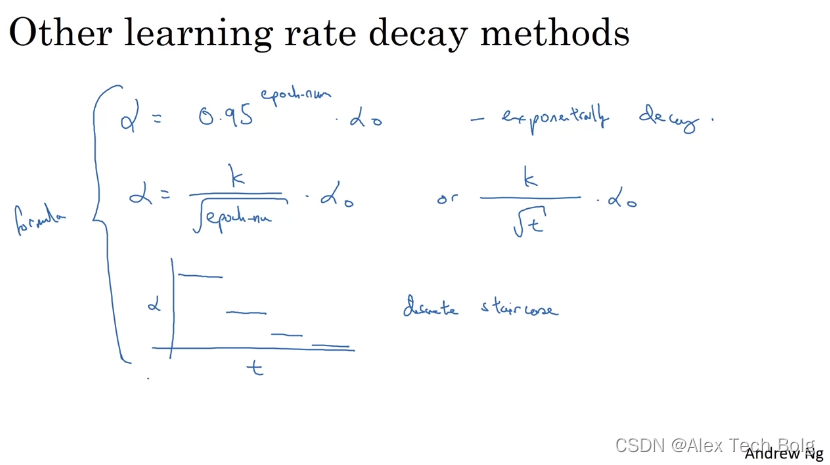

1.8 Adam Optimization Algorithm

-

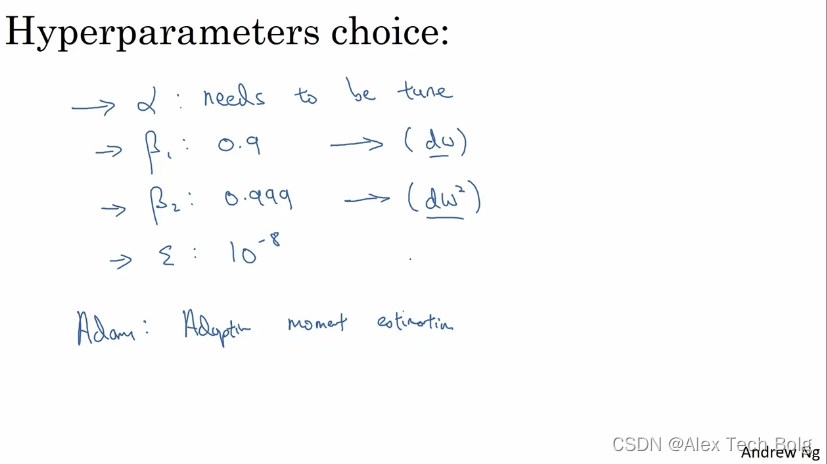

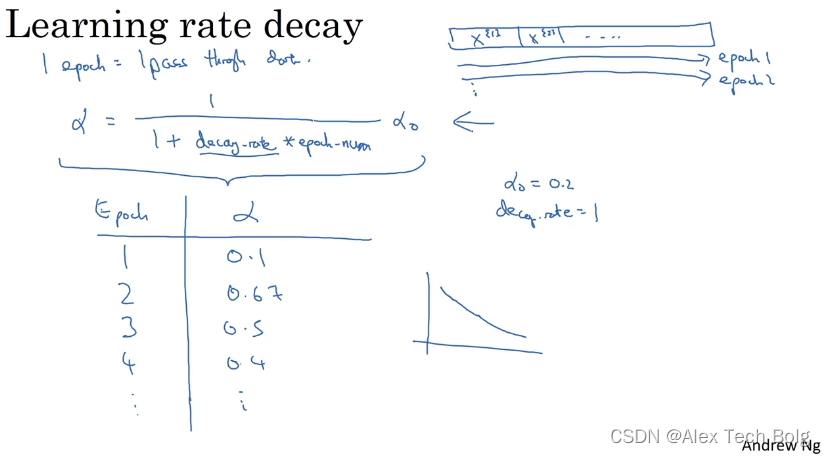

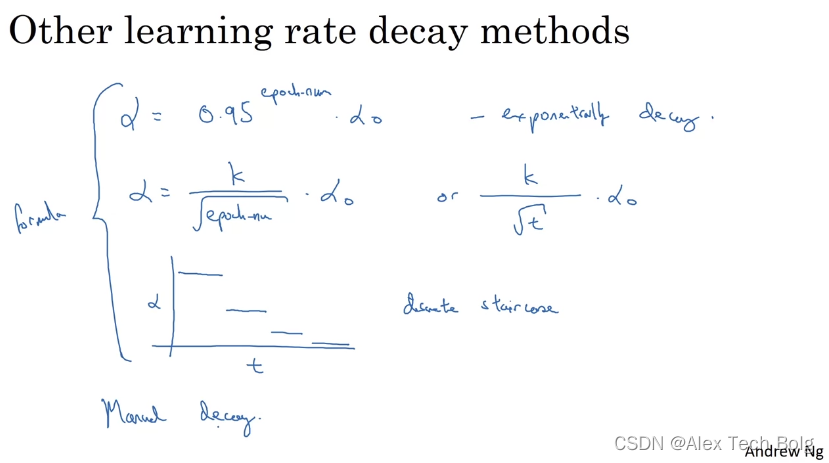

1.9 Learning Rate Decay

-

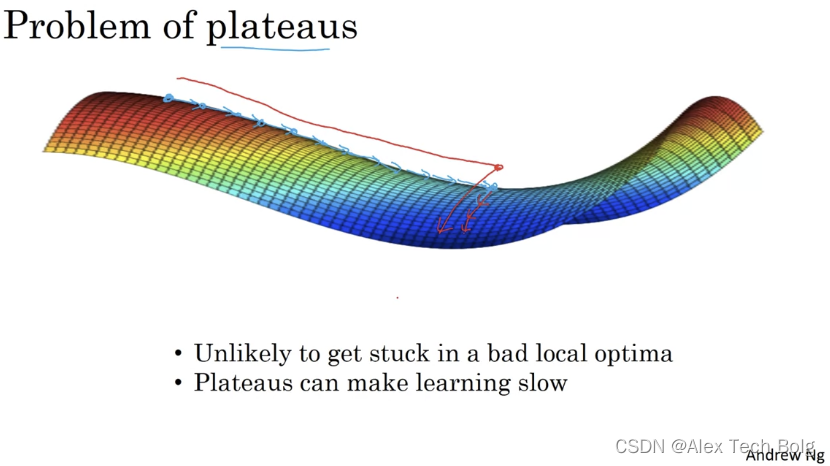

1.10 The Problem of Local Optima

-

-

Week 3

-

1 Parameter Tuning

-

2 Batch normalization

-

3 Multi-class Classification

-

4 Introduction to Programming Frameworks

-

5 Homework

-

Reference

Week1

1 Setting up your Machine Learning Application

1.1 Train/Dev/Test sets

- Train and dev set don’t need to be 70% – 30%. (Dev set just needs to be big enough for us to evaluate. )

- Make sure dev and test set come from same distribution. (Deep learning model needs to be fed lots of data to train, so sometime we need web crawler to get more data, which comes from different distribution. This rule of thumb can make that the progress in machine learning algorithm will be faster. )

- If you don’t need an unbiased estimate of performance, it’s fine to only have train and dev set.

1.2 Bias / Variance

- optimal (Bayes) error

- Compare the error on train and dev set with the optimal (Bayes) error to diagnose whether the model has high bias or high variance or both or neither.

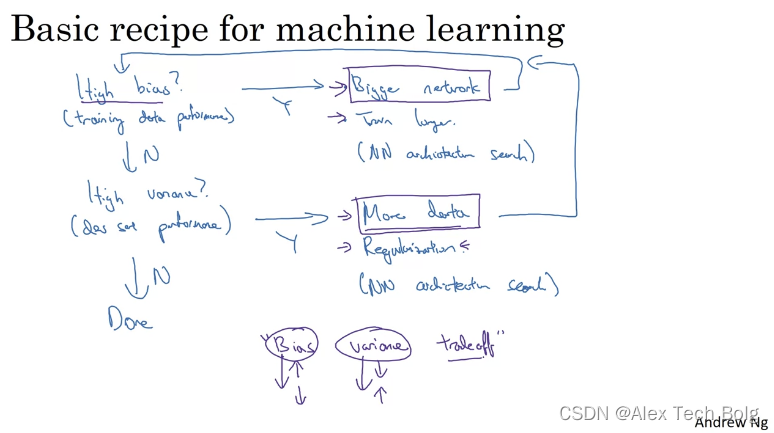

1.3 Basic Recipe for Machine Learning

2 Regularizing your Neural Network

2.1 Regularization

-

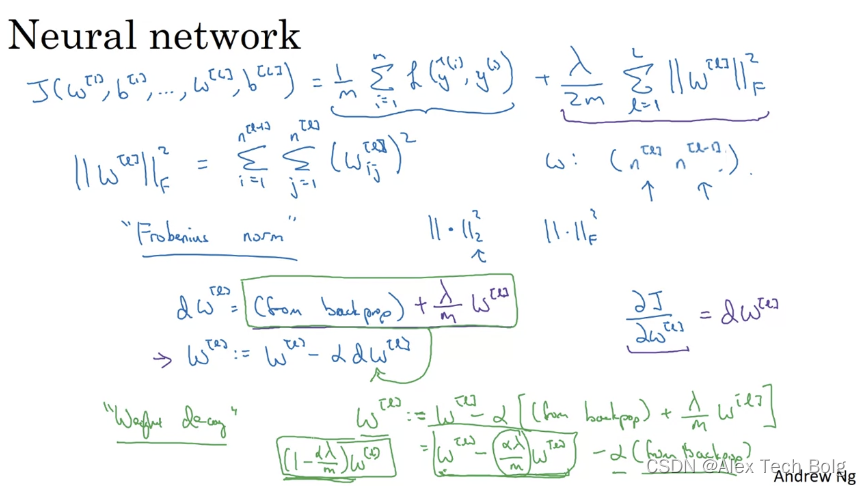

L2 regularization (Frobenius norm) – also called

weight decay

- L1 regularization

2.2 Why Regularization Reduces Overfitting?

-

第一种直观的解释:把

λ\lambda

λ

设置成一个非常大的数值后,很多参数w变成0,这样模型就从一个复杂的神经网络变成了更简单的神经网络,极端情况可能变成了线形模型。 -

第二种解释:以tanh为例,增大

λ\lambda

λ

,w减小,z会被限制在一个较小的范围内,这段范围在tanh上,正好是近似线性的

2.3 Dropout Regularization

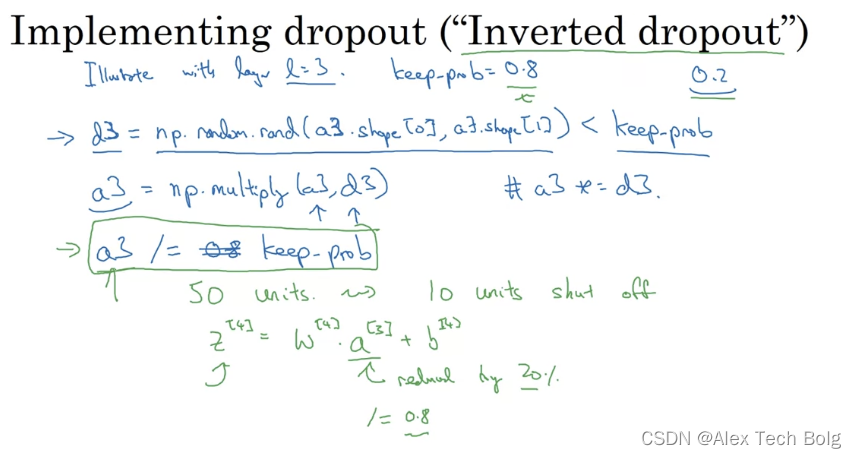

implement dropout (inverted dropout)

- Inverted dropout 是为了不改变 E(Z),这样在预测的时候,就不会再有scaling的问题

- 在每一次iteration,都有不同的notes被dropout设置为0

Making predictions at test time

- No dropout – dropout 会增加预测的噪音

2.4 Understanding Dropout

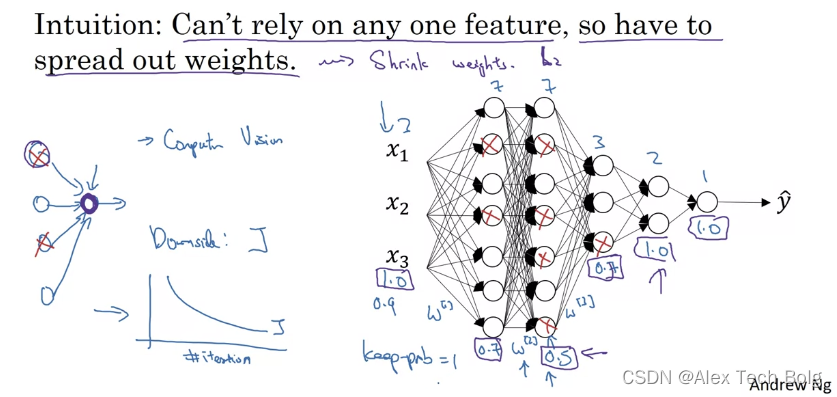

- Intuition:紫色的note不能依赖某一个input,因为每一个input都可能被随机eliminate,所以dropout可以spread out weights,从而起到了shrink weights的作用,因此和L2 normalization一样的效果。

- Downside:loss function J 难以计算,因为每一次dropout都是随机的,所以不能通过loss的图像来看模型训练得怎么样。常用的做法是先把dropout去掉,如果loss function曲线下降得很好,然后再把dropout加上,看最后结果的变化。

2.5 Other Regularization Methods

Data augmentation

Early stopping

3 Setting Up your Optimization Problem

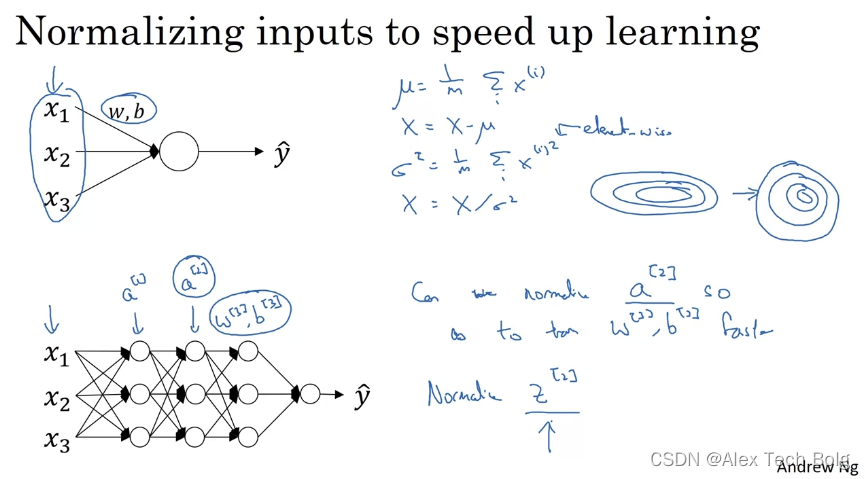

3.1 Normalizing Inputs

-

Normalize training data. Then use same

μ\mu

μ

and

σ\sigma

σ

to normalize test data because we want to guarantee the train and test data go through

same transformation

.

Why normalize inputs?

- Rough intuition:Your cost function will be in a more round and easier to optimize when your features are on similar scales.

- For dramatic difference of scales in features, it is important to normalize them. If your features come in on similar scales, then this step is less important although performing this normalization pretty much never does any harm.

3.2 Vanishing / Exploding Gradients

- If your activations or gradients increase or decrease exponentially as a function of L(number of layers), then these values could get really big or small. This will make training very difficult.

- Especially for exponentially small, the gradient descent will take tiny little steps, which will take a long time for gradient descent to learn anything.

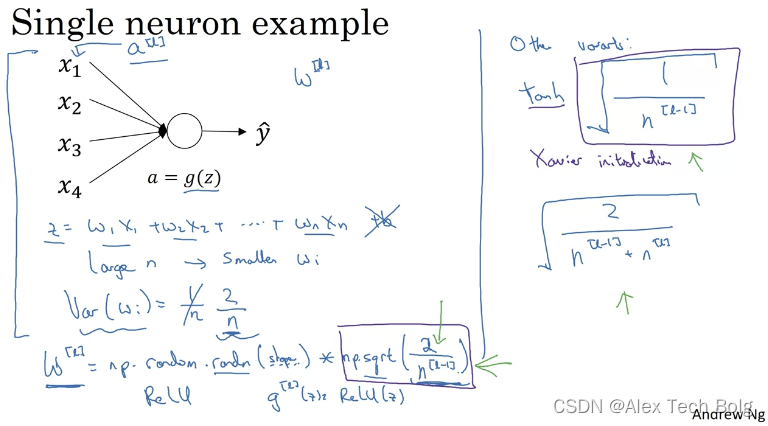

3.3 Weight Initialization for Deep Networks

- 如果输入的节点数量比较多,那么就需要每个w更小,因此 larger n –> smaller wi

-

通常使用

Va

r

(

w

i

)

=

1

n

Var(w_i) = \frac{1}{n}

Va

r

(

w

i

)

=

n

1

来进行约束。因为

输入的特征是均值为0,方差为1 的,所以这样能够尽量保证让

zz

z

也在同样的范围内

,这种方法没有解决梯度爆炸或消失的问题,但的确帮助减少了这类问题的出现(因为让

wi

w_i

w

i

不会大于1或小于1很多,所以不会很快出现梯度消失或爆炸) -

如果使用

Re

l

u

Relu

R

e

l

u

作为激活函数,那么就需要用

np

.

s

q

r

t

(

2

n

[

l

−

1

]

)

np.sqrt(\frac{2}{n^{[l-1]}})

n

p

.

s

q

r

t

(

n

[

l

−

1

]

2

)

来进行约束;其他激活函数也有对应的约束方式

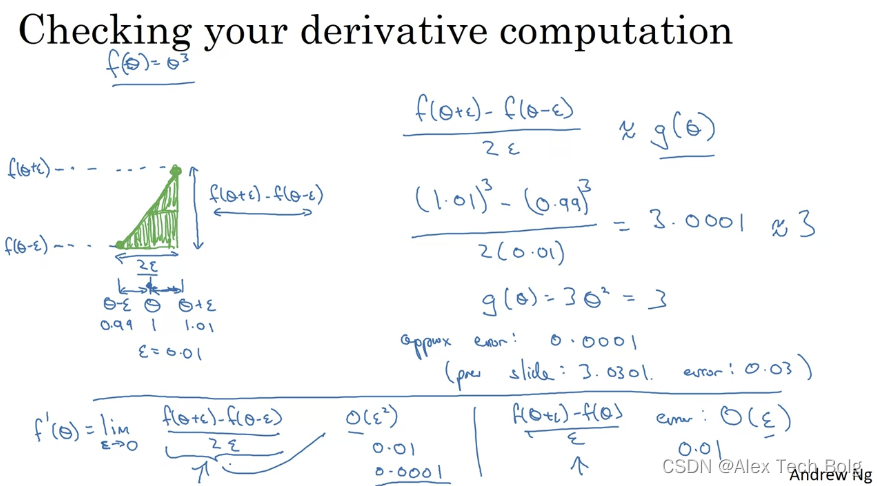

3.4 Numerical Approximation of Gradients

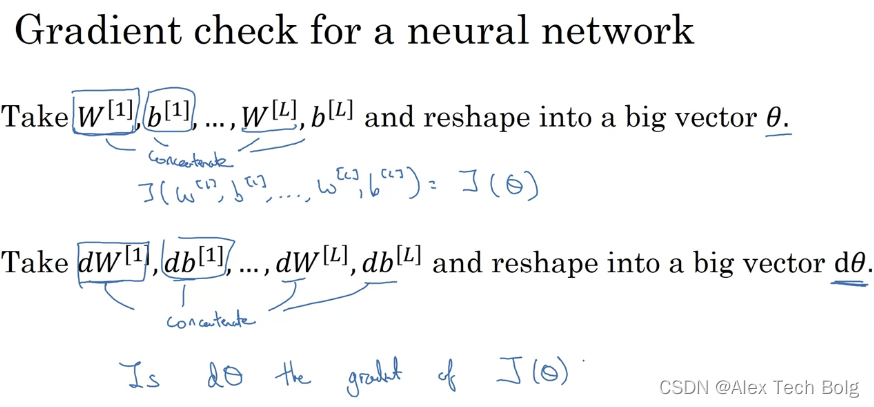

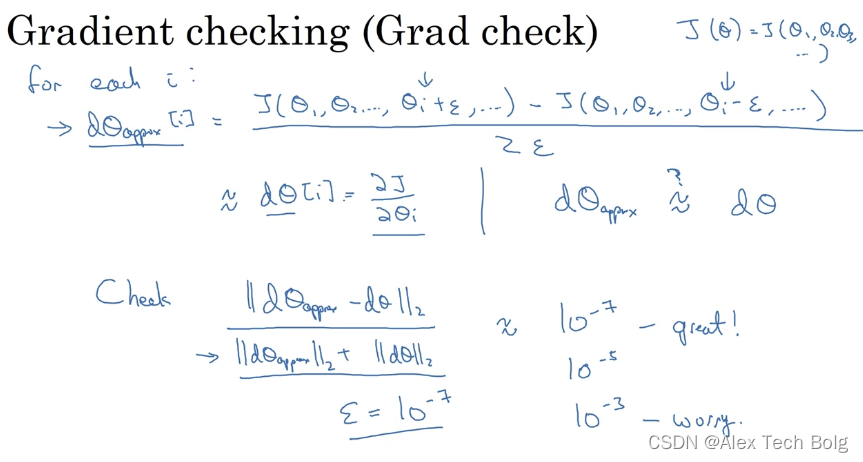

3.5 Gradient Checking

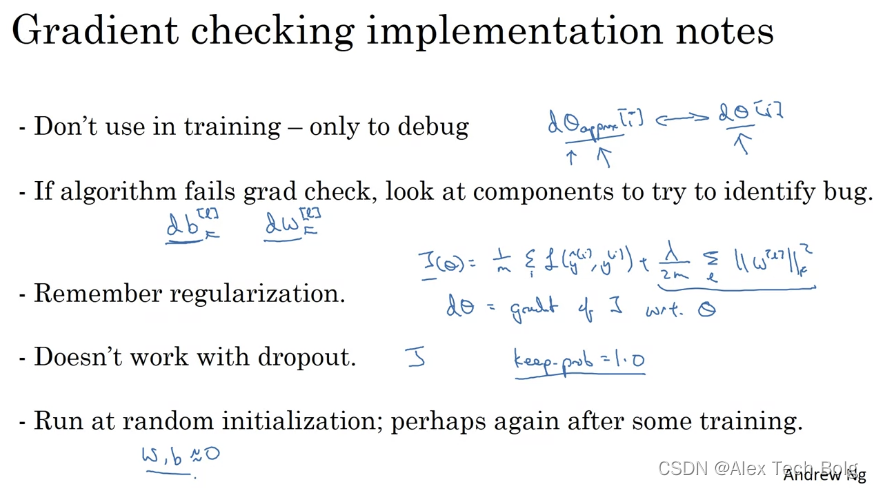

3.6 Gradient Checking Implementation Notes

Week2

1 Optimization Algorithms

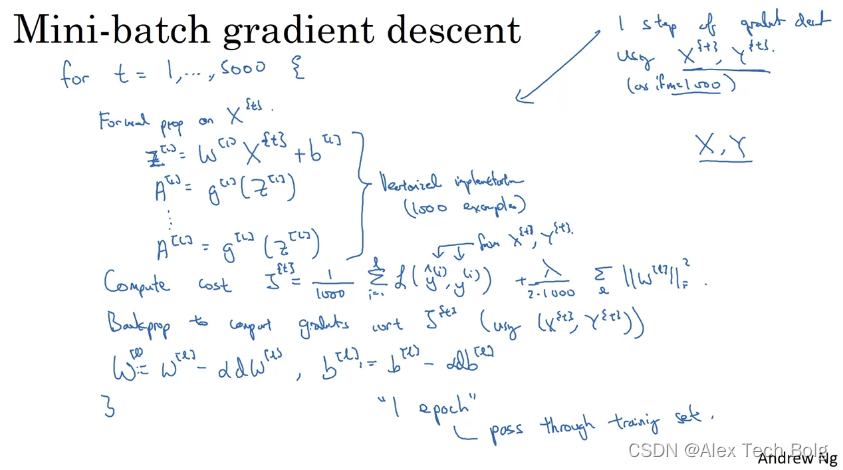

1.1 Mini-batch Gradient Descent

- epoch:pass through training set 全部的训练数据被用来训练模型;在batch gradient descent只能做一次梯度更新

1.2 Understanding Mini-batch Gradient Descent

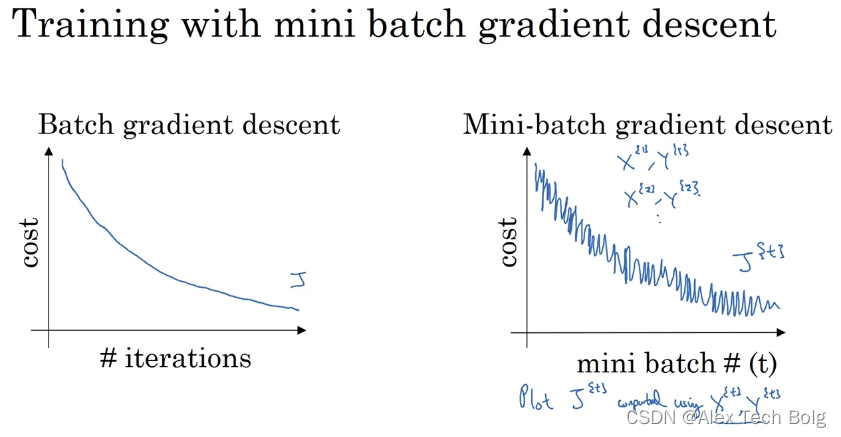

Training with mini batch gradient descent

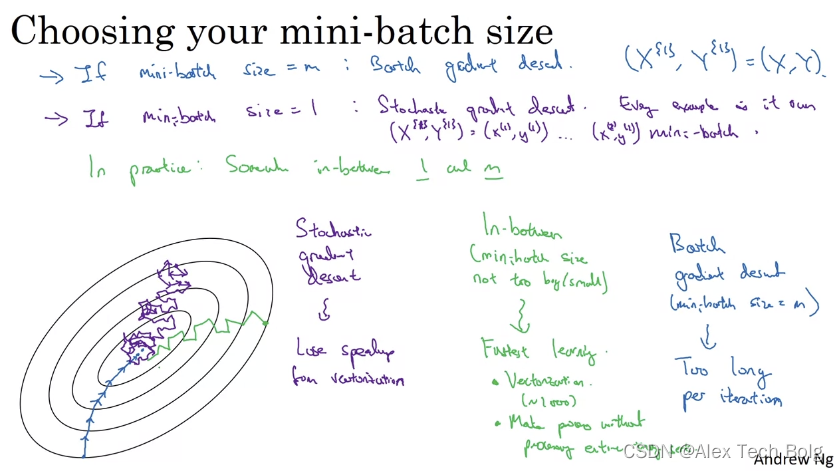

Choosing your mini-batch size

- mini-batch size = m:batch gradient descent(too long per iteration)

- In-between size not too big or small:fastest learning

- mini-batch size = 1:stochastic gradient descent(lose speedup from variation)

- If small training set: Use batch gradient descent

- Typical mini-batch sizes: 64, 128, 256, 512 (power of 2)

- Make sure mini-batch fit in CPU/GPU memory

1.3 Exponentially Weighted Moving Averages

V

t

=

β

V

t

−

1

+

(

1

−

β

)

θ

t

V_t = \beta V_{t-1} + (1 – \beta)\theta_t

V

t

=

β

V

t

−

1

+

(

1

−

β

)

θ

t

1.4 Understanding Exponentially Weighted Averages

1.5 Bias Correction in Exponentially Weighted Averages

-

因为初始化

V0

=

0

V_0 = 0

V

0

=

0

,所以在最开始的阶段,

V1

V_1

V

1

非常小,因此在估测初期进行bias correction,

Vt

1

−

β

t

\frac{V_t}{1 – \beta t}

1

−

βt

V

t

,当

tt

t

逐渐增大,

βt

\beta^t

β

t

接近于0,bias correction几乎没有影响

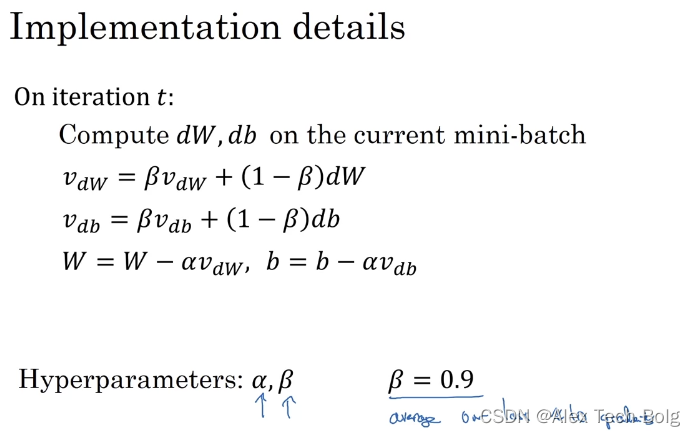

1.6 Gradient Descent with Momentum

-

Vd

w

V_{dw}

V

d

w

相当于速度 velocity,

dw

dw

d

w

相当于加速度accelerator -

想象你有一个碗,你拿一个球,微分项给了这个球一个加速度,此时球正向山下滚,球因为加速度越滚越快,而因为

β\beta

β

稍小于1,表现出一些摩擦力,所以球不会无限加速下去,所以不像梯度下降法,每一步都独立于之前的步骤,你的球可以向下滚,获得动量,可以从碗向下加速获得动量。 -

实际操作中,也有使用

Vd

w

=

β

V

d

w

+

d

w

V_{dw} = \beta V_{dw} + dw

V

d

w

=

β

V

d

w

+

d

w

,这种情况下,

α\alpha

α

也需要相应进行调整

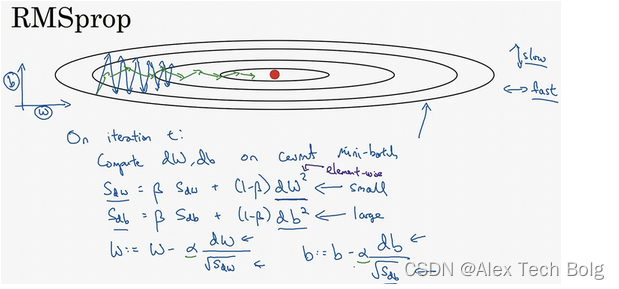

1.7 RMSprop

Root Mean Square prop

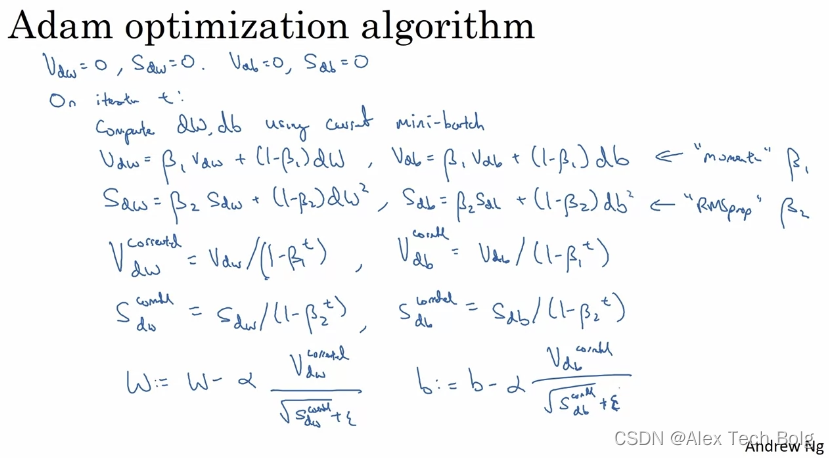

1.8 Adam Optimization Algorithm

1.9 Learning Rate Decay

1.10 The Problem of Local Optima

Week 3

1 Parameter Tuning

1.1 Tuning Process

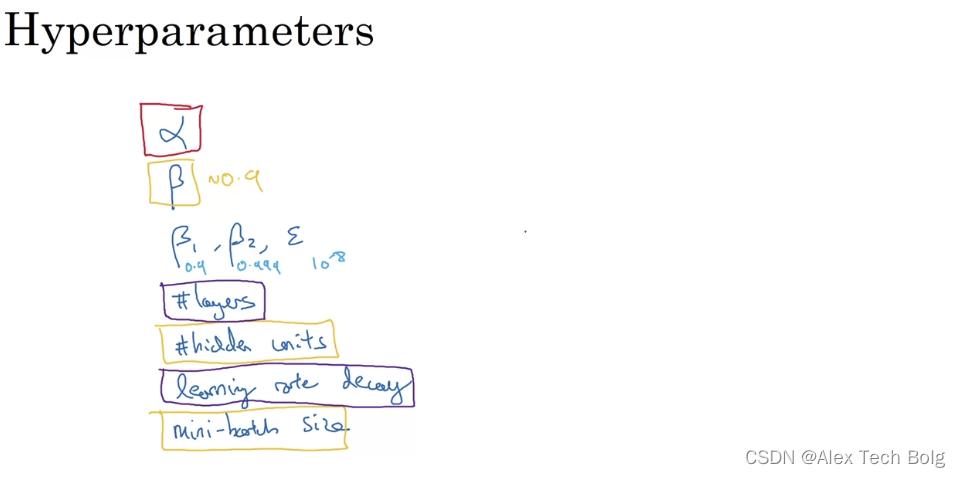

Hyperparameters

-

Learning rate

α\alpha

α

通常是最重要的需要调的参数 - 其次是hidden units,mini-batch size

- 再之后是layers的数量,learning rate decay

- Adam通常用默认的参数

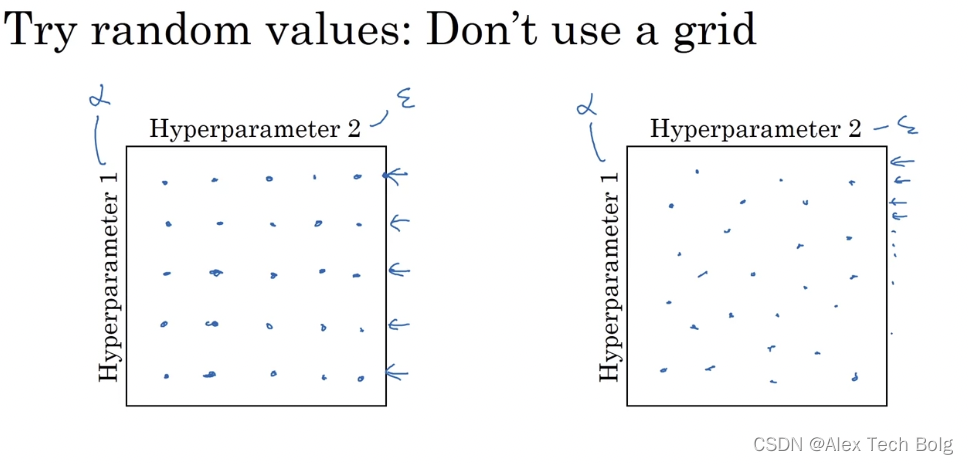

Try random values: Don’t use a grid

-

比如说,一个超参是学习率

α\alpha

α

,另一个是Adam的

ϵ\epsilon

ϵ

,那么得到的结果很可能就是,对于同样的不管

α\alpha

α

,怎么调

ϵ\epsilon

ϵ

,结果都一样。那么对于左图,我们实际上只得到了5个调参的结果,但是右图,random的调参就能让我们有25个不同的结果

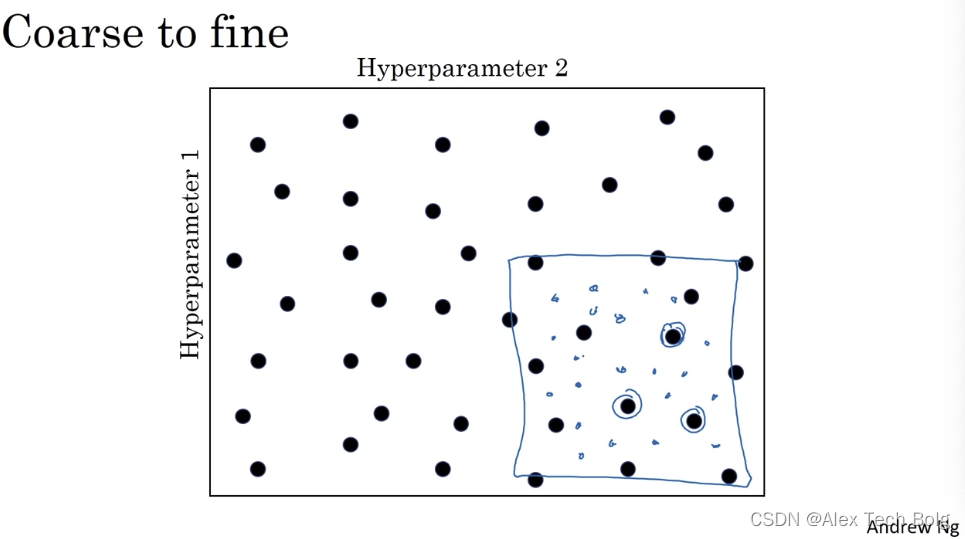

coarse to fine

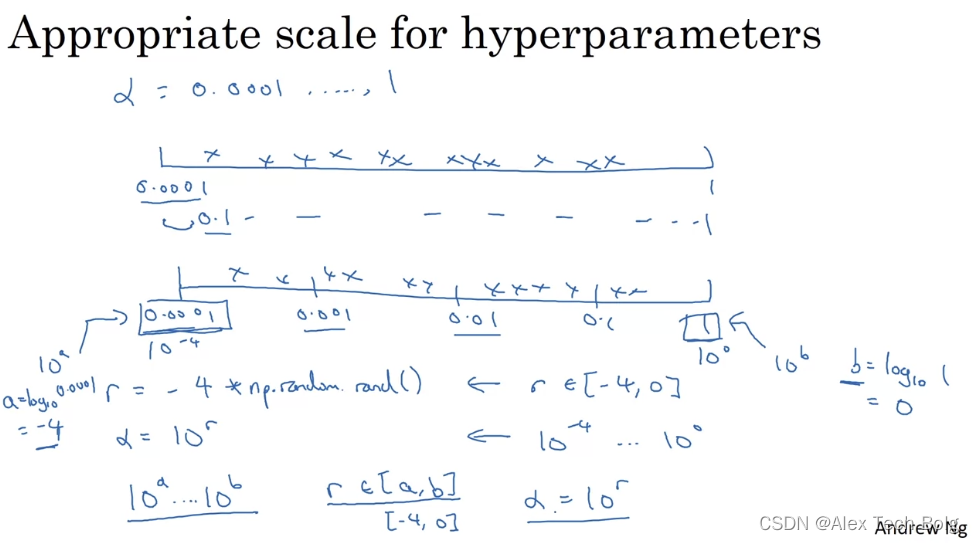

1.2 Using an Appropriate Scale to pick Hyperparameters

Picking hyperparameters at random

Appropriate scale for hyperparameters

- Log scale

Hyperparameters for exponentially weighted averages

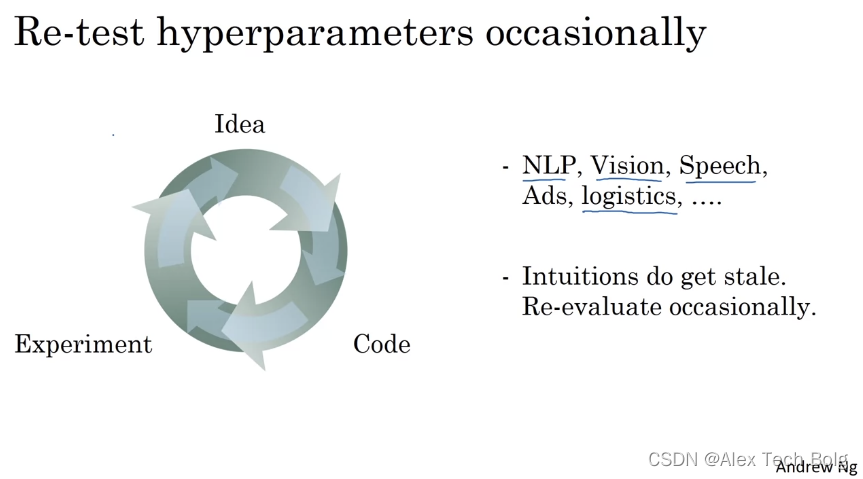

1.3 Hyperparameters Tuning in Practice: Pandas vs. Caviar

Re-test hyperparameters occasionally

- Even if you work on just one problem, you might have found a good setting for the hyperparameters and kept on developing your algorithm. Data gradually change over the course of several months, or maybe just servers are upgraded in your data center. Those changes can make the best setting of your hyperparameters get stale. So it is recommended maybe just retesting or re-evaluating your hyperparameters at least once every several months.

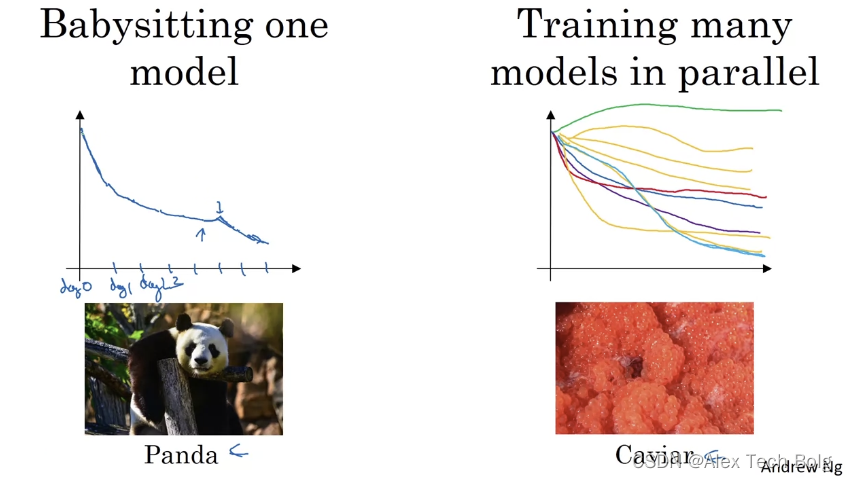

How to search hyperparameters?

- 一种是你照看一个模型,通常是有庞大的数据组,但没有许多计算资源或足够的CPU和GPU的前提下,基本而言,你只可以一次负担起试验一个模型或一小批模型,在这种情况下,即使当它在试验时,你也可以逐渐改良。

- 另一种方法则是同时试验多种模型,你设置了一些超参数,尽管让它自己运行,或者是一天甚至多天,然后你会获得像这样的学习曲线。用这种方式你可以试验许多不同的参数设定,然后只是最后快速选择工作效果最好的那个

2 Batch normalization

2.1 Normalizing Activations in a Network

-

通常是对

zz

z

(激活层之前)进行normalize

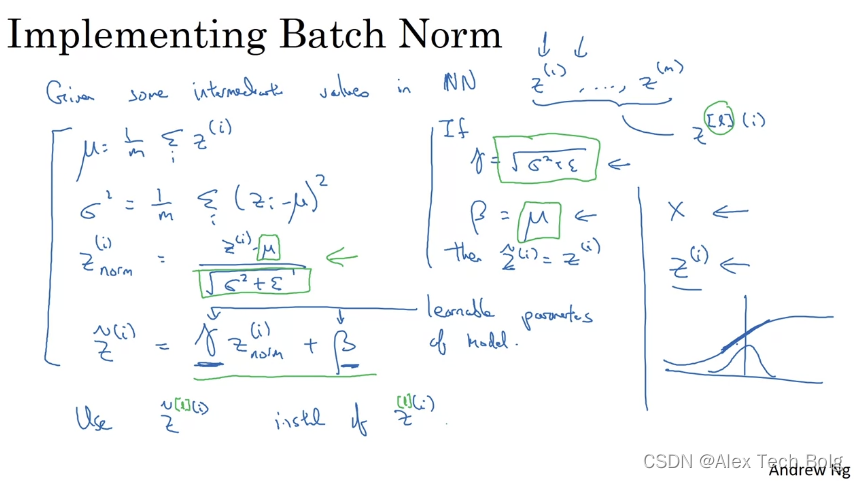

Implement Batch Norm

-

For numerical stability, add

ϵ\epsilon

ϵ

-

不想让隐藏层一直都是均值为0方差为1,所以加上了

γ\gamma

γ

和

β\beta

β

-

Batch normalization 使隐藏单元值的均值和方差标准化,即有固定的均值和方差,均值和方差可以是0和1,也可以是其它值,这些都是通过

γ\gamma

γ

和

β\beta

β

控制,而这两个参数是通过学习算法学到的

Batch normalization can make sure your hidden units has fixed standardized mean and variance, where standardized mean and variance are controlled by

γ\gamma

γ

and

β\beta

β

, and learning algorithms can set whatever it wants.

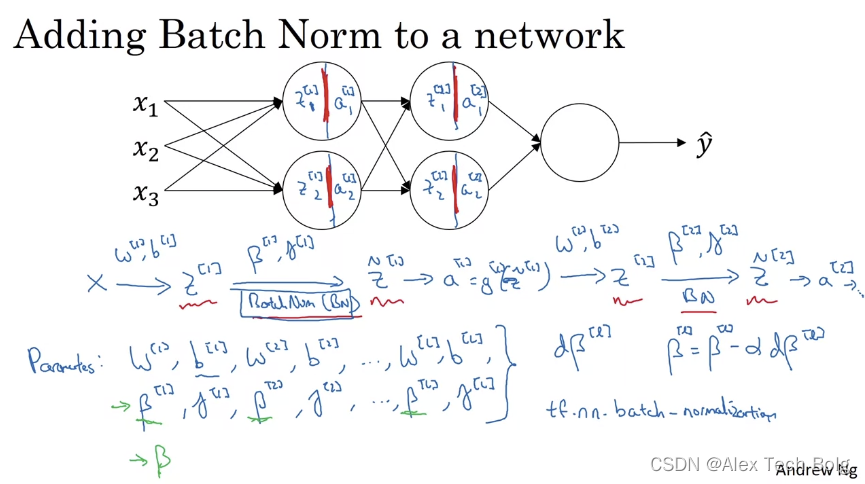

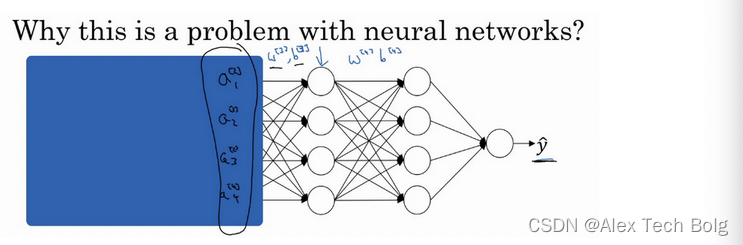

2.2 Fitting Batch Norm into a Neural Network

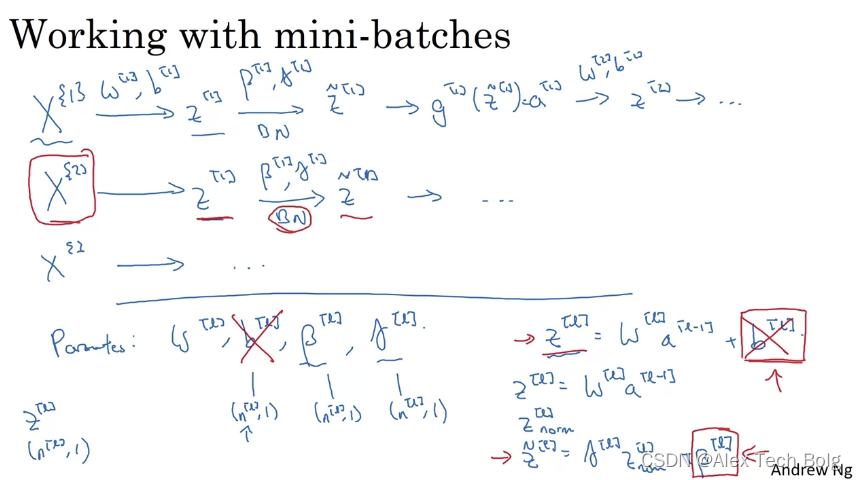

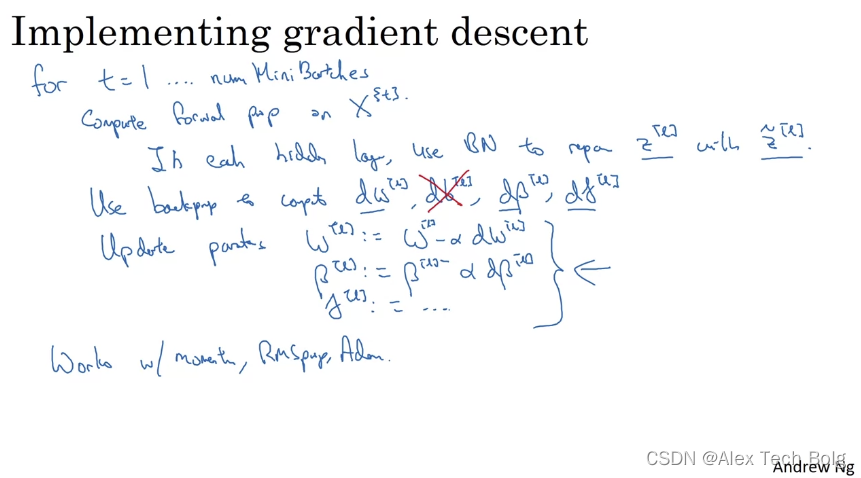

Working with mini-batches

-

因为计算完

Z[

l

]

Z^{[l]}

Z

[

l

]

之后,BN需要通过

γ\gamma

γ

和

β\beta

β

来normalize均值,所以说对所有的样本加上一个常数,和不加是一样的

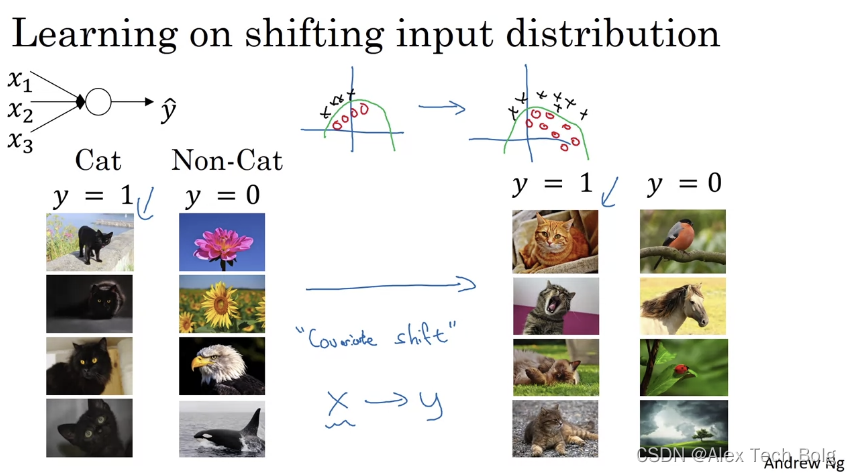

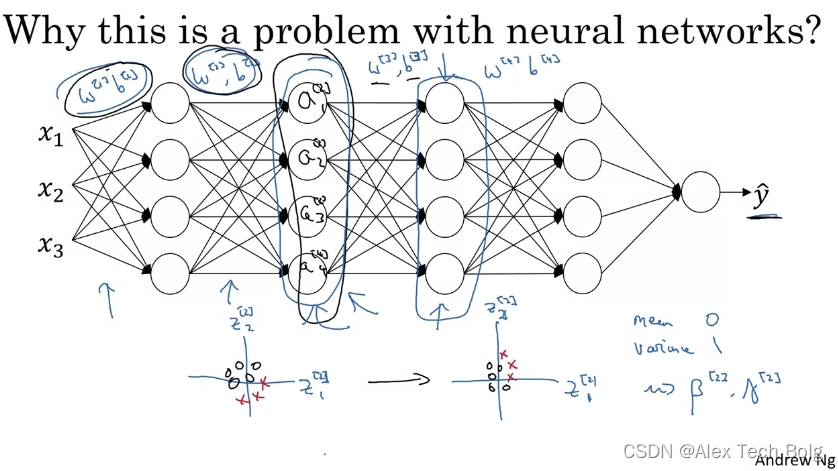

2.3 Why does Batch Norm work?

-

从第三隐藏层的角度来看,它得到一些值,

a1

[

2

]

a_1^{[2]}

a

1

[

2

]

,

a2

[

2

]

a_2^{[2]}

a

2

[

2

]

,

a3

[

2

]

a_3^{[2]}

a

3

[

2

]

,

a4

[

2

]

a_4^{[2]}

a

4

[

2

]

,第三层隐藏层的工作是找到一种方式,使这些值映射到

y^

\hat{y}

y

^

-

现在我们把网络的左边揭开,这个网络还有参数

w[

2

]

w^{[2]}

w

[

2

]

和

b[

2

]

b^{[2]}

b

[

2

]

,

w[

1

]

w^{[1]}

w

[

1

]

和

b[

1

]

b^{[1]}

b

[

1

]

,如果这些参数改变,这些

a[

2

]

a^{[2]}

a

[

2

]

的值也会改变。所以从第三层隐藏层的角度来看,这些隐藏单元的值在不断地改变,所以它就有了**“Covariate shift”**的问题,上张幻灯片中我们讲过的 -

What BM does is that it reduces the amount that the distribution of these hidden unit values shifts around.

-

Even the exact values of previous hidden units values change, the mean and variance will stay the same.

-

It weaken the coupling between what the early layers parameters has to do and what the later layers parameters have to do, so it allows each layer of the network to learn by itself.(independently of other layers)

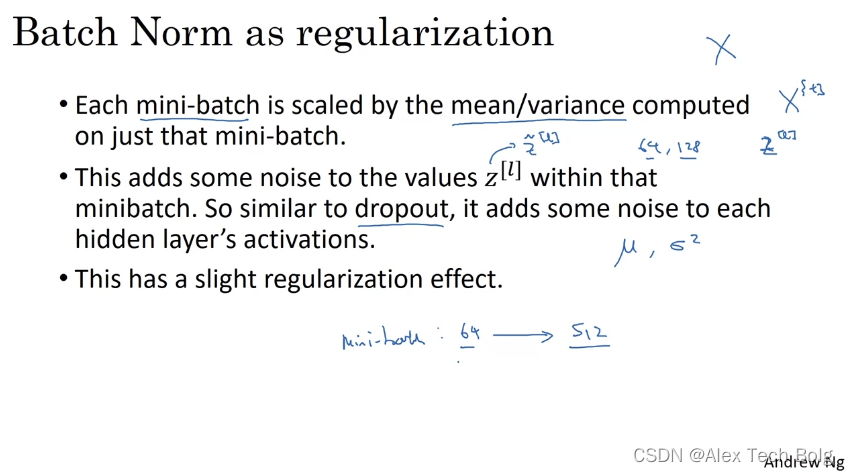

- By using a larger mini-batch size, you reduce the regularization effect.

- 尽管BN有regularization的效果,但是不要将BN作为regularization

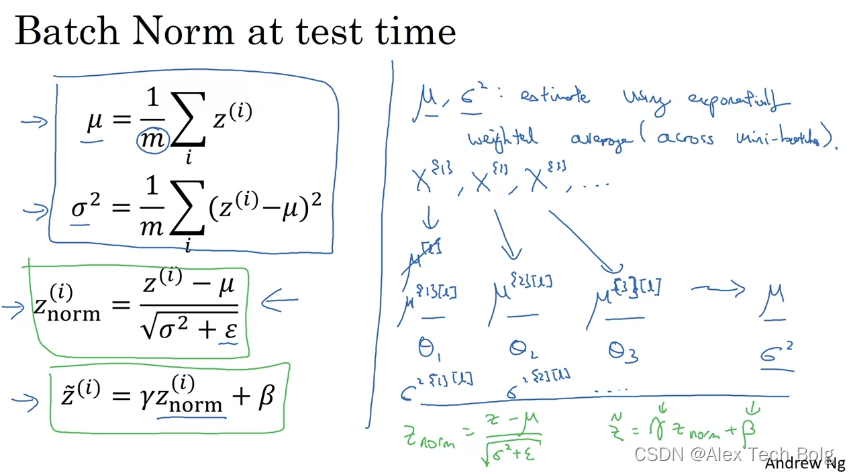

2.4 Batch Norm at Test Time

-

在做测试的时候,因为只有一个sample,没法计算

μ\mu

μ

和

σ\sigma

σ

,所以需要用train set的数据来确定

μ\mu

μ

和

σ\sigma

σ

。通常使用exponential weighted average来确定

μ\mu

μ

和

σ\sigma

σ

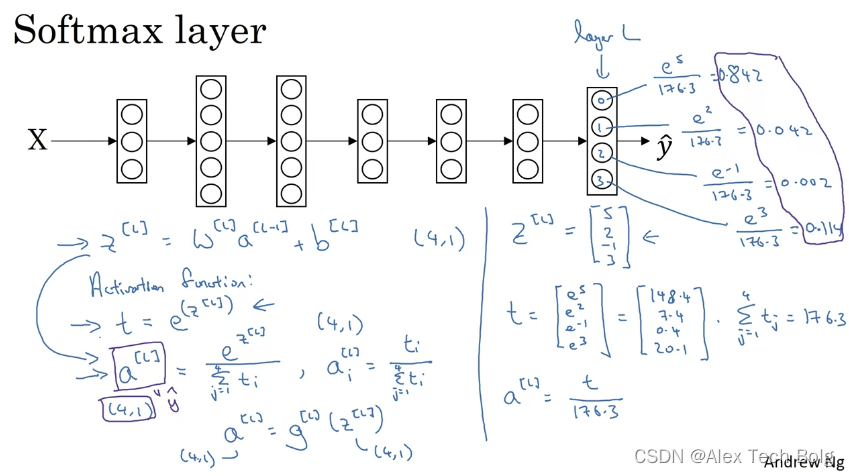

3 Multi-class Classification

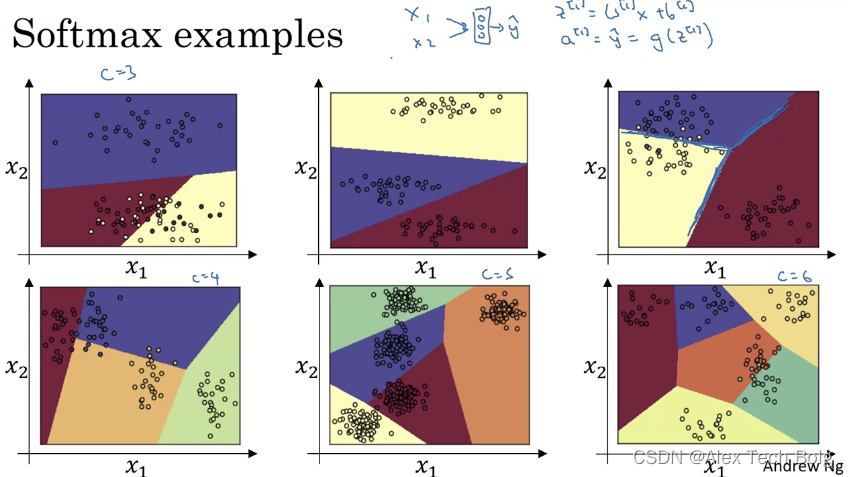

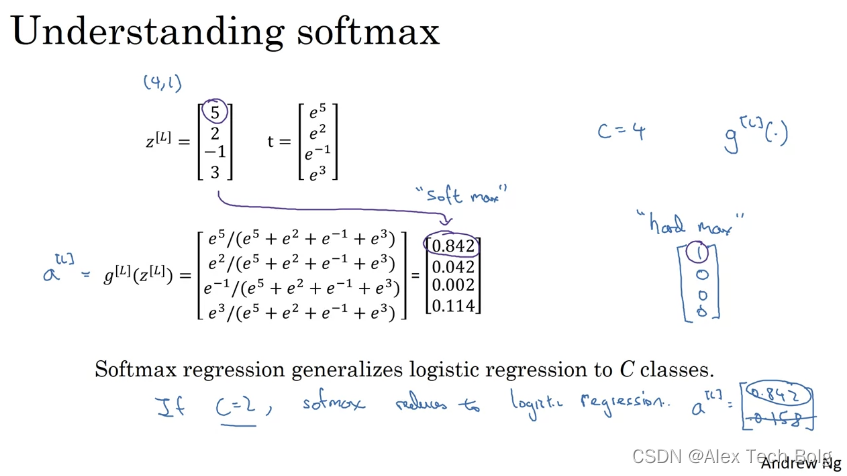

3.1 Softmax Regression

- (没有加hidden layer)softmax是线性的决策边界 linear decision boundary

3.2 Training a Softmax Classifier

- softmax regression generalizes logistic regression to C classes

- If C=2,softmax reduces to logistic regression.

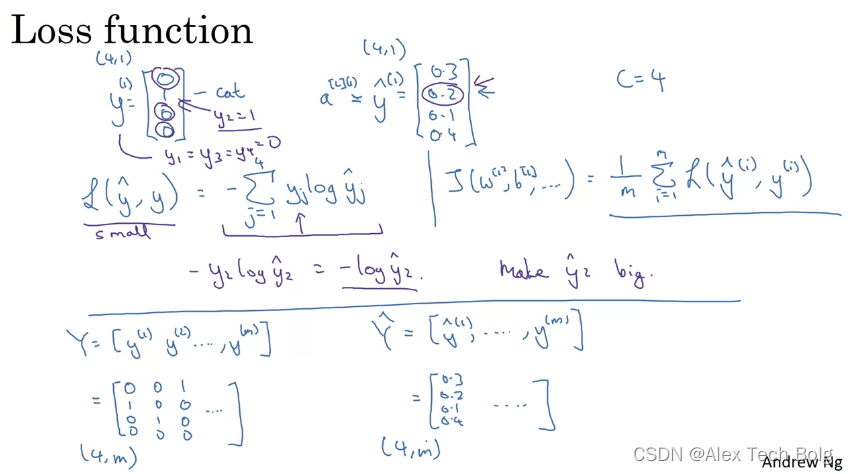

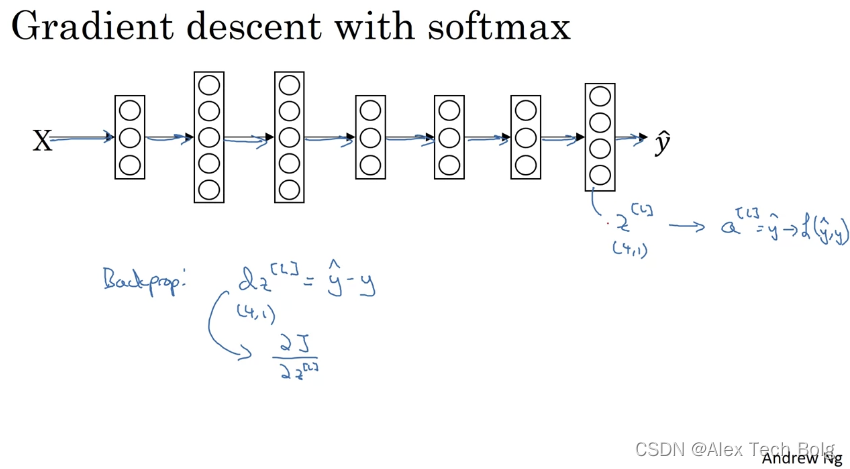

Loss function

-

求导之后,梯度正好是

dz

[

L

]

=

y

^

−

y

dz^{[L]} = \hat{y} – y

d

z

[

L

]

=

y

^

−

y

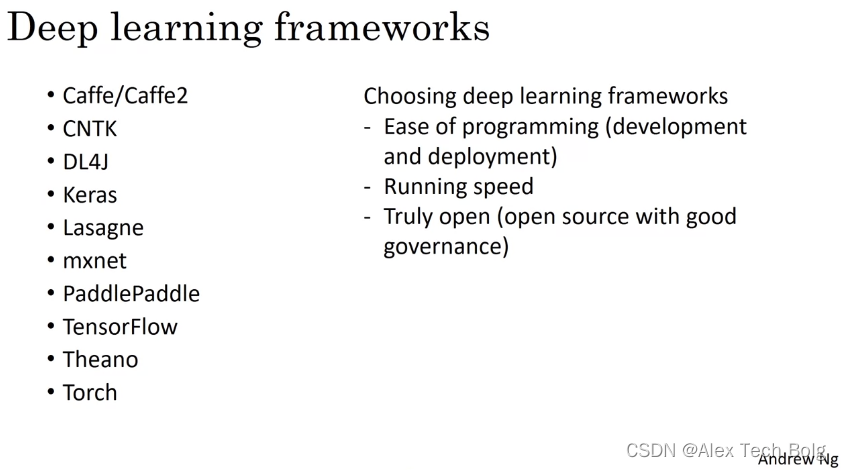

4 Introduction to Programming Frameworks

4.1 Deep Learning Frameworks

4.2 Tensorflow

5 Homework

作业中的一个地方

tf.keras.losses.categorical_crossentropy(tf.transpose(labels), tf.transpose(logits), from_logits=True)

-

注意这里需要使用

from_logits=True

-

如果使用了softmax,就是False;如果没有就用True,

from_logits

可以获得数值稳定性numerical stability

作业答案参考

Reference