k8s_day03_04

For a Service to be associated with the right backend Pods, it needs a suitable label selector. A label selector works by matching the labels on the objects being selected.

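Service types

1. ClusterIP:

Exposes the Service on a cluster-internal IP address. This address is visible and reachable only inside the cluster, and clients outside the cluster cannot access it.

This is the default type.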

e.g., create a Service:

[root@master01 chapter7]# cat services-clusterip-demo.yaml
# Maintainer: MageEdu <mage@magedu.com>
# URL: http://www.magedu.com
---
kind: Service
apiVersion: v1
metadata:
  name: demoapp-svc
  namespace: default
spec:
  clusterIP: 10.97.72.1
  selector:
    app: demoapp
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80

Inspect the Service:

[root@master01 chapter7]# kubectl get svc/demoapp-svc
NAME          TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
demoapp-svc   ClusterIP   10.97.72.1   <none>        80/TCP    100s
[root@master01 chapter7]# kubectl describe svc/demoapp-svc
Name:              demoapp-svc
Namespace:         default
Labels:            <none>
Annotations:       <none>
Selector:          app=demoapp
Type:              ClusterIP
IP Families:       <none>
IP:                10.97.72.1
IPs:               <none>
Port:              http  80/TCP
TargetPort:        80/TCP
Endpoints:         10.244.4.8:80,10.244.4.9:80,10.244.5.9:80
Session Affinity:  None
Events:            <none>
[root@master01 chapter7]#

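The Endpoints list matches the IPs of the Pods we select ourselves using the same label. The describe output above and the Pod listing below were apparently captured at different times, which is why their addresses differ. The Service does not attach the Pods chosen by its label selector directly. That attachment happens through the Endpoints object:

[root@node01 ~]# kubectl get po -l app=demoapp -o wide | awk '{print $6}'
IP
10.244.5.15
10.244.5.16
10.244.5.17

This works because creating a Service that has a selector automatically creates an Endpoints resource with the same name. That Endpoints object holds these 3 endpoints, and the Service uses them. Nobody writes the Endpoints object by hand. The endpoints controller in kube-controller-manager creates it for the Service and keeps it in sync with the matching backend Pods.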

e.g., when the endpoint addresses change, the Service picks up the change automatically:

[root@node01 ~]# kubectl scale deployment demoapp --replicas=2
deployment.apps/demoapp scaled
[root@node01 ~]# kubectl describe svc/demoapp-svc
Name:              demoapp-svc
Namespace:         default
Labels:            <none>
Annotations:       <none>
Selector:          app=demoapp
Type:              ClusterIP
IP Families:       <none>
IP:                10.97.72.1
IPs:               <none>
Port:              http  80/TCP
TargetPort:        80/TCP
Endpoints:         10.244.5.15:80,10.244.5.16:80
Session Affinity:  None
Events:            <none>

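The Service spreads requests across the backend Pods. In its default iptables mode, kube-proxy picks a backend at random for each new connection rather than rotating through them strictly. IPVS mode, by contrast, defaults to round-robin. As a result, the load evens out only over a large enough number of requests:

[root@node01 ~]# curl 10.97.72.1
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.1, ServerName: demoapp-5f7d8f9847-hwxrb, ServerIP: 10.244.5.16!
[root@node01 ~]# curl 10.97.72.1
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.1, ServerName: demoapp-5f7d8f9847-vbwg9, ServerIP: 10.244.5.17!
[root@node01 ~]# curl 10.97.72.1
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.1, ServerName: demoapp-5f7d8f9847-cz22l, ServerIP: 10.244.5.15!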
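Here is why the spread is only statistical. In iptables mode, kube-proxy chains one rule per endpoint. Rule i (counting from 0) matches with probability 1/(n-i), and the last rule always matches, so each endpoint ends up with an overall probability of 1/n. The Python sketch below simulates that rule chain. It models the behaviour and is not kube-proxy code; `pick_endpoint` is a hypothetical name. The endpoint addresses are the three from the output above.

```python
import random
from collections import Counter

def pick_endpoint(endpoints, rng):
    """Mimic an iptables-style rule chain: rule i matches with probability
    1/(n - i); the last rule always matches, so every endpoint gets 1/n overall."""
    n = len(endpoints)
    for i, ep in enumerate(endpoints[:-1]):
        if rng.random() < 1.0 / (n - i):
            return ep
    return endpoints[-1]

endpoints = ["10.244.5.15:80", "10.244.5.16:80", "10.244.5.17:80"]
rng = random.Random(42)  # fixed seed so the run is reproducible
counts = Counter(pick_endpoint(endpoints, rng) for _ in range(30000))
for ep in endpoints:
    print(ep, counts[ep])  # each count lands close to 10000
```

Over 30000 picks each count comes out near one third. A short run of curl requests can still look uneven, as in the captures in these notes.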

2. NodePort:

NodePort extends ClusterIP. It keeps all of the ClusterIP functionality and also opens the same port number on every node, which brings external traffic into the Service.

[root@node01 chapter7]# kubectl apply -f services-nodeport-demo.yaml
service/demoapp-nodeport-svc created
[root@node01 chapter7]# cat services-nodeport-demo.yaml
# Maintainer: MageEdu <mage@magedu.com>
# URL: http://www.magedu.com
---
kind: Service
apiVersion: v1
metadata:
  name: demoapp-nodeport-svc
spec:
  type: NodePort
  clusterIP: 10.97.56.1
  selector:
    app: demoapp
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 31398
  # externalTrafficPolicy: Local

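It is advisable to keep port and targetPort the same in ports. You can omit nodePort, in which case one is allocated automatically. The main reason to leave it out is to avoid port conflicts.

In-cluster access path: Client -> ClusterIP:ServicePort -> PodIP:targetPort

Out-of-cluster access path: Client -> NodeIP:NodePort -> PodIP:targetPort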
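If you leave nodePort out, the API server allocates a free port from the node-port range. That range defaults to 30000-32767 and can be changed with the kube-apiserver flag `--service-node-port-range`. The sketch below only models the idea: it rejects an explicit port that is out of range or already used, and otherwise picks a random free port. `allocate_node_port` and the `used` set are hypothetical, and this is not the API server's actual allocator.

```python
import random

NODE_PORT_MIN, NODE_PORT_MAX = 30000, 32767  # default --service-node-port-range

def allocate_node_port(used, requested=None, rng=random):
    """Validate an explicitly requested node port, or pick a free one at random."""
    if requested is not None:
        if not NODE_PORT_MIN <= requested <= NODE_PORT_MAX:
            raise ValueError(f"nodePort {requested} outside {NODE_PORT_MIN}-{NODE_PORT_MAX}")
        if requested in used:
            raise ValueError(f"nodePort {requested} already allocated")
        used.add(requested)
        return requested
    free = [p for p in range(NODE_PORT_MIN, NODE_PORT_MAX + 1) if p not in used]
    if not free:
        raise RuntimeError("node port range exhausted")
    port = rng.choice(free)
    used.add(port)
    return port

used = set()
print(allocate_node_port(used, requested=31398))  # 31398, as in the NodePort demo
print(allocate_node_port(used))                   # some other free port in range
```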

3. LoadBalancer:

LoadBalancer extends NodePort. It relies on the LBaaS offering of the underlying IaaS cloud to create and manage the load balancer on demand.

As with NodePort, you can leave out nodePort and one is allocated automatically. You cannot choose loadBalancerIP freely: whether you can specify it depends on whether the IaaS provider supports setting it manually. Without an IaaS provider there is no LBaaS either, and the Service effectively behaves like a NodePort Service.

[root@node01 chapter7]# cat services-loadbalancer-demo.yaml
kind: Service
apiVersion: v1
metadata:
  name: demoapp-loadbalancer-svc
spec:
  type: LoadBalancer
  selector:
    app: demoapp
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
  loadBalancerIP: 1.2.3.4
[root@node01 chapter7]# kubectl apply -f services-loadbalancer-demo.yaml
[root@node01 chapter7]# kubectl get svc/demoapp-loadbalancer-svc
NAME                       TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
demoapp-loadbalancer-svc   LoadBalancer   10.98.151.232   <pending>     80:31988/TCP   59s

Kubernetes allocated a node port (31988) automatically. Because there is no IaaS provider, EXTERNAL-IP stays <pending>.

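4. ExternalName:

This type is implemented through the cluster's KubeDNS. The Service name resolves to a CNAME record, and DNS then resolves that CNAME name to the IP address of the service outside the cluster. A Service of this type has neither a ClusterIP nor a NodePort.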
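Conceptually, the cluster DNS answers a query for an ExternalName Service's name with a CNAME pointing at `spec.externalName`. This toy Python model assumes the default cluster domain `cluster.local`. The Service `ext-db` and the target `db.example.com` are made-up names for illustration.

```python
CLUSTER_DOMAIN = "cluster.local"  # assumption: the default cluster domain

def service_fqdn(name, namespace):
    """FQDN a Service gets in cluster DNS: <name>.<namespace>.svc.<domain>."""
    return f"{name}.{namespace}.svc.{CLUSTER_DOMAIN}"

# Hypothetical ExternalName Service: no ClusterIP, no NodePort, only a CNAME target.
external_name_services = {
    service_fqdn("ext-db", "default"): "db.example.com",
}

def resolve_cname(qname):
    """Return the CNAME target for an ExternalName Service, or None if unknown."""
    return external_name_services.get(qname.rstrip("."))

print(resolve_cname("ext-db.default.svc.cluster.local."))  # db.example.com
```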

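Service resource format

Manifest

Service resource definition format (Service is a namespaced resource):

apiVersion: v1
kind: Service
metadata:
  name: …
  namespace: …
  labels:
    key1: value1
    key2: value2
spec:
  type <string>                   # Service type; defaults to ClusterIP
  selector <map[string]string>    # equality-based label selector; its entries are ANDed
  ports:                          # list of Service port objects
  - name <string>                 # port name
    protocol <string>             # protocol; only TCP, UDP and SCTP are supported; defaults to TCP
    port <integer>                # Service port number
    targetPort <string>           # port number or name of the backend process; a name must be defined in the Pod spec
    nodePort <integer>            # node port; only for NodePort and LoadBalancer types; by default in 30000-32767
  clusterIP <string>              # the Service's cluster IP; like nodePort, best left for the system to allocate
  externalTrafficPolicy <string>  # external traffic policy: Local = handled by the receiving node, Cluster = dispatched cluster-wide
  loadBalancerIP <string>         # IP address used by the external load balancer; only for LoadBalancer
  externalName <string>           # external service name, used as the Service's DNS CNAME value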
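The defaults and per-type restrictions above can be expressed as a small function over a Service spec held in a Python dict. This is a simplified sketch, not the API server's validation code. It fills in `type` and `protocol`. It defaults `targetPort` to the value of `port`, which is what Kubernetes does when targetPort is omitted. It also rejects `nodePort` on types other than NodePort and LoadBalancer. `apply_service_defaults` is a hypothetical name.

```python
VALID_TYPES = {"ClusterIP", "NodePort", "LoadBalancer", "ExternalName"}
VALID_PROTOCOLS = {"TCP", "UDP", "SCTP"}

def apply_service_defaults(spec):
    """Return a copy of a Service spec with defaults filled in (simplified rules)."""
    spec = dict(spec)
    spec.setdefault("type", "ClusterIP")              # default Service type
    if spec["type"] not in VALID_TYPES:
        raise ValueError(f"unknown type {spec['type']!r}")
    ports = []
    for port in spec.get("ports", []):
        port = dict(port)
        port.setdefault("protocol", "TCP")            # default protocol
        if port["protocol"] not in VALID_PROTOCOLS:
            raise ValueError(f"unsupported protocol {port['protocol']!r}")
        port.setdefault("targetPort", port["port"])   # targetPort defaults to port
        if "nodePort" in port and spec["type"] not in ("NodePort", "LoadBalancer"):
            raise ValueError("nodePort is only valid for NodePort and LoadBalancer")
        ports.append(port)
    spec["ports"] = ports
    return spec

svc = apply_service_defaults({"selector": {"app": "demoapp"},
                              "ports": [{"name": "http", "port": 80}]})
print(svc["type"], svc["ports"][0])
# ClusterIP {'name': 'http', 'port': 80, 'protocol': 'TCP', 'targetPort': 80}
```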

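selector: entries are ANDed

If you specify two or more key/value pairs, a Pod must satisfy all of them. The Service needs the selector to pick up Pods automatically. A Service can also be defined without a selector, but then its Endpoints must be created by hand (see the section on manually created Endpoints below).

clusterIP: best left for Kubernetes to assign dynamically from the Service CIDR (10.96.0.0/12 here). You can also set it explicitly.

ServicePort: corresponds to the backend Pods and is mapped to the port that the application in the Pod listens on. If a backend Pod has several ports and you want to expose each one through the Service, you must define each one separately.

The request is ultimately received at PodIP:containerPort.

In Kubernetes, data of the form k1: v1, k2: v2 has the type map[string]string.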

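Labels

Definition

A label key usually consists of a key prefix and a key name, in the form KEY_PREFIX/KEY_NAME. The prefix is optional.

- Key name: at most 63 characters. It may contain letters, digits, hyphens (-), underscores (_) and dots (.), and must begin and end with a letter or digit.
- Key prefix: must be a DNS subdomain of no more than 253 characters.

A key without a prefix is treated as private to the user. Kubernetes system components or third-party components that add keys to user resources automatically must use a prefix. The kubernetes.io/ and k8s.io/ prefixes are reserved for Kubernetes core components. Examples are the kubernetes.io/os, kubernetes.io/arch and kubernetes.io/hostname labels commonly found on Node objects.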
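The key rules above translate fairly directly into regular expressions. This minimal Python sketch assumes the prefix must be a DNS-1123 subdomain (lowercase letters, digits, '-' and '.'). `is_valid_label_key` is a hypothetical helper, not part of any Kubernetes client library.

```python
import re

# Key name: letter/digit at both ends; '-', '_' and '.' allowed in between.
NAME_RE = re.compile(r"([A-Za-z0-9][-A-Za-z0-9_.]*)?[A-Za-z0-9]")
# Key prefix: a DNS-1123 subdomain (lowercase alphanumerics, '-' and '.').
DNS1123_SUBDOMAIN_RE = re.compile(
    r"[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*")

def is_valid_label_key(key):
    """Check a label key of the form [PREFIX/]NAME against the rules above."""
    prefix, sep, name = key.rpartition("/")
    if sep and (len(prefix) > 253 or not DNS1123_SUBDOMAIN_RE.fullmatch(prefix)):
        return False
    return len(name) <= 63 and NAME_RE.fullmatch(name) is not None

for key in ["app", "kubernetes.io/hostname", "-app", "Example.com/app"]:
    print(key, is_valid_label_key(key))
```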

A label may also consist of just a key with an empty value. Such a label is typically used only to test whether the key exists.

e.g.:

View a resource's labels:

[root@master01 ~]# kubectl get no/master01 --show-labels
NAME STATUS ROLES AGE VERSION LABELS
master01 Ready master 10d v1.19.4 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master01,kubernetes.io/os=linux,node-role.kubernetes.io/master=

beta.kubernetes.io/arch=amd64
beta.kubernetes.io/os=linux
kubernetes.io/arch=amd64
kubernetes.io/hostname=master01
kubernetes.io/os=linux
node-role.kubernetes.io/master=

The labels above break down as follows:

- Key prefixes: beta.kubernetes.io, kubernetes.io and node-role.kubernetes.io.
- Key names: arch, os, hostname and master.
- Values: amd64, linux and master01. node-role.kubernetes.io/master has an empty value.

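Label rules

A label value must be no more than 63 characters. It is either empty, or it begins and ends with a letter or digit and contains only letters, digits, hyphens (-), underscores (_) and dots (.) in between.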
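Values follow the same character rules as key names, but they may also be empty, like `node-role.kubernetes.io/master=` above. Here is a matching hypothetical helper:

```python
import re

VALUE_RE = re.compile(r"([A-Za-z0-9][-A-Za-z0-9_.]*)?[A-Za-z0-9]")

def is_valid_label_value(value):
    """Empty, or at most 63 chars that begin and end with a letter or digit."""
    return value == "" or (len(value) <= 63 and VALUE_RE.fullmatch(value) is not None)

for v in ["", "canary", "5f7d8f9847", "-beta"]:
    print(repr(v), is_valid_label_value(v))
```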

Common example labels:

Release labels: "release" : "stable", "release" : "canary", "release" : "beta".

Environment labels: "environment" : "dev", "environment" : "qa", "environment" : "prod".

Application labels: "app" : "ui", "app" : "as", "app" : "pc", "app" : "sc".

Tier labels: "tier" : "frontend", "tier" : "backend", "tier" : "cache".

Partition labels: "partition" : "customerA", "partition" : "customerB".

Quality-control track labels: "track" : "daily", "track" : "weekly".

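Label selectors

When creating a label, as in release=alpha, the = means assignment.

In a label selector, as in release=alpha, the = means comparison and is implicitly ==.

kubectl takes a label selector through the -l option.

A label selector expresses a query condition or selection criterion over labels. The Kubernetes API currently supports two kinds of selectors:

1. Equality-based label selectors:

Separate multiple selectors with commas. They are combined with logical AND, so all conditions must be met. What an empty selector means depends on the context: in the API's LabelSelector type, an empty selector matches all objects while a null one matches none. Don't rely on either behaviour.

Equality-based selectors support three operators: =, == and !=. The first two are equivalent and mean "equals"; the last means "not equal". For example, env=dev and env!=prod are equality-based selectors, whereas tier in (frontend,backend) is a set-based selector.

2. Set-based label selectors, in four forms:

- KEY in (VALUE1,VALUE2,…): matches if the key's value is in the given list;

- KEY notin (VALUE1,VALUE2,…): matches if the key's value is not in the given list, including resources that lack the key;

- KEY: matches all resources that have a label with this key;

- !KEY: matches all resources that have no label with this key.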
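A small evaluator for kubectl-style `-l` strings captures the selector semantics above. That includes the fact that `!=` and `notin` also match resources that lack the key. This Python sketch handles only the forms listed above and does not validate keys or values. Its function names are hypothetical, and the sample Pods and labels come from the examples that follow.

```python
import re

SET_RE = re.compile(r"(\S+)\s+(in|notin)\s*\((.*)\)")

def split_requirements(selector):
    """Split a selector string on commas that are not inside parentheses."""
    parts, depth, buf = [], 0, ""
    for ch in selector:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
        if ch == "," and depth == 0:
            parts.append(buf.strip())
            buf = ""
        else:
            buf += ch
    parts.append(buf.strip())
    return [p for p in parts if p]

def make_requirement(expr):
    """Turn one requirement into a predicate over a label dict."""
    m = SET_RE.fullmatch(expr)
    if m:
        key, op = m.group(1), m.group(2)
        values = {v.strip() for v in m.group(3).split(",")}
        if op == "in":
            return lambda labels: labels.get(key) in values
        return lambda labels: labels.get(key) not in values  # true if key absent
    for op in ("!=", "==", "="):
        if op in expr:
            key, value = (s.strip() for s in expr.split(op, 1))
            if op == "!=":
                return lambda labels: labels.get(key) != value  # true if key absent
            return lambda labels: labels.get(key) == value
    if expr.startswith("!"):
        key = expr[1:].strip()
        return lambda labels: key not in labels
    return lambda labels: expr in labels

def parse_selector(selector):
    """Every requirement must hold: a comma means logical AND."""
    requirements = [make_requirement(e) for e in split_requirements(selector)]
    return lambda labels: all(req(labels) for req in requirements)

pods = {
    "demoapp-5f7d8f9847-4t7sk": {"app": "demoapp", "pod-template-hash": "5f7d8f9847"},
    "mypod": {"app": "mypod", "environment": "prod", "release": "canary"},
    "lifecycle-demo": {"release": "alpha"},
    "sidecar-container-demo": {"app": "demoapp2"},
    "liveness-exec-demo": {},
}

def select(selector):
    match = parse_selector(selector)
    return sorted(name for name, labels in pods.items() if match(labels))

print(select("app!=demoapp"))
print(select("app notin (mypod,demoapp)"))
print(select("app=mypod ,environment=prod"))  # ['mypod']
```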

eg1: equality-based selector

[root@node01 ~]# kubectl get po -l app=demoapp
NAME READY STATUS RESTARTS AGE
demoapp-5f7d8f9847-4t7sk 1/1 Running 0 3d17h
demoapp-5f7d8f9847-lkwxw 1/1 Running 4 3d17h
demoapp-5f7d8f9847-rt7gb 1/1 Running 0 3d17h

You can still show the labels when using a selector:

[root@node01 ~]# kubectl get po -l app=demoapp --show-labels
NAME READY STATUS RESTARTS AGE LABELS
demoapp-5f7d8f9847-4t7sk 1/1 Running 0 3d17h app=demoapp,pod-template-hash=5f7d8f9847
demoapp-5f7d8f9847-lkwxw 1/1 Running 4 3d17h app=demoapp,pod-template-hash=5f7d8f9847
demoapp-5f7d8f9847-rt7gb 1/1 Running 0 3d17h app=demoapp,pod-template-hash=5f7d8f9847

eg2: inequality selector

Note that Pods that don't have the key at all (LABELS <none>) also match:

NAME READY STATUS RESTARTS AGE LABELS
init-container-demo 1/1 Running 0 24h <none>
lifecycle-demo 1/1 Running 0 25h <none>
liveness-exec-demo 1/1 Running 4 40h <none>
liveness-httpget-demo 1/1 Running 3 30h <none>
liveness-tcpsocket-demo 0/1 CrashLoopBackOff 336 31h <none>
memleak-pod 0/1 CrashLoopBackOff 32 14h <none>
mypod 1/1 Running 4 3d17h app=mypod,release=canary
mypod-with-hostnetwork 1/1 Running 4 2d21h app=mypod,release=canary
mypod-with-ports 1/1 Running 0 2d22h app=mypod,release=canary

eg3: set-based existence check. Any Pod with an app key matches, regardless of its value.

[root@node01 ~]# kubectl get po -l app --show-labels
NAME READY STATUS RESTARTS AGE LABELS
demoapp-5f7d8f9847-4t7sk 1/1 Running 0 3d18h app=demoapp,pod-template-hash=5f7d8f9847
demoapp-5f7d8f9847-lkwxw 1/1 Running 4 3d18h app=demoapp,pod-template-hash=5f7d8f9847
demoapp-5f7d8f9847-rt7gb 1/1 Running 0 3d18h app=demoapp,pod-template-hash=5f7d8f9847
mypod 1/1 Running 4 3d18h app=mypod,release=canary
mypod-with-hostnetwork 1/1 Running 4 2d22h app=mypod,release=canary
mypod-with-ports 1/1 Running 0 2d22h app=mypod,release=canary
[root@node01 ~]#

eg4: set-based non-existence check. Any Pod without an app key matches. Quote the expression so the shell does not interpret the !.

[root@node01 ~]# kubectl get po -l '!app' --show-labels
NAME READY STATUS RESTARTS AGE LABELS
init-container-demo 1/1 Running 0 24h environment=dev
lifecycle-demo 1/1 Running 0 26h release=alpha
liveness-exec-demo 1/1 Running 4 40h <none>
liveness-httpget-demo 1/1 Running 3 30h <none>
liveness-tcpsocket-demo 0/1 CrashLoopBackOff 342 31h <none>
memleak-pod 0/1 CrashLoopBackOff 35 15h <none>
readiness-httpget-demo 1/1 Running 2 30h <none>
securitycontext-capabilities-demo 1/1 Running 4 2d3h <none>
securitycontext-runasuser-demo 1/1 Running 0 2d4h <none>
securitycontext-sysctls-demo 0/1 SysctlForbidden 0 2d <none>
sidecar-container-demo 2/2 Running 0 13h <none>

eg5: set-based in. Matching any one of the listed values is enough.

[root@node01 ~]# kubectl get po -l 'app in (mypod,demoapp)' --show-labels
NAME READY STATUS RESTARTS AGE LABELS
demoapp-5f7d8f9847-4t7sk 1/1 Running 0 3d18h app=demoapp,pod-template-hash=5f7d8f9847
demoapp-5f7d8f9847-lkwxw 1/1 Running 4 3d18h app=demoapp,pod-template-hash=5f7d8f9847
demoapp-5f7d8f9847-rt7gb 1/1 Running 0 3d18h app=demoapp,pod-template-hash=5f7d8f9847
mypod 1/1 Running 4 3d18h app=mypod,release=canary
mypod-with-hostnetwork 1/1 Running 4 2d22h app=mypod,release=canary
mypod-with-ports 1/1 Running 0 2d22h app=mypod,release=canary

eg6: set-based notin. A Pod matches if its value is none of the listed values, or if it lacks the key altogether.

NAME READY STATUS RESTARTS AGE LABELS
init-container-demo 1/1 Running 0 24h environment=dev
lifecycle-demo 1/1 Running 0 26h release=alpha
liveness-exec-demo 1/1 Running 4 40h <none>
liveness-httpget-demo 1/1 Running 3 30h <none>
liveness-tcpsocket-demo 0/1 CrashLoopBackOff 344 31h <none>
memleak-pod 0/1 CrashLoopBackOff 37 15h <none>
readiness-httpget-demo 1/1 Running 2 30h <none>
securitycontext-capabilities-demo 1/1 Running 4 2d3h <none>
securitycontext-runasuser-demo 1/1 Running 0 2d4h <none>
securitycontext-sysctls-demo 0/1 SysctlForbidden 0 2d <none>
sidecar-container-demo 2/2 Running 0 13h app=demoapp2

eg7: multiple conditions. Wrap the expression in quotes and separate the conditions with commas.

[root@node01 ~]# kubectl get po -l 'app=mypod ,environment=prod' --show-labels
NAME READY STATUS RESTARTS AGE LABELS
mypod 1/1 Running 4 3d18h app=mypod,environment=prod,release=canary

Label management

kubectl label

Add a label:

[root@node01 ~]# kubectl label po/lifecycle-demo -n default release=alpha
pod/lifecycle-demo labeled

View the labels:

[root@node01 ~]# kubectl get po/lifecycle-demo --show-labels
NAME READY STATUS RESTARTS AGE LABELS
lifecycle-demo 1/1 Running 0 25h release=alpha

Remove a label:

Just append a minus sign to the key:

[root@node01 ~]# kubectl label po/lifecycle-demo -n default release-
pod/lifecycle-demo labeled
[root@node01 ~]# kubectl get po/lifecycle-demo --show-labels
NAME READY STATUS RESTARTS AGE LABELS
lifecycle-demo 1/1 Running 0 25h <none>
[root@node01 ~]# kubectl label po/lifecycle-demo -n default release-
label "release" not found.
pod/lifecycle-demo labeled

If you remove a label the resource doesn't have, kubectl prints a "not found" message, as shown above, and still reports the Pod as labeled.
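kubectl's `KEY=VALUE` and `KEY-` arguments behave like edits to the resource's label map. Below is a hypothetical Python model of that behaviour. It removes a key on `KEY-` and reports a missing key the way the output above does. Like kubectl, it refuses to change an existing label's value unless you request overwrite (`--overwrite`). `apply_label_args` is an illustrative name, not a kubectl API.

```python
def apply_label_args(labels, args, overwrite=False):
    """Apply kubectl-label-style arguments to a copy of a label dict.

    KEY=VALUE sets a label, KEY- removes it. Returns (new_labels, messages).
    """
    labels = dict(labels)
    messages = []
    for arg in args:
        if "=" not in arg and arg.endswith("-"):
            key = arg[:-1]
            if key in labels:
                del labels[key]
            else:
                messages.append(f'label "{key}" not found.')
            continue
        key, _, value = arg.partition("=")
        if key in labels and labels[key] != value and not overwrite:
            # Changing an existing value requires --overwrite in kubectl.
            raise ValueError(f"'{key}' already has a value ({labels[key]}), and --overwrite is false")
        labels[key] = value
    return labels, messages

labels, _ = apply_label_args({}, ["release=alpha"])
print(labels)  # {'release': 'alpha'}
labels, msgs = apply_label_args(labels, ["release-"])
print(labels)  # {}
labels, msgs = apply_label_args(labels, ["release-"])
print(msgs)    # ['label "release" not found.']
```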

externalTrafficPolicy

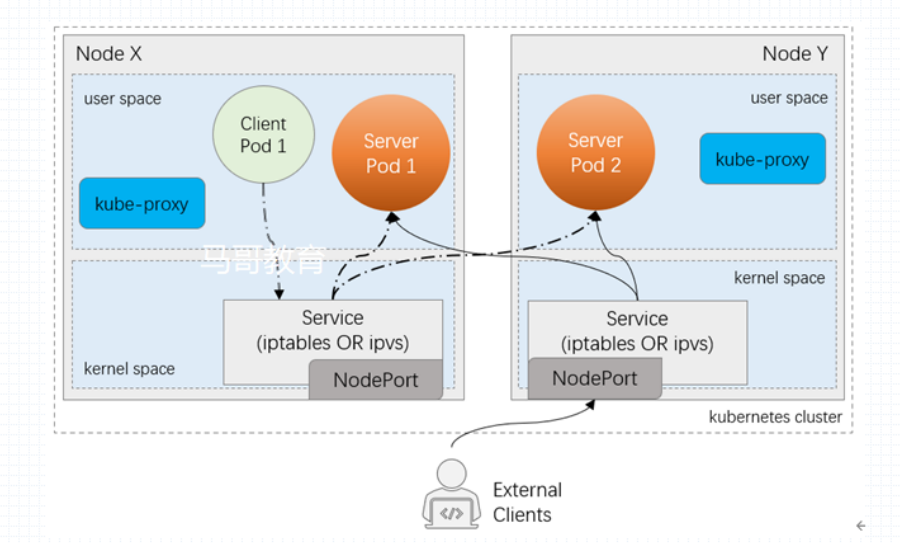

如图: 有2个pod1、pod2 分别位于NodeX 、NodeY. 如果外部流量访问NodeX_ip:nodeport .请求会一直是被调度度到Pod1 吗? 不是的 ,取决于externalTrafficPolicy

2种:

外部流量策略处理方式,Local表示由当前节点处理,Cluster表示向集群范围调度(默认值)

local 当前处理的话,如果恰巧访问的节点上没有该pod 就GG

Examples:

With the Cluster policy and multiple Pods: although we access the local node, some requests are still dispatched to the Pod on the other node.

[root@node01 chapter7]# kubectl get po -o wide | grep ^d
demoapp-5f7d8f9847-4wz6s 1/1 Running 0 4m20s 10.244.4.20 node02 <none> <none>
demoapp-5f7d8f9847-hwxrb 1/1 Running 0 118m 10.244.5.16 node01 <none> <none>
[root@node01 chapter7]# while true ; do curl 192.168.2.2:31398 ; done
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.1, ServerName: demoapp-5f7d8f9847-hwxrb, ServerIP: 10.244.5.16!
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.1, ServerName: demoapp-5f7d8f9847-hwxrb, ServerIP: 10.244.5.16!
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.1, ServerName: demoapp-5f7d8f9847-hwxrb, ServerIP: 10.244.5.16!
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.0, ServerName: demoapp-5f7d8f9847-4wz6s, ServerIP: 10.244.4.20!
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.0, ServerName: demoapp-5f7d8f9847-4wz6s, ServerIP: 10.244.4.20!
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.1, ServerName: demoapp-5f7d8f9847-hwxrb, ServerIP: 10.244.5.16!
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.1, ServerName: demoapp-5f7d8f9847-hwxrb, ServerIP: 10.244.5.16!
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.1, ServerName: demoapp-5f7d8f9847-hwxrb, ServerIP: 10.244.5.16!
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.1, ServerName: demoapp-5f7d8f9847-hwxrb, ServerIP: 10.244.5.16!

With the Cluster policy and a single Pod: you can reach the Pod through any node, even one that does not run it.

[root@master01 ~]# kubectl scale deployment demoapp --replicas=1
deployment.apps/demoapp scaled
[root@master01 ~]# kubectl get po/demoapp-5f7d8f9847-hwxrb -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
demoapp-5f7d8f9847-hwxrb 1/1 Running 0 148m 10.244.5.16 node01 <none> <none>

Verify:

[root@master01 ~]# curl node01:31398
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.1, ServerName: demoapp-5f7d8f9847-hwxrb, ServerIP: 10.244.5.16!
[root@master01 ~]# curl node02:31398
iKubernetes demoapp v1.0 !! ClientIP: 10.244.4.0, ServerName: demoapp-5f7d8f9847-hwxrb, ServerIP: 10.244.5.16!
[root@master01 ~]#

With Local, traffic is never forwarded to other nodes. If the receiving node runs several Pods of the Service, only the Pods on that node share the load.
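The two policies differ in which endpoints are eligible for traffic arriving at a node's NodePort. This hypothetical Python sketch uses the Pod placement from the examples above: demoapp-5f7d8f9847-4wz6s on node02 and demoapp-5f7d8f9847-hwxrb on node01. `candidate_endpoints` is an illustrative name.

```python
def candidate_endpoints(endpoints, receiving_node, policy="Cluster"):
    """Pod IPs that traffic arriving at receiving_node's NodePort may be sent to.

    endpoints: list of (pod_ip, node_name) tuples for ready endpoints.
    """
    if policy == "Cluster":
        return [ip for ip, _ in endpoints]  # Pods on any node
    if policy == "Local":
        # Only Pods on the receiving node; an empty result means the traffic is dropped.
        return [ip for ip, node in endpoints if node == receiving_node]
    raise ValueError(f"unknown externalTrafficPolicy {policy!r}")

endpoints = [("10.244.4.20", "node02"), ("10.244.5.16", "node01")]
print(candidate_endpoints(endpoints, "node01", "Cluster"))  # ['10.244.4.20', '10.244.5.16']
print(candidate_endpoints(endpoints, "node01", "Local"))    # ['10.244.5.16']
print(candidate_endpoints([("10.244.5.16", "node01")], "node02", "Local"))  # []
```

An empty list under Local is the "no local Pod" case. The node has nowhere to send the traffic, so the request fails.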

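externalIPs

Suppose there is no IaaS public cloud to provide a LoadBalancer, and we don't want to use NodePort either. Or rather, we don't want to put yet another load balancer in front of the NodePorts. Such a load balancer may require manual edits to its configuration whenever node IPs are added or removed, or custom development against an API.

Even so, we want to reach in-cluster services through a node IP and a well-known port, without sharing the host's network namespace. That is also possible:

Add external IPs to the Service. This brings external traffic in when only one node, or a limited number of nodes, in the cluster has an external IP address. It also works when there is no external IP at all and we simply use a node's own IP. If a well-known port on the node is not already in use, we can even use it directly.

A ClusterIP Service can bring in external traffic either through a NodePort or through externalIPs.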
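Traffic for an external IP is matched on the external IP plus the Service port, not on a nodePort. Below is a hypothetical Python model of that lookup. It uses the Service from the externalIPs demo in these notes: cluster IP 10.106.240.186, external IP 192.168.2.6, port 80. `match_service` is an illustrative name; the real matching happens in kube-proxy's rules.

```python
# Hypothetical in-memory view of the Service from the externalIPs demo.
services = {
    "demoapp-externalip-svc": {
        "clusterIP": "10.106.240.186",
        "externalIPs": ["192.168.2.6"],
        "port": 80,
    },
}

def match_service(dst_ip, dst_port):
    """Return the Service a packet to dst_ip:dst_port would be handed to, if any."""
    for name, svc in services.items():
        if dst_port != svc["port"]:
            continue  # externalIPs reuse the Service port, not a nodePort
        if dst_ip == svc["clusterIP"] or dst_ip in svc["externalIPs"]:
            return name
    return None

print(match_service("192.168.2.6", 80))    # demoapp-externalip-svc
print(match_service("192.168.2.6", 8080))  # None
```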

Add an extra IP on a node:

[root@node02 ~]# ip -4 a s eth0
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    inet 192.168.2.3/21 brd 192.168.7.255 scope global noprefixroute eth0
       valid_lft forever preferred_lft forever
    inet 192.168.2.6/21 scope global secondary eth0
       valid_lft forever preferred_lft forever
[root@node02 ~]#

[root@node01 chapter7]# cat services-externalip-demo.yaml
# Maintainer: MageEdu <mage@magedu.com>
# URL: http://www.magedu.com
---
kind: Service
apiVersion: v1
metadata:
  name: demoapp-externalip-svc
  namespace: default
spec:
  type: ClusterIP
  selector:
    app: demoapp
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
  externalIPs:
  - 192.168.2.6

Verify. The address to access is external_ip:service_port:

[root@node01 chapter7]# curl 192.168.2.6
iKubernetes demoapp v1.0 !! ClientIP: 10.244.4.0, ServerName: demoapp-5f7d8f9847-hwxrb, ServerIP: 10.244.5.16!
[root@node01 chapter7]# kubectl get svc/demoapp-externalip-svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
demoapp-externalip-svc ClusterIP 10.106.240.186 192.168.2.6 80/TCP 7m50s
[root@node01 chapter7]#

To avoid a single point of failure, you can use keepalived to float the external IP between nodes.

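Endpoint resources

Traditionally, an endpoint is a device that communicates over a LAN or WAN. In Kubernetes, an endpoint is a socket on a Pod or node that you can connect to, expressed as an IP plus a port.

Endpoints and readiness probes:

Creating a Service that has a selector also creates an Endpoints object with the same name. The Service does not match the labels of backend Pods directly. A selected Pod is added to the Endpoints as an available backend only after its readiness probe succeeds. Addresses listed under NotReadyAddresses receive no traffic from the Service.

[root@node01 chapter7]# kubectl describe ep/demoapp
Name:         demoapp
Namespace:    default
Labels:       app=demoapp
Annotations:  endpoints.kubernetes.io/last-change-trigger-time: 2021-12-05T17:05:13Z
Subsets:
  Addresses:          10.244.4.21,10.244.4.22,10.244.5.16
  NotReadyAddresses:  <none>
  Ports:
    Name   Port  Protocol
    ----   ----  --------
    80-80  80    TCP

Events:  <none>
[root@node01 chapter7]#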
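The Endpoints object is derived from two inputs: which Pods match the selector, and whether each matching Pod is Ready. The Python sketch below shows that derivation in simplified form. It is not the endpoints controller's code; `build_endpoints` and the flattened Pod dicts are hypothetical. It uses the Pods from the readiness demo in these notes.

```python
def build_endpoints(selector, pods, port, port_name="http"):
    """Sketch of an Endpoints subset for a Service: ready vs. not-ready Pods."""
    if not selector:
        return None  # selector-less Service: Endpoints are maintained by hand
    subset = {"addresses": [], "notReadyAddresses": [],
              "ports": [{"name": port_name, "port": port, "protocol": "TCP"}]}
    for pod in pods:
        if all(pod["labels"].get(k) == v for k, v in selector.items()):
            bucket = "addresses" if pod["ready"] else "notReadyAddresses"
            subset[bucket].append({"ip": pod["ip"],
                                   "targetRef": {"kind": "Pod", "name": pod["name"]}})
    return subset

pods = [
    {"name": "demoapp2-677db795b4-65h2f", "ip": "10.244.4.26", "ready": False,
     "labels": {"app": "demoapp-with-readiness"}},
    {"name": "demoapp2-677db795b4-zh5fz", "ip": "10.244.5.21", "ready": True,
     "labels": {"app": "demoapp-with-readiness"}},
    {"name": "demoapp-5f7d8f9847-hwxrb", "ip": "10.244.5.16", "ready": True,
     "labels": {"app": "demoapp"}},  # does not match the selector
]
ep = build_endpoints({"app": "demoapp-with-readiness"}, pods, 80)
print([a["ip"] for a in ep["addresses"]])          # ['10.244.5.21']
print([a["ip"] for a in ep["notReadyAddresses"]])  # ['10.244.4.26']
```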

e.g.:

Verify that a Pod's IP is added to the Endpoints only after the Pod's readiness probe passes:

[root@node01 chapter7]# cat services-readiness-demo.yaml
# Author: MageEdu <mage@magedu.com>
---
kind: Service
apiVersion: v1
metadata:
  name: services-readiness-demo
  namespace: default
spec:
  selector:
    app: demoapp-with-readiness
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demoapp2
spec:
  replicas: 2
  selector:
    matchLabels:
      app: demoapp-with-readiness
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: demoapp-with-readiness
    spec:
      containers:
      - image: ikubernetes/demoapp:v1.0
        name: demoapp
        imagePullPolicy: IfNotPresent
        readinessProbe:
          httpGet:
            path: '/readyz'
            port: 80
          initialDelaySeconds: 15
          periodSeconds: 10
---
[root@node01 chapter7]#

After creation, once the matching demoapp2 Pods are Ready, the Endpoints holds 2 addresses:

[root@node01 chapter7]# kubectl get po -l app=demoapp-with-readiness
NAME READY STATUS RESTARTS AGE
demoapp2-677db795b4-65h2f 1/1 Running 0 6m8s
demoapp2-677db795b4-zh5fz 1/1 Running 0 6m8s
[root@node01 chapter7]# kubectl get svc/services-readiness-demo -o wide
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
services-readiness-demo ClusterIP 10.98.48.27 <none> 80/TCP 6m46s app=demoapp-with-readiness
[root@node01 chapter7]#
[root@node01 chapter7]# kubectl describe svc/services-readiness-demo
Name:              services-readiness-demo
Namespace:         default
Labels:            <none>
Annotations:       <none>
Selector:          app=demoapp-with-readiness
Type:              ClusterIP
IP Families:       <none>
IP:                10.98.48.27
IPs:               <none>
Port:              http  80/TCP
TargetPort:        80/TCP
Endpoints:         10.244.4.26:80,10.244.5.21:80
Session Affinity:  None
Events:            <none>
[root@node01 chapter7]#

Now deliberately make one Pod's readiness probe fail, then wait about 30 seconds. That is 3 probe periods, because failureThreshold defaults to 3:

[root@node01 chapter7]# curl -XPOST -d 'readyz=FAIL' 10.244.4.26/readyz
[root@node01 chapter7]# kubectl get po -l app=demoapp-with-readiness
NAME READY STATUS RESTARTS AGE
demoapp2-677db795b4-65h2f 0/1 Running 0 13m
demoapp2-677db795b4-zh5fz 1/1 Running 0 13m

The Endpoints now lists 10.244.4.26 under NotReadyAddresses:

Name:         services-readiness-demo
Namespace:    default
Labels:       <none>
Annotations:  endpoints.kubernetes.io/last-change-trigger-time: 2021-12-06T02:38:40Z
Subsets:
  Addresses:          10.244.5.21
  NotReadyAddresses:  10.244.4.26
  Ports:
    Name  Port  Protocol
    ----  ----  --------
    http  80    TCP

Events:  <none>
[root@node01 chapter7]#

If you now keep accessing svc/services-readiness-demo, every request goes to 10.244.5.21.

After you switch the readiness endpoint back to OK, the probe passes within one period (10 seconds here, since successThreshold defaults to 1). The Endpoints then adds 10.244.4.26 back:

[root@node02 ~]# curl -XPOST -d 'readyz=OK' 10.244.4.26/readyz

Requests to the Service are now spread across both Pods again:

[root@node01 chapter7]# while true ;do curl 10.98.48.27; done
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.0, ServerName: demoapp2-677db795b4-65h2f, ServerIP: 10.244.4.26!
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.1, ServerName: demoapp2-677db795b4-zh5fz, ServerIP: 10.244.5.21!
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.0, ServerName: demoapp2-677db795b4-65h2f, ServerIP: 10.244.4.26!
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.1, ServerName: demoapp2-677db795b4-zh5fz, ServerIP: 10.244.5.21!
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.1, ServerName: demoapp2-677db795b4-zh5fz, ServerIP: 10.244.5.21!
iKubernetes demoapp v1.0 !! ClientIP: 10.244.5.0, ServerName: demoapp2-677db795b4-65h2f, ServerIP: 10.244.4.26!
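The timings in this demo follow from the probe settings. The manifest sets periodSeconds: 10, and failureThreshold and successThreshold keep their defaults of 3 and 1. So it takes three consecutive failures (about 30 s) to mark the Pod NotReady, and a single success (about 10 s) to mark it Ready again. The hypothetical Python replay below feeds in probe results and reports when readiness flips. It counts the i-th probe as happening at i * periodSeconds.

```python
def simulate_readiness(results, period_seconds=10, failure_threshold=3,
                       success_threshold=1, ready=True):
    """Replay probe results (True = pass); return [(time, ready)] at each flip."""
    fails = succ = 0
    changes = []
    for i, ok in enumerate(results, start=1):
        t = i * period_seconds
        if ok:
            succ, fails = succ + 1, 0
            if not ready and succ >= success_threshold:
                ready = True
                changes.append((t, ready))
        else:
            fails, succ = fails + 1, 0
            if ready and fails >= failure_threshold:
                ready = False
                changes.append((t, ready))
    return changes

# Probes fail four times after readyz=FAIL, then pass once readyz=OK is posted.
print(simulate_readiness([False, False, False, False, True]))
# [(30, False), (50, True)]
```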

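Manually creating Endpoints

Use case: bringing a service that runs outside the cluster into Kubernetes. A plain Service with a selector won't work here, because a label selector cannot select machines outside the cluster. Instead, create an Endpoints object by hand that lists the fixed external endpoints, then create a matching Service.

The drawback is that readiness probes cannot manage these endpoints. You must keep the mapping correct by hand.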

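Resource manifest format

apiVersion: v1
kind: Endpoints
metadata:                    # object metadata
  name:
  namespace:
subsets:                     # list of endpoint subsets
- addresses:                 # list of endpoint addresses in the "ready" state
  - hostname <string>        # endpoint hostname
    ip <string>              # endpoint IP address; required
    nodeName <string>        # hostname of the node
    targetRef:               # reference to the object that provides this endpoint
      apiVersion <string>    # API group and version of the referenced object
      kind <string>          # resource type of the referenced object, usually Pod
      name <string>          # object name
      namespace <string>     # namespace of the object
      fieldPath <string>     # field of the referenced object, used when not referencing the whole object,
                             # commonly to reference a single container in a Pod, e.g. spec.containers[1]
      uid <string>           # object identifier
  notReadyAddresses:         # list of endpoint addresses in the "not ready" state; same format as addresses
  ports:                     # list of port objects
  - name <string>            # port name
    port <integer>           # port number; required
    protocol <string>        # protocol; only UDP, TCP and SCTP are supported; defaults to TCP
    appProtocol <string>     # application-layer protocol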

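A custom Endpoints object cannot gain endpoints through labels, so it needs no labels. It is associated with a Service only by having the same name in the same namespace. The Service must not define a selector; otherwise the endpoints controller manages the Endpoints object itself.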
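The pairing is purely by namespace and name, so finding the Endpoints for a selector-less Service is a simple keyed match. This hypothetical Python sketch uses the objects from the MySQL example that follows. `endpoints_for_service` and `endpoint_addresses` are illustrative names.

```python
def endpoints_for_service(service, endpoints_objects):
    """Find the Endpoints backing a selector-less Service: same namespace and name."""
    if service["spec"].get("selector"):
        raise ValueError("Service has a selector; its Endpoints are controller-managed")
    key = (service["metadata"]["namespace"], service["metadata"]["name"])
    for ep in endpoints_objects:
        if (ep["metadata"]["namespace"], ep["metadata"]["name"]) == key:
            return ep
    return None

def endpoint_addresses(ep):
    """Flatten subsets into ip:port strings, like the ENDPOINTS column of kubectl get ep."""
    return [f"{a['ip']}:{p['port']}"
            for s in ep["subsets"] for a in s.get("addresses", []) for p in s["ports"]]

mysql_ep = {"metadata": {"namespace": "default", "name": "mysql-external"},
            "subsets": [{"addresses": [{"ip": "192.168.2.8"}, {"ip": "192.168.2.9"}],
                         "ports": [{"name": "mysql", "port": 3306, "protocol": "TCP"}]}]}
mysql_svc = {"metadata": {"namespace": "default", "name": "mysql-external"},
             "spec": {"type": "ClusterIP", "ports": [{"name": "mysql", "port": 3306}]}}

print(endpoint_addresses(endpoints_for_service(mysql_svc, [mysql_ep])))
# ['192.168.2.8:3306', '192.168.2.9:3306']
```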

e.g., bring in an external MySQL:

[root@node01 chapter7]# cat mysql-endpoints-demo.yaml
apiVersion: v1
kind: Endpoints
metadata:
  name: mysql-external
  namespace: default
subsets:
- addresses:
  - ip: 192.168.2.8
  - ip: 192.168.2.9
  ports:
  - name: mysql
    port: 3306
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-external
  namespace: default
spec:
  type: ClusterIP
  ports:
  - name: mysql
    port: 3306
    targetPort: 3306
    protocol: TCP

You don't need to list unavailable addresses (notReadyAddresses); doing so is just extra work. Listing only the available ones is enough.

[root@node01 chapter7]# kubectl get svc/mysql-external
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
mysql-external ClusterIP 10.111.180.31 <none> 3306/TCP 5m
[root@node01 chapter7]# kubectl get ep/mysql-external
NAME ENDPOINTS AGE
mysql-external 192.168.2.8:3306,192.168.2.9:3306 5m23s

Access MySQL:

[root@node01 chapter7]# mysql -h10.111.180.31 -p123 -e "status" | grep user
Current user: root@192.168.2.2

If an external endpoint address such as 192.168.2.8 or 192.168.2.9 later becomes unavailable, you must move it to notReadyAddresses or delete it from addresses by hand.
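A small periodic job could do this instead: probe each external address and regenerate the subset, then apply the result with kubectl (not shown). Below is a hypothetical Python sketch. The function names and the plain TCP connect check are assumptions, and a real MySQL health check would likely go further than a TCP handshake. The example run stubs out the check so it works without the real hosts.

```python
import socket

def tcp_alive(ip, port, timeout=1.0):
    """True if a TCP connection to ip:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((ip, port), timeout=timeout):
            return True
    except OSError:
        return False

def refresh_subset(ips, port, port_name="mysql", check=tcp_alive):
    """Rebuild an Endpoints subset, moving IPs that fail the check to notReadyAddresses."""
    ready = [ip for ip in ips if check(ip, port)]
    not_ready = [ip for ip in ips if ip not in ready]
    subset = {"addresses": [{"ip": ip} for ip in ready],
              "ports": [{"name": port_name, "port": port, "protocol": "TCP"}]}
    if not_ready:
        subset["notReadyAddresses"] = [{"ip": ip} for ip in not_ready]
    return subset

# Stubbed check: pretend only 192.168.2.9 is reachable.
reachable = {"192.168.2.9"}
subset = refresh_subset(["192.168.2.8", "192.168.2.9"], 3306,
                        check=lambda ip, port: ip in reachable)
print(subset["addresses"])          # [{'ip': '192.168.2.9'}]
print(subset["notReadyAddresses"])  # [{'ip': '192.168.2.8'}]
```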