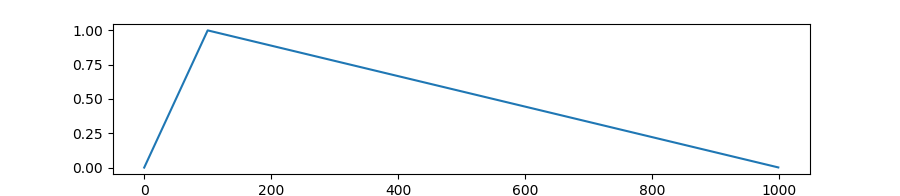

Learning Rate Warmup

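- During warmup, the learning rate increases linearly from 0 to the initial lr set in the optimizer.
- After the warmup phase, the schedule decreases the learning rate linearly from that initial lr to 0.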
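The two phases above can be sketched as a single multiplier applied to the optimizer's initial lr. This is a minimal illustration, not the library's source: the function name `linear_warmup_decay_factor` is hypothetical, and the `max(1, ...)` guards against division by zero are an assumption about how edge cases are handled.

```python
def linear_warmup_decay_factor(current_step: int,
                               num_warmup_steps: int,
                               num_training_steps: int) -> float:
    """Return the factor that the initial lr is multiplied by at a given step.

    Hypothetical helper for illustration only.
    """
    if current_step < num_warmup_steps:
        # Warmup phase: the factor rises linearly from 0 to 1.
        return current_step / max(1, num_warmup_steps)
    # Decay phase: the factor falls linearly from 1 to 0, clamped at 0.
    remaining = num_training_steps - current_step
    decay_span = max(1, num_training_steps - num_warmup_steps)
    return max(0.0, remaining / decay_span)


# Example: 50 warmup steps out of 1000 total, initial lr of 2e-5 (assumed values).
initial_lr = 2e-5
for step in (0, 25, 50, 525, 1000):
    factor = linear_warmup_decay_factor(step, 50, 1000)
    print(step, initial_lr * factor)
```

The effective learning rate at any step is the initial lr times this factor, so the peak is reached exactly at the end of warmup.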

Parameters

- optimizer (Optimizer) – The optimizer for which to schedule the learning rate.
- num_warmup_steps (int) – The number of steps for the warmup phase.
- num_training_steps (int) – The total number of training steps.
- last_epoch (int, optional, defaults to -1) – The index of the last epoch when resuming training.

Returns

- torch.optim.lr_scheduler.LambdaLR with the appropriate schedule.

    from transformers import get_linear_schedule_with_warmup

    # Total number of training steps: [number of batches] x [number of epochs].
    total_steps = len(train_dataloader) * epochs

    # Create the learning rate scheduler.
    scheduler = get_linear_schedule_with_warmup(optimizer,
                                                num_warmup_steps=50,
                                                num_training_steps=total_steps)
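For the schedule to take effect, the scheduler has to be stepped once per batch inside the training loop. The sketch below shows one way this might look. The toy model, the random data, the AdamW optimizer, the lr of 2e-5, and the `lr_history` list are all assumptions for illustration; none of them appear in the original post.

```python
import torch
from transformers import get_linear_schedule_with_warmup

# Toy stand-ins (assumptions): a tiny model and 100 batches of random data.
model = torch.nn.Linear(4, 1)
optimizer = torch.optim.AdamW(model.parameters(), lr=2e-5)
train_dataloader = [(torch.randn(8, 4), torch.randn(8, 1)) for _ in range(100)]
epochs = 2

# Total number of training steps: [number of batches] x [number of epochs].
total_steps = len(train_dataloader) * epochs
scheduler = get_linear_schedule_with_warmup(
    optimizer, num_warmup_steps=50, num_training_steps=total_steps
)

lr_history = []  # learning rate in effect after each scheduler step
for _ in range(epochs):
    for inputs, targets in train_dataloader:
        optimizer.zero_grad()
        loss = torch.nn.functional.mse_loss(model(inputs), targets)
        loss.backward()
        optimizer.step()
        # Advance the schedule once per batch, after the optimizer update.
        scheduler.step()
        lr_history.append(scheduler.get_last_lr()[0])
```

Because the scheduler is stepped per batch rather than per epoch, `num_training_steps` has to count batches, which is why it is computed as `len(train_dataloader) * epochs`.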

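Copyright notice: This is an original article by Xiao_CangTian, licensed under the CC 4.0 BY-SA agreement. When reposting, please include a link to the original source and this notice.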